PCI Express Power Bottleneck

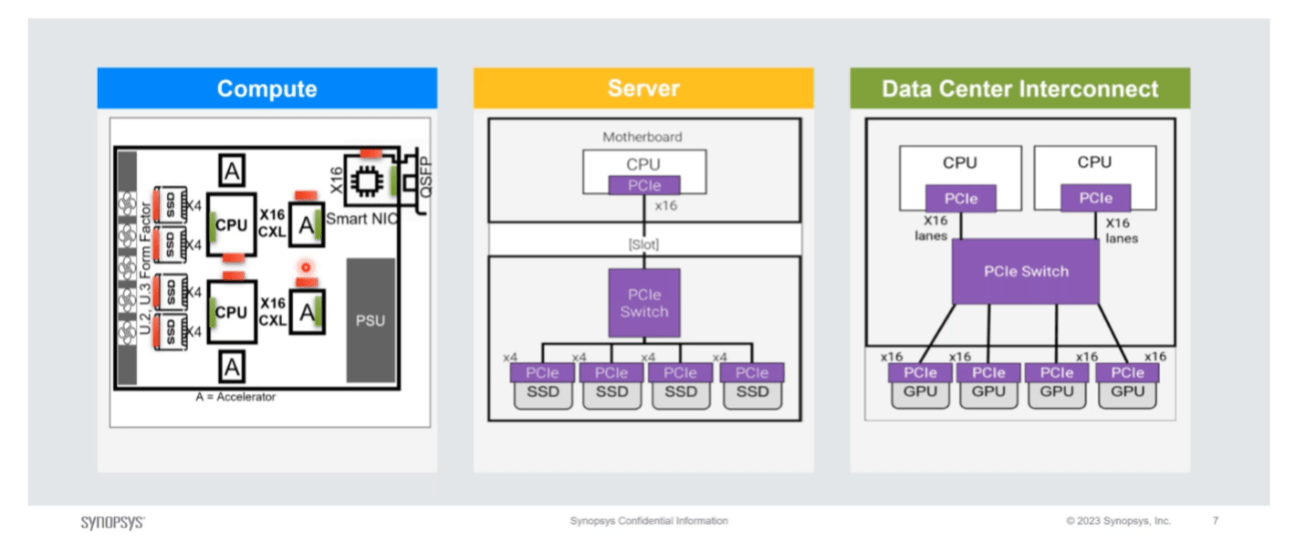

Madhumita Sanyal, Sr. Technical Product Manager, and Gary Ruggles, Sr. Product Manager, discussed the tradeoffs between power and latency in PCIe/CXL data centers during a live SemiWiki webinar on January 26, 2023. The demands on PCIe continue to grow with the integration of multiple components and the challenge of balancing power and latency. Rising lane counts, multicore processors, SSD storage, GPUs, accelerators, and network switches have all contributed to this growth in demand for PCIe in compute, servers, and datacenter interconnects. Gary and Madhumita provided expert insights on PCIe power states and power/latency optimization. I will cherry-pick a few things that interested me.

Watch the full webinar for a more comprehensive understanding of power and latency for PCIe/CXL in datacenters from the Synopsys experts.

Figure 1. Compute, Server, and Data Center Interconnect Devices with Multiple Lanes Hit the Power Ceiling

Reducing Power with L1 & L2 PCIe Power States

In the early days of PCIe, the standard was primarily focused on PCs and servers, with goals such as achieving high throughput. This early standard lacked considerations for what we would now consider green or mobile-friendly design. However, since the introduction of PCIe 3.0, PCI-SIG has placed a strong emphasis on supporting aggressive power savings while continuing to advance performance goals. These power savings are achieved through a set of link states defined by the standard. Link states range from L0 (everything on) to L3 (everything off), with intermediate states contributing various levels of power savings. Possible link states continue to be refined as the standard advances.

Madhumita explained that PCIe PHYs are the big power hogs, accounting for as much as 80% of power consumption in a fully-on (L0) state! The lower-power L1 state now includes various sub-states, enabling the deactivation of transceivers, PLLs, and analog circuitry in the PHY. The L2 power state reflects a power-off state with only auxiliary power to support circuitry such as retention logic. L1 (and its sub-states) and L2 are the workhorses for fine-tuning power savings. PCIe 6.0 introduces the option of L0p, which allows for dynamic power down on a subset of lanes in a link while keeping the remainder fully active. Reducing the number of active lanes lowers the bandwidth and, at the same time, the power consumption.
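To get a feel for the L0p tradeoff, here is a rough back-of-the-envelope sketch. It takes the "as much as 80%" PHY share quoted above and assumes, purely for illustration, that PHY power scales linearly with the number of active lanes while the rest of the link power stays constant. The function name `l0p_estimate` and that linear model are my assumptions, not something presented in the webinar.

```python
def l0p_estimate(total_lanes: int, active_lanes: int, phy_fraction: float = 0.8):
    """Return (relative_bandwidth, relative_power) versus the full-width L0 link.

    Assumptions (illustrative only):
    - bandwidth scales linearly with the number of active lanes
    - only the PHY share of power scales with active lanes
    - the remaining (1 - phy_fraction) of power is unaffected by L0p
    """
    if not 0 < active_lanes <= total_lanes:
        raise ValueError("active_lanes must be between 1 and total_lanes")
    if not 0.0 <= phy_fraction <= 1.0:
        raise ValueError("phy_fraction must be between 0 and 1")

    lane_ratio = active_lanes / total_lanes
    relative_bandwidth = lane_ratio
    relative_power = (1.0 - phy_fraction) + phy_fraction * lane_ratio
    return relative_bandwidth, relative_power


# Example: drop a x16 link to 4 active lanes.
bandwidth, power = l0p_estimate(total_lanes=16, active_lanes=4)
print(f"bandwidth: {bandwidth:.0%}, power: {power:.0%}")
```

Under these assumptions, a x16 link running on 4 lanes keeps about a quarter of its bandwidth for roughly 40% of its full-width power. Real savings depend on the PHY design and on how much circuitry is shared across lanes.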

With PCIe power states defined, the Synopsys experts delved deeper into the process for the host and device to determine the appropriate link state. A link in any form of sleep state will incur a latency penalty upon waking – known as exit latency – such as when transitioning to an L0 state to support communication with an SSD. To reduce the system impact of this penalty, the standard specifies a latency tolerance reporting (LTR) mechanism which informs the host of the latency tolerance of the device towards an interrupt request, ultimately guiding the negotiation process.
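The idea of letting latency tolerance guide the choice of link state can be sketched in a few lines. This is a simplified illustration, not the negotiation defined by the standard: the `LinkState` type, the `deepest_allowed_state` helper, and every exit latency and power figure below are placeholder values I made up to show the selection logic.

```python
from typing import NamedTuple, Sequence


class LinkState(NamedTuple):
    name: str
    exit_latency_us: float  # time to return to L0 (placeholder values)
    relative_power: float   # power relative to L0 (placeholder values)


# Hypothetical numbers for illustration only; real values are design specific.
STATES = [
    LinkState("L0", 0.0, 1.00),
    LinkState("L1", 5.0, 0.30),
    LinkState("L1.2", 50.0, 0.05),
]


def deepest_allowed_state(states: Sequence[LinkState], tolerance_us: float) -> LinkState:
    """Pick the lowest-power state whose exit latency fits the reported tolerance."""
    candidates = [s for s in states if s.exit_latency_us <= tolerance_us]
    if not candidates:
        raise ValueError("no link state satisfies the latency tolerance")
    return min(candidates, key=lambda s: s.relative_power)


# A device that tolerates 10 us of latency can sleep in L1 but not in L1.2.
print(deepest_allowed_state(STATES, tolerance_us=10.0).name)
```

The more latency a device reports it can tolerate, the deeper the sleep state the link can afford to enter.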

Using Clock-Gating to Reduce Activity

The range of power saving options in digital logic is well known. I was particularly interested in the usage of clock gating techniques to optimize energy consumption by eliminating wasted clock toggling on individual flops or banks of flops, even globally for entire blocks. Dynamic voltage and frequency scaling (DVFS) decreases power by reducing operating voltage and clock frequency on functions which can afford to run slower at times. Although DVFS can result in significant power savings, it also adds complexity to the logic. Finally, power gating allows for shutting off both dynamic and leakage power at a block level, except perhaps for auxiliary power to support retention logic.

In addition to these options, there are other techniques such as the use of mixed VT libraries. Madhumita also expanded on board and backplane considerations in balancing performance vs. power in PCIe 6.0. Lower power can be achieved with shorter channel reach. For a more comprehensive discussion on these topics, I encourage you to watch the webinar.
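Why DVFS pays off so well follows from the textbook first-order model for dynamic power in CMOS logic, where power is proportional to switched capacitance times voltage squared times frequency. The sketch below uses that standard approximation, which was not part of the webinar, and the helper name `dynamic_power_ratio` is mine. It ignores leakage and treats switched capacitance and activity as unchanged.

```python
def dynamic_power_ratio(voltage_scale: float, frequency_scale: float) -> float:
    """Relative dynamic power after scaling voltage and frequency.

    Uses the first-order model P_dyn ~ C * V^2 * f, with switched
    capacitance and activity assumed unchanged. Leakage is not modeled.
    """
    if voltage_scale <= 0 or frequency_scale <= 0:
        raise ValueError("scale factors must be positive")
    return voltage_scale ** 2 * frequency_scale


# Example: run a block at 90% voltage and 80% clock frequency.
ratio = dynamic_power_ratio(voltage_scale=0.9, frequency_scale=0.8)
print(f"dynamic power is about {ratio:.0%} of nominal")
```

Because voltage enters squared, even a modest voltage reduction combined with a slower clock cuts dynamic power by roughly a third in this example.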

Latency in PCIe/CXL: Waiting is the Hardest Part!

Gary Ruggles recommends utilizing optimized embedded endpoints to reduce latency. These endpoints avoid a trip through the full PCIe protocol stack on the host side, across a physical connection, and then through the full PCIe protocol stack again on the device side. For example, a NIC interface could be embedded directly in the same SoC as the host, connecting to the PCIe switch directly through a low latency interface.

Gary also expanded on using a faster clock to decrease latency, while acknowledging the obvious challenges. A faster clock may require higher voltage levels, leading to increased dynamic power consumption, and higher speed libraries increase leakage power. However, the tradeoff between clock speed and pipelining is not always clear-cut. Despite the potential increase in power consumption, a faster clock may still result in a performance advantage if the added pipelining latency is outweighed by the reduction in functional latency. Latency considerations factor in how you plan power states in PCIe. Fine-grained power state management can reduce power usage, but it also results in increased exit latencies, which can become more consequential when managing power aggressively.

Gary’s final point in managing latency is considering the use of CXL. This protocol is built on PCIe, while also supporting the standard protocol through CXL.io. CXL’s claim to fame is support for cache coherent communication through CXL.cache and CXL.mem. These interfaces offer much lower latency than PCIe. If you need coherent cache/memory access, CXL could be a good option.
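The clock speed versus pipelining tradeoff is easy to check with simple arithmetic: latency through a pipeline is the number of stages times the clock period. The stage counts and frequencies below are hypothetical numbers chosen for illustration, and `pipeline_latency_ns` is a helper name of my own.

```python
def pipeline_latency_ns(stages: int, clock_ghz: float) -> float:
    """Latency through a pipeline, assuming one clock period per stage."""
    if stages < 1 or clock_ghz <= 0:
        raise ValueError("stages must be >= 1 and clock_ghz must be positive")
    # A clock of f GHz has a period of 1/f ns.
    return stages / clock_ghz


# Hypothetical design points: adding 2 pipeline stages to close timing at 1.5 GHz.
baseline = pipeline_latency_ns(stages=10, clock_ghz=1.0)
faster = pipeline_latency_ns(stages=12, clock_ghz=1.5)
print(f"baseline: {baseline:.1f} ns, faster clock: {faster:.1f} ns")
```

In this made-up case the faster clock wins, 8 ns against 10 ns, even with two extra stages. If closing timing had required 16 stages instead, the faster design would come out slower at about 10.7 ns, which is why the answer depends on the specific design.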

Takeaways

Power consumption is a major concern in datacenters. The PCIe standard makes allowance for multiple power states to take advantage of opportunities to reduce power in the PHY and in the digital logic. Taking full advantage of the possibilities requires careful tradeoffs between optimization for latency, power, and throughput, all the way from software down to the PCIe physical layer. When suitable, CXL proves to be a promising solution, offering much lower latency compared to conventional PCIe.

Naturally, Synopsys has production IP for PCIe (all the way up to Gen 6) and for CXL (all the way to CXL 3.0).

You can watch the webinar HERE.

