High-level synthesis (HLS) has been part of the backdrop of design automation for so long that it has become one of those things nobody notices any more. But it has also crept up on people, going from an interesting technology to keep an eye on to one with genuine adoption. The first commercial product in the space was Behavioral Compiler, introduced by Synopsys in 1994. In that era we all thought that design would inevitably move up from RTL to C, but in fact IP came along as the dominant methodology, with IP blocks largely designed in RTL (the digital ones, anyway) and then assembled into SoCs.

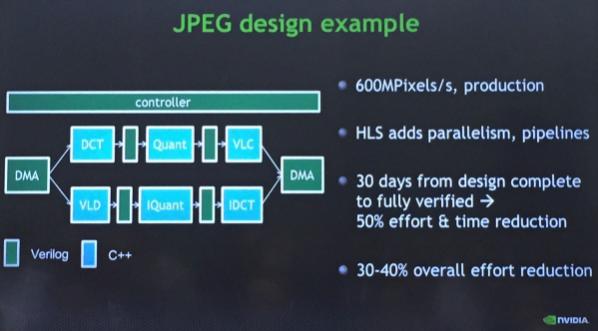

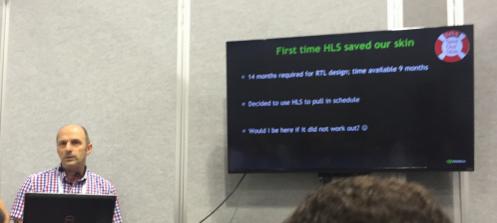

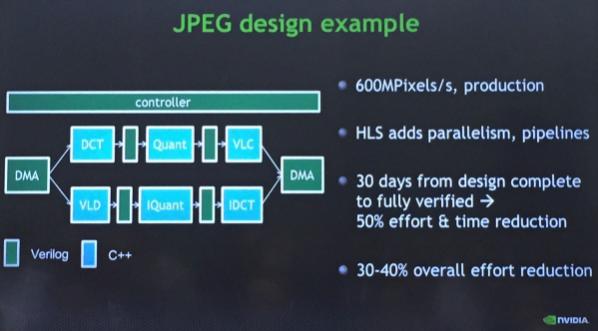

I attended a presentation at the Calypto theatre by Frans Sijstermans of Nvidia titled "How HLS Saved Our Skin…Twice". Nvidia were facing the problem that designs were growing at 1.4X per generation but design capacity at only 1.2X, a gap that widens with every generation. In 2013 the video team faced a new chip with all sorts of new technologies on the must-have list: HEVC, VP9, deep color, 4K resolution and more. An analysis showed that using their old methodology would take 14 months, but only 9 months were available. Google had been using HLS successfully for video and talking publicly about it, so Nvidia contacted them and then decided to use HLS to pull in the schedule.

So the first time their skin was saved was getting the design on track for an RTL freeze in March 2014, using 8-bit color. Then came the Consumer Electronics Show (CES) in January 2014, where lots of people were showing HEVC, but with 10-bit color. They realized that the project as planned had no future: they had guessed wrong about market requirements. “We messed up,” the head of the division admitted. Without HLS there would have been absolutely no way to fix this, since the change affected every register and operator of every datapath in the entire design.

They recoded the C++ for 10 bits instead of 8 and ran it through HLS. All the pipeline stages changed due to the different performance. But would it work? How could they verify it? They worked out that running the 100,000 tests the HEVC standard provided at the RTL level would take 1000 cores for 3 months. Instead they did the verification by running all the tests at the C++ level, which took a single CPU 3 days. Of course they still ran a bunch of tests at the RTL level, just to be safe. They taped out the two versions, a 20nm block for mobile at 510MHz and a 28HP block for a discrete GPU at 800MHz.
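
To make the idea concrete, here is a minimal sketch of what "recoding for 10 bits and re-verifying in C++" could look like. It is not Nvidia's code: the function names (`max_value`, `reconstruct`, `count_mismatches`) are hypothetical, plain integer types stand in for the bit-accurate types an HLS flow would normally use, and the operation shown (add a residual to a prediction and clip to the legal sample range) is just one representative datapath step.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>

// Largest legal sample value for a given bit depth (255 for 8, 1023 for 10).
// Hypothetical helper for this sketch.
template <unsigned Bits>
constexpr uint32_t max_value() {
    static_assert(Bits >= 1 && Bits <= 16, "sketch supports 1..16 bits");
    return (1u << Bits) - 1u;
}

// Datapath under test: prediction plus residual, clipped to the sample range.
// The bit depth is a template parameter, so 8-bit vs 10-bit is a one-token
// change at the call site rather than an edit to every register.
template <unsigned Bits>
uint16_t reconstruct(uint16_t pred, int32_t residual) {
    const int32_t hi = static_cast<int32_t>(max_value<Bits>());
    int32_t v = static_cast<int32_t>(pred) + residual;
    if (v < 0) v = 0;
    if (v > hi) v = hi;
    return static_cast<uint16_t>(v);
}

// C++-level regression: exhaustively compare the datapath against an
// independently written reference and count disagreements.
template <unsigned Bits>
long count_mismatches() {
    const int32_t hi = static_cast<int32_t>(max_value<Bits>());
    long mismatches = 0;
    for (int32_t pred = 0; pred <= hi; ++pred) {
        for (int32_t res = -hi; res <= hi; ++res) {
            const int32_t expected =
                std::max<int32_t>(0, std::min<int32_t>(hi, pred + res));
            if (reconstruct<Bits>(static_cast<uint16_t>(pred), res) != expected) {
                ++mismatches;
            }
        }
    }
    return mismatches;
}
```

With this structure, `reconstruct<8>(250, 10)` saturates at 255 while `reconstruct<10>(250, 10)` returns 260, and `count_mismatches<10>()` covers roughly two million input combinations in well under a second on an ordinary CPU. That is the kind of speed gap over RTL simulation the Nvidia numbers reflect.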

Nvidia’s conclusions:

One company that has been using HLS for a long time is STMicroelectronics. Their experience has been that, increasingly, RTL is the wrong level at which to code IP, since it locks in the microarchitecture in a way that is next to impossible to change. The same algorithm in 28nm and 16nm will require a completely different pipeline running at a completely different frequency (after all, the throughput of, say, 4K video doesn’t change depending on the silicon used for implementation; it is set by the video standards).
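
A quick back-of-the-envelope calculation shows why the pipeline has to change with the clock. The sketch below assumes 4K means 3840×2160 at 60 frames per second, and the two clock frequencies (300MHz and 600MHz) are made-up values standing in for a slower and a faster process; none of these numbers come from ST.

```cpp
#include <cassert>
#include <cstdint>

// Pixels per second demanded by a video format. This is fixed by the
// standard, not by the silicon.
constexpr uint64_t pixel_rate(uint64_t width, uint64_t height, uint64_t fps) {
    return width * height * fps;
}

// Minimum whole number of pixels the datapath must consume per clock cycle
// to keep up with the required rate (ceiling division).
constexpr uint64_t pixels_per_clock(uint64_t rate, uint64_t clock_hz) {
    return (rate + clock_hz - 1) / clock_hz;
}

// Assumed format: 3840x2160 at 60 frames per second.
constexpr uint64_t k4k60 = pixel_rate(3840, 2160, 60);
static_assert(k4k60 == 497664000ULL, "about 498 Mpixel/s");

// Hypothetical clocks: the slower one needs a 2-pixel-wide datapath,
// the faster one gets by with 1 pixel per clock.
inline void check_examples() {
    assert(pixels_per_clock(k4k60, 300000000ULL) == 2);
    assert(pixels_per_clock(k4k60, 600000000ULL) == 1);
}
```

The required pixel rate is the same in both cases, but the datapath width, and with it the pipeline structure, differs. In RTL that is a rewrite; with HLS it is in principle a change of constraints and a re-run of the tool.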

The use of FPGAs in datacenters has been at the forefront of everyone’s attention recently with Intel’s acquisition of Altera (not yet completed). Nobody thinks Intel is doing this because they want a small (by their standards) FPGA business; rather, if integrated FPGAs in the datacenter are important, they need control of that technology at more than a partner/foundry level. Last year a high-profile paper by Microsoft showed how using FPGAs, with high-level synthesis to get the algorithms into them, could double the performance of the Bing search engine. Other similar papers have shown acceleration of other algorithms that are not handled especially well on a microprocessor but suit the huge inherent parallelism of an FPGA.

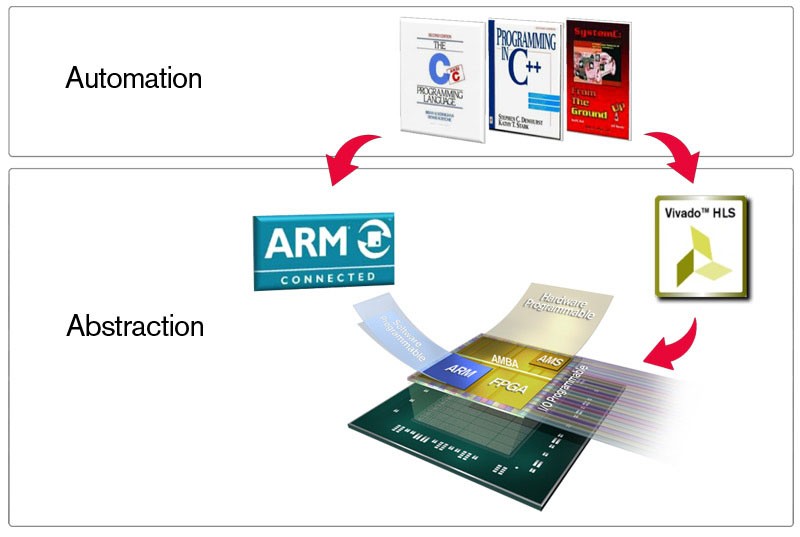

The leader in HLS, at least in terms of real active users, has to be Xilinx. They acquired AutoESL a few years ago as the seed for what has become Vivado HLS. There are over 1000 users not just playing around with it but using it for production bitstreams (the FPGA equivalent of a tapeout). For sure there is some learning curve, but it seems to take about a week with an applications engineer (AE) to get a team proficient in the new methodology. That mirrors the Nvidia experience, where the team hit the ground running with zero prior HLS experience.

HLS is also used in several other Xilinx approaches. For example, in SDSoC it is only necessary to mark a block of software code for acceleration. Under the hood, HLS turns that block into FPGA gates, and the tool also generates the gates needed to move data in and out along with the software stubs. The rest of the code runs on the ARM processor on the same silicon. In a sense, HLS is the key technology for software-defined hardware.
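
As a rough illustration of the kind of code block that lends itself to this treatment, here is a small sketch. The function name `scale_add` and the block size `kN` are hypothetical, and the way a function is actually selected for hardware in SDSoC is done in the tool and is not shown here. The only tool-specific line is the Vivado HLS `PIPELINE` pragma, which an ordinary C++ compiler simply ignores, so the same source still runs as plain software.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical fixed block size. HLS tools want loop bounds and array
// sizes that are known at compile time.
constexpr int kN = 64;

// Candidate for acceleration: fixed-size arrays, no dynamic allocation,
// no recursion, and a loop with a static trip count.
void scale_add(const int32_t a[kN], const int32_t b[kN], int32_t gain,
               int32_t out[kN]) {
    for (int i = 0; i < kN; ++i) {
        // Vivado HLS directive asking for one loop iteration to start per
        // clock; a normal C++ compiler ignores this unknown pragma.
#pragma HLS PIPELINE II=1
        out[i] = gain * a[i] + b[i];
    }
}
```

Because the function is ordinary C++, it can be tested on the processor first and only then handed to the hardware flow, which is much of the appeal of the approach.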

Xilinx is not the only company in the space. Cadence developed their C-to-Silicon Compiler and then acquired Forte Design, so they are also a player. That would be a bagpipe player, because by acquiring Forte they also acquired the responsibility of arranging for the traditional bagpipers to play Amazing Grace to close the Design Automation Conference exhibits, which they duly did on Wednesday evening.

It is a long way from academic research and Behavioral Compiler to where we are today, with mature technology in use for some extremely demanding SoCs and high-end FPGA systems.
