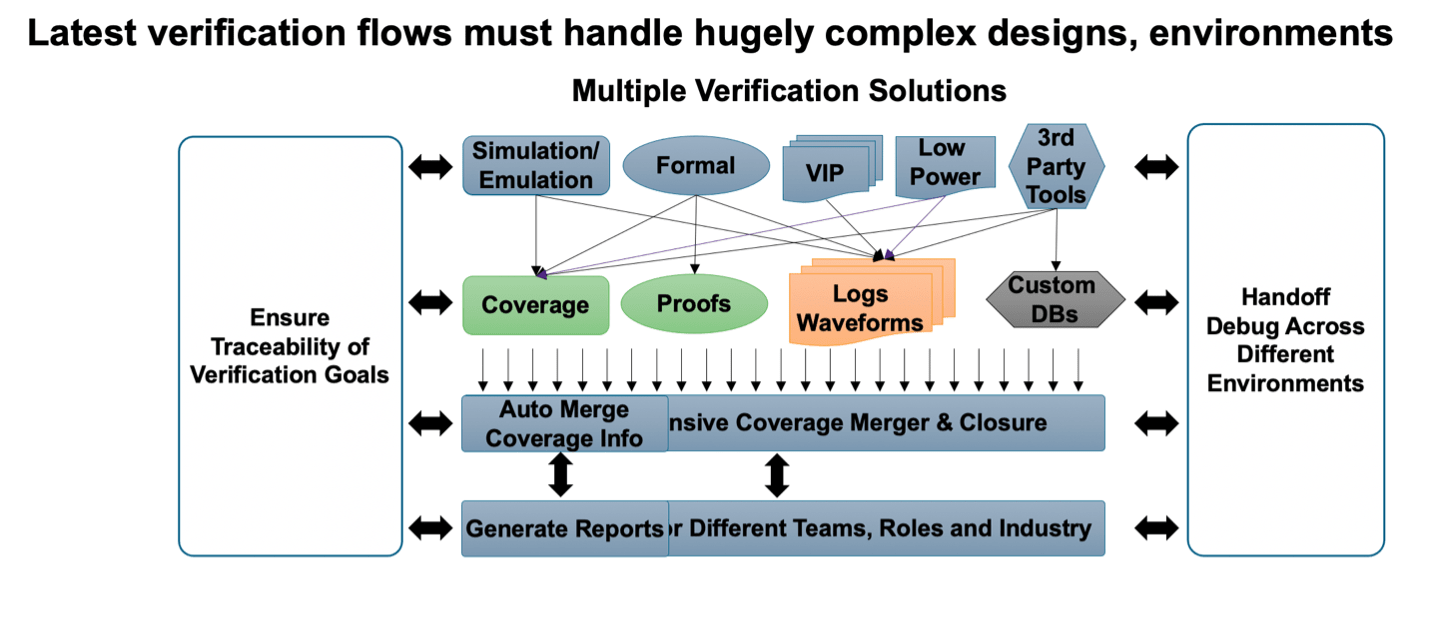

Remember the days when verification meant running a simulator with directed tests? (Back then we just called them tests.) Then came static and formal verification, simulation running in farms, emulation and FPGA prototyping. We now have UVM, constrained random testing and many different test objectives (functional, power, DFT, safety, security, cache coherence), spread over giant designs that need hierarchies of test suites. On top of that come giant regressions to ensure backward compatibility, compliance and coverage, while aiming to optimize use of compute farms and clouds. It’s all become a bit more complicated than it used to be. To achieve the productivity and efficiency gains needed to keep up, automating verification management across this complex and diverse set of objectives becomes essential.

Comprehensive verification management

In a recently recorded video, Kirankumar Karanam (AE Manager, Synopsys Verification Group) walks through the Synopsys VC Execution Manager (ExecMan) answer to this need. The ExecMan solution has five primary goals:

- Provide a systematic path from testplan through execution, debug, coverage and trend analysis

- Optimize regression turn-around times

- Minimize debug turn-around times

- Optimize time to closure

- Utilize the grid as effectively as possible

The planning phase always intrigues me: linking a design plan to a test plan and subsequently through to analysis and debug. In a short overview there wasn’t time to go into more detail on this topic, but I could see this being very useful in establishing traceability between specs and testing.

Optimizing regression turnaround-time and debug productivity

One important consideration in optimizing regression throughput is simply load-balancing: packing jobs so that the total turn-around time per regression pass is as short as possible. The manager helps optimize this balancing. It also apparently uses coverage analytics to identify redundant tests and, to some degree, reduce them. There’s also a note in the slides on VCS engine performance enhancement in this release, which I believe is VCS 2020.12.
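The talk doesn’t say how ExecMan balances load, so the following is only a sketch of the general idea, using the textbook longest-processing-time-first heuristic: sort jobs by estimated runtime and always hand the next job to the least-loaded slot. The function name, job names and runtimes are all hypothetical, chosen for illustration.

```python
import heapq


def balance_jobs(runtimes, num_slots):
    """Pack jobs onto slots using longest-processing-time-first (LPT).

    runtimes:  dict mapping job name -> estimated runtime (e.g. minutes).
    num_slots: number of parallel grid slots available.
    Returns (assignments, makespan); assignments[i] lists the jobs on slot i,
    and makespan is the load of the busiest slot (the regression turn-around).
    """
    if num_slots < 1:
        raise ValueError("num_slots must be at least 1")

    # Min-heap of (current load, slot index): the least-loaded slot pops first.
    heap = [(0, slot) for slot in range(num_slots)]
    heapq.heapify(heap)
    assignments = [[] for _ in range(num_slots)]

    # Longest jobs first; ties broken by name so the result is deterministic.
    for job, runtime in sorted(runtimes.items(), key=lambda kv: (-kv[1], kv[0])):
        load, slot = heapq.heappop(heap)
        assignments[slot].append(job)
        heapq.heappush(heap, (load + runtime, slot))

    makespan = max(load for load, _ in heap)
    return assignments, makespan


# Hypothetical regression: five tests with estimated runtimes in minutes.
example_runtimes = {
    "smoke": 5,
    "dma_rand": 40,
    "cache_coh": 60,
    "pwr_seq": 25,
    "dft_scan": 30,
}
example_assignments, example_makespan = balance_jobs(example_runtimes, num_slots=2)
```

LPT is a heuristic, not an optimal packer, and a production scheduler would also have to cope with runtime estimates that turn out to be wrong, job dependencies and hosts of differing speed.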

To optimize debug productivity, the manager provides help in several ways. First, it automatically sets up debug runs to run in parallel with ongoing regression runs. You can supply debug hooks up-front to drive such runs. The slides also mention ML-based failure triage and debug assistant(s), though these were not elaborated in the talk. These are topics I cover from time to time, and they could be very helpful.

Optimizing closure turn times and grid utilization

Here there’s more focus on test grading by coverage, to filter out tests which don’t contribute significantly. Synopsys have also just introduced a feature called Intelligent Coverage Optimization (ICO), which uses ML to bias constraints for randomization, again to minimize low-value simulations. They claim a 5X reduction in turn-around time using this technique for stable constrained random (CR) regressions.
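To make test grading by coverage concrete, here is a minimal sketch of one common approach, a greedy ranking in which each step keeps the test that adds the most not-yet-covered bins. This is not a description of how ExecMan or ICO work internally; the function name, test names and coverage bins are hypothetical.

```python
def grade_tests(coverage_by_test):
    """Greedily rank tests by the new coverage each one contributes.

    coverage_by_test: dict mapping test name -> set of coverage bins it hits.
    Returns (kept, redundant): kept is a list of (test, newly_covered_bins)
    in selection order; redundant is a sorted list of tests adding nothing.
    """
    remaining = dict(coverage_by_test)
    covered = set()
    kept = []

    while remaining:
        # Pick the test with the largest incremental coverage.
        # Iterating over sorted names makes ties resolve alphabetically.
        best = max(sorted(remaining), key=lambda t: len(remaining[t] - covered))
        gain = len(remaining[best] - covered)
        if gain == 0:
            break  # every remaining test only repeats bins already covered
        kept.append((best, gain))
        covered |= remaining.pop(best)

    return kept, sorted(remaining)


# Hypothetical coverage data: bins are just labels here.
example_coverage = {
    "t_basic": {"a", "b"},
    "t_rand_1": {"a", "b", "c", "d"},
    "t_rand_2": {"c", "d"},
    "t_corner": {"e"},
}
example_kept, example_redundant = grade_tests(example_coverage)
```

In this toy data set two of the four tests reach every bin, so the other two are candidates for pruning. A real grading flow would typically weigh runtime against coverage gain as well, rather than counting bins alone.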

Finally, on this general optimization theme, the manager optimizes for grid efficiency, looking at the best way to assign tasks to specific grid hosts. The manager does this by analyzing environment and historical data.

More goodies

ExecMan adds further automation for results binning, re-run and debug through Verdi. It also supports coverage analysis through test grading and plan grading tools, and can link to bugs tracked in the Redmine issue tracker.

Kirankumar wraps up by describing a use-case they developed with a memory customer, based in this instance on VC SpyGlass regressions. An interesting point here is that this customer uses Jenkins for regression management, which required that ExecMan work with that flow. I don’t know how far that customer takes their use of Jenkins, but it’s encouraging to see tools from the agile world appearing in hardware regression flows.

You can watch the recorded video HERE.

Also Read:

Synopsys Debuts Major New Analog Simulation Capabilities

Accelerating Cache Coherence Verification

Addressing SoC Test Implementation Time and Costs
