I think most of us have come to terms with the need for multiple verification platforms, from virtual prototyping, through static and formal verification, to simulation, emulation and FPGA-based prototyping. The verification problem space is simply too big, in size certainly but also in dynamic range, to be effectively addressed by just one or even a couple of platforms. We need microscopes, magnifying glasses and telescopes to fully inspect this range.

Which is all very well, but real problems don’t neatly divide themselves into domains that can be fully managed within one platform. When debugging such a problem, sometimes you need the telescope and sometimes the microscope or magnifying glass.

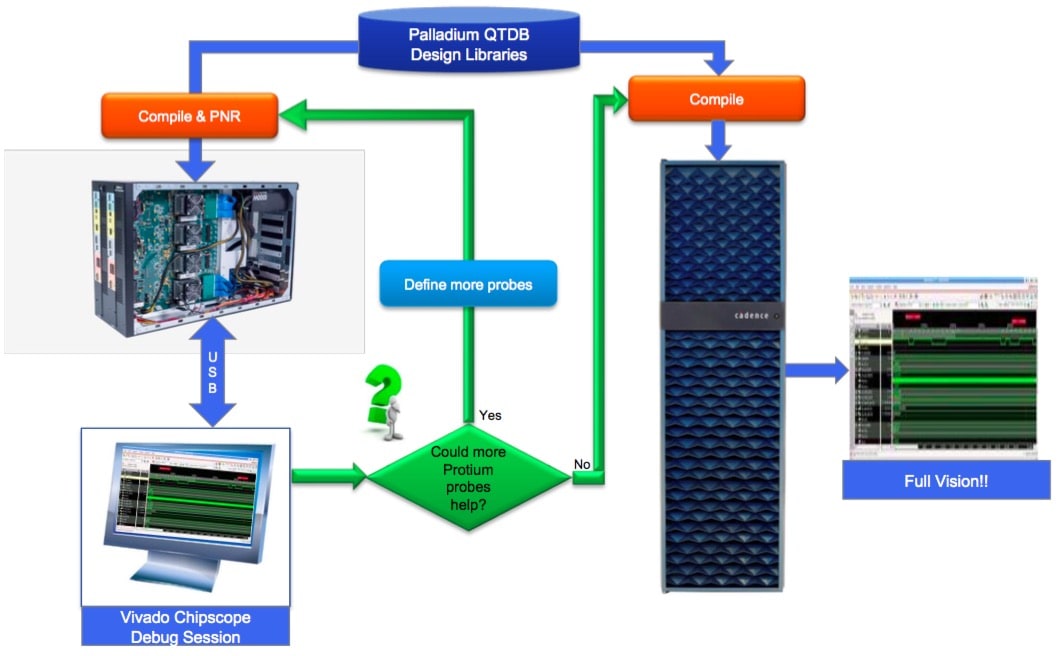

This in turn means you need to be able to flip back and forth easily between these platforms. I wrote in an earlier blog (Aspirational Congruence) on a Cadence goal to make these transitions as easy as possible between emulation (on Palladium) and prototyping (on Protium). It looks like they have succeeded, so much so that the boundaries between emulation and prototyping are starting to blur. Frank Schirrmeister at Cadence pointed me to three customer presentations which illustrate collaborative verification between these platforms.

One, from Microsemi, illustrates the needs in bringing up hardware and firmware more or less simultaneously for multi-processor RAID SoCs. The need to model with software and realistic traffic makes hardware assist essential, but they must also span a range from detailed hardware debug and power and performance modeling to the faster performance required for regressions, where detailed debug access is not necessary. If a regression fails on the prototyper, they may want to drop that case back onto the emulator, a step greatly simplified by the common compile front-end and memory backdoor access between the platforms.

Amlogic, who build over-the-top set-top boxes, media dongles and related products, have similar needs but helpfully highlighted different aspects of the benefits of using a mixed prototyping/emulation flow. Their systems obviously depend on a lot of software (they have to be able to boot Linux and Android in full-system debug) and naturally some post-boot problems straddle both hardware and software. Again, they saw the benefit of speed in prototyping with ease of switching back to emulation for detailed debug. An interesting point here is that Amlogic used hands-free setup for Protium, which gave them 5MHz performance versus 1MHz on Palladium. That was probably a pretty speedy setup too, since they weren’t trying to tune the prototype; yet this apparently delivered enough performance for their needs. Amlogic’s measure of the effectiveness of this flow was pretty simple. They were able to get basic Android running on first silicon after 30 minutes and deliver a full Android demo to a customer in 3 days. That’s pretty compelling.

Finally, Sirius-XM Radio gave a presentation at DAC this year on their use of this coupled configuration and the benefits they saw. (If you weren’t aware, Sirius-XM designs all the baseband devices that go in every satellite receiver.) There are some similar needs, but also some interesting differences. Between satellites, ground-based repeaters and temporary loss of signal (going under a bridge for example), there’s significant interleaving of signals spanning 4 to 20 seconds which must be modeled. Overall, for their purposes they have to support 2 full real-time weeks of testing; both of these needs obviously require hardware assist. Historically they did this with their own custom-crafted FPGA prototype boards, but observed that this wouldn’t scale to newer designs. For example, for the DDR3 controller in their design, they had to use the Xilinx hard macro, which didn’t correspond to what they would build in silicon and might have (had they followed this path on their latest device) let through a bug requiring a silicon re-spin.

Instead they switched to a Palladium/Protium combo where they could model exactly what they were going to build. They used the Palladium for end-to-end hardware verification, running 1.5 minutes of real-time data in 15 hours. Meantime, the software team did their work on a Protium platform, running 1.5 minutes of real-time data in 3 hours, which served them well enough to port libraries, the OS and other components and to validate all of these. Again, where issues cropped up (which they did), the hardware team was able to switch the problem test-run over to the Palladium where they could debug the problem in detail.
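To put those Sirius-XM numbers in perspective, here is a quick back-of-the-envelope sketch of the slowdown relative to real time on each platform. The function and variable names are my own, purely for illustration; the only inputs are the run times quoted above.

```python
def slowdown(real_time_minutes: float, wall_clock_hours: float) -> float:
    """Return how many times slower than real time a run is.

    Computed as wall-clock duration divided by the duration of the
    real-time data being modeled, both expressed in minutes.
    """
    return (wall_clock_hours * 60.0) / real_time_minutes


# Figures quoted in the presentation: 1.5 minutes of real-time data,
# taking 15 hours on Palladium and 3 hours on Protium.
palladium_slowdown = slowdown(1.5, 15)
protium_slowdown = slowdown(1.5, 3)

# Ratio between the two platforms for this particular workload.
protium_speedup = palladium_slowdown / protium_slowdown

print(f"Palladium: {palladium_slowdown:.0f}x slower than real time")
print(f"Protium:   {protium_slowdown:.0f}x slower than real time")
print(f"Protium vs. Palladium: {protium_speedup:.0f}x faster")
```

That works out to roughly 600x slower than real time on the emulator and 120x on the prototyper, a 5x gap between the two, which happens to match the 5MHz versus 1MHz ratio Amlogic reported.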

The thread that runs through all these examples is that default (and therefore fast) setup on the prototyper was good enough for all these teams, together with ease of interoperability between the emulator and the prototyper. For hardware developers, this supports switching back from a software problem in prototyping to emulation when you suspect a hardware bug and need greater visibility. Equally, the hardware team could use the prototyper to get past lengthy boot and OS bring-up, to move on to where the interesting bugs start to appear. And for software developers, this setup enabled work on porting, development and regression testing using the same device model used in hardware development. For each of these customers, the bottom line was that they were able to bring up software on first silicon in no more than a few hours. Who wouldn’t want to be able to deliver that result?
