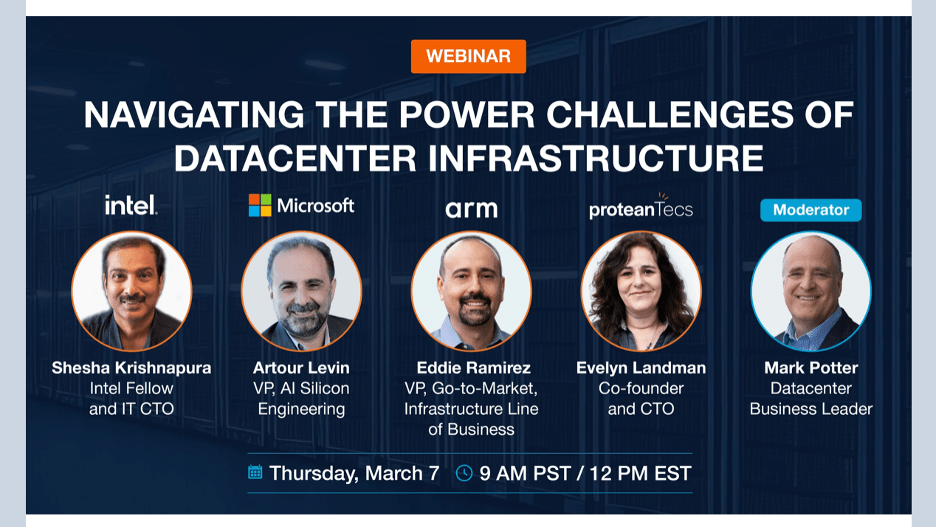

That datacenters are power hogs is not news, especially now that AI is further aggravating the challenge. I found a recent proteanTecs-hosted panel on power challenges in datacenter infrastructure quite educational, both in quantifying the scale of the problem and in understanding what steps are being taken to slow growth in power consumption. Panelists included Shesha Krishnapur (Intel fellow and IT CTO), Artour Levin (VP, AI silicon engineering at Microsoft), Eddie Ramirez (Arm VP for Go-to-Market in the infrastructure line of business), and Evelyn Landman (Co-founder and CTO at proteanTecs). Mark Potter (VC and previously CTO and Director of HP Labs) moderated. This is an expert group directly responsible for or closely partnered with some of the largest datacenters in the world. What follows is a condensation of key points from all speakers.

Understanding the scale and growth trends

In 2022, US datacenters accounted for 3.5% of total energy consumption in the country. Intel sees 20% compute growth year over year which, through improved designs and process technologies, is translating into 10% year-over-year growth in power consumption.
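To get a feel for what those two growth rates imply when compounded, here is a minimal sketch. It assumes both rates stay constant year after year, which the panel did not claim; the function names are illustrative only.

```python
import math


def doubling_time_years(annual_growth: float) -> float:
    """Years for a quantity to double at a constant annual growth rate."""
    return math.log(2) / math.log(1 + annual_growth)


def power_per_unit_compute(years: int,
                           compute_growth: float = 0.20,
                           power_growth: float = 0.10) -> float:
    """Relative power per unit of compute after `years` (1.0 = today).

    Assumes the 20% compute / 10% power growth figures quoted above
    hold unchanged every year, which is a simplification.
    """
    return ((1 + power_growth) / (1 + compute_growth)) ** years


if __name__ == "__main__":
    print(f"Power doubles in ~{doubling_time_years(0.10):.1f} years")
    print(f"Compute doubles in ~{doubling_time_years(0.20):.1f} years")
    print(f"Power per unit compute after 5 years: "
          f"{power_per_unit_compute(5):.2f}x today")
```

Under these assumptions efficiency per unit of compute keeps improving, yet total power still doubles in a little over seven years.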

But that’s for CPU-based workloads. Shesha expects demand from AI-based workloads will grow at twice that rate. One view is that a typical AI-accelerated server draws 4X the power of a conventional server. A telling example suggests that AI-based image generation consumes almost 10X the power of simply searching for images online. Not an apples-to-apples comparison of course, but if the AI option is easier and produces more intriguing results, are end-users going to worry about power? AI has the potential to turn an already serious power consumption problem into a crisis.

For cooling/thermal management the default today is still forced air cooling, itself a significant contributor to power consumption. There could be better options but re-engineering existing infrastructure for options like liquid/immersion cooling is a big investment for a large datacenter; changes will move slowly.

Getting back onto a sustainable path

Clearly this trend is not sustainable. There was consensus among panelists that there isn’t a silver bullet fix and that datacenter power usage effectiveness (PUE) must be optimized system-wide through an accumulation of individually small refinements, together adding up to major improvements.

Shesha provided an immediate and intriguing example of improvements he has been driving for years in Intel datacenters worldwide. The default approach, based on mainframe expectations, had required cooling to 64-68°F to maximize performance and reliability. Research from around 2010 suggested improvements in IT infrastructure would allow 78°F as a workable operating temperature. Since then the limit has been pushed up higher still, so that PUEs have dropped from 1.7/1.8 to 1.06 (at which level almost all the power entering the datacenter is used by the IT equipment rather than big cooling systems).
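PUE is the ratio of total facility power to the power consumed by IT equipment, so the figures above translate directly into overhead. The sketch below works through that arithmetic; the 1 MW IT load is a hypothetical number chosen for illustration, not one quoted by the panel.

```python
def non_it_fraction(pue: float) -> float:
    """Fraction of total facility power NOT reaching IT equipment.

    PUE = total facility power / IT equipment power, so the IT share
    is 1/PUE and everything else (cooling, power delivery losses,
    lighting) is 1 - 1/PUE.
    """
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return 1.0 - 1.0 / pue


def facility_power_kw(it_power_kw: float, pue: float) -> float:
    """Total facility power needed to support a given IT load."""
    return it_power_kw * pue


if __name__ == "__main__":
    it_load_kw = 1000.0  # hypothetical 1 MW IT load, for illustration only
    for pue in (1.7, 1.06):
        print(f"PUE {pue}: {non_it_fraction(pue):.1%} overhead, "
              f"{facility_power_kw(it_load_kw, pue):.0f} kW total")
```

At a PUE of 1.7 roughly 41% of incoming power goes to something other than IT equipment; at 1.06 that falls to under 6%.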

In semiconductor design, everyone stressed that power savings will have to be squeezed out through an accumulation of many small improvements. For AI, datacenter inference usage is expected to dominate training usage if AI monetization is going to work. (Side note: this has nothing to do with edge-based inference. Business applications at minimum are likely to remain cloud based.) One way to reduce power in inference is through low-precision models. I wouldn’t be surprised to see other edge AI power optimizations such as sparse matrix handling making their way into datacenters.

Conversely, AI can learn to optimize resource allocation and load balancing for varying workloads to reduce net power consumption. Aligning compute and data locations and packing workloads more effectively across servers will leave more servers inactive at any given time, and those can be powered down.
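The packing idea can be illustrated with a classic first-fit-decreasing heuristic. This is a toy sketch, not what any panelist described: real schedulers also weigh memory, data locality and service-level constraints, and the load values here are made up.

```python
def pack_workloads(loads: list[int], capacity: int = 100) -> list[list[int]]:
    """First-fit-decreasing bin packing.

    `loads` are workload demands as a percentage of one server's
    capacity (hypothetical units). Returns one list of loads per
    active server.
    """
    servers: list[list[int]] = []
    for load in sorted(loads, reverse=True):
        if load > capacity:
            raise ValueError(f"workload {load} exceeds server capacity")
        for server in servers:
            # Place the workload on the first server with enough headroom.
            if sum(server) + load <= capacity:
                server.append(load)
                break
        else:
            # No existing server can take it: bring another one online.
            servers.append([load])
    return servers


if __name__ == "__main__":
    demands = [50, 20, 40, 30, 10, 50]  # made-up loads, one per server today
    active = pack_workloads(demands)
    print(f"{len(active)} active servers, "
          f"{len(demands) - len(active)} can be powered down")
```

With these made-up loads, six lightly used servers consolidate onto two, leaving four that could be powered down.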

Naturally Eddie promoted performance/watt for scale-out workloads; Arm have been very successful in recognizing that one size does not fit all in general-purpose datacenters. Servers designed for high performance compute must coexist with servers for high traffic tasks like video-serving and network/storage traffic optimization, each tuned for a different performance/watt profile.

Meanwhile immersion and other forms of liquid cooling, once limited to supercomputer systems, are now finding their way into regular datacenters. These methods don’t reduce IT systems power consumption, but they are believed to be more power-efficient in removing heat than traditional cooling methods, allowing for either partial or complete replacement of forced air systems over time.

Further opportunities for optimization

First, a reminder of why proteanTecs is involved in this discussion. They are a very interesting organization providing monitor/control “agent” IPs which can be embedded in a semiconductor design. In mission mode these can be used to supply in-field analytics and actionable insights on performance, power and reliability. Customers can for example use these agents to adaptively optimize voltages for power reduction while not compromising reliability. proteanTecs claim demonstrated 5% to 12% power savings across different applications when using this technology.
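To see why small voltage adjustments matter, recall the textbook approximation that dynamic switching power scales with the square of supply voltage at fixed frequency. The sketch below uses that relation only; it ignores leakage and is an assumption of mine, not a description of how proteanTecs arrives at its numbers.

```python
import math


def power_saving_from_voltage_drop(voltage_drop: float) -> float:
    """Fractional dynamic power saving for a fractional voltage reduction.

    Assumes P_dynamic is proportional to V^2 at fixed frequency and
    ignores leakage, so this is a rough approximation only.
    """
    return 1.0 - (1.0 - voltage_drop) ** 2


def voltage_drop_for_saving(power_saving: float) -> float:
    """Fractional voltage reduction needed for a target power saving."""
    return 1.0 - math.sqrt(1.0 - power_saving)


if __name__ == "__main__":
    for saving in (0.05, 0.12):
        drop = voltage_drop_for_saving(saving)
        print(f"{saving:.0%} power saving needs roughly a "
              f"{drop:.1%} voltage reduction")
```

Under this simplified model, savings in the quoted 5% to 12% range correspond to trimming supply voltage by only a few percent, which is why accurately knowing the remaining margin matters.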

Evelyn stressed that such approaches are not only a chip-level technology. The information provided must be processed in datacenter software stacks so that workload optimization solutions can take account of on-chip metrics in balancing between resources and systems. Eddie echoed this point, adding that the more information you have and the more telemetry you can provide to the software stack, the better the stack can exploit AI-based power management.

Multi-die systems are another way to reduce power since they bring otherwise separate components closer together, avoiding power-hungry communication through board traces and device pins.

Takeaways

For semiconductor design teams, expect power envelopes to be squeezed more tightly. Since thermal mitigation requirements are closely coupled to power, expect even more work to reduce hotspots. Also expect to add telemetry to hardware and firmware to guide adaptive power adjustments. Anything that affects service level expectations and cooling costs will go under the microscope. Designers may also be borrowing more power reducing design techniques from the edge. AI design teams will be squeezed extra hard 😀 Also expect a bigger emphasis on chiplet-based design.

In software stacks, power management is likely to become more sophisticated, adapting resource assignments to changing workloads and powering down systems that are not currently active.

In racks and the datacenter at large, expect more in-rack or on-chip liquid-based cooling, changing thermal management design and analysis at the package, board and rack level.

Lots to do! You can learn more HERE.

