Key Takeaways

- Semidynamics introduced an All-In-One AI IP processing element at Embedded World 2024, combining multiple IPs into a single integrated solution.

- The new design addresses high latency and programming challenges associated with traditional AI chip architectures that use separate IP blocks.

- The solution features a fully customizable RISC-V 64-bit core, Vector Units, and Tensor Units, enabling easier implementation with lower risk.

Semidynamics takes a non-traditional approach to design enablement. Not long ago, the company’s founder and CEO, Roger Espasa, unveiled extreme customization at the RISC-V Summit. That announcement focused on a RISC-V Tensor Unit designed for ultra-fast AI solutions. Recently, at Embedded World 2024, the company took this strategy a step further with an All-In-One AI IP processing element. Let’s look at the challenges this new IP addresses to understand how Semidynamics shakes up Embedded World 2024 with All-In-One AI IP to power nextgen AI chips.

The Problem

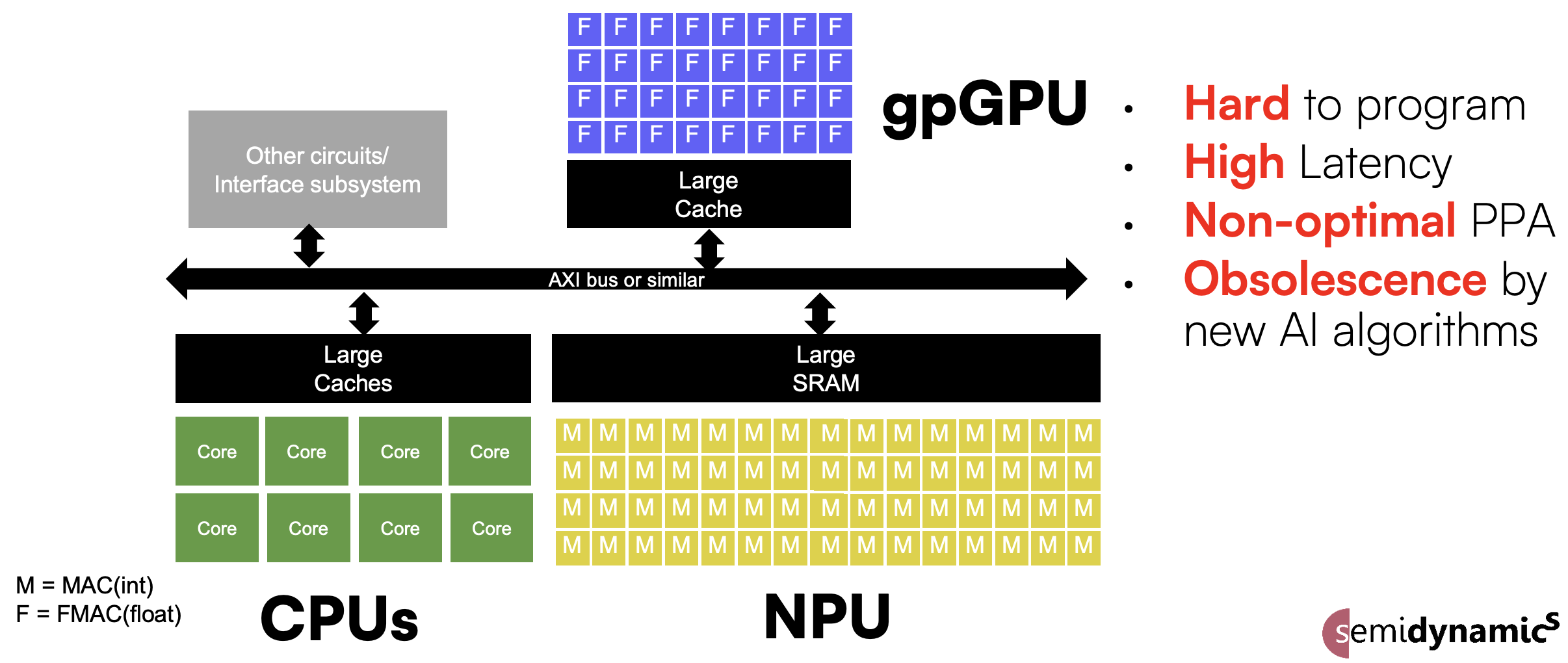

The current approach to AI chip design is to integrate separate IP blocks next to the system CPU to handle the ever-increasing demands of AI. As the data volume and processing demands of AI grow, more individual functional blocks are integrated. The CPU distributes dedicated partial workloads to gpGPUs (general-purpose graphics processing units) and NPUs (neural processing units). It also manages the communication between these units.

Moving data between the blocks this way causes high latency. Programming is also challenging since there are three different types of IP blocks with different instruction sets and tool chains. It is also worth noting that fixed-function NPU blocks can become obsolete quickly due to constant changes in AI algorithms. Software evolves faster than hardware.

The figure above illustrates what a typical AI-focused SoC looks like today.

The Semidynamics Solution

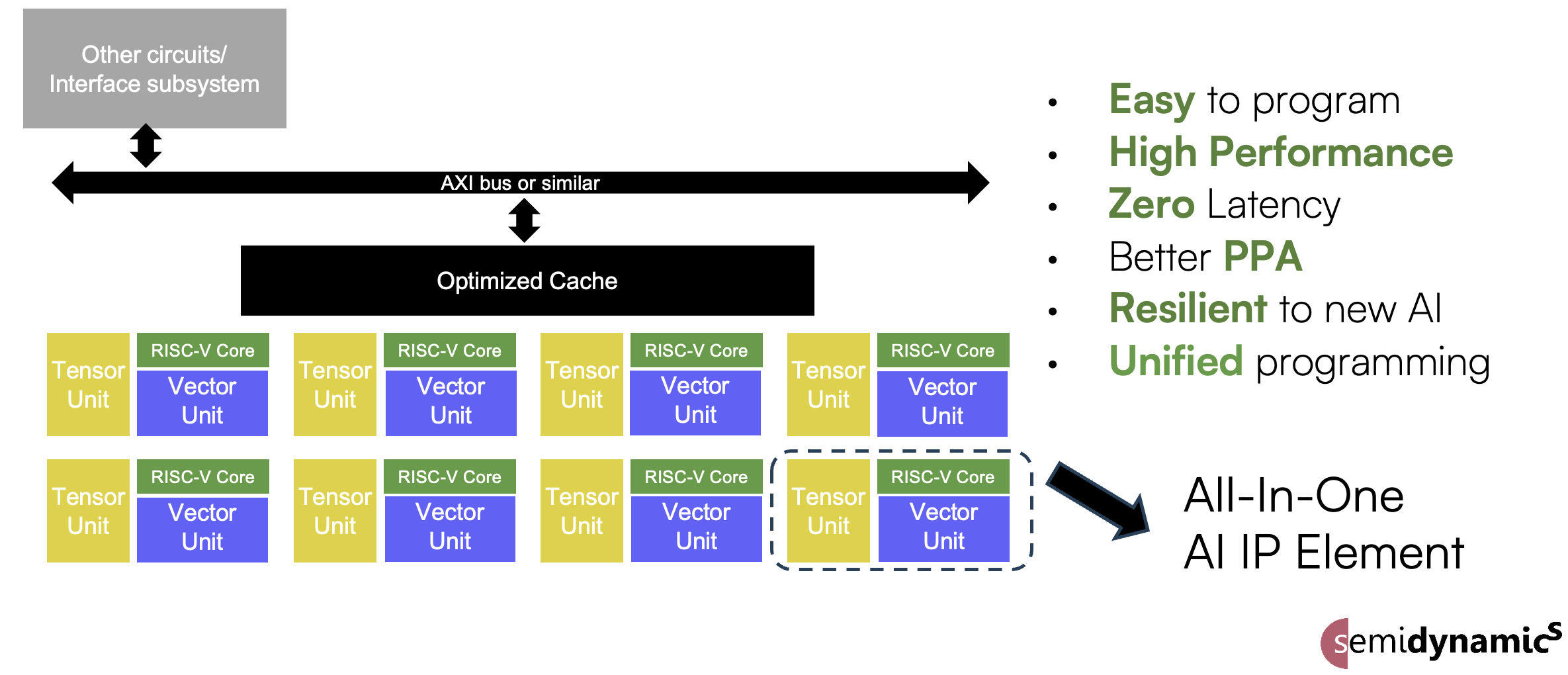

Semidynamics has taken a completely different approach to AI chip design. The company has combined four of its IPs to form one fully integrated solution, dubbed the All-In-One AI IP processing element. The approach delivers a fully customizable RISC-V 64-bit core, Vector Units (as the gpGPUs), and Tensor Units (as the NPUs). Semidynamics Gazzillion® technology ensures huge amounts of data can be handled without the issues of cache misses. You can learn more about Gazzillion here.

This approach delivers one IP supplier, one RISC-V instruction set, and one tool chain, making implementation easier and faster with lower risk. The approach is scalable, allowing as many new processing elements as required to be integrated on a single chip. The result is easier access to next-generation, ultra-powerful AI chips.

The figure above illustrates this new approach of fusing CPU, gpGPU, and NPU.

This approach goes well beyond what was announced at the RISC-V Summit. A powerful 64-bit out-of-order RISC-V CPU is combined with a 64-bit in-order RISC-V CPU, a vector unit, and a tensor unit. This delivers powerful AI-capable compute building blocks. Hypervisor support is enabled for containerization, and crypto is enabled for security and privacy. Gazzillion technology efficiently manages large data sets.

The result is a system that is easy to program, with high performance for parallel codes and zero communication latency.

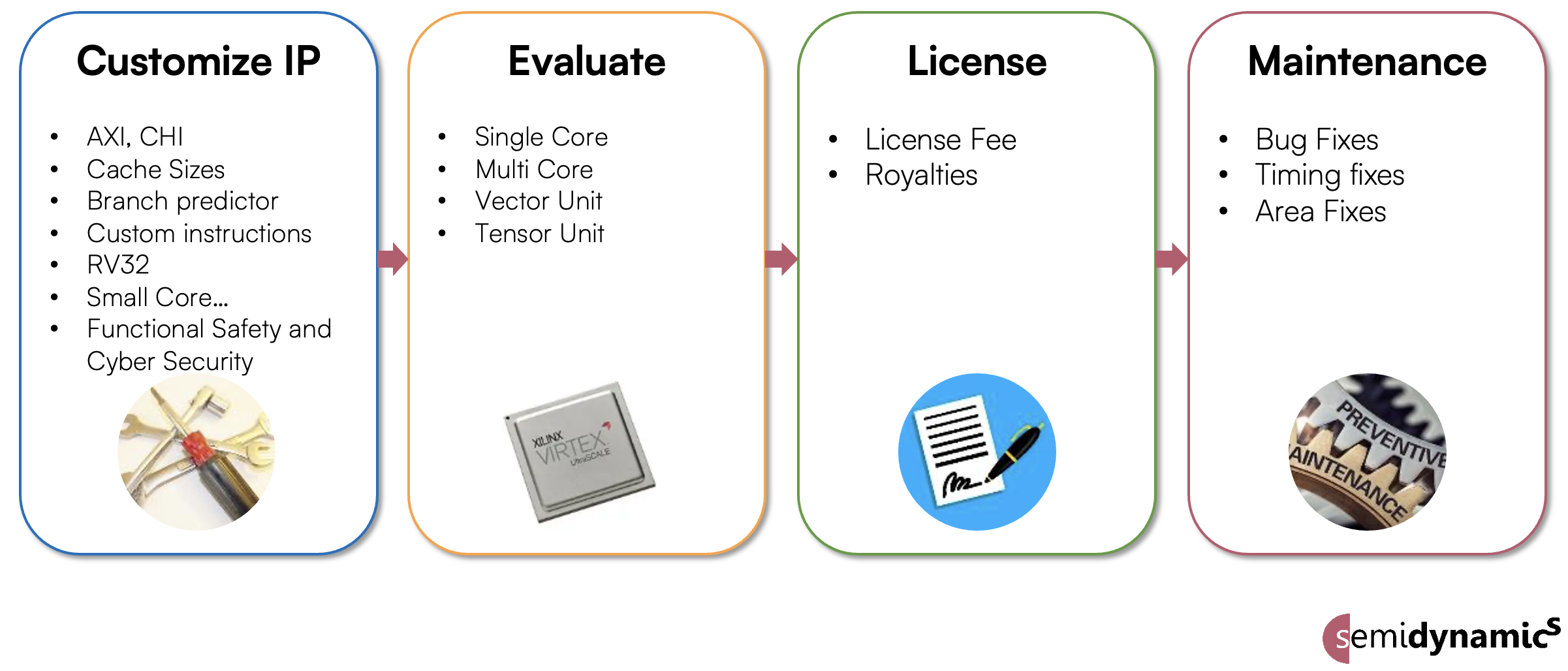

The technology is available today with a straightforward business model, as shown above.

Comments from the CEO

Recently, I was able to get a few questions answered by Roger Espasa, the founder and CEO of Semidynamics.

Q: It seems like integration is the innovation here. If it’s easy, why has it not been done before?

A: It is a paradigm change – the initial RISC-V momentum was focused solely on the CPU, both in the RISC-V community and with customers. We saw the benefits of vectors much earlier than others, and AI has very recently started to demand a more flexible response to things like transformers and LLMs. In fact, it’s far from easy. That’s why it’s not been done before, especially as there was no consistent instruction set in one environment until CPU+Vector and the Semidynamics Tensor from our prior announcement.

Q: What were the key innovations you needed to achieve to make this happen?

A: I’ll start with eliminating the horribly-difficult-to-program DMAs typical of other NPU solutions and substituting their function with normal loads and stores inside a RISC-V core that get the same sustained performance – actually better. That particular capability is only available in Semidynamics’ RISC-V cores with Gazzillion technology. Instead of a nasty DMA, with our solution the software only needs to use regular RISC-V instructions for moving data (vector loads and stores, to be precise) into the tensor unit.

Also, connecting the tensor unit to the existing vector unit, where the vector register storage is used to hold tensor data. This reduces area and data duplication, enables a lower power implementation, and, again, makes the solution easier to program. Now, firing the tensor unit is very simple: instead of a complicated sequence of AXI commands, it’s just a vanilla RISC-V instruction (called vmxmacc, short for “matrix-multiply-accumulate”). Adding to this, AXI commands mean that the CPU has to read the NPU data and either slowly process it by itself or send it over AXI to, for example, a gpGPU to continue calculations there.

And adding specific vector load instructions that are well suited to the type of “tiled” data used in AI convolutions and can take advantage of our underlying Gazzillion technology.
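To make the pattern described here more concrete, the following is a minimal Python sketch of a tiled matrix multiply built from two steps: a tile load and a multiply-accumulate on the loaded tiles. It is plain software, not Semidynamics code. The names `load_tile`, `tile_mac`, and `tiled_matmul` and the tile size are assumptions made for illustration, and the sketch does not model the actual semantics of vmxmacc or of the vector register file.

```python
# Illustrative only: plain Python standing in for what hardware would do.
TILE = 2  # hypothetical tile edge; real tile sizes depend on the configured hardware


def load_tile(matrix, row, col, size=TILE):
    """Stand-in for a tiled load: copy a size x size block out of a larger matrix."""
    return [r[col:col + size] for r in matrix[row:row + size]]


def tile_mac(acc, a, b):
    """Stand-in for one matrix-multiply-accumulate step: acc += a @ b on small tiles."""
    n = len(a)
    for i in range(n):
        for j in range(n):
            acc[i][j] += sum(a[i][k] * b[k][j] for k in range(n))
    return acc


def tiled_matmul(a, b, size=TILE):
    """Multiply square matrices whose edge is a multiple of `size`, one tile at a time."""
    n = len(a)
    c = [[0] * n for _ in range(n)]
    for i in range(0, n, size):
        for j in range(0, n, size):
            # One accumulator tile per output tile, updated across the k dimension.
            acc = [[0] * size for _ in range(size)]
            for k in range(0, n, size):
                acc = tile_mac(acc, load_tile(a, i, k, size), load_tile(b, k, j, size))
            # Write the finished accumulator tile back into the result matrix.
            for r in range(size):
                c[i + r][j:j + size] = acc[r]
    return c
```

For example, `tiled_matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]])` returns `[[19, 22], [43, 50]]`. The point of the sketch is only the shape of the loop: data movement is an ordinary load of a tile, and compute is a single multiply-accumulate step on tiles that are already loaded.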

I should mention that this result can only be done by an IP provider that happens to have (1) a high-bandwidth RISC-V core, (2) a very good vector unit and (3) a tensor unit and can propose new instructions to tie all three solutions together. And that IP provider is Semidynamics!

The resulting vision is a “unified compute element” that:

1) Can be scaled up by simple replication to reach the customer TOPS target – very much like multi cores are built now. I will offer an interesting observation here: nobody seems to have a concern to have a multicore system where each core is an FPU, but once there is more than one FPU, i.e. a Vector unit, nobody understands it anymore!

2) Keeps a good balance between “control” (the core), “activation performance” (the vector unit) and “convolution performance” (the tensor unit) as the system scales.

3) Is future proofed. By having a completely programmable vector unit within the solution, the customer gets a future-proofed IP. No matter what type of AI gets invented in the near future, the combination of the core+vector+tensor is guaranteed to be able to run it.
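The first point, scaling by replication to reach a TOPS target, comes down to simple arithmetic. The sketch below assumes idealized linear scaling, which real chips will not achieve exactly because memory bandwidth and interconnect also matter. The function name `elements_needed` and the throughput figures in the usage note are hypothetical placeholders, not Semidynamics numbers.

```python
import math


def elements_needed(target_tops, tops_per_element):
    """Number of identical compute elements needed to reach a TOPS target.

    Assumes throughput adds up linearly across elements (an idealization).
    """
    if target_tops <= 0 or tops_per_element <= 0:
        raise ValueError("both throughput values must be positive")
    # Round up: a partial element cannot be instantiated.
    return math.ceil(target_tops / tops_per_element)
```

With made-up figures, a target of 100 TOPS and 8 TOPS per element gives `elements_needed(100, 8) == 13`.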

Q: What were the key challenges to get to this level of integration?

A: Two come to mind: (1) inventing the right instructions that are simple enough to be integrated into a RISC-V core and yet provide sufficient performance, and (2) designing a tensor unit that works hand-in-hand with the vector unit. There are many more technical and architectural challenges we solved as well.

To recap: the challenge is that we change the paradigm: we do a modern AI solution that is future proof and based on an open source ISA.

To Learn More

The full text of the Semidynamics announcement can be found here. You can learn more about the Semidynamics Configurator here. And that’s how Semidynamics shakes up Embedded World 2024 with All-In-One AI IP to power nextgen AI chips.
