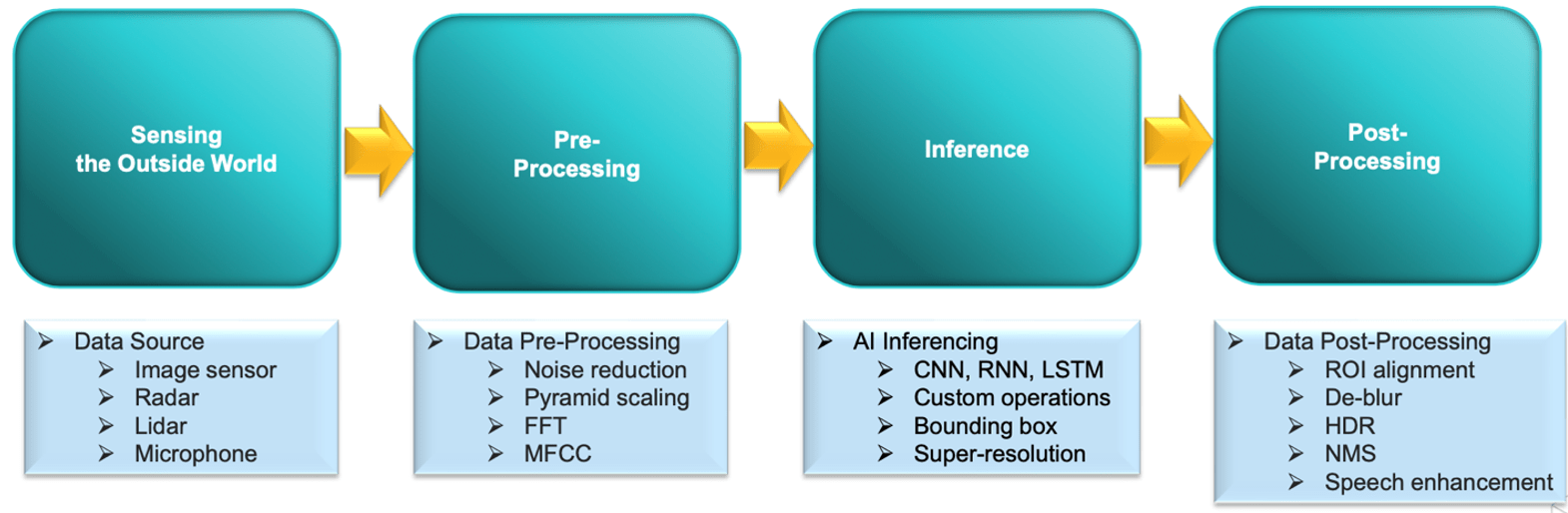

I wrote previously that the debate over which CPU rules the world (Arm versus RISC-V) somewhat misses the forest for the trees in modern systems. This is nowhere more obvious than in intelligent audio and vision: smart doorbells, speakers, voice-activated remotes, intelligent earbuds, automotive collision avoidance, self-parking, and a million other applications. None of these would be possible simply by connecting a CPU, or even a bank of CPUs, directly to an AI engine. CPUs play an important administrative role, but audio- and vision-based intelligent systems depend on complex pipelines of signal processing surrounding the AI core. However, building these pipelines can add significant complexity and schedule risk to product development in very competitive markets.

What is an AI pipeline?

Audio samples are one-dimensional, continuously varying signals; image signals are similar but two-dimensional. After noise reduction, each must at minimum be scaled into a form compatible with the samples on which the AI model was trained. For example, an image may be reduced to grayscale, since color adds significantly to training and inference cost. An audio signal may be filtered to eliminate low- and high-frequency bands. For similar reasons, signal range will be resized and averaged. All these steps are easily handled by software running on a DSP.
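To make two of these steps concrete, here is a minimal sketch of grayscale reduction and range scaling. It is illustrative only: the function names are mine, the luma weights are the standard ITU-R BT.601 values rather than anything specific to a Tensilica flow, and production DSP code would be vectorized C rather than plain Python.

```python
def to_grayscale(rgb_image):
    """Convert rows of (r, g, b) pixels in 0-255 to luma values.

    Uses ITU-R BT.601 weights (an assumption; a real pipeline would use
    whatever conversion matches the model's training data).
    """
    return [[0.299 * r + 0.587 * g + 0.114 * b for (r, g, b) in row]
            for row in rgb_image]


def normalize(samples, lo=0.0, hi=255.0):
    """Linearly rescale values from the range [lo, hi] to [0.0, 1.0]."""
    span = hi - lo
    return [(s - lo) / span for s in samples]


if __name__ == "__main__":
    image = [[(255, 0, 0), (0, 255, 0)],
             [(0, 0, 255), (255, 255, 255)]]
    gray = to_grayscale(image)
    # Scale each grayscale row into the 0..1 range a model might expect.
    print([normalize(row) for row in gray])
```

The point is less the arithmetic than the shape of the work: simple per-sample operations repeated over a whole frame or buffer, which is exactly what a vector DSP is built to accelerate.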

This level of pre-processing is basic. Pulin Desai (Product Marketing and Management for Tensilica vision, radar, lidar and communication DSPs at Cadence) introduced me to a few recent advances pushing higher accuracy. For example, voice recognition uses a technique called MFCC (mel-frequency cepstral coefficients) to extract features from speech which are considered more directly determinative than a simple audio wave. As another example, noise suppression now adds intelligence to support voice commands overriding background music or speech, or to make sure you don't miss emergency sirens. Both these cases are controlled by signal processing, sometimes with a sprinkle of AI.
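As a rough illustration of what sits inside MFCC, the sketch below shows only the mel-scale mapping used to place the filter bank, not a full MFCC implementation (which also needs framing, an FFT, log energies and a DCT). The conversion formula is the widely used 2595 * log10(1 + f/700) form; the function names and the choice of ten filters are my own assumptions.

```python
import math


def hz_to_mel(freq_hz):
    """Map a frequency in Hz to the mel scale (common 2595/700 formula)."""
    return 2595.0 * math.log10(1.0 + freq_hz / 700.0)


def mel_to_hz(mel):
    """Inverse of hz_to_mel."""
    return 700.0 * (10.0 ** (mel / 2595.0) - 1.0)


def mel_center_frequencies(n_filters, f_min_hz, f_max_hz):
    """Return filter center frequencies in Hz, evenly spaced on the mel scale."""
    mel_lo, mel_hi = hz_to_mel(f_min_hz), hz_to_mel(f_max_hz)
    step = (mel_hi - mel_lo) / (n_filters + 1)
    return [mel_to_hz(mel_lo + step * (i + 1)) for i in range(n_filters)]


if __name__ == "__main__":
    # Hypothetical setup: 10 filters covering 0 to 8 kHz.
    for center in mel_center_frequencies(10, 0.0, 8000.0):
        print(round(center, 1))
```

Centers that are evenly spaced in mel are tightly packed at low frequencies and spread out at high frequencies, which mirrors how hearing resolves pitch and is why these features are more informative than the raw waveform.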

Emerging vision transformers with global recognition strengths are now hot and are moving to multiscale-based image recognition, where an image is broken down into a pyramid of progressively reduced images with lower resolution. There are more pre-inference possibilities, and then (eventually) the actual inference step (CNN, transformer, or other options) will run. Following inference, post-processing steps must activate; these may include non-maximum suppression (NMS), image sharpening or speech enhancement. The pipeline may even run an additional inference step for classification. It is obvious why such a process is viewed as a pipeline: many steps from an original image or sound, mostly signal processing accomplished through software running on DSPs.
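Two of the stages named above are easy to sketch: building an image pyramid before inference and running NMS after it. This is a simplified illustration under my own assumptions (2x2 block averaging for downsampling, boxes as (x1, y1, x2, y2) tuples, a greedy NMS with an IoU threshold of 0.5); real pipelines typically use better resampling filters and vectorized code.

```python
def downsample_2x(image):
    """Halve width and height by averaging each 2x2 block (odd edges dropped)."""
    h, w = len(image) // 2, len(image[0]) // 2
    return [[(image[2 * y][2 * x] + image[2 * y][2 * x + 1] +
              image[2 * y + 1][2 * x] + image[2 * y + 1][2 * x + 1]) / 4.0
             for x in range(w)] for y in range(h)]


def build_pyramid(image, levels):
    """Return [full-res, half-res, ...], stopping early if the image gets too small."""
    pyramid = [image]
    for _ in range(levels - 1):
        top = pyramid[-1]
        if len(top) < 2 or len(top[0]) < 2:
            break
        pyramid.append(downsample_2x(top))
    return pyramid


def iou(a, b):
    """Intersection-over-union of two (x1, y1, x2, y2) boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = ((a[2] - a[0]) * (a[3] - a[1]) +
             (b[2] - b[0]) * (b[3] - b[1]) - inter)
    return inter / union if union > 0 else 0.0


def non_max_suppression(boxes, scores, iou_threshold=0.5):
    """Greedy NMS: keep the best-scoring box, drop overlapping lower-scoring ones."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        if all(iou(boxes[i], boxes[k]) <= iou_threshold for k in keep):
            keep.append(i)
    return keep


if __name__ == "__main__":
    image = [[1, 1, 3, 3], [1, 1, 3, 3], [5, 5, 7, 7], [5, 5, 7, 7]]
    print([len(level) for level in build_pyramid(image, 3)])
    boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
    print(non_max_suppression(boxes, [0.9, 0.8, 0.7]))
```

Neither stage involves the neural network itself, which is the article's point: a large share of the work around inference is conventional signal processing.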

Just as AI models continue to evolve, pipeline algorithms also continue to evolve. A product OEM must be able to develop and maintain these complex pipelines for release within, say, 18-24 months and keep products competitive over a product life of 5 years or more. Bottom line, OEMs need simplification and acceleration in developing and maintaining DSP software to meet these goals.

Streamlining the development flow

The Tensilica portfolio is already supported by an extensive partnership ecosystem in Audio, Voice and Vision, which should provide much of the help needed in filling out a pipeline implementation. Naturally OEMs will want to selectively differentiate through their own algorithms where appropriate and here recent advances become especially important.

The first and most attention-grabbing is auto-vectorization. You write software for a DSP in much the same way you would write software for a CPU, except where you can take full advantage of the DSP to accelerate wide vector calculations. Previously, writing software to fully exploit this capability required special expertise in manual coding for vectorization, creating a bottleneck in development and upgrades.

Auto-vectorization aims to automate this task. Prakash Madhvapathy (Director of Product Marketing and Management for Tensilica audio/voice DSPs at Cadence) tells me that there are some guidelines the developer must follow to make this pushbutton, but code written to these guidelines works equally well (though slower) on a CPU. They have run trials on a variety of industry standard applications and have found the compiler performs as well as hand-coded software. Auto-vectorization thus opens up DSP algorithm development to a wider audience to help reduce development bottlenecks.

The next important improvement supports double-precision floating point where needed. Double precision might seem like overkill for edge applications; however, newer algorithms such as MFCC and softmax now use functions such as exponentials and logs, which will quickly overflow or underflow single-precision floats. Double precision helps maintain accuracy, so a developer can avoid the need for special handling when porting from datacenter-based software development platforms.
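A quick way to see the problem is to look at where an exponential leaves the single-precision range. The sketch below is my own illustration, not Cadence code: Python floats are IEEE 754 doubles, so it computes a naive softmax in double precision and separately checks whether the intermediate exponentials would have fit in a single-precision float. The logit values are hypothetical.

```python
import math

# Largest finite IEEE 754 single-precision value, about 3.4e38.
FLOAT32_MAX = (2.0 - 2.0 ** -23) * 2.0 ** 127


def overflows_float32(value):
    """True if the magnitude is too large for a finite single-precision float."""
    return abs(value) > FLOAT32_MAX


def naive_softmax(logits):
    """Softmax with no max-subtraction trick, evaluated in double precision."""
    exps = [math.exp(v) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


if __name__ == "__main__":
    logits = [100.0, 101.0, 102.0]  # hypothetical values
    # exp(x) exceeds the single-precision range once x is above roughly 88.7.
    print([overflows_float32(math.exp(v)) for v in logits])
    # The same unmodified computation is fine in double precision.
    print(naive_softmax(logits))
```

In single precision the usual workaround is to subtract the largest logit before exponentiating. That is the kind of special handling a port from a datacenter platform would otherwise have to add by hand.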

Another important improvement is support for 40-bit addresses in the iDMA, allowing the system to directly address up to 1TB of memory without requiring the developer to manage address offsets through special code. That sounds like a lot of memory for an edge device, but you only need to consider automotive applications to realize such sizes are becoming common in some applications. The wider address range also offers more simplification when porting from datacenter platforms.
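The arithmetic behind the 1TB figure, and the kind of bookkeeping a wider address removes, can be sketched as follows. The window-plus-offset split is a hypothetical illustration of what code limited to 32-bit addresses might have to do; it is not a description of how the iDMA works.

```python
def addressable_bytes(address_bits):
    """Number of distinct byte addresses reachable with the given width."""
    return 1 << address_bits


def split_for_32bit_window(address):
    """Hypothetical workaround: split an address into (window index, 32-bit offset)."""
    return address >> 32, address & 0xFFFFFFFF


if __name__ == "__main__":
    gib = 1024 ** 3
    print(addressable_bytes(32) // gib, "GiB with 32-bit addresses")
    print(addressable_bytes(40) // gib, "GiB with 40-bit addresses")
    print(split_for_32bit_window(0x12_3456_789A))
```

With 40-bit addresses the full value can be handed to the DMA directly, so the window index never has to be tracked in application code.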

Product enhancements and availability

All existing DSPs are ported to the Tensilica Xtensa LX8 platform which supports all of the above advances together with an L2 cache option, enhanced branch prediction recognizing that intermixed control and vector code is becoming more common, direct AXI4 connection to each core, and expanded interrupt support.

Cadence has recently released two new Tensilica audio DSPs (HiFi 1s and HiFi 5s) and two new vision DSPs (Vision 110 and Vision 130), all building on the LX8 platform. These include several improvements for performance and power, but I want here to focus particularly on improvements to simplify and accelerate software development.

The Vision 130/110 DSPs support on-the-fly decompression, as mentioned in an earlier blog. Add to that higher fmax, higher floating-point performance, faster FFTs and support for floating-point complex types, together delivering up to 5X performance improvements on standard DSP benchmarks and up to 3.5X improvement on AI benchmarks. These new Vision processors are available now.

The audio HiFi 1s and 5s DSPs deliver up to 35X improvement on double-precision XCLIB functions, 5-15% better codec performance through improved branch prediction, and up to 50% improved performance thanks to the L2 cache. These new DSP processors are expected to be available by December of 2023. (I should add, by the way, that HiFi 1s, though an audio DSP, has enough capability to also handle ultra-low-power video wake-up. Pretty cool!)

You can learn more about HiFi DSPs HERE and Vision DSPs HERE. You should also check out a white paper on the importance of DSPs in our brave new AI world.
