When considering SoC architectures it is easy to become trapped in simple narratives. These assume that compute revolves around a central core or core cluster, typically Arm, more recently perhaps a RISC-V option. Throw in an accelerator or two and the rest is detail. But for today’s competitive products that view is a dangerous oversimplification. Most products must tune for application-dependent performance, battery life, and unit cost. In many systems general-purpose CPU cores may still manage control, but the heavy lifting for the hottest applications has moved to proven mainstream DSPs or special-purpose AI accelerators. In small, price-sensitive, power-sipping systems, a DSP can also handle control and AI in one core.

When only a DSP can do the job

While general-purpose CPUs or CPUs with DSP extensions can handle some DSP processing, they are not designed to handle the high-throughput streaming data flows common in a wide range of communications protocols, high-quality audio applications, high-quality image signal processing, safety-critical radar and lidar processing, or the neural network processing common in object recognition and classification.

DSPs natively support the fixed- and floating-point arithmetic essential for handling the analog values that dominate signal processing. They provide massively parallel execution pipelines to accelerate the complex computation through which these values flow (think FFTs and filters, for example), while also sustaining significant throughput for streaming data. Yet these DSPs are still processors, fully software programmable, and therefore retain the flexibility and futureproofing that application developers expect. That is why, after years of Arm embedded processor ubiquity and the emerging wave of RISC-V options, DSPs still sit at the heart of devices you use every day, including communication, automotive infotainment and ADAS, and home automation. They also support the AI-powered functions within many compact, power-sensitive devices: smart speakers, smart remotes, even smart earbuds, hearing aids, and headphones.
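To make the fixed-point filter workload mentioned above a little more concrete, here is a minimal sketch of a FIR filter in Q15 fixed-point arithmetic, written in plain Python. It is only an illustration of the kind of multiply-accumulate loop a DSP parallelizes in hardware; the names `to_q15` and `fir_q15` are invented for this example and do not correspond to any Tensilica API.

```python
from typing import List, Sequence

Q15_ONE = 1 << 15  # Q15 represents values in [-1, 1) scaled by 2**15


def saturate_q15(value: int) -> int:
    """Clamp an integer to the signed 16-bit range used by Q15."""
    return max(-Q15_ONE, min(Q15_ONE - 1, value))


def to_q15(x: float) -> int:
    """Convert a float in [-1, 1) to a Q15 integer (illustrative helper)."""
    return saturate_q15(int(round(x * Q15_ONE)))


def fir_q15(samples: Sequence[int], coeffs: Sequence[int]) -> List[int]:
    """Run a FIR filter over Q15 samples using Q15 coefficients."""
    history = [0] * len(coeffs)  # delay line, newest sample first
    out = []
    for s in samples:
        history = [s] + history[:-1]
        acc = 0  # wide accumulator; each product is in Q30
        for h, c in zip(history, coeffs):
            acc += h * c
        # Round and shift the Q30 accumulator back to Q15, then saturate.
        out.append(saturate_q15((acc + (1 << 14)) >> 15))
    return out


# A 4-tap moving average applied to a constant input of 0.5.
coeffs = [to_q15(0.25)] * 4
samples = [to_q15(0.5)] * 6
filtered = fir_q15(samples, coeffs)
```

In this sketch the inner loop over taps runs one multiply-accumulate at a time. On a DSP that loop is typically the part spread across wide parallel multiply-accumulate hardware.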

The Tensilica LX series and LX8

The Tensilica Xtensa LX series has offered a stable DSP platform for many years. A couple of stats that were new to me are that Tensilica counts over 60 billion devices shipped around their cores and they are #2 in processor licensing revenue (behind Arm), reinforcing how dominant their solutions are in this space.

Customers depend on the stability of the platform, so Tensilica evolves the architecture slowly; the last release, LX7, was back in 2016. As you might expect, Tensilica ensures that platforms remain compatible with all major OSes, debug tools and ICE solutions, supported by an ecosystem of third-party software and development tools. The ISA has been extensible from the outset, long before RISC-V emerged, and offers the same opportunities for differentiation that are now popular in RISC-V. The platform is aimed very much at embedded applications, delivering high performance at low power.

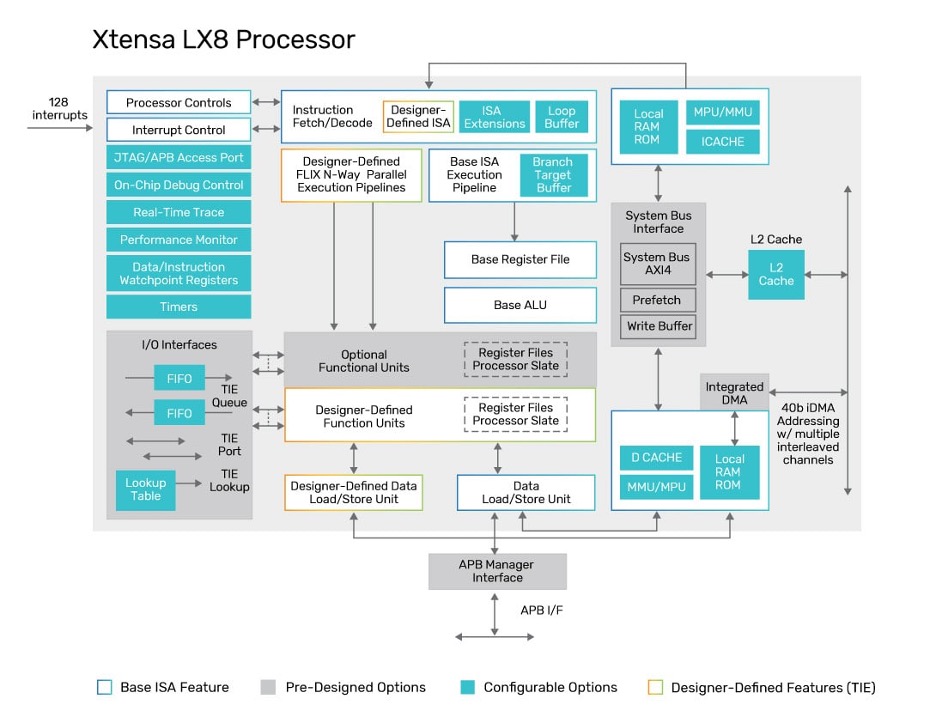

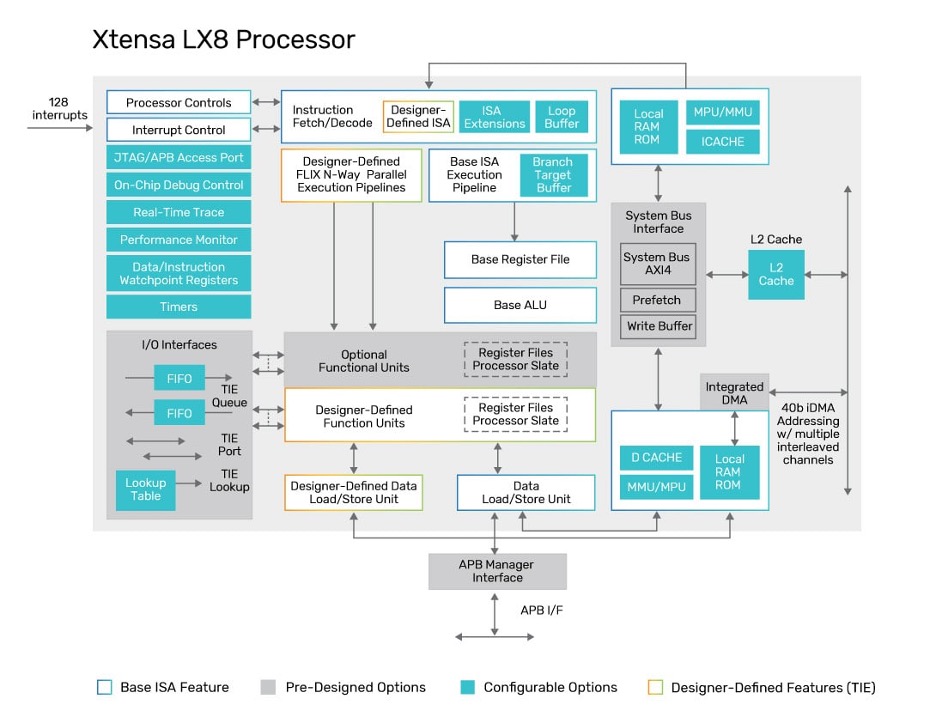

The latest version in this series, LX8, was released recently and adds two major features to the architecture in support of growing intelligence at the edge: a new L2 memory subsystem and an integrated DMA. I always like looking at features like this in terms of how they enable larger system objectives, so here is my take.

First, the L2 cache will reduce the cost of L1 misses, which should translate to higher frames-per-second rates for object recognition applications, as one example. The L2 can also be partitioned into cache and fixed memory sections, so the L2 memory can be tuned for a variety of workloads. Among other features, the integrated DMA (iDMA) supports 1D, 2D and 3D transfers, which are very important in AI functions. 1D could support a voice stream, 2D an image, and 3D would be essential for radar/lidar data cubes. This hardware support will further accelerate frame rates. The iDMA in LX8 also supports zero-value decompression, a familiar need when transferring trained network weights. Significant stretches of weights may be zeroed through quantization or pruning, and such a run is stored as something like <12:0> rather than as a string of twelve zeroes. This is good for compression, but the expanded structure must be recovered before tensor operations can be applied in inference. Again, hardware assist accelerates that task, reducing latency between updates to the weight matrix.
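The two iDMA ideas above can be sketched in software. The Python below is only an illustration under stated assumptions: the article does not describe the LX8 descriptor layout or its compressed weight format, so the function names, the `(count, 0)` token encoding and the stride parameters are all hypothetical stand-ins, not the Tensilica interface.

```python
from typing import List, Sequence, Tuple, Union

# Hypothetical token format: a plain int is a literal weight, and a tuple
# (count, 0) stands for `count` consecutive zeros, like <12:0> in the text.
Token = Union[int, Tuple[int, int]]


def strided_copy(src: Sequence[int], base: int,
                 counts: Tuple[int, int, int],
                 strides: Tuple[int, int, int]) -> List[int]:
    """Gather a tile from flat memory, the way a 3D DMA transfer would.

    counts  = (planes, rows, elems) to transfer
    strides = (plane_stride, row_stride, elem_stride), in elements
    A 2D transfer sets planes to 1; a 1D transfer sets planes and rows to 1.
    """
    planes, rows, elems = counts
    plane_stride, row_stride, elem_stride = strides
    out = []
    for p in range(planes):
        for r in range(rows):
            start = base + p * plane_stride + r * row_stride
            for e in range(elems):
                out.append(src[start + e * elem_stride])
    return out


def expand_zero_runs(tokens: Sequence[Token]) -> List[int]:
    """Recover the full weight list from literals and zero-run tokens."""
    out: List[int] = []
    for tok in tokens:
        if isinstance(tok, tuple):
            count, value = tok
            out.extend([value] * count)
        else:
            out.append(tok)
    return out


# A data cube of 2 planes x 4 rows x 6 columns stored as flat memory.
cube = list(range(2 * 4 * 6))
# Pull a 2 x 2 x 3 tile starting at plane 0, row 1, column 2.
tile = strided_copy(cube, base=1 * 6 + 2, counts=(2, 2, 3), strides=(24, 6, 1))

# Compressed weights: 3, then twelve zeros, then -7, 0, 5.
weights = expand_zero_runs([3, (12, 0), -7, 0, 5])
```

In software each of these loops costs CPU cycles on every element. Moving them into the DMA engine means the processor pipelines see a contiguous, already expanded buffer.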

These are not revolutionary changes, but they are essential to product builders who must stay on the leading edge of performance while preserving a low power footprint. Both SK Hynix and Synaptics have provided endorsements. You can read the press release HERE.
