You don’t know you’re at a peak until you start to descend, and Hot Chips 2024 is proof that AI hype is still climbing among semiconductor vendors. Juggernaut Nvidia, startups, hyperscalers, and major companies presented their AI accelerators (GPUs and neural-processing units—NPUs) and touched on the challenges of software, memory access, power, and networking. As always, microprocessors also made a significant contribution to the conference program.

Nvidia recapitulated Blackwell details, the monster chip introduced earlier this year. Comprising 208 billion transistors on two reticle-limited dice, it can deliver a theoretical maximum of 20 PFLOPS on four-bit floating-point (FP4) data. The castle wall protecting its dominance is Nvidia’s software, and the company discussed its Quasar quantization stack that facilitates FP4 use and reminded the audience of its 400-plus Cuda-X libraries.

The software barrier for inference is lower. Seeking to bypass it altogether—as well as to offer AI processing in an easier-to-consume chunk than a whole system—AI challengers such as Cerebras and SambaNova provide API access to cloud-based NPUs. Cerebras is unusual in operating multiple data centers, and both companies also offer the option to buy ready-to-run systems. Tenstorrent, however, sees a developer community and software ecosystem as essential to the long-term success of a processor vendor. The company presented its open-source stack and described how developers can contribute to it at any level, facilitated by its use of hundreds of C-programmed RISC-V CPUs.

AI Networking at Hot Chips 2024

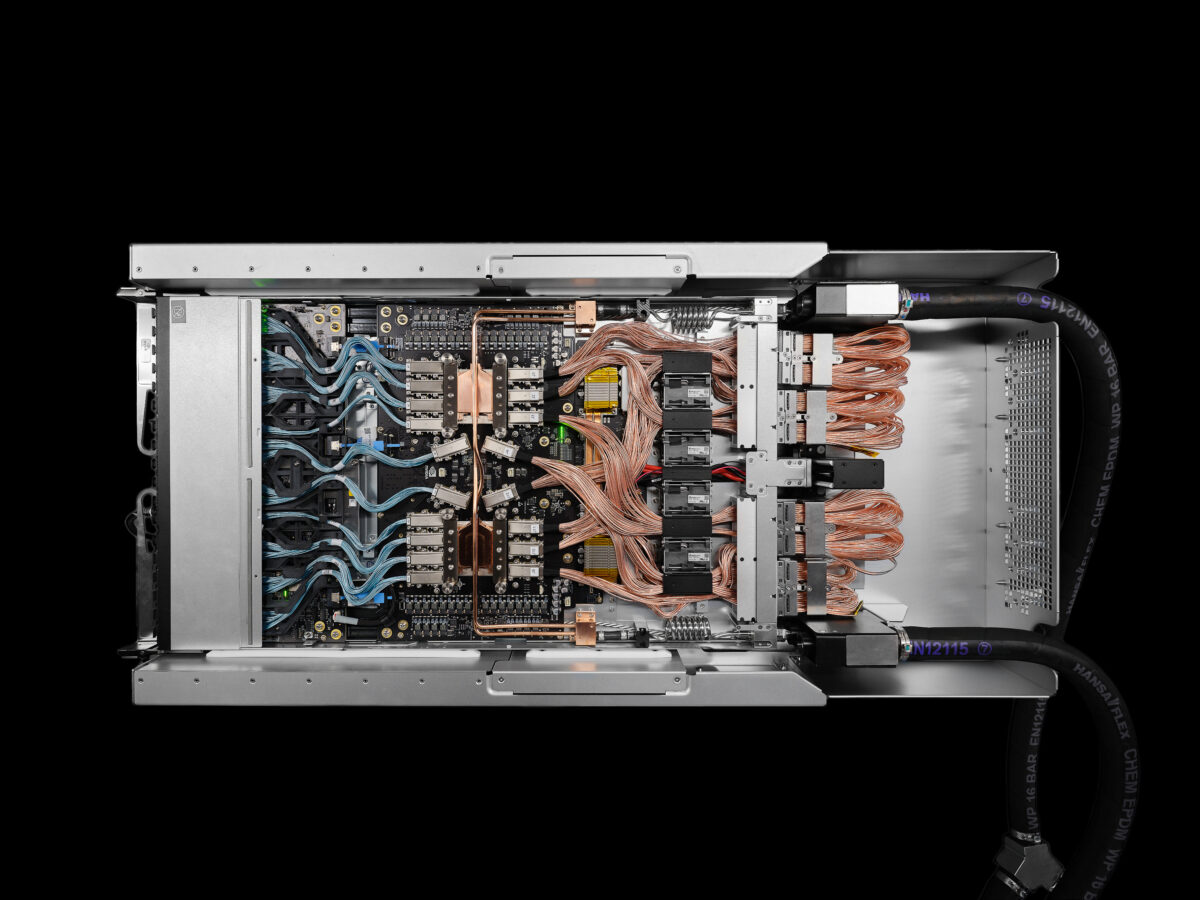

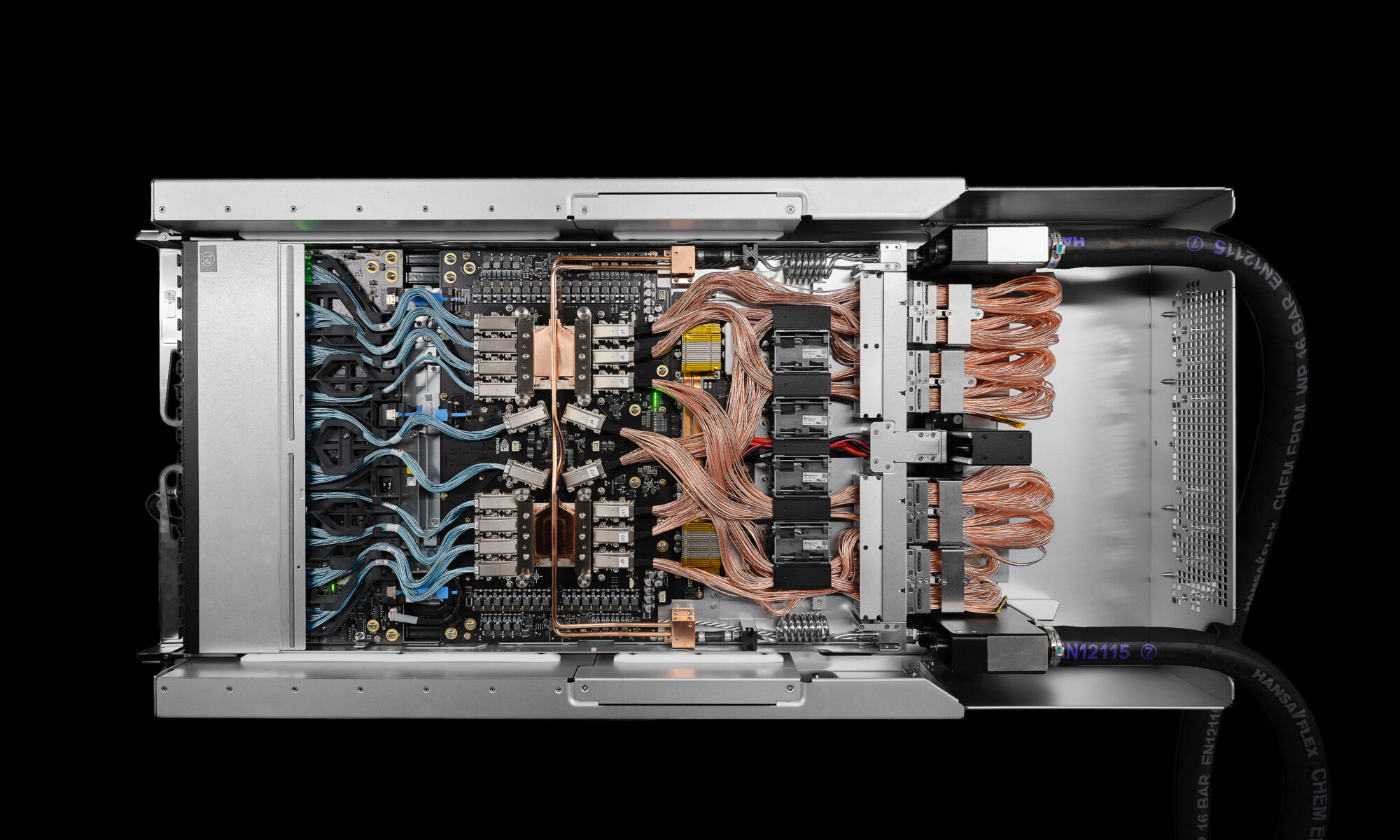

Networking is critical to building a Blackwell cluster, and Nvidia showed InfiniBand, Ethernet, and NVLink switches based on its silicon. The NVLink switch picture reveals a couple of interesting challenges to scaling out AI systems. Employing 200 Gbps serdes, signals in and out of the NVLink chips must use flyover cables, shown in blue and pink in Figure 1, because PCBs can’t handle the data rate. Moreover, the picture suggests the blue lines connect to front-panel ports, indicating customers’ data centers don’t have enough power to support 72-GPU racks and must divide this computing capacity among two racks.

Short-hop links eventually will require optical networking. Broadcom updated the audience on its efforts developing copackaged optics (CPO). Having created two CPO generations for its Tomahawk switch IC, Broadcom completed a third CPO-development vehicle for an NPU. The company expects CPO to enable all-to-all connectivity among 512 NPUs. Intel also discussed its CPO progress. Disclosed two years ago, Intel’s key technology is an eight-laser IC its CPO design integrates, replacing the conventional external light source Broadcom requires.

Software and networking intersect at protocol processing. Hyperscalers employ proprietary protocols, adapting the standard ones to their data centers’ rigorous demands. For example, in presenting its homegrown Maia NPU, Microsoft alluded to the custom protocol it employs on the Ethernet backbone connecting a Maia cluster. Seeing standards’ inadequacies but also valuing their economies, Tesla presented its TTPoE protocol, advocating for the industry to adopt it as a standard. It has joined the Ultra Ethernet Consortium (UEC) and submitted TTPoE. Unlike other Ethernet trade groups focused on developing a new Ethernet data rate, the UEC has a broader mission to improve the whole networking stack.

Alternative Memory Hierarchies at Hot Chips 2024

Despite Nvidia’s success, a GPU-based architecture is suboptimal for AI acceleration. Organizations that started with a clean sheet have gone in different directions particularly with their memory hierarchies. Hot Chips 2024 highlighted several different approaches. The Meta MTIA accelerator has SRAM banks along its sides and 16 LPDDR5 channels, eschewing HBM. By contrast, Microsoft distributes memory among Maia’s computing tiles and employs HBM for additional capacity. In its Blackhole NPU, Tenstorrent similarly distributes SRAM among computing tiles and avoids expensive HBM, using GDDR6 memory instead. SambaNova’s SN40L takes a “yes-and” approach, integrating prodigious SRAM, including HBM in the package, and additionally supporting standard external DRAM. For on-chip memory capacity, nothing can touch the Cerebras WS-3 because no other design comes close to its wafer-scale integration.

CPUs Still Matter

AMD, Intel, and Qualcomm discussed their newest processors, mostly repeating information previously disclosed. Ampere discussed the Arm-compatible microarchitecture employed in the 192-core AmpereOne chip, revealing it to be in a similar class as the Arm Neoverse-N2 and adapted to many-core integration.

The RISC-V architecture is the standard for NPUs, being employed by Meta, Tenstorrent, and others. The architecture was also the subject of a presentation by the Chinese Academy of Sciences. The organization has two open-source projects under its XiangShan umbrella, which covers microarchitecture, chip generation, and development infrastructure. Billed as comparable to an Arm Cortex-A76, the Nanhu microarchitecture shown in Figure 2 is a RISC-V design focused more on power- and area-efficiency than maximizing performance. The Kunminghu microarchitecture is a high-performance RISC-V design the academy compares with the Neoverse-N2. Open source, and thus freely available, these CPUs present a business-model challenge to the many companies developing and hoping to sell RISC-V cores.

Bottom Line

Artificial-intelligence mania is propelling chip and networking developments. The inescapable conclusion, however, is that too many companies are chasing the opportunity. Beyond the companies highlighted above, others presented their technologies—a key takeaway about each is available at xpu.pub. The biggest customers are the hyperscalers, and they’re gaining leverage over merchant-market suppliers by developing their own NPUs, such as the Meta MTIA and Microsoft Maia presented at Hot Chips 2024.

Nvidia’s challengers, therefore, are targeting smaller customers by employing various strategies, such as standing up their own data centers (Cerebras), offering API access and selling turnkey systems (SambaNova), or fostering a software ecosystem (Tenstorrent). The semiconductor business, however, is one of scale economies, and aggregating small customers’ demand is rarely as effective as landing a few big buyers.

Although less prominent, RISC-V is another frothy technology. An open-source instruction-set architecture, it also has open-source implementations. Businesses have been built around Linux, but they involve testing, improving, packaging, and contributing to the open-source OS, not replacing it. Their business model could be a template for CPU companies, which have focused on developing better RISC-V implementations—which could be fruitless given the availability of high-end cores like Kunminghu.

At some point, both the AI and RISC-V bubbles will burst. If it happens in the next 12 months, we’ll learn that Hot Chips 2024 was the zenith of hype.

Joseph Byrne is an independent analyst. For more information, see xampata.com.

Also Read:

The Semiconductor Business will find a way!

Powering the Future: The Transformative Role of Semiconductor IP

Nvidia Pulled out of the Black Well

Share this post via:

Serving their AI Masters