Previously, Dr. Carter served as Chief Commercial Officer at Foxconn Interconnect and Oclaro. At Oclaro, he served as a member of the senior executive team from July 2014 to December 2018, when it was acquired by Lumentum Holdings for $1.85B. Prior to that, he served as the Senior Director and General Manager of the Transceiver Module Group at Cisco from February 2007 to July 2014, where he was instrumental in the acquisition of Lightwire, a silicon photonics start-up, and released the first internally designed CPAK 100G transceiver family utilizing a silicon photonics optical engine.

Tell us about your company?

Formed in 2022, OpenLight is the world’s first open silicon photonics platform with integrated lasers. We enable our customers to incorporate photonic building blocks into their designs, making the result more like a photonic ASIC (application-specific integrated circuit), or PASIC, than an optical subassembly. As part of our broader vision and business model, we do not manufacture products. Instead, we provide customers access to a Process Design Kit (PDK) that facilitates the creation of photonic integrated circuits. We deliver custom PASIC designs and license our intellectual property (IP) to a variety of customers. Our IP portfolio currently includes over 350 patents and is expected to grow to 400 patents in the near future.

What problems are you solving?

While silicon photonics was once met with skepticism, its benefits, particularly in efficiency and scalability, are now well recognized. Despite this progress, the industry still faces significant challenges in design, manufacturing, and deployment. Through our recent partnerships with Jabil, VLC Photonics, Spark Photonics, and Epiphany, we are working to create a more resilient and collaborative ecosystem while driving innovation across various market segments and transforming the manufacturing landscape.

What application areas are your strongest?

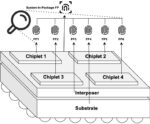

OpenLight’s platform enables companies to design and manufacture at scale, which is crucial as the industry faces increasing demand in areas like data centers, AI/ML, and high-performance computing (HPC). As data centers rapidly multiply, they have become the largest consumers of silicon photonics, driven by the need to interconnect servers, switches, and routers at ever-increasing bandwidths while minimizing costs and power consumption.

Meanwhile, the rise of AI and ML has transformed data center architectures, shifting traffic so that it flows primarily between GPU and CPU clusters and significantly increasing the demand for higher bandwidth and lower latency. Our technology enhances the performance, scalability, and manufacturability of critical photonic components, including waveguides, splitters, modulators, and photodetectors. By facilitating heterogeneous laser integration in silicon, OpenLight enables data rates to scale from 10G to 800G and beyond, effectively replacing traditional copper cables.

What keeps your customers up at night?

One of the most common concerns we notice among our customers is the reliability and scalability of manufacturing processes, especially in light of supply chain disruptions that have affected many industries. Additionally, there is always hesitation regarding the return on investment in new technologies. Customers want to ensure that their investments in silicon photonics will yield tangible benefits. We help alleviate their concerns by providing expert guidance throughout the design and manufacturing journey. We not only facilitate the adoption of new photonic components into existing infrastructures, but we also equip our customers to confidently navigate challenges while staying competitive in a rapidly evolving market.

What does the competitive landscape look like and how do you differentiate?

Unlike providers of traditional silicon photonics processes, OpenLight provides open access to its PDK, allowing any company to utilize its technology. The PDK, featuring validated components, is accessible through Tower Semiconductor’s PH18DA production process, where companies can either design their circuits independently or seek OpenLight’s assistance. This openness contrasts with earlier models where access to silicon photonics was limited, often requiring acquisitions or significant investment in new startups.

OpenLight’s platform is the only one that enables the integration of active components such as lasers and amplifiers directly into the silicon within a foundry process. That is our key differentiator. We enable customers to integrate photonic designs into silicon more easily and support the broader development of the photonics industry, which is still in its early stages of maturity.

What new features/technology are you working on?

We are making significant strides in the development of PICs, covering both existing designs and future advancements. Currently, we offer two 800-gigabit PIC reference designs: an 8×100-gigabit (800G-DR8) design and an 800-gigabit 2×FR4 CWDM design. Now, we are expanding our portfolio to include an 8×200-gigabit design targeting 1.6-terabit DR8 and a corresponding 1.6-terabit CWDM variant. The 1.6-terabit design will be the first product to use distributed feedback (DFB) lasers as its light source and will incorporate semiconductor optical amplifiers to compensate for higher optical losses at 200 gigabits per lane, ensuring optimal performance and meeting stringent specifications.

How do customers normally engage with your company?

OpenLight has made remarkable strides since its inception, growing from three to seventeen customers, and demonstrating a tenfold increase in revenue. We work with a diverse range of companies and organizations involved in various sectors that require silicon photonics technology.

We provide two primary services to our customers. The first is design assistance. For those who lack expertise in silicon photonics designs, we develop custom silicon photonics chips and manage the production process. Once designed, these chips are sent to Tower Semiconductor for production, and wafer-level testing on chip prototypes is done before delivery. OpenLight provides the Graphic Data Stream (GDS) file to the customer, detailing the mask set required for ordering the production of the photonic integrated circuit (PIC) from Tower.

Through our second service, we serve companies that have in-house photonic expertise but haven’t been able to use processes involving active components such as lasers, semiconductor optical amplifiers (SOAs), and modulators. These components are part of the PDKs, and companies can choose the PDK that fits their specific designs, allowing the foundry to create the devices. We offer two types of PDKs through Tower Semiconductor: one from Synopsys and another from Luceda Photonics. While we do not make any components, we do offer reference designs.
