The Broadcom bid for Qualcomm is the biggest, boldest semiconductor deal to date. Just when we thought semi M&A was cooling off, this deal rolls a year's worth of deals into one. Not only does it upset the current balance between chip suppliers and customers, it would create a giant entity smack in the middle of the IoT, AI, AR and VR roadmaps.

Continue reading “Will Broadcom become a Chipzilla or is the deal DOA?”

High performance processor IP targets automotive ISO 26262 applications

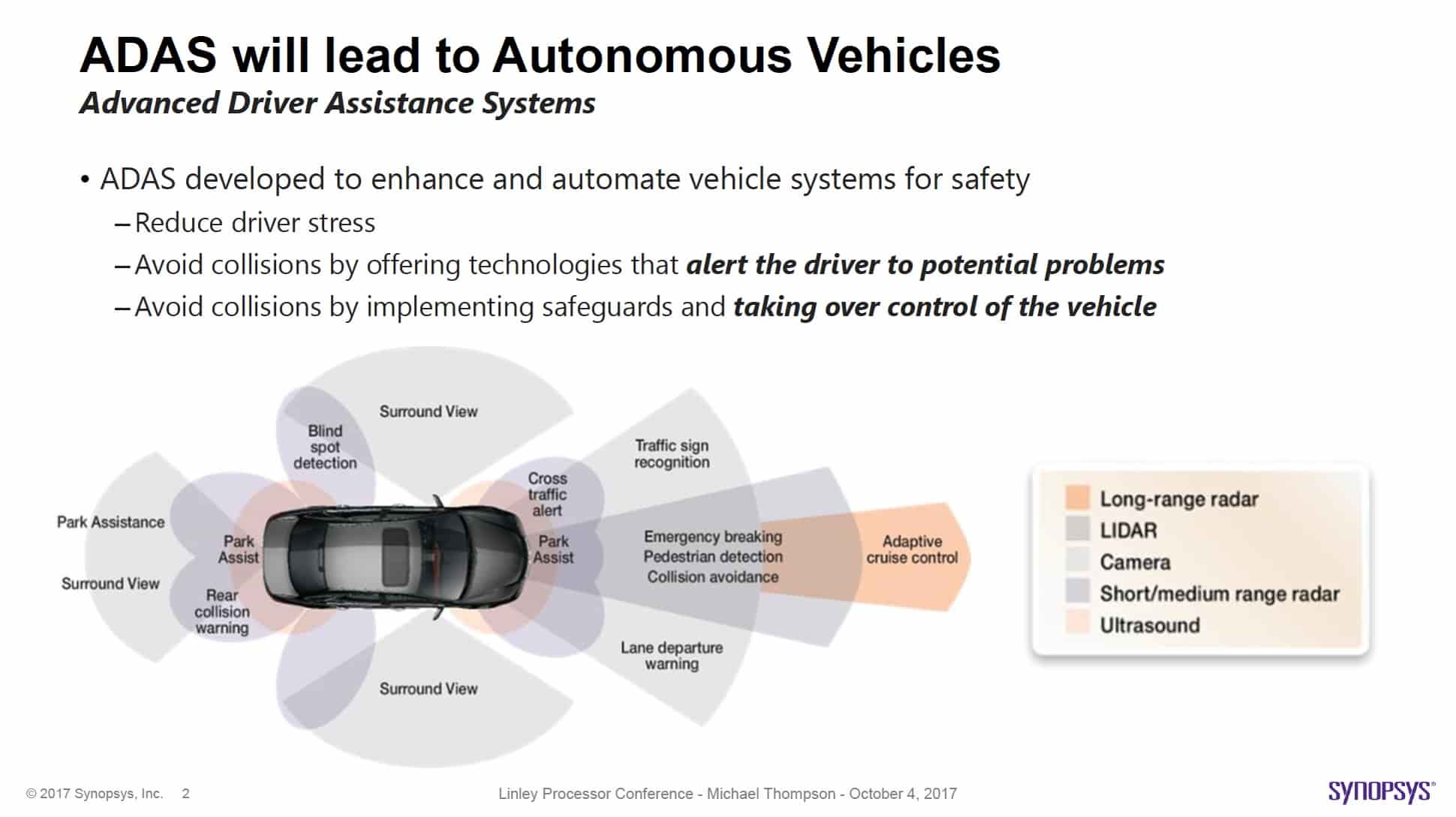

The reason you are seeing a lot more written about the ISO 26262 requirements for automotive electronics is, to put it bluntly, that this stuff is getting real. Driver assist systems are no longer found only in Mercedes and Tesla models; almost every brand now offers some driver assist features. However, the heavy lifting required for ISO 26262 is coming with the advent of autonomous vehicles. This is where the risk of electronic system malfunction transforms from problematic to potentially lethal.

ISO 26262 is really a standard for completed systems, where the operation of the subunits is in a known context. Without an operational context, not enough is known to provide any meaningful qualification. Nevertheless, the components of these systems need to be designed, at each level, with consideration of the final qualification requirements.

ISO 26262 starts by identifying every anticipated hazardous failure event and assigning each one an ASIL (Automotive Safety Integrity Level), which ranks the risk the failure poses based on its severity, the likelihood of exposure and how controllable it is. ASIL A is the least severe and easiest to recover from; ASIL D is the most dangerous and the hardest to handle. A door lock mechanism failure is quite different from a failure in a fully autonomous driving system. Indeed, the error checking and recovery methods for ASIL D are extensive and require a lot of forethought, even at the IP level during system design.
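As a rough illustration of how an ASIL is assigned: ISO 26262-3 rates each hazardous event for severity (S1 to S3), probability of exposure (E1 to E4) and controllability (C1 to C3), then reads the ASIL from a lookup table. The minimal Python sketch below relies on the commonly cited observation that the table is equivalent to summing the three class numbers (10 gives ASIL D, 9 ASIL C, 8 ASIL B, 7 ASIL A, anything lower QM, meaning ordinary quality management with no ASIL). The function name is hypothetical, and any real classification should be checked against the table in the standard.

```python
def determine_asil(severity: int, exposure: int, controllability: int) -> str:
    """Return 'QM' or 'ASIL A'..'ASIL D' from S, E and C class numbers.

    Hypothetical helper. Assumes S in 0..3, E in 0..4, C in 0..3; a class of 0
    in any dimension means no ASIL is assigned.
    """
    if not (0 <= severity <= 3 and 0 <= exposure <= 4 and 0 <= controllability <= 3):
        raise ValueError("class number out of range")
    if 0 in (severity, exposure, controllability):
        return "QM"
    # Additive shortcut that reproduces the ISO 26262-3 S/E/C lookup table.
    total = severity + exposure + controllability
    return {7: "ASIL A", 8: "ASIL B", 9: "ASIL C", 10: "ASIL D"}.get(total, "QM")
```

For example, a hazard rated S3/E4/C3 comes out as ASIL D, while lowering controllability to C2 yields ASIL C.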

During the Linley Processor Conference in early October in Santa Clara, Michael Thompson, product marketing manager for Synopsys ARC HS processors, gave a presentation discussing how IP needs to be designed so that it can be incorporated into ASIL A to ASIL D qualified systems. He was quick to point out that even as reliability features are being added, the complexity and performance requirements are rising dramatically as well. For instance, by 2020 it is expected that the electronics content of an automobile will reach 35% and there will be ~100M lines of code. By 2030 the electronic content will be closer to 50% and there will be ~300M lines of code.

Michael mentioned that for ASIL C and D, fault injection testing is highly recommended. For ASIL D, the single-point fault metric should reach 99% or better, and the latent fault metric, which covers multipoint faults, should reach at least 90%. Below is a slide from his presentation showing the expected effectiveness of a range of diagnostic methods.
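The 99% and 90% figures correspond to the hardware architectural metrics in ISO 26262-5: the single-point fault metric (SPFM) and the latent fault metric (LFM), computed from failure rates (typically in FIT) that come out of an FMEDA. The Python sketch below shows only the arithmetic, under simplifying assumptions: the per-element split of failure rates is already known, and the class and function names are illustrative rather than taken from any tool.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

@dataclass
class ElementRates:
    """Failure rates (e.g. in FIT) of one safety-related hardware element."""
    total: float              # lambda: all failure modes of the element
    single_point: float       # lambda_SPF: faults with no safety mechanism at all
    residual: float           # lambda_RF: faults the safety mechanism fails to cover
    latent_multipoint: float  # lambda_MPF,L: multipoint faults neither detected nor perceived

# (SPFM, LFM) target values from ISO 26262-5 for ASIL B, C and D.
TARGETS: Dict[str, Tuple[float, float]] = {
    "B": (0.90, 0.60),
    "C": (0.97, 0.80),
    "D": (0.99, 0.90),
}

def spfm(elements: List[ElementRates]) -> float:
    """SPFM = 1 - sum(lambda_SPF + lambda_RF) / sum(lambda)."""
    total = sum(e.total for e in elements)
    return 1.0 - sum(e.single_point + e.residual for e in elements) / total

def lfm(elements: List[ElementRates]) -> float:
    """LFM = 1 - sum(lambda_MPF,L) / sum(lambda - lambda_SPF - lambda_RF)."""
    remaining = sum(e.total - e.single_point - e.residual for e in elements)
    return 1.0 - sum(e.latent_multipoint for e in elements) / remaining

def meets_target(elements: List[ElementRates], asil: str) -> bool:
    spfm_target, lfm_target = TARGETS[asil]
    return spfm(elements) >= spfm_target and lfm(elements) >= lfm_target

if __name__ == "__main__":
    # Hypothetical FMEDA numbers for two elements.
    design = [ElementRates(100.0, 0.0, 0.5, 2.0), ElementRates(50.0, 0.0, 0.5, 1.0)]
    print(f"SPFM {spfm(design):.1%}, LFM {lfm(design):.1%}, ASIL D: {meets_target(design, 'D')}")
```

With these made-up numbers the SPFM is about 99.3% and the LFM about 98.0%, enough for ASIL D; raising each residual rate to 2 FIT drops the SPFM to about 97.3%, which still satisfies ASIL C but not ASIL D.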

To deal with high performance requirements and ISO 26262 safety features, Synopsys presented their ARC HS Quad Core targeted toward the automotive market. Michael gave an overview of its safety features. Synopsys has developed a dedicated safety manager unit that is responsible for bringing up the HS cluster. It also manages boot-time LBIST and MBIST, and it monitors and executes safety escalations on the SoC.

Synopsys also offers an integrated safety bus architecture, which carries error information and monitors other ASIL ARC cores. The memories integrated into the HS Quad Core are ASIL D ready with support for ECC. Internally, there is parity checking for processor registers and other safety-critical registers. Each core has its own dedicated safety manager and watchdog timer. For bus transactions, there is ECC protection on AXI/NoC for both address and data values.
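The text does not say which ECC codes the HS Quad Core uses, so as a generic illustration only: a single parity bit, as used on registers, detects any odd number of flipped bits but cannot say which bit flipped, while a SECDED (single-error-correct, double-error-detect) code such as the extended Hamming (8,4) code below corrects any single-bit error and flags double-bit errors. Production memory ECC typically uses wider codewords, such as 72 bits protecting 64 data bits, built on the same idea. This Python sketch is not Synopsys's implementation, and the function names are hypothetical.

```python
from typing import List, Tuple

def parity(bits: List[int]) -> int:
    """Even-parity bit: 1 if an odd number of bits are set."""
    p = 0
    for b in bits:
        p ^= b
    return p

def secded_encode(data: List[int]) -> List[int]:
    """Encode 4 data bits as an extended Hamming (8,4) SECDED codeword.

    Index 0 holds the overall parity bit; indices 1..7 use the classic Hamming
    layout with check bits at positions 1, 2 and 4 and data at 3, 5, 6 and 7.
    """
    d1, d2, d3, d4 = data
    code = [0, d1 ^ d2 ^ d4, d1 ^ d3 ^ d4, d1, d2 ^ d3 ^ d4, d2, d3, d4]
    code[0] = parity(code[1:])
    return code

def secded_decode(received: List[int]) -> Tuple[str, List[int]]:
    """Return (status, data); status is 'ok', 'corrected' or 'uncorrectable'."""
    code = list(received)  # work on a copy
    syndrome = 0
    for pos in range(1, 8):
        if code[pos]:
            syndrome ^= pos  # XOR of set positions points at a single flipped bit
    if syndrome == 0 and parity(code) == 0:
        return "ok", [code[3], code[5], code[6], code[7]]
    if parity(code) == 1:
        # Odd number of flips: treat it as one flip and correct it
        # (a zero syndrome means the overall parity bit itself flipped).
        code[syndrome] ^= 1
        return "corrected", [code[3], code[5], code[6], code[7]]
    # Even number of flips with a non-zero syndrome: double-bit error.
    return "uncorrectable", []
```

The overall parity bit is what separates the two cases: one flip changes it, two flips leave it unchanged while still producing a non-zero syndrome.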

Safety monitoring uses error injection to verify and test the safety mechanisms, and tailored software diagnostic tests are also supported. Power-on and reset LBIST, SMS and SHS are part of the safety monitoring system. Michael’s presentation went into detail on the specifics of the integrated safety features.
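Error injection is also how coverage figures like those quoted earlier are measured: flip bits deliberately and count how many faults the safety mechanism catches. The toy campaign below is a sketch assuming a 32-bit register protected by a single even-parity bit (the function names are hypothetical). It shows why such campaigns matter: parity catches every single-bit fault but none of the double-bit faults, a gap that only shows up when the injected fault model includes them.

```python
from itertools import combinations
from typing import Iterable, Tuple

WIDTH = 32  # assumed register width; the parity bit sits at bit position WIDTH

def with_parity(value: int, width: int = WIDTH) -> int:
    """Return value with an even-parity bit appended above its top bit."""
    value &= (1 << width) - 1
    return value | ((bin(value).count("1") & 1) << width)

def parity_ok(word: int, width: int = WIDTH) -> bool:
    """True if the (width + 1)-bit protected word still has even parity."""
    return bin(word & ((1 << (width + 1)) - 1)).count("1") % 2 == 0

def injection_campaign(golden: int, faults: Iterable[Tuple[int, ...]], width: int = WIDTH) -> float:
    """Inject each fault (a tuple of bit positions to flip); return the fraction detected."""
    total = detected = 0
    for positions in faults:
        faulty = golden
        for pos in positions:
            faulty ^= 1 << pos
        total += 1
        if not parity_ok(faulty, width):
            detected += 1
    return detected / total

if __name__ == "__main__":
    golden = with_parity(0xDEADBEEF)
    bits = range(WIDTH + 1)  # include the parity bit itself as a fault site
    single = injection_campaign(golden, ((b,) for b in bits))
    double = injection_campaign(golden, combinations(bits, 2))
    print(f"single-bit coverage {single:.0%}, double-bit coverage {double:.0%}")
```

Run as a script, it reports 100% single-bit coverage and 0% double-bit coverage.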

Another key element of successful ISO 26262 qualification is component and system level documentation. Without adequate and proper documentation, no IP can be included in a system intended for ISO 26262 qualification. Furthermore, the same is generally true for the development tools used to build and integrate the IP – including software development tools used subsequently to complete the operational system. Synopsys has gone to great lengths to provide a tool chain suitable for ISO 26262 qualification. This extends to compiler, IDE and debugger support.

As the transition from assisted driving to autonomous driving takes place, both the performance and the reliability of onboard electronics will need to increase. Autonomous vehicles will demand much more data processing with zero room for functional failure. Certainly, for autonomous vehicles to succeed, consumers will need full confidence in their safety. We used to think of automotive safety in terms of steering linkage, brake line and gearbox reliability. However, with semiconductor IP content increasing and playing a significant role in vehicle operation, this IP will become the focal point of reliability concerns and activity. It is a good thing that ISO 26262 lays out a definite process for achieving the necessary quality goals, and it is heartening to see products such as the Synopsys ARC HS Quad Core IP coming to market to deliver on the needs of fully autonomous driving. For more information on the full line of Synopsys ARC HS cores, please visit their website.

The DIY Syndrome

When facing a new design objective, we check off all the established tools and flows we know we are going to need. For everything else, we default to an expectation that we will paper over the gaps with scripting, documentation and spreadsheets. And why not? When we don’t know what we will have to deal with, in documentation, scheduling, project communication or requirements tracking, starting with unstructured MS Office documents (Word, Excel, PowerPoint) looks like a no-brainer. There’s nothing new to learn and because these documents are unstructured, you can do whatever you want and adapt easily as needs change.

This works well when supporting the needs of a small team, or even larger teams when expectations around structure and traceability are primarily informal and any compliance documentation required with products can be generated through brute-force reviews. But it doesn’t work so well when you’re trying to track changes across multiple interdependent products and you need to keep track of how changes relate to evolving requirements, why changes were made and the discussion that went into accepting (or rejecting) them, and then defend all of that to a customer or in a compliance review.

This isn’t about design data management (DDM); of course you need to handle that too. This is about traceability in the decision-making that went into approving changes to the requirements, which ultimately led to the design changes tracked in the DDM. You can store comments in a DDM when you check in a design change, but 99 times out of 100 these are pretty informal and far from meeting the traceability requirements of standards like DO-254.

So you decide to manage requirements changes and tracing in Word or Excel or a combination of the two. Seems reasonable. You turn on review tracking so reviewers can add comments and make changes. Nice and disciplined, traceable, you know who made what comments, what’s not to like? Again, there’s nothing wrong with this approach when what you are doing is entirely for the benefit of a local team, isn’t going to lead to a need for automation and doesn’t have to be formally shared with independent, distributed design teams or with customers.

When any of these needs arise, DIY (do-it-yourself) requirements tracing in Office starts to show some fairly serious weaknesses. I’ll use Word to illustrate; Excel is better in some respects, worse in others. We’ll ease into why, starting with revision tracking. With tracking turned on, Word does a good job of tracking changes and comments requirement by requirement, but it doesn’t show when comments or changes were made, comments are not time-ordered and, without manually added information, there is no way to link a change or comment to a higher-level requirement or to its impact on lower-level functions.
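What revision tracking lacks here is a time-ordered record that ties each change or comment to a requirement ID and to its parent requirement. A minimal Python sketch of such a record follows; the field names and requirement IDs are hypothetical, and this is not the data model of any particular tool. Because the log is append-only, accepting changes in the document later does not erase the history.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import List, Optional

@dataclass(frozen=True)
class ChangeEvent:
    req_id: str                       # requirement being changed or commented on
    author: str
    kind: str                         # e.g. "edit", "comment", "accept", "reject"
    text: str
    parent_req: Optional[str] = None  # higher-level requirement, if any
    timestamp: datetime = field(default_factory=lambda: datetime.now(timezone.utc))

class ChangeLog:
    """Append-only log: events are only ever added, never edited or deleted."""

    def __init__(self) -> None:
        self._events: List[ChangeEvent] = []

    def record(self, event: ChangeEvent) -> None:
        self._events.append(event)

    def history(self, req_id: str) -> List[ChangeEvent]:
        """All events for one requirement, oldest first."""
        return sorted((e for e in self._events if e.req_id == req_id),
                      key=lambda e: e.timestamp)

    def under_parent(self, parent_req: str) -> List[ChangeEvent]:
        """All events on requirements linked to a given parent, oldest first."""
        return sorted((e for e in self._events if e.parent_req == parent_req),
                      key=lambda e: e.timestamp)
```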

In addition, anyone who has used revision tracking in Word knows that after a few rounds of review, text littered with edits and comments becomes difficult to read. You hold a meeting in which you agree to accept all changes (or a subset) and the text is cleaned up, but you have just lost traceability and, if you remove comments, the history of why changes were made. When the document is finally released, all of those edits and comments disappear.

Suppose you decide to restructure the document; maybe a select set of requirements and associated comments should be moved under a different higher-level requirement. Do you do this with change tracking on (which will mess up your document even more), or do you turn tracking off, make the move, then hope you remember to turn it back on? This gets scary when structural changes become common.

Of course you can revision-control the document in a DDM, but how often should you do that? Per individual change (in which case, why are you using Word tracking) or periodically? If periodically, how easily can you compare versions of the document, which may contain figures and other explanatory text for requirements that vary substantially between versions? Document comparison methods work well up to a point but can be derailed by significant differences, which makes it very painful to compare how requirements have evolved over time.
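One reason purpose-built tools compare versions more reliably than document diffs is that they compare requirement by requirement, keyed on a stable ID, so moved sections, reflowed text and changed figures do not derail the comparison. A minimal Python sketch of that idea, with hypothetical IDs and a plain ID-to-text mapping standing in for a real requirements database:

```python
from typing import Dict, List

Requirements = Dict[str, str]  # requirement ID -> requirement text

def diff_requirements(old: Requirements, new: Requirements) -> Dict[str, List]:
    """Compare two versions by requirement ID rather than by document position."""
    added = sorted(new.keys() - old.keys())
    removed = sorted(old.keys() - new.keys())
    changed = sorted(
        (rid, old[rid], new[rid])
        for rid in old.keys() & new.keys()
        if old[rid] != new[rid]
    )
    return {"added": added, "removed": removed, "changed": changed}
```

Reordering requirements or moving them to another section has no effect on the result; only additions, removals and text changes show up.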

Then think about how you might handle hierarchy. Requirements generally aren’t a flat list. There are top-level requirements, sub-requirements under each of these and so on down to leaf-level requirements. You could put all of this in a single document with sections and sub-sections and sub-sub-sections and so on, until Word has right-shifted so far that whatever text and diagrams you have to enter are squeezed into a narrow column at the right of the page. You might also start to worry about Word’s reliability on large documents. Word is a great tool and very widely used, but in my personal experience, as documents get very large its behavior starts to become unpredictable.

More probably you split the whole thing into multiple documents, but now you have to track interdependencies between these documents, and Word doesn’t provide much help there. You might use hyperlinks, but how are you going to handle ancestor and descendant connections on a requirement? And you had better be careful about file locations, since moving files could break links. Maybe put it all in a shared file location, which brings more complications.

Which brings me to another point; in general, how do you plan to track relationships between requirements in Word? And if you make a change to a requirement, how do you know what other requirements will be impacted? In theory through the document organization, but how many projects do you know of where all requirements are nicely bounded in a tree hierarchy so that any change in a requirement will only impact sub-requirements, and all appropriate sub-requirements (a coverage question)? Conversely, what will be impacted in higher-level requirements if I change this requirement?
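Once relationships are stored as explicit links rather than implied by document layout, both impact questions above become graph reachability queries, and the links no longer have to form a tree. A minimal Python sketch, assuming hypothetical requirement IDs and a simple list of (parent, child) derivation links:

```python
from collections import defaultdict, deque
from typing import Dict, Iterable, Set, Tuple

class TraceGraph:
    """Derivation links: an edge (a, b) means requirement b is derived from a.

    A requirement may derive from several parents, so the graph need not be a tree.
    """

    def __init__(self, links: Iterable[Tuple[str, str]]) -> None:
        self.down: Dict[str, Set[str]] = defaultdict(set)
        self.up: Dict[str, Set[str]] = defaultdict(set)
        for parent, child in links:
            self.down[parent].add(child)
            self.up[child].add(parent)

    @staticmethod
    def _reach(start: str, edges: Dict[str, Set[str]]) -> Set[str]:
        """Breadth-first search; returns every node reachable from start."""
        seen: Set[str] = set()
        queue = deque([start])
        while queue:
            node = queue.popleft()
            for nxt in edges.get(node, ()):
                if nxt not in seen:
                    seen.add(nxt)
                    queue.append(nxt)
        seen.discard(start)  # ignore cycles leading back to the start
        return seen

    def impacted_below(self, req: str) -> Set[str]:
        """Lower-level requirements that may need rework if req changes."""
        return self._reach(req, self.down)

    def impacted_above(self, req: str) -> Set[str]:
        """Higher-level requirements whose satisfaction depends on req."""
        return self._reach(req, self.up)
```

With links SYS-1 to HW-1 and SW-1, SYS-2 to SW-1, and SW-1 to SW-1.1, a change to SYS-1 reaches SW-1.1 through SW-1, and because SW-1 also derives from SYS-2, changing SW-1.1 flags both top-level requirements for review.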

There are more issues, but you get the point. Using Word or Excel starts easy but can become very cumbersome very quickly. A common reaction at this point is to launch a project to develop Visual Basic software or something similar to automate away these problems. At that point, you should say “stop – what are we doing?”. I have personal experience in developing apps around Word and Excel. It’s doable, but it takes a lot of time and a lot of maintenance. Is that really the best use of your time, or should you look for an existing commercial solution?

You might check out Aldec Spec-TRACER. It addresses all of these points, it can import existing documents and output spec, compliance and other documents. In the long haul, it will cost you a lot less than your DIY project and it won’t go up in smoke as soon as the one person who understands the doc and customization finds another job. You can watch an Aldec webinar on this topic HERE.

VESA DSC Encoder Enables MIPI DSI to Support 4K resolutions

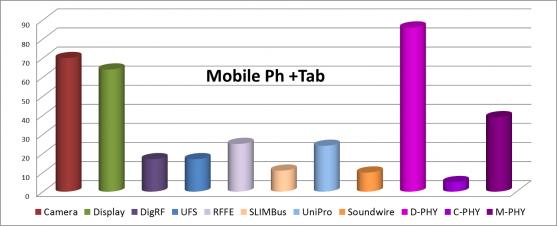

Some of the MIPI specifications, notably the multimedia-related Camera Serial Interface (CSI-2) and Display Serial Interface (DSI), are now used massively in mobile (smartphones). These specifications are now being adopted in automotive infotainment systems and augmented reality (AR)/virtual reality (VR) devices. As the picture below (MIPI Ecosystem survey 2015) shows, MIPI DSI and the associated D-PHY have a very high adoption level in mobile phones, and we know that adoption is also strong beyond mobile, in automotive and AR/VR.

But displays for these applications are becoming more sophisticated with quad HD or 4K resolutions at faster frame rates and support for RGB formats. This evolution has introduced new challenges for designers – managing the required data bandwidth while reducing power consumption and without compromising visual quality. Designers need a protocol that enables visually lossless compression over display interfaces like MIPI® Display Serial interface (DSI®).

The Video Electronics Standards Association (VESA) Display Stream Compression (DSC) standard offers visually lossless performance and low latency for ultra-high-definition (UHD) displays. VESA has collaborated with the MIPI Alliance to get the DSC standard adopted into the MIPI DSI standard. The challenge is to enable higher resolutions, like 4K displays, which require higher bandwidth, while continuing to use the mature, production-proven MIPI D-PHY specification. MIPI DSI runs over the MIPI D-PHY physical link at up to 2.5 Gbps per lane, so four lanes yield a maximum data rate of 10 Gbps per link. However, as outlined below, high-end video and image resolutions such as 4K and 3D 1080p require higher bandwidth:

– 4K: 24-bit RGB @ 60 frames per second (FPS) requires 13 Gbps (12 Gbps for active area)

– 3D 1080p: 24-bit RGB @ 60 FPS requires 12 Gbps (11 Gbps for active area)

For deeper color modes, bandwidth requirements are even higher, creating a problem that would normally require designers to increase DSI data lanes by re-architecting devices and redesigning circuits, which results in longer design time, higher cost and more risk.

The solution came from VESA, which defined the Display Stream Compression (DSC) algorithm, and the collaboration between MIPI and VESA has allowed DSC to be incorporated into DSI. According to VESA, encapsulating DSC in the DSI protocol has provided “designers of source and display devices [with] a visually lossless, standardized way to transfer more pixel data over display links and to save memory size in embedded frame buffers in display driver ICs.” The figure below shows a block diagram of VESA DSC integrated into MIPI DSI:

The VESA DSC algorithm can compress data in constant bit rate mode, providing a stream of deterministic size that DSI can transport without further processing or padding. With VESA DSC, 4K and 3D 1080p video and image resolutions become possible over existing display links.

– 4K, compressed to 12 bpp @ 60 FPS requires 6.5 Gbps –> 3 or 4 lanes

– 4K, compressed to 8 bpp @ 60 FPS requires 4.4 Gbps –> 2, 3 or 4 lanes

– 3D 1080p, compressed to 12 bpp @ 60 FPS requires 6 Gbps –> 3 or 4 lanes

– 3D 1080p, compressed to 8 bpp @ 60 FPS requires 4 Gbps –> 2, 3 or 4 lanes
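The lane counts above follow from simple arithmetic: in constant bit rate mode the compressed bandwidth scales with the ratio of compressed bits per pixel to the 24-bit source, and the minimum lane count is that bandwidth divided by the 2.5 Gbps per-lane D-PHY rate, rounded up. The Python sketch below reproduces the minimum lane counts from the uncompressed figures quoted earlier (13 Gbps for 4K, 12 Gbps for 3D 1080p). The function names are illustrative, and blanking overhead is taken from those quoted figures rather than modeled.

```python
import math

LANE_RATE_GBPS = 2.5  # D-PHY per-lane rate quoted in the text
MAX_LANES = 4         # maximum DSI lane count quoted in the text

def active_gbps(width: int, height: int, bits_per_pixel: int, fps: int) -> float:
    """Raw pixel bandwidth of the active area, ignoring blanking."""
    return width * height * bits_per_pixel * fps / 1e9

def compressed_gbps(uncompressed_gbps: float, source_bpp: int, target_bpp: int) -> float:
    """Constant-bit-rate compression scales bandwidth by target_bpp / source_bpp."""
    return uncompressed_gbps * target_bpp / source_bpp

def min_lanes(gbps: float, lane_rate: float = LANE_RATE_GBPS) -> int:
    """Smallest whole number of lanes whose combined rate covers gbps."""
    return math.ceil(gbps / lane_rate)

if __name__ == "__main__":
    print(f"4K active area: {active_gbps(3840, 2160, 24, 60):.2f} Gbps")
    for name, raw in (("4K", 13.0), ("3D 1080p", 12.0)):
        lanes = min_lanes(raw)
        print(f"{name} uncompressed: {raw} Gbps -> {lanes} lanes (fits: {lanes <= MAX_LANES})")
        for bpp in (12, 8):
            rate = compressed_gbps(raw, 24, bpp)
            print(f"  {bpp} bpp: {rate:.2f} Gbps -> at least {min_lanes(rate)} lanes")
```

Uncompressed, 4K would need 6 lanes and 3D 1080p 5, both beyond the 4-lane limit; at 12 bpp each needs at least 3 lanes and at 8 bpp at least 2. The computed 4K rate at 8 bpp is about 4.3 Gbps, in line with the roughly 4.4 Gbps quoted.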

How do you implement this compression? Before compression, an image is divided into a grid of slices. A DSC encoder, which can be made up of multiple cores operating in parallel, compresses each slice independently. The next picture shows an example of how DSC cores can efficiently compress a 4K image. The DSC encoder in this example (on the left) includes four cores, and the image (on the left) is split into 4 columns by 15 rows; each resulting slice is compressed in parallel by the corresponding DSC core (indicated by color).
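For the 4-column by 15-row example, the slice geometry is easy to work out. The Python sketch below assumes a 3840×2160 frame and assumes that each of the four cores owns one column of slices (the text only says slices map to cores by color); the names are illustrative and the DSC coding itself is not modeled. The per-slice bit budget reflects the constant bit rate mode described above: every slice compresses to the same fixed size.

```python
from typing import Dict, List, NamedTuple

class Slice(NamedTuple):
    row: int
    col: int
    x: int       # left edge in pixels
    y: int       # top edge in pixels
    width: int
    height: int

def slice_grid(frame_w: int, frame_h: int, cols: int, rows: int) -> List[Slice]:
    """Split a frame into equal slices; assumes the frame divides evenly."""
    if frame_w % cols or frame_h % rows:
        raise ValueError("frame does not divide evenly into slices")
    sw, sh = frame_w // cols, frame_h // rows
    return [Slice(r, c, c * sw, r * sh, sw, sh)
            for r in range(rows) for c in range(cols)]

def assign_to_cores(slices: List[Slice], num_cores: int) -> Dict[int, List[Slice]]:
    """Hypothetical policy: core k compresses every slice in column k (mod num_cores)."""
    work: Dict[int, List[Slice]] = {k: [] for k in range(num_cores)}
    for s in slices:
        work[s.col % num_cores].append(s)
    return work

def slice_budget_bits(s: Slice, bits_per_pixel: int) -> int:
    """Fixed compressed size of one slice at a constant bits-per-pixel target."""
    return s.width * s.height * bits_per_pixel
```

Under these assumptions each slice is 960×144 pixels, each core handles 15 of the 60 slices, and at 8 bpp every slice compresses to exactly 1,105,920 bits, which is why the output stream size is deterministic.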

Unlike compression standards such as H.264 or H.265, DSC does not use inter-frame compression, so it offers very low latency and needs less memory. DSC’s compression algorithm was designed to be implemented in hardware without the need for multimedia processors, making it highly area- and power-efficient in SoCs.

Visually lossless compression is the ideal method to enable quad HD or 4K resolution embedded displays in high-end smart phones, automotive infotainment systems and AR/VR devices. The VESA DSC standard helps designers overcome bandwidth limitations associated with MIPI D-PHY without costly and risky circuit redesign. VESA DSC is an emerging standard that delivers visually lossless compression, providing designers flexible, low-latency, low-memory, and error-resilient results.

Synopsys has integrated VESA DSC into its silicon-proven DesignWare® MIPI DSI IP to enable quad HD or 4K resolution displays.

You can look at this article from Synopsys, which describes in detail how DSC and DSI can be implemented.

From Eric Esteve of IPnest

DesignShare is all About Enabling Design Wins!

One of the barriers to silicon success has always been design costs, especially if you are an emerging company or targeting an emerging market such as IoT. Today, design start costs are dominated by IP, which is paid for at the start of the project, after costly IP evaluations and other IP verification and integration challenges. Given that, reducing design costs and enabling design starts has always been a major industry focus, starting with the fabless semiconductor transformation that began 30 years ago, which brings us to the DesignShare announcement made by SiFive and Flex Logix last week.

For an emerging IP company, one of the greatest challenges is getting customers comfortable with your IP, your support model, and your company in general. Nobody knows this better than Andy Jaros, Vice President of Sales at Flex Logix. Andy started his semiconductor career at Motorola, followed by ARM, then ARC, which is where I met him. Virage Logic acquired ARC in 2009, and Andy assumed a leadership role that continued for five years after Synopsys acquired Virage in 2010. Andy has been enabling design starts for most of his career, so he knows IP.

I caught up with Andy at ARM TechCon last month and talked about the SiFive DesignShare program. Flex Logix joined DesignShare so customers can get customized chip prototypes with eFPGA cores at a very low cost because Flex Logix and other DesignShare participants defer IP costs until the customer is ready to go into production. Needless to say, Andy is 100% behind this program as it will allow him to work with a much wider range of design starts in a much more efficient manner, for the greater good of the semiconductor industry, absolutely.

By the way, I found the new Flex Logix website to be one of the best IP company websites with regard to content and user-friendliness; check it out.

“There is a critical need in the chip industry to provide a faster, cheaper way for innovative companies to rapidly prototype new, advanced chip architectures,” said Geoff Tate, CEO of Flex Logix. “Through DesignShare, SiFive and Flex Logix can give customers a highly programmable, flexible chip design for both microcontroller SoCs and multicore process SoCs. The RISC-V architecture provides excellent performance, and – when combined with embedded FPGA functionality, can provide higher performance in a reconfigurable way.”

Geoff Tate (founding CEO of Rambus) is one of the more interesting and more available IP CEOs you can meet. He attends as many conferences as I do and is eager to interact with customers and partners. You can meet Geoff and the Flex Logix team next at the REUSE 2017 Conference on December 14th.

“The addition of Flex Logix’s best-in-class embedded FPGA platform to the DesignShare ecosystem provides engineers with a new and better way to bring SoCs to market,” said Naveed Sherwani, CEO of SiFive. “The adoption of the RISC-V architecture continues to experience significant growth, and the addition of embedded FPGA technologies through DesignShare will make it easier and more flexible for designers to employ RISC-V in their future designs across a wide range of implementations, from embedded devices to the data center.”

Naveed Sherwani is another friendly semiconductor CEO who attends many of the same events I do. He has founded or cofounded nine different companies, including Open-Silicon, and is quite the semiconductor historian.

Bottom line: design starts are the lifeblood of the semiconductor industry, we should all do whatever is possible to enable them, and DesignShare does just that.

12-Year-Old Semiconductor IP and Design Services Company Receives New Investment

I have a transistor-level IC design background, so I was intrigued to learn more from the CEO of an IP and services company that started out in India 12 years ago. Last week I spoke with Samir Patel, CEO of Sankalp Semiconductor, about the newest $5 million investment in his company from Stakeboat Capital Fund. The Stakeboat Capital Fund has some 28 years of investing experience and a current market capitalization above $8B.

I knew that the general trend was for IP companies to provide more specialized content along with design services to help speed new electronic products to market, but I didn’t realize that there are some 200 IC design services companies around the world competing for this growing market segment. Outsourced IC design services are attractive to OEMs because they offer:

- Lower cost of development than hiring and training new engineers

- Lower risk with a supplier that has a good track record

- Access to analog and mixed-signal IP blocks

Sure, there are giants in outsourcing like Wipro and Infosys, so what makes Sankalp interesting to me is its focus on analog and mixed-signal IP, something that is in strong demand these days, as every IoT device with a sensor will require an AMS chip to get data into a digital format. The engineers at Sankalp have a broad range of experience to help speed the AMS portion, or all, of your next chip project:

- Specification definition

- RTL design and verification

- SoC Implementation

- IP blocks

- AMS design

- Custom layout and P&R

- Technology Foundry Interface

- Validation and Characterization

One measure of Sankalp’s success is its staff size: some 600 employees worldwide, serving tier-one semiconductor companies. They can work with any foundry partner you choose.

I learned that their logo shows two hands above a fire, and that Sankalp is a Sanskrit word meaning an oath or resolve. Instead of starting the company in Bangalore, they located it outside Bangalore, closer to engineering colleges, where they could train new hires at lower cost and have lower attrition. TI was an early anchor client. Global locations include:

- India: Noida, Kolkata, Bhubaneswar, Hubli and Bangalore

- Frankfurt, Germany

- Ottawa, Canada

- Sunnyvale, CA

- Dallas, TX

I did ask about exit plans or getting acquired by a larger firm, and it was refreshing to hear that they will continue to grow and invest in their own success instead of cashing out. Past acquisitions include Interra SSG and KPIT SSG. Mr. Patel previously started up the Rambus design center in India, so he really knows the semiconductor design industry. One acronym I heard for the first time was Application Specific System on a Chip (ASOC). I found Samir’s photo online and felt connected to him because we both have some grey hair and have followed the semiconductor industry for decades.

Webinar: High-Capacity Power Signoff Using Big Data

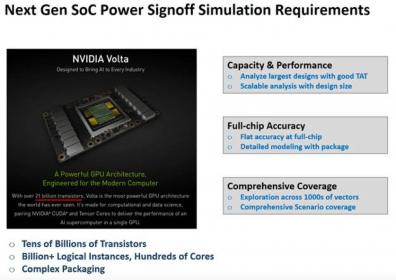

Want to know how NVIDIA signs off on power integrity and reliability on mega-chips? Read on.

PPA over-design increases product cost and can cause missed schedules, with no guarantee of product success. Advanced SoCs pack more functionality and performance, resulting in higher power density, but the traditional approach of uniformly over-designing the power grid, which worked in the past, is no longer an option with severely constrained routing resources. To add to these problems, there are hundreds of combinations of PVT corners to consider for each of an increasing number of verification objectives.

REGISTER HERE for the webinar on Tuesday, Nov 14th at 8am PST

For example, an ADAS SoC is used for a variety of applications such as pedestrian detection, parking assist, vehicle exit assist, night vision, blind spot monitoring, collision avoidance and a whole lot more. The number of vectors designers need to simulate has increased enormously. It is nearly impossible to uncover potential design weaknesses when you are simulating only a handful of vectors for just a fraction of a second. How do you ensure you have enough design coverage?

Power grid design has become a limiting factor for achieving the desired performance and area targets in next generation SoCs. Sharper slew rates, higher current densities and faster switching speeds pose significant challenges to power integrity and reliability signoff. Lower operating voltages lead to tighter noise margins, resulting in a chip that is very sensitive to changes in supply voltage. Higher device density and longer wires in these advanced designs increase node count by at least an order of magnitude, posing significant capacity and performance challenges for traditional EDA tools.
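To make voltage drop concrete: on a single power rail fed from a pad at one end, each rail segment carries the current of every cell downstream of it, so the IR drop accumulates along the rail. The Python sketch below computes the static voltage at each tap for that textbook case, using assumed values; production signoff tools such as RedHawk-SC solve full power meshes with dynamic currents, which this does not attempt.

```python
from typing import List

def rail_voltages(vdd: float, segment_ohms: float, sink_amps: List[float]) -> List[float]:
    """Static voltage at each tap of a rail fed from one end.

    Segment k (between tap k-1 and tap k, tap 0 being the pad) carries the
    current drawn by tap k and every tap beyond it.
    """
    voltages = []
    v = vdd
    downstream = sum(sink_amps)
    for amps in sink_amps:
        v -= downstream * segment_ohms  # IR drop across this segment
        voltages.append(v)
        downstream -= amps              # this tap's current leaves the rail here
    return voltages

if __name__ == "__main__":
    # Assumed values: 0.8 V supply, 5 milliohm segments, ten taps drawing 0.2 A each.
    taps = rail_voltages(0.8, 0.005, [0.2] * 10)
    worst = 0.8 - min(taps)
    print(f"worst drop {worst * 1000:.1f} mV ({worst / 0.8:.1%} of VDD)")
```

With these assumed numbers the far end of the rail sees about 55 mV of drop, close to 7% of a 0.8 V supply; the same drop would be a smaller fraction of a higher supply, which is why lower operating voltages tighten noise margins.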

As design size increases, turnaround time for solving billion-plus instance designs becomes critical. A next-generation SoC power integrity and reliability signoff solution should scale elastically in capacity and performance. It is imperative to iterate designs rapidly over multiple operating conditions and scenarios, with overnight turnaround, to maximize design coverage. It is equally important to gain key insights from these large design databases to prioritize design fixes.
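The "many scenarios, then prioritize" workflow described above has the shape of a map-reduce: analyze each operating condition or vector set independently, reduce the results to the worst case per node, then rank the hotspots. The Python sketch below shows that shape with a thread pool and a caller-supplied analysis function. It illustrates the pattern only, not how RedHawk-SC distributes work, and the node names in the usage are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, Iterable, List, Tuple, TypeVar

S = TypeVar("S")
NodeVoltages = Dict[str, float]  # node name -> simulated minimum voltage

def worst_case_by_node(scenarios: Iterable[S],
                       analyze: Callable[[S], NodeVoltages],
                       workers: int = 4) -> NodeVoltages:
    """Map: analyze every scenario in parallel. Reduce: keep each node's lowest voltage."""
    worst: NodeVoltages = {}
    with ThreadPoolExecutor(max_workers=workers) as pool:
        for result in pool.map(analyze, scenarios):
            for node, volts in result.items():
                if volts < worst.get(node, float("inf")):
                    worst[node] = volts
    return worst

def rank_hotspots(worst: NodeVoltages, vdd: float, top_n: int = 3) -> List[Tuple[str, float]]:
    """Nodes sorted by worst-case drop (vdd - voltage), largest first."""
    drops = [(node, vdd - volts) for node, volts in worst.items()]
    drops.sort(key=lambda item: item[1], reverse=True)
    return drops[:top_n]
```

In practice `analyze` would launch a full simulation for one scenario; here any function returning a node-to-voltage mapping works, such as an identity function over precomputed results.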

In this webinar, learn how NVIDIA has developed a workflow to run a flat, full-chip power integrity and reliability signoff analysis using a fully distributed compute and big data solution with ANSYS RedHawk-SC. They achieved a turn-around time of well under 24 hours for full-chip flat power signoff analysis on NVIDIA’s largest GPU – Volta, which contains around 21 billion transistors.

Additionally, silicon correlation exercises performed on the Volta chip using RedHawk-SC produced simulated voltage values that were within 10 percent of silicon measurement results. Discover how NVIDIA’s most powerful GPU uses ANSYS’ next generation SoC power signoff solution based on big data to deliver the best performance for cutting-edge AI and machine learning applications.

About Ansys

If you’ve ever seen a rocket launch, flown on an airplane, driven a car, used a computer, touched a mobile device, crossed a bridge, or put on wearable technology, chances are you’ve used a product where ANSYS software played a critical role in its creation. ANSYS is the global leader in engineering simulation. We help the world’s most innovative companies deliver radically better products to their customers. By offering the best and broadest portfolio of engineering simulation software, we help them solve the most complex design challenges and engineer products limited only by imagination.

Webinars: Bumper Pack of AMS Webinars from ANSYS

Power integrity and reliability are just as important for AMS designs as they are for digital designs. ANSYS is offering a series of five webinars on this topic under the heading ANSYS in ACTION, a bi-weekly demo series in which an application engineer shows you how simulation can address common applications. Schedule a few 20-minute breaks to see how ANSYS can solve your problems.

You can REGISTER HERE for any or all of these webinars.

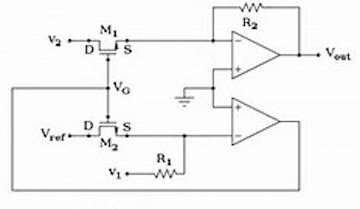

November 16th, 2017 at 10AM PST: The first webinar in the series is on Analog and Mixed Signal Workflows for Power and Reliability Signoff for SerDes IP and PMIC. Analog and mixed signal IPs are very complex and require significant time to design, verify and validate. With increasing mask costs and tighter design cycles, first time silicon success is key to accelerate time to market and beat the competition. Join us for this 20-minute webinar to learn how AMS workflows based on ANSYS Totem, a layout-based transistor level power and reliability signoff platform, can enable you to design the next generation of SerDes IP or PMIC for cutting-edge applications.

November 30th, 2017 at 10AM PST: The second webinar in the series is on Enabling Early Power and Reliability Analysis for AMS Designs. Attend this webinar to discover the benefits of doing early power and reliability analysis with just a GDS layout. This presentation will demonstrate early IP level connectivity checks; missing via checks; point to point resistance and short path resistance checks; and early static analysis to identify structural design weaknesses using ANSYS Totem’s rich GUI interface.

December 7th, 2017 at 10AM PST: The third webinar in the series is on Enabling Power and Reliability Signoff for AMS Designs. Attend this webinar to learn how ANSYS Totem can model and simulate the required power and reliability signoff checks for AMS designs. This presentation will demonstrate the benefits of using Totem to perform dynamic voltage drop and EM checks through its rich GUI interface, which can also be leveraged for debugging purposes.

December 14th, 2017 at 10AM PST: The fourth webinar in the series is on Enabling FinFET Thermal Reliability Signoff for IPs. ANSYS Totem can solve the challenges faced by engineers trying to ensure thermal and electromigration (EM) reliability in advanced IPs. Join this webinar to see a demonstration of self-heat analysis on an IP test case using ANSYS Totem’s rich GUI interface. The demonstration will cover flow setup, debugging and result exploration for reliability signoff.

January 11th, 2018 at 10AM PST: The fifth webinar in the series is on Enabling ESD Reliability Signoff for AMS IPs. Attend this webinar to discover how ANSYS PathFinder can help you solve the challenges you face in trying to ensure ESD robustness in today’s AMS IPs. Watch a live demonstration of ANSYS PathFinder on an IP-level test case to highlight the benefits of a layout-based ESD integrity solution for resistance and current density checks.

TSMC EDA 2.0 With Machine Learning: Are We There Yet?

Recently we have been swamped by news of artificial intelligence applications in hardware and software, driven by the increased adoption of machine learning (ML) and the electronics industry's shift toward IoT and automotive. While plenty of discussion has covered the progress of embedded intelligence in product roll-outs, more focus is needed on applying intelligence within EDA itself.

Earlier this year TSMC reported a successful initial deployment of machine learning on ARM A72/A73 cores, where it helped predict optimal cell clock gating and yielded overall chip speed gains of 50–150 MHz. The techniques include training models using open source algorithms maintained by TSMC.

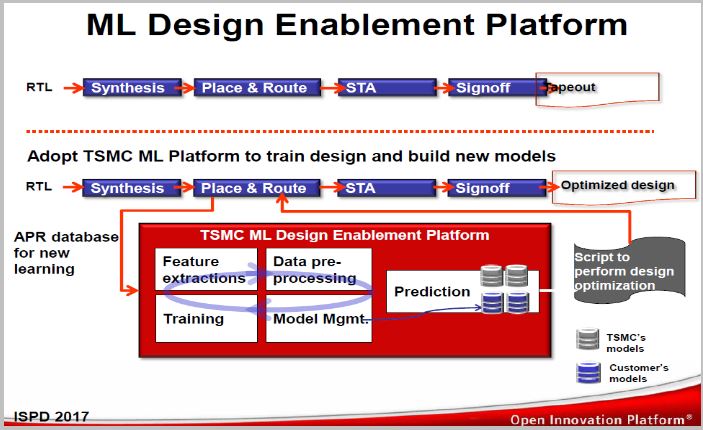

At ISPD 2017, TSMC referred to this platform as the ML Design Enablement Platform, which was expected to let designers create custom scripts to cover other designs.

During the 2017 CASPA Annual Conference, Cadence Distinguished Engineer David White shared his thoughts on the current challenges facing the EDA world, which fall into three categories:

- Scale – growing design sizes bring more rules and restrictions, along with massive amounts of data from simulation, extraction, polygons and technology files.

- Complexity – more complex FinFET process technologies result in complicated DRC/ERC, while pervasive interactions between chip and package/board are becoming the norm. Thermal effects between devices and wires also need attention.

- Productivity – growing uncertainty introduces more design iterations, while the pool of retrained design and physical engineers is limited.

Furthermore, David categorized the pace of ML (or deep learning) adoption into four phases (table 1).

Although the EDA industry started embracing ML this year as a new avenue for enhancing its solutions, the question is: how far have we come? At DAC 2017 in Austin, several companies announced ML augmentation of their product offerings, as shown in table 2.

You might have heard the famous quote, “War is 90% information.” ML adoption may require good data analytics, since one faces enormous volumes of data. For most hardware products, ML can be added either at the edge (gateway) or in the cloud. For EDA tools, it also becomes a question of how large and accurate the trained models need to be and whether training requires many iterations.

For example, predicting where via pillars will be inserted in a FinFET process node could be done at different stages of design implementation, while model accuracy should be validated post-route. Injecting them during placement would differ from doing so during physical synthesis, where there is still no notion of a legalized design or projected track usage.

Let’s revisit David’s presentation and find out what steps are required to design and develop intelligent solutions that harness ML, analytics and the cloud, coupled with existing optimizations. He believes this consists of two phases: a training development phase and an operational phase. Each involves specific activities, as shown in the following snapshot (training = data preparation + model-based inference; operational = adaptation).

The key takeaway from David’s formulation is to manage data preparation properly, reducing the data size before generating, training and validating the model. Once that is done, calibration and integration into the underlying optimization or process can take place. He believes we are just entering phase 2 of augmenting EDA with ML (refer to table 1).
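To make the sequence concrete (reduce the data, train and validate a model, then calibrate it before integration), here is a minimal sketch under loudly stated assumptions. It is not Cadence’s, TSMC’s or Synopsys’ implementation: the per-tile features (`pin_density`, `wire_congestion`) and the labeling rule are hypothetical synthetic data, the model is a plain logistic regression trained by batch gradient descent, and "calibration" here simply means choosing a decision threshold on held-out data.

```python
import math
import random

# Hypothetical per-tile features for DRC-hotspot prediction. The feature names
# and the synthetic labeling rule are illustrative assumptions, not real data.
def make_synthetic_tiles(n, seed=0):
    rng = random.Random(seed)
    tiles = []
    for _ in range(n):
        pin_density = rng.random()
        wire_congestion = rng.random()
        noise = rng.random()  # uninformative feature, dropped during data preparation
        hotspot = 1 if pin_density + wire_congestion > 1.2 else 0
        tiles.append(([pin_density, wire_congestion, noise], hotspot))
    return tiles

# Training phase, step 1: data preparation. Keep only the informative
# columns and drop duplicate rows to shrink the training set.
def prepare(tiles, keep=(0, 1)):
    seen = set()
    reduced = []
    for features, label in tiles:
        row = (tuple(round(features[i], 3) for i in keep), label)
        if row not in seen:
            seen.add(row)
            reduced.append(row)
    return reduced

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# Training phase, step 2: fit a logistic-regression model with batch gradient descent.
def train(rows, epochs=300, lr=2.0):
    n_features = len(rows[0][0])
    w = [0.0] * n_features
    b = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for x, y in rows:
            err = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) - y
            for i in range(n_features):
                grad_w[i] += err * x[i]
            grad_b += err
        w = [wi - lr * gi / len(rows) for wi, gi in zip(w, grad_w)]
        b -= lr * grad_b / len(rows)
    return w, b

def predict_prob(model, x):
    w, b = model
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

# Validation: fraction of rows whose thresholded prediction matches the label.
def accuracy(model, rows, threshold):
    correct = sum((predict_prob(model, x) >= threshold) == (y == 1) for x, y in rows)
    return correct / len(rows)

# Calibration: pick the decision threshold that maximizes held-out accuracy
# before the model is integrated into an optimization flow.
def calibrate(model, rows):
    candidates = [t / 20 for t in range(1, 20)]
    return max(candidates, key=lambda t: accuracy(model, rows, t))

if __name__ == "__main__":
    data = prepare(make_synthetic_tiles(1000))
    split = int(0.8 * len(data))
    train_rows, holdout_rows = data[:split], data[split:]
    model = train(train_rows)
    threshold = calibrate(model, holdout_rows)
    print(f"threshold={threshold:.2f} holdout accuracy={accuracy(model, holdout_rows, threshold):.3f}")
```

The point of the split is the one David makes: the reduced data set keeps training cheap, validation happens on data the model never saw, and calibration is a separate step that adapts the trained model to the flow it will sit in.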

Considering the increased attention given to ML at the 2017 TSMC Open Innovation Platform event, where TSMC explored using ML for path grouping during P&R to improve timing and Synopsys showed ML adoption to predict potential DRC hotspots, we are on the right track toward smarter solutions that can offset the complexity challenges of higher density and finer process technologies.

Deep Learning and Cloud Computing Make 7nm Real

The challenges of 7nm are well documented. Lithography artifacts drive up design rule complexity, mask costs and cycle time dramatically. Noise and crosstalk get harder to deal with, as does timing closure. The types of applications that demand 7nm performance will often introduce HBM memory stacks and 2.5D packaging, and that creates an additional long list of challenges. So, who is using this difficult, expensive technology and why?

A lot of the action is centering around cloud data center buildout and artificial intelligence (AI) applications, especially the deep learning aspect of AI. TSMC is teaming with ARM and Cadence to build advanced data center chips. Overall, TSMC has an aggressive stance regarding 7nm deployment. GLOBALFOUNDRIES has announced 7nm support for, among other things, data center and machine learning applications (details here). AMD launched a 7nm GPU with dedicated AI circuitry. Intel plans to make 7nm chips this year as well. If you’re wondering what Intel’s take is on AI and deep learning, you can find out here. I could keep going, but you get the picture.

It appears that a new, highly connected and automated world is being enabled, in part, by 7nm technology. There are two drivers at play that are quite literally changing our world. Many will cite substantial cloud computing build-out as one driver. Thanks to the massive, global footprint of companies like Amazon, Microsoft and Google, compute capability is starting to look like a power utility. If you need more, you just pay more per month and it’s instantly available.

The build-out is NOT the driver however. It is rather the result of the REAL driver – massive data availability. Thanks to a new highly connected, always-on environment we are generating data at an unprecedented rate. Two years ago, Forbes proclaimed: “more data has been created in the past two years than in the entire previous history of the human race”. There are other mind-blowing facts to ponder. You can check them out here. So, it’s the demand to process all this data that triggers cloud build-out; that’s the core driver.

The second driver is really the result of the first: how to make sense out of all this data. Neural nets, the foundation for deep learning, have been around since the 1950s. We finally have data to analyze, but there’s a catch. Running these algorithms on traditional computers isn’t practical; it’s WAY too slow. These applications have a huge appetite for extreme throughput and fast memory. Enter 7nm with its power/performance advantages and HBM stacks. Problem solved.

There is a lot of work going on in this area, and it’s not just at the foundries. There’s an ASIC side of this movement as well. Companies like eSilicon have been working on 2.5D since 2011, so they know quite a bit about how to integrate HBM memory stacks. They’re also doing a lot of FinFET design these days, with a focus down to 7nm. They’ve recently announced quite a list of IP targeted at TSMC’s 7nm process. Here it is:

Check out the whole 7nm IP story. If you’re thinking of jumping into the cloud or AI market with custom silicon, I would give eSilicon a call, absolutely.