At the TSMC 2018 Silicon Valley Technology Symposium, Dr. Kevin Zhang, TSMC VP of Business Development, covered technology updates for the IoT platform. The three growth drivers in this segment, namely TSMC low power, RF enhancement and embedded memory technology (MRAM/RRAM), reinforce the progress and growth in global semiconductor revenue that, since 1980, has been driven successively by the PC, notebook, mobile phone, smartphone and eventually IoT. For the 2017-2022 period, a CAGR of 24% is projected, with IoT end-device shipments reaching 6.2B units by 2022.


Webinar: Thermal and Reliability for ADAS and Autonomy

OK, so maybe the picture here is a little over the top, but thermal and reliability considerations in automotive in general, and in ADAS and autonomy in particular, are no joke. Overheating, thermal-induced EM and warping at the board level, in the package or in interposers, are concerns in any environment, but especially when you’re concerned about high reliability, long lifetimes and nasty ambient temperatures. These can’t be treated as purely on-die concerns. The system as a whole has to meet reliability targets appropriate to the target ASIL rating, and that means you have to look all the way from the enclosure and board down through regulators, to sensors and the SoCs in their packages. Calculating thermal profiles across this range, and the potential impact on EM, stress and other factors, under a representative variety of use cases and cooling strategies can be challenging. Join ANSYS to learn how you can manage this analysis using their range of tools spanning from the system to the die level and across multiple physics dimensions.

REGISTER HERE for this webinar on May 23rd, 2018 at 8AM PDT

Summary

Growing market needs for electrification, connectivity on the go, advanced driver assistance systems (ADAS), and ultimately autonomous driving, are creating newer requirements and greater challenges for automotive electronics systems. Automotive electronics, unlike consumer electronics, must operate in very harsh environments for extended periods of time. They must be safe and highly reliable, with a zero field-failure rate over a lifespan of 10 to 15 years. Thermal performance and reliability are critical for these high-power, intelligent electronics, which often include sensors and heterogeneous packaging systems.

Attend this webinar to learn how multiphysics simulations can address key reliability requirements for automotive electronics with thermal, thermal-aware electromigration (EM) and thermal-induced stress analyses across the spectrum of chip, package and system.

Speaker:

Karthik Srinivasan, Senior Corporate AE Manager, Analog & Mixed Signal Products, Semiconductor Business Unit, ANSYS Inc.

Karthik is currently working as a Senior Corporate AE Manager, Analog & Mixed Signal Products at the Semiconductor Business Unit of ANSYS Inc. His work focuses on product planning for analog/mixed-signal simulation products and field AE support. His research interests include power estimation, power noise, reliability and thermal analysis for chip, package and system. He joined Apache Design Solutions in 2006 and has taken several roles as part of the field AE team. He received a B.S. in electronics and telecommunication engineering from the University of Madras, India, and an M.S. in electrical engineering from the State University of New York, Buffalo, in 2003 and 2005, respectively.

About ANSYS

If you’ve ever seen a rocket launch, flown on an airplane, driven a car, used a computer, touched a mobile device, crossed a bridge, or put on wearable technology, chances are you’ve used a product where ANSYS software played a critical role in its creation. ANSYS is the global leader in engineering simulation. We help the world’s most innovative companies deliver radically better products to their customers. By offering the best and broadest portfolio of engineering simulation software, we help them solve the most complex design challenges and engineer products limited only by imagination.

Machine Learning Drives Transformation of Semiconductor Design

Machine learning is transforming how information processing works and what it can accomplish. The push to design hardware and networks to support machine learning applications is affecting every aspect of the semiconductor industry. In a video recently published by Synopsys, Navraj Nandra, Sr. Director of Marketing, takes us on a comprehensive tour of these changes and how many of them are being used to radically drive down the power budgets for the finished systems.

According to Navraj, the best estimates that we have today put the human brain’s storage capacity at 2.5 Petabytes, the equivalent of 300 years of continuous streaming video. Estimates are that the computation speed of the brain is 30X faster than the best available computer systems, and it only consumes 20 watts of power. These are truly impressive statistics. If we see these as design targets, we have a long way to go. Nevertheless, there have been tremendous strides in what electronic systems can do in the machine learning arena.

Navraj highlighted one such product to illustrate how advanced current technology has become. The Nvidia Xavier chip has 9 billion transistors and contains an 8-core CPU, a 512-core Volta GPU and a new deep learning accelerator, delivering 30 trillion operations per second while using only 30W. Clearly this is some of the largest and fastest commercial silicon ever produced. There are now many large chips specifically being designed for machine learning applications.

There is, however, a growing gap between the performance of DRAM memories and CPUs. Navraj estimates that this gap is growing at a rate of 50% per year. Novel packaging techniques and other advances have given system designers choices, though. DDR5 is the champion when it comes to capacity, and HBM is ideal where more bandwidth is needed. One comparison shows that dual-rank RDIMM with 3DS offers nearly 256 GB of RAM at a bandwidth of around 51 GB/s. HBM2 in the same comparison gives a bandwidth of over 250 GB/s, but only supports 16 GB. Machine learning requires both high bandwidth and high capacity, and by mixing these two memory types as needed, systems can achieve excellent results. HBM also has the advantage of very high PHY energy efficiency: even compared to LPDDR4, it is much more efficient when measured in picojoules per bit.
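To put these numbers side by side, here is a short worked calculation. It assumes, as our own reading rather than something stated in the talk, that a gap “growing at 50% per year” means the CPU-to-DRAM performance ratio $G$ is multiplied by 1.5 every year; the bandwidth ($B$) and capacity ($C$) ratios use only the RDIMM and HBM2 figures quoted above.

$$
G(n) = G_0 \cdot 1.5^{\,n}, \qquad G(5) = 1.5^{5}\,G_0 \approx 7.6\,G_0
$$

$$
\frac{B_{\mathrm{HBM2}}}{B_{\mathrm{RDIMM}}} \approx \frac{250\ \mathrm{GB/s}}{51\ \mathrm{GB/s}} \approx 4.9,
\qquad
\frac{C_{\mathrm{RDIMM}}}{C_{\mathrm{HBM2}}} \approx \frac{256\ \mathrm{GB}}{16\ \mathrm{GB}} = 16
$$

Each memory type wins on one axis by a wide margin, which is why mixing the two is attractive for machine learning workloads that need both.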

Up until 2015, deep learning was less accurate than humans at recognizing images. In 2015, ResNet, using 152 layers, exceeded human accuracy by achieving an error rate of 3.6%. Looking at the memory requirements of two of the prior champion recognition algorithms helps us understand training and inference memory requirements. ResNet-50 and VGG-19 both needed 32 GB for training. With optimization, they needed 25 MB and 12 MB respectively for inference. Nevertheless, deep neural networks create three issues for interface IP: capacity, irregular memory access and bandwidth.

Navraj asserts that the capacity issue can be handled with virtualization. Irregular memory access can have large performance impacts; however, moving cache coherency into hardware can improve overall performance. CCIX can take things even further and allow memory cache coherence across multiple chips, in effect giving the entire system a unified cache with full coherence. A few years ago you would have had a dedicated GPU chip with GDDR memory connected via PCIe to a CPU with DDR4. Using new technology, a DNN engine can have fast HBM2 memory and connect with CCIX to an apps processor using DDR5, with massive capacity. The CCIX connection raises the per-lane data rate between these chips from PCIe’s 8 GT/s to 25 GT/s, and the system also benefits from the improved performance of cache coherence.

Navraj also surveys techniques used for on-chip power reduction and for reducing power consumption in interface IP. PCIe 5.0 utilizes multiple techniques for power reduction. Both the controller and the PHY present big opportunities for power reduction. Using a variety of techniques, controller standby power can be reduced by up to 95%. PHY standby power can be less than 100 µW.

Navraj’s talk ends by discussing the changes happening in the networking space and the optimal processor architectures for machine learning. Network architectures and technologies are bifurcating based on the diverging requirements of enterprise servers and data centers. Data center operators such as Facebook, Google and Amazon are pushing for the highest speeds, and the roadmap for data center network interfaces includes speeds of up to 800 Gbps in 2020. Enterprise servers, by contrast, are looking to optimize power and to operate at 100 Gbps for a single-lane interface by then.

The nearly hour-long video, entitled “IP with Near Zero Energy Budget Targeting Machine Learning Applications”, is filled with interesting information covering nearly every aspect of computing that touches on machine learning applications. While a near-zero energy budget is a bit ambitious, aggressive techniques can make huge differences in overall power needs. With that in mind, I highly suggest viewing this video.

Is there anything in VLSI layout other than “pushing polygons”? (9)

I had moved from real layout work to management, so I had little or no “hands-on” layout responsibility, but I stayed very close to my teams’ challenges in all 5 locations. During my 13 years at PMC-Sierra I was involved in many initiatives, some technical and some in developing relations with vendors. The biggest difference was that MOSAID was eager to get media coverage, while PMC was very secretive about the tools and vendors it used. In this period I had to sign many personal NDAs, as software companies wanted to ensure their ideas were kept confidential.

It started with the integration of our microprocessor division from the former QED company. They were designing processors while the rest of PMC designed SoCs. We were using a simple TSMC process and they were using a “fancy” high-end foundry that offered additional speed and had an easy process migration road-map. The task was to unify the PDK, the tools and the efforts in such a way that our teams could provide IP to the microprocessors for fast interfaces and we could use their cores for our needs. In 90 nm we developed an internal LAMBDA process between the 2 flavours, the worst common denominator!!! It was a very challenging and interesting initiative that enabled me to use my Motorola experience. Working long distance with Sinan Doluca and his team was a challenging task, but it became a long-time friendship. As a processor is very dependent on the quality of its register files, multipliers and adders, we started to work with vendors to find a datapath-friendly tool.

Sycon had a silicon compiler for datapath, as they came from the Intel microprocessor group and CAD. They developed a new cell-level solution to complement the initial block-level software. I was a very close reviewer of all these developments, and at one DAC the conference electrician had to wait until Jack Feldman finished showing me the “unreleased features” at 5 PM on Thursday evening! That was exciting!!! At the same time Cadabra, which had a silicon compiler for cells, was trying to move up to block level but was not ready yet. I decided that the best option would be to work with Pulsic. They had a full custom/digital place and route solution that could add some datapath options for microprocessor needs. I started talking to Dave Noble and Jeremy Birch about adding features like global signal pre-placement and device placement based on “pre-routes”. We managed to get the features implemented, but by that time PMC had decided to stick with a single vendor, so we lost the opportunity to use Pulsic. However, the LAMBDA flow required a lot of DRC fixing, so we got Sagantec SiFix at that time (see my previous article).

One of the big issues Cadence had was that users were reluctant to adopt new tools; most of them were using Virtuoso L, not even VXL. On top of VXL, Cadence bought a few companies (Neolinear, CCT, QDesign, etc.) with very powerful tools that had adoption issues. The main reason was the high introductory price. Each license with advanced features cost a lot of money, and nobody planned to use them for very long at a time (CPU time)… For 2 hours of routing every week, you needed to prove, in quantifiable terms, that a 150K tool increased productivity. After many discussions with Cadence, I shared with them a token-based system, an idea that came from Michael Reinhardt, former CEO of Rubicad. You pool together all the expensive licenses like VCP, VCR, VLM, MODGEN, etc. and call it … GXL. Each of these licenses now has an equivalent value in tokens. Let’s say 20 tokens for a global router, 30 for VLM, 6 for MODGEN. This way, if you know what the team needs, you purchase 60 tokens for a year and can run at the same time (CPU controlled) 2 VLM licenses, or 3 global routers, or 10 MODGEN. Once you release a license, somebody else can reuse the tokens for their needs by calling different tools from the pool. I cannot claim that GXL was my idea, but I was closely involved with Mike Stroobandt and, like many of you, benefited from it in the following years.
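The token scheme described above can be sketched as a shared pool from which each tool draws a fixed number of tokens while it runs and returns them when released. This is a minimal illustration under stated assumptions, not Cadence’s actual GXL license manager: the `TokenPool` class and `max_concurrent` helper are hypothetical, and the token costs are only the example figures from the text (20 for a global router, 30 for VLM, 6 for MODGEN).

```python
from dataclasses import dataclass, field
from typing import Dict, Optional

# Hypothetical per-tool token costs, using the illustrative figures from the text.
TOKEN_COST: Dict[str, int] = {"global_router": 20, "VLM": 30, "MODGEN": 6}


@dataclass
class TokenPool:
    """A shared pool of license tokens that any tool in the bundle can draw from."""
    capacity: int
    held: Dict[int, int] = field(default_factory=dict)  # checkout id -> tokens held
    next_id: int = 0

    def available(self) -> int:
        # Tokens not currently held by any running tool.
        return self.capacity - sum(self.held.values())

    def checkout(self, tool: str) -> Optional[int]:
        # Grant a license only if enough tokens are free; otherwise refuse (None).
        cost = TOKEN_COST[tool]
        if cost > self.available():
            return None
        self.next_id += 1
        self.held[self.next_id] = cost
        return self.next_id

    def release(self, checkout_id: int) -> None:
        # Returning a license frees its tokens for any other tool in the pool.
        self.held.pop(checkout_id, None)


def max_concurrent(pool_size: int, tool: str) -> int:
    # How many copies of a single tool the pool can run at the same time.
    return pool_size // TOKEN_COST[tool]


if __name__ == "__main__":
    pool = TokenPool(capacity=60)
    for tool in TOKEN_COST:
        print(tool, max_concurrent(pool.capacity, tool))
    first = pool.checkout("VLM")
    second = pool.checkout("VLM")
    print(pool.checkout("MODGEN"))  # refused: the two VLM seats hold all 60 tokens
    pool.release(first)
    print(pool.available())  # the released VLM seat's tokens are free again
```

With 60 tokens, the pool runs 2 VLM, 3 global routers or 10 MODGEN at once, matching the example above; the point of the design is that freed tokens are not tied to the tool that released them.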

Saying you want electromigration tools is a good start but not enough. We did solve the issue at PMC in 2002, but this was a post-layout solution and I wanted an “online as you go” option. I decided that the only way to make this topic popular was to socialise the concept with my friends in the industry and the software world. I built a short PPT and, at every occasion, presented it to Synopsys, Cadence, Mentor, ClioSoft, etc., from sales people to architects, from technical marketing to implementation guys.

I even spoke with university professors to push it. One of them, Jens Lienig, a German professor presenting on DAC panels, provided me with his papers on electromigration. Apparently I was not the only one asking for it, so Vinod Kariat and David White at Cadence promised to do something about it; the problem, as they saw it, was finding capable people to drive such an initiative. I proposed my old friends Michael McSherry & Ed Fisher. Michael was the former marketing manager for Calibre and Ed was the former engineering manager for IC Station, and both were now at Cadence. To my surprise, at the following DAC I was invited to a booth and met both of them, ready to take on the challenge. We spent some time going over the idea and the basic features. I wanted to follow up, but PMC did not agree to take part in alpha or beta testing, so I followed it from the sidelines… By the time Electrically Aware Design, or EAD as you know it, was ready for public use, I had left PMC. I enjoy all the demos and new developments of this tool, as I consider it revolutionary: it improves design and layout in the full custom world in all technologies down to 7 nm.

During my PMC time I worked with many companies to help them bring new ideas to market. From ClioSoft Laker 4 to Cadence EAD, from Mentor Graphics Calibre CB to Pulsic Animate, I always tried to push automation forward to make layout designers’ work easier and less monotonous. I reviewed a lot of specifications, I took part in product reviews and demos “for your eyes only”, and I had (and still have) a lot of fun.

I had endless debates with John Stabenow about complex features and demos (some at Cadence and some at Synopsys), with Jeremiah Cessna about MODGEN with or without PCELLS, with Olivier Arnaud about VXL grouping features, with Philippe Hurat about advanced-node automation, etc. These names are only from Cadence, and I have to thank Deana Spencer for enabling me to talk to many internal architects and developers… I had similar talks with Mentor, Synopsys and many small startups to help them translate their vision into something we, the users, could relate to… Many times things moved forward or changed direction; sometimes they disappeared, like Cadabra or Cosmos. I am sorry that Mar Hershenson did not succeed in bringing Barcelona Design or her subsequent solutions to success. I considered it a real revolution in automating layout for “template circuits”. I was lucky to meet all these industry experts, as my book was often used to explain concepts or to argue in flow debates.

We looked closely at Accelicon AVP with Mahesh Guruswamy’s help, and at Bindkey DRC cleaning tools with Micha Oren, but by the time we were ready to buy their tools the companies had been acquired and everything got cancelled. The Mixed Signal Layout group liked automation so much that Stephane Leclerc rebuilt the ACPD flow in 65 nm with VCP + VCR, and we prepared a paper for CDN Live in 2008.

By 2012 we had moved to a new technology node, so the mixed signal team decided to use automation to the maximum by using schematic-driven P & R. We needed a high-speed library for the digital parts of our analog blocks, and we ended up with a “wish list” of almost 2000 cells. We had 2 options, by hand or automated, but as this had never been done at this scale in PMC, the risk of effort/schedule overrun was big in both cases. Based on our Return On Investment (ROI) presentation, the manual solution was 4x the price of automation, so automation looked worthwhile. We used 2 layout designers, 1 CAD engineer and 1 circuit designer and, in 2.5 months, created the layouts of 2000 high-performance standard cells in 28 nm running between 1.6 and 6.2 GHz in post-layout simulation. Special thanks to Jens C. Michelsen, the COO of Nangate, who agreed to a short license “lease” and sent us a great trainer. Within one week the team had tackled all the tool issues and was able to produce. The team finished 15 days ahead of plan, and the final ROI, including tool price, training and ramp-up, showed automation to be 5x cheaper than a hand-crafted library.

Another proof that automation, not manual labour, is the future!

More to come… stay tuned for Sankalp contributions…

Dan Clein

Don’t Balkanize Automotive Safety

The Wall Street Journal reported last week that auto makers are lining up on opposite sides of the talking-car debate. Some car makers (General Motors, Toyota and Volkswagen) are pushing Wi-Fi-based dedicated short-range communications (DSRC) technology, while others (Ford, Audi and BMW) are emphasizing 5G for the same application.

“5G Race Pits Ford, BMW, against GM, Toyota”

The importance of this debate derives from the fact that both DSRC and 5G technologies offer the prospect of direct device-to-device or car-to-car communications for the purposes of avoiding collisions using the same wireless frequencies but with incompatible protocols. Both approaches enable communications between cars and, potentially, between cars and infrastructure and between cars and pedestrians.

Not only are the two means of inter-vehicle connections incompatible, they both require infrastructure that does not exist today. Estimates for the cost of deploying a complete DSRC system in the U.S. run as high as $108B. Deploying 5G will be equivalently expensive for wireless carriers.

The difference in deployment scenarios boils down to this: Federal and state departments of transportation in the U.S. (and elsewhere in the world) have little or no available funds to stand up the required DSRC network. Meanwhile, wireless carriers, such as AT&T, have stepped forward to say that they are prepared to deploy 5G technology more rapidly than they have ever deployed any previous technology.

In fact, wireless carriers are working with state regulators in the U.S. to receive permission to install the thousands of micro cells necessary to support 5G transmission. Many, if not most, of those micro cells will be installed along highways to support vehicle connectivity.

But the key to the talking car equation is the direct communications between vehicles that will enable collision avoidance applications along with the near instantaneous communication of road hazard information. Both DSRC and 5G technologies will enable these life-saving communications.

I emphasize the point because many automotive engineers are still skeptical that carriers will deploy wireless modules on their networks that will enable direct communications without network support or connectivity. The reality is that wireless carriers have been taking advantage of Wi-Fi technology to offload traffic for many years. Extending the functionality of Wi-Fi to vehicle safety applications is a small, but important and very real leap.

The bigger problem is the underlying conflict between the DSRC and 5G camps. Since DSRC and 5G share the same frequencies but cannot communicate directly, the intransigence of DSRC advocates is setting the stage for the balkanization of vehicle safety.

There is no organic demand for DSRC, beyond commercial vehicle applications successfully deployed and demonstrated by fleet market operators such as Veniam. DSRC is not found in mobile phones and has seen only limited deployment in roadside infrastructure thanks to a few dozen DOT-funded projects around the U.S. – a scenario very much like what has emerged in Europe.

Just as auto makers appear to be divided over the issue of DSRC support, state-level DOTs are similarly split. Seventeen of the 50 state-level DOTs in the U.S. sent a letter to the U.S. DOT earlier this year calling for the Federal agency to proceed with the DSRC mandate.

Letter from Coalition for Safety Sooner from individual U.S. DOTs

These states clearly appreciate the opportunity to tap into the flood of funding ($108B) necessary to support the deployment of DSRC. The remaining states are indifferent, ambivalent or out-and-out hostile to DSRC. States such as Virginia have already determined that they will waste no more of their own funds on a technology, DSRC, that is on the verge of being bypassed by the more advanced 5G network.

In the end, divisions between car makers and DOTs threaten to balkanize automotive safety while unnecessarily increasing the cost of deploying safety systems. The wireless network being deployed by carriers such as AT&T and Verizon will deliver this new safety proposition, effectively extending the range of existing vehicle sensors while expanding their capabilities.

The only path to market for DSRC is via a Federal mandate. The mandate is expected to add $300 to the cost of a new vehicle without delivering any customer value proposition for another 10-20 years, i.e. the time it will take for DSRC to become sufficiently penetrated into the broader fleet of consumer vehicles.

At the meeting of the 5G Automotive Association two weeks ago in Washington, DC, the relief of the DOT representatives in the room was palpable when an executive from AT&T detailed the company’s plans for 5G infrastructure investment. No comparable plan is on offer from the Federal government.

The best news for the DSRC side of the debate is that the estimated $700M spent thus far on DSRC development over the past 15+ years will not go to waste. The coding and standards and testing created for DSRC are now being applied to LTE-based C-V2X technology as well as 5G. C-V2X cellular networks will support PC5 mode 4 interfaces for direct vehicle communications. At the DC event Ford and Audi demonstrated collision avoidance maneuvers using C-V2X.

It’s time for car makers and DOTs to align on the implementation and deployment of 5G technology and abandon the expensive distraction known as DSRC. DSRC will preserve its relevance in the fleet industry and maybe for emergency response vehicles.

Safety systems in cars are expensive enough without requiring auto makers to simultaneously support two incompatible wireless safety systems. DOTs, too, are insufficiently funded to support two paths to saving lives.

Worldwide Design IP Revenue Grew 12.4% in 2017

When starting SemiWiki we focused on three market segments: EDA, IP, and the Foundries. Founding SemiWiki bloggers Daniel Payne and Paul McLellan were popular EDA bloggers with their own sites, and I blogged about the foundries, so we were able to combine our blogs and hit the ground running. For IP I recruited Dr. Eric Esteve, who had never blogged before but took to it quite quickly. I knew Eric from his IP reports during my previous position at Virage Logic, where I worked with the foundries.

Since going online in 2011 SemiWiki has published 693 IP related blogs with 3,572,124 views. Eric has written 277 of those blogs averaging close to 6,000 views per blog. Today Eric is by far the most respected IP analyst with the most detailed and accurate reports and it is an honor to work with him, absolutely.

According to the Design IP Report from IPnest, the market is still doing very well, with YoY growth of 12.4% in 2017. The ARM Group of SoftBank (previously known as ARM Holdings) is again a strong #1, with IP revenues (licenses plus royalties) of $1,660 million and 46.2% market share, followed by Synopsys, growing by 18% to $525 million and 14.7% share. Broadcom (the combination of Avago, LSI Logic and Broadcom) enters the top 3, replacing Imagination. Both Cadence and CEVA are showing 20%+ growth in 2017.

IPnest ranks IP vendors across 11 categories. The CPU IP category is the largest, with about 42% of design IP revenues. There are strong disparities between CPU, DSP, and GPU & ISP: the CPU category weighs about 9x the DSP category and 5x the GPU/ISP category.

ARM is obviously #1 in the CPU category, and will probably keep this position for a long time due to the royalty mechanism. Nevertheless, we can see that ARM CPU IP license revenue declined by 6.8% YoY, more than compensated by royalty revenue growing by 17.8%. The reasons may be multiple. After the ARM acquisition by SoftBank, the accounting policy was changed, creating what Eric calls an “artifact”. However, in my opinion we are starting to see the impact of RISC-V becoming a credible alternative to the ARM CPU hegemony. The 2019 Design IP report should confirm this.

In the Processor group (CPU + DSP + GPU & ISP), Imagination Technologies (IMG) is still #2, but I expect their royalty revenue to collapse when Apple effectively moves to an internal GPU solution. Among the followers, both CEVA and Cadence grew 20%+ in 2017, so it wouldn’t be surprising to see one of these two companies become #2 next year. In my opinion it will be CEVA, but in any case the ranking in the Processor group will be disrupted next year.

The next group after Processor is the Physical IP, including: Wired Interface IP, SRAM memory compiler, other Memory Compilers, Physical Libraries, Analog and Mixed-Signal and Wireless Interface IP.

If we look at the Wired Interface IP category, it’s now at $735 million (20% YoY growth) and 20.5% of the total. Synopsys is the clear leader there, with about 45% market share, and it also leads the Physical IP group, with 35% market share.

If you take a look at the picture above you can see an interesting trend: from 2016 to 2017, Wired Interface IP as a portion of the total moves from 19% to 20.5%, while Processor IP declines from 58.3% to 56.4%.

As forecasted by IPnest in the “Interface IP Survey & Forecast”, the Wired Interface IP should reach $1 billion in a few years (2021 or 2022). Even though ARM is giving up in China through a so-called “joint venture”, the Wired Interface IP category will remain an island of (growing) stability in the 2020s.

Increasing complexity: can you imagine that the DDR5 memory controller PHY is now running at 4400 Mb/s? This is also 4.4 Gb/s or almost the PCIe Gen-2 data rate (5 Gb/s), which was one of the IP stars 10 years ago.

Eric chaired a panel at DAC 2017, “Growing IP market despite semi consolidation”, and the panel came to a consensus: the IP market in 2010-2020 is like the EDA market in 1980-1990. Outsourcing became the rule, and the result was an EDA market completely externalized by 2000. As long as a function is not perceived as a differentiator by a design team, it can be outsourced, and it will become an IP sold by commercial vendors (see the Top 10 list).

Bottom line: If you apply a 12% CAGR for the next 5 years you can easily predict a $6 billion IP market in 2022.
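The $6 billion figure can be sanity-checked with a back-of-the-envelope calculation. As a sketch, assuming the 2017 total can be backed out by dividing any vendor’s or category’s revenue by its quoted share (our shortcut, not IPnest’s methodology; the helper names are hypothetical), each of the quoted data points implies a 2017 market of roughly $3.6 billion, which compounds at 12% for 5 years to roughly $6.3 billion.

```python
# 2017 figures quoted above, in $ million, with their share of total design IP revenue.
QUOTED_2017 = {
    "ARM": (1660, 0.462),
    "Synopsys": (525, 0.147),
    "Wired Interface IP": (735, 0.205),
}


def implied_total(revenue: float, share: float) -> float:
    """Back out the total market size from one segment's revenue and its share."""
    return revenue / share


def project(base: float, cagr: float, years: int) -> float:
    """Compound a base value forward at a constant annual growth rate."""
    return base * (1.0 + cagr) ** years


if __name__ == "__main__":
    for name, (revenue, share) in QUOTED_2017.items():
        total_2017 = implied_total(revenue, share)
        total_2022 = project(total_2017, cagr=0.12, years=5)
        print(f"{name}: 2017 ~${total_2017:,.0f}M -> 2022 ~${total_2022:,.0f}M")
```

Because 1.12 raised to the 5th power is about 1.76, any 2017 base near $3.6 billion lands a little above $6 billion in 2022, consistent with the bottom line above.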

To buy this report or talk to Eric, you can contact him at eric.esteve@ip-nest.com. He will also be at DAC again this year if you want to meet him.

TSMC Technologies for Mobile and HPC

During the TSMC 2018 Technology Symposium, Dr. B.J. Woo, TSMC VP of Business Development, presented market trends in mobile applications and HPC, and shared TSMC’s progress on breakthrough technology offerings that serve these two market segments.

Both 5G and AI are taking center stage in driving high double-digit growth in data demand. For the mobile segment, the move from 4G LTE to 5G requires higher modem speeds (from 1 Gbps to 10 Gbps), a 50% faster CPU, a 2x faster GPU, double the transistor density, and a 3X performance increase in AI engines to a 3 TOPS (trillion operations per second) target, all without much increase in power. In this market segment, TSMC is ushering in the move from 28HPC+ towards 16FFC.

On the cloud side, data center switches demand double the throughput, from 12.8 Tbps to 25.6 Tbps, as computing demand moves towards the network edge. Similarly, the drive towards double memory bandwidth, a 3 to 4x increase in AI accelerator throughput and up to 4x transistor density improvement is under way.

N7 Technology Progress

Dr. Woo stated that delivering the high density and power efficiency needed for low-latency, data-intensive AI applications is key to the success of the TSMC N10 process, which has also enabled AI in the smartphone space. Meanwhile, the N7 node has been making good progress in providing excellent PPA values, with >3x density improvement, >35% speed gain and >65% power reduction over its 16nm predecessor.

The N7 HPC track provides a 13% speed gain over N7 mobile (7.5T vs. 6T cells), and it has passed the yield and qualification tests (SRAM, FEOL, MEOL, BEOL) and reached MP-ready D0. A contributing factor is TSMC’s successful leveraging of learning from N10 D0. N7 is targeted for Fab15.

The N7 IP ecosystem is also ready, with over 50 tapeouts slated by the end of 2018 for mobile, HPC, automotive and servers. 7nm is expected to be a long-lived node, like its 28nm and 16nm predecessors. The combination of mild pitch scaling from N10 to N7, the migration from immersion to EUV, and a denser standard cell architecture yields a significant overall improvement.

EUV Adoption and N7+ Process Node

She shared progress on applying EUV in N7+. Used on selected layers, EUV reduces process complexity and improves pattern fidelity. It also enables future technology scaling while offering better performance, yield and shorter cycle time. Dr. Woo showed a chart in which via resistance has a much tighter distribution in N7+ with EUV than with immersion, reflecting better uniformity.

The N7+ value proposition includes delivering 20% more logic density over N7, 10% lower power at same speed, and additional performance improvements anticipated from the ongoing collaboration with customers.

N7+ will also deliver a double-digit increase in good die over the N7 node, while capitalizing on the same equipment and tooling. She claimed that it has lower defect density than other foundries, as well as 256Mb SRAM yield and device performance comparable to the N7 baseline. TSMC provides easy IP porting (layout and re-K) from N7 to N7+ for design entities that do not need to be redesigned.

HPC and N7+ Process Node

For the HPC platform solution, the move from N7 to N7+ involves the incorporation of EUV, a denser standard cell architecture, ultra-low Vt transistors, high-performance cells, SHDMIM (Super High Density MIM) capacitance, larger CPP (Contacted Poly Pitch) and 1-fin cells.

N7+ offers better performance and power through an innovative standard cell architecture. It allows higher fin density in the same footprint, for a 3% speedup. Conversely, using single-fin cells in non-timing-critical areas reduces capacitance by about 20% and, in turn, dynamic power.
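The link between capacitance and dynamic power follows from the standard CMOS switching-power relation P = alpha * C * Vdd^2 * f. The sketch below assumes only that relation plus hypothetical values for the activity factor, supply, frequency and total switched capacitance, and shows how a ~20% capacitance cut on converted cells translates into block-level savings when only part of the block is non-timing-critical:

```python
def dynamic_power(alpha: float, c_farads: float, vdd: float, f_hz: float) -> float:
    """Switching power of CMOS logic: P = alpha * C * Vdd^2 * f."""
    return alpha * c_farads * vdd ** 2 * f_hz


def block_power(alpha, c_total, vdd, f_hz, single_fin_share, c_reduction=0.20):
    """Dynamic power after moving a share of the switched capacitance to single-fin cells.

    single_fin_share: fraction of c_total sitting in non-timing-critical cells
    that get converted; c_reduction: capacitance saved on those cells (~20%).
    """
    c_kept = c_total * (1.0 - single_fin_share)
    c_converted = c_total * single_fin_share * (1.0 - c_reduction)
    return dynamic_power(alpha, c_kept + c_converted, vdd, f_hz)


# Hypothetical operating point, for illustration only.
ALPHA, C_TOTAL, VDD, FREQ = 0.15, 1e-9, 0.75, 2e9

baseline = block_power(ALPHA, C_TOTAL, VDD, FREQ, single_fin_share=0.0)
for share in (0.3, 0.6, 1.0):
    p = block_power(ALPHA, C_TOTAL, VDD, FREQ, single_fin_share=share)
    print(f"{share:.0%} of capacitance converted: {1 - p / baseline:.1%} less dynamic power")
```

Because power is linear in C at fixed alpha, Vdd and f, the block-level saving is simply 20% times the converted share, so the loop reports roughly 6%, 12% and 20%.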

The new structures also enhance MIM capacitance and utilization rate, giving HPC designs a 3% to 5% performance boost. The N7+ design kit is ready to support the IP ecosystem.

N5 Value Proposition

N5 introduces a new elVt (extreme low Vt) option offering up to a 25% maximum speed-up versus N7, and incorporates aggressive scaling and full-fledged EUV. N5 has made good progress, with double-digit yield on 256Mb SRAM. Risk production is slated for 1H2019.

Dr. Woo also shared a few metrics relative to the N7 process (the test vehicles were an ARM A72 CPU core and an internal ring oscillator):

– 15% speed improvement (up to 25% max speed)

– 30% power reduction

– 1.8x increased logic density through innovative layout and routing

– 1.3x analog density improvement through poly pitch shrink and selective gate length (Lg) and fin count, yielding a more structured layout (“brick-like” patterns)
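The density figures are easiest to read as area. As a rough sketch, assume a hypothetical N7 block split into logic, analog and other content; only the quoted 1.8x logic and 1.3x analog factors are applied, and the other content is deliberately left unscaled because no factor was given for it:

```python
def n5_area_estimate(logic_mm2: float, analog_mm2: float, other_mm2: float = 0.0,
                     logic_gain: float = 1.8, analog_gain: float = 1.3) -> float:
    """Estimate the N5 area of a block laid out in N7.

    logic_gain / analog_gain are the density factors quoted in the talk.
    other_mm2 (SRAM, I/O, etc.) is ASSUMED not to scale, a conservative
    placeholder since no factor was quoted for it.
    """
    return logic_mm2 / logic_gain + analog_mm2 / analog_gain + other_mm2


# Hypothetical N7 block: 9.0 mm^2 logic, 1.3 mm^2 analog, 1.0 mm^2 other.
n7_area = 9.0 + 1.3 + 1.0
n5_area = n5_area_estimate(9.0, 1.3, 1.0)
print(f"N7 {n7_area:.1f} mm^2 -> N5 {n5_area:.1f} mm^2 ({n7_area / n5_area:.2f}x overall)")
```

Because the analog and other content shrink less, this block goes from 11.3 mm² to 7.0 mm², an overall shrink of about 1.6x rather than the headline 1.8x.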

16FFC/12FFC Technologies

Dr. Woo covered RF technologies and the roadmap (more on this in a subsequent blog on IoT and automotive). She mentioned that the N16 and N12 FinFET-based platform technologies have broad coverage, addressing HPC, mobile, consumer and automotive. Both 16FFC and 12FFC have shown strong adoption, with over 220 tapeouts. 12FFC should deliver a 10% speed gain, 20% power reduction and 20% higher logic density versus 16FFC through dual-pitch BEOL, a device boost, a 6-track standard cell library and 0.5V VCCmin.

To recap, AI and 5G are key drivers of both mobile and HPC product evolution. Along these lines, TSMC keeps pushing the PPA (Power, Performance and Area) envelope for mainstream products while delivering leading RF technologies to keep pace with technology-accelerated designs in these segments.

Also read: Top 10 Highlights of the TSMC 2018 Technology Symposium

Converter Circuit Optimization Gets Powerful New Tool

DC converter circuit efficiency can have a big effect on the battery life of mobile devices. It can also affect power efficiency for wall-powered circuits. Even before parasitics are factored in, converter circuit designers have a lot of issues to contend with. Optimizing circuit operation is essential for giving consumers what they want. Switching converters are light years ahead of old-school transformer-based designs. However, switching converters often operate at high frequencies, which creates challenges for efficient operation. In addition to parasitic inductance and the extra current from the reverse recovery effect, the PowerMOS devices themselves do not behave as ideal devices.

It’s necessary to understand that PowerMOS devices are really an assembly of large numbers of parallel intrinsic devices with a complex, distributed structure. As such, switching does not occur simultaneously across all the intrinsic devices. Within a PowerMOS device, RC delays greatly affect the Vgs present at the gates of the low-side and high-side transistors. Previously it was difficult to run simulations that take this into consideration, because fine-grained extraction of gate, source and drain interconnect is not a good application for traditional rule-based extractors. Until now, designers have struggled with this lack of visibility.
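To see why switching is not simultaneous, one can model a gate finger as a uniform RC ladder: each segment has a series gate-interconnect resistance and a gate capacitance, and the driver steps at one end. This is a textbook distributed-RC sketch with hypothetical element values, integrated with plain forward Euler; it is not Magwel's extraction or device model:

```python
def gate_ladder_response(n_seg, r_seg, c_seg, v_drive, v_th, t_end, dt):
    """Step response of a uniform RC ladder standing in for a distributed gate.

    Node 0 is nearest the gate driver, node n_seg - 1 is the far end.
    Returns (crossing_times, max_spread): the time at which each node first
    reaches v_th (None if it never does) and the largest near-end minus
    far-end gate voltage seen during the transient.
    """
    # Explicit (forward) Euler is stable and keeps node voltages ordered
    # only when dt <= r_seg * c_seg / 2.
    if dt > r_seg * c_seg / 2:
        raise ValueError("dt too large for forward Euler on this ladder")
    v = [0.0] * n_seg
    crossing = [None] * n_seg
    max_spread = 0.0
    for step in range(1, int(round(t_end / dt)) + 1):
        new_v = v[:]
        for k in range(n_seg):
            v_left = v_drive if k == 0 else v[k - 1]
            i_in = (v_left - v[k]) / r_seg                               # from driver side
            i_out = (v[k] - v[k + 1]) / r_seg if k + 1 < n_seg else 0.0  # toward far end
            new_v[k] = v[k] + dt * (i_in - i_out) / c_seg
        v = new_v
        for k in range(n_seg):
            if crossing[k] is None and v[k] >= v_th:
                crossing[k] = step * dt
        max_spread = max(max_spread, v[0] - v[-1])
    return crossing, max_spread


# Hypothetical values: 20 segments, 2 ohm and 100 pF per segment, 10 V drive, 3 V threshold.
times, spread = gate_ladder_response(20, 2.0, 100e-12, v_drive=10.0, v_th=3.0,
                                     t_end=100e-9, dt=10e-12)
print(f"near-end segment turns on at {times[0] * 1e9:.2f} ns, far end at {times[-1] * 1e9:.2f} ns")
print(f"largest near/far Vgs difference: {spread:.2f} V")
```

The near-end segment reaches threshold far earlier than the far end, so for part of the transition different regions of the same device see very different Vgs.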

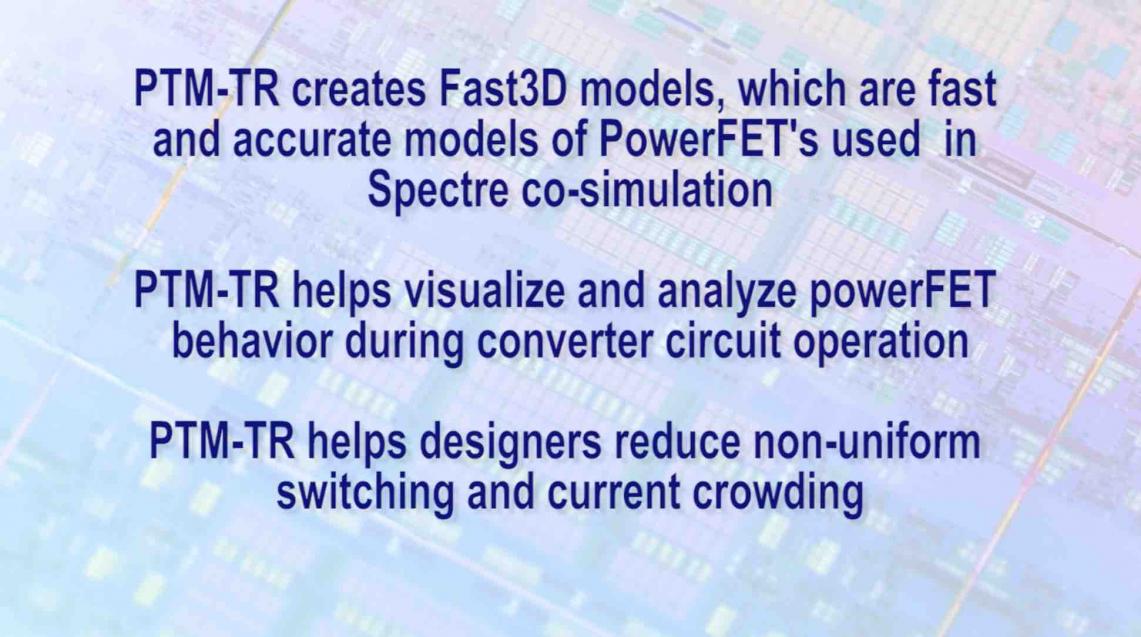

Magwel recently released a tool specifically aimed at comprehensive and accurate simulation of converter circuits, including the complex internals of PowerMOS devices. Magwel’s PTM-TR does several unique things to provide transparency into the detailed switching behavior of PowerMOS devices. PTM-TR uses a solver-based extractor to accurately determine parasitics for the internal metallization within PowerMOS devices. The gate regions are divided according to user-set parameters, and the intrinsic device model is applied to create a simulation view of the device that incorporates its full internal structure. This model is known as a Fast3D model, and PTM-TR uses it with Cadence Spectre® to co-simulate dynamic gate switching behavior at each time step of circuit operation.

Because the Fast3D model is used in conjunction with Spectre circuit simulation, it can be used with test benches or for any desired simulation, such as corner analysis. PTM-TR also has the benefit of graphically showing the internal field view of the device at each time step, a direct result of the co-simulation. Magwel has a fascinating video on its website that shows how the field view of the PowerMOS device can help in understanding dynamic switching performance.

The Magwel video highlights how the Vgs reported in simulation can differ from the Vgs at each individual gate location. At one time step in the transition of the half-bridge, the delta in Vgs is ~2V. This can have a large effect on shoot-through current. Also, during early switching, when only certain sections of the device are turned on, higher than expected current densities are possible, leading to EM and thermal issues. With PTM-TR, designers can modify and test PowerMOS devices to achieve optimal performance.
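The current-density point is simple arithmetic if one assumes that the load current is held roughly constant during the transition (as a converter's inductor tends to do) and is shared equally by whichever segments are already on. Both assumptions and the device dimensions below are illustrative:

```python
def current_density_ma_per_um(load_current_a, n_segments, n_on, seg_width_um):
    """Average channel current density (mA per um of width) when n_on of n_segments conduct.

    ASSUMES the load current is fixed during the transition and shared
    equally by the segments that are on.
    """
    if not 1 <= n_on <= n_segments:
        raise ValueError("n_on must be between 1 and n_segments")
    return load_current_a * 1e3 / (n_on * seg_width_um)


# Hypothetical device: 10 A load, 100 segments of 1,000 um each.
full = current_density_ma_per_um(10.0, 100, 100, 1000.0)
early = current_density_ma_per_um(10.0, 100, 25, 1000.0)
print(f"fully on: {full:.2f} mA/um, 25% on: {early:.2f} mA/um ({early / full:.0f}x)")
```

With a quarter of the segments conducting, the average density is 4x the fully-on value, before accounting for any uneven sharing among the segments that are on.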

PTM-TR is part of Magwel’s complete family of power transistor modeling tools. The base product, PTM, reports Rdson along with static power and current per layer. PTM-ET gives insight into the combined electro-thermal performance of PowerMOS devices, using thermal models that can include heat sources and sinks on the die as well as the thermal properties of the package.
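Rdson of a device built from many parallel intrinsic transistors depends on the metal feeding each one, not just on the channels. A crude sketch, assuming each segment sees its own independent series metal resistance (real metallization is a shared, distributed network, which is why a solver-based extractor is needed), with hypothetical resistance values:

```python
def effective_rdson(r_channel_ohm, r_metal_ohm):
    """Parallel combination of segments, each modeled as channel + its own metal path.

    Simplification: metal paths are treated as independent series resistors.
    """
    if len(r_channel_ohm) != len(r_metal_ohm):
        raise ValueError("need one metal resistance per segment")
    conductance = sum(1.0 / (rc + rm) for rc, rm in zip(r_channel_ohm, r_metal_ohm))
    return 1.0 / conductance


# Hypothetical: four identical 4-ohm segments, increasingly far from the pads.
r_ch = [4.0, 4.0, 4.0, 4.0]
ideal = effective_rdson(r_ch, [0.0] * 4)            # metal ignored
real = effective_rdson(r_ch, [0.0, 1.0, 2.0, 3.0])  # each segment sees more metal
print(f"Rdson ignoring metal: {ideal:.2f} ohm, with metal: {real:.2f} ohm")
```

Even in this toy case, metal growing from 0 to 3 ohm across four 4-ohm segments raises Rdson from 1.0 ohm to about 1.32 ohm, and it also skews current toward the best-connected segments.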

The PTM-TR video can be viewed on the Magwel website. More information about the PTM product family and Magwel’s solutions for ESD and power distribution network analysis can be found there as well.

Semiconductor Specialization Drives New Industry Structure

When traveling the world there are the things that you see and the people that you meet. I have been very fortunate to meet some of the most amazing people, and one of those people is Dr. Walden Rhines. Wally spent the first half of his career in semiconductors at TI and the second half in EDA with Mentor Graphics, which gives him a cyborg-like quality, absolutely.


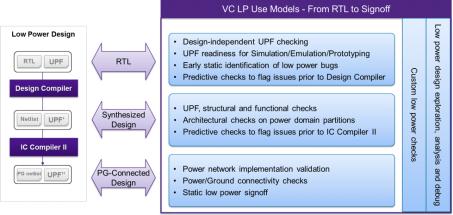

Low Power Verification Shifting Left

I normally think of shift left as a way to move functional verification earlier in design, to compress the overall design cycle. But it can also make sense in other contexts, one particularly important example being power intent verification.

If you know anything about power intent, you know that it affects pretty much all aspects of design, from architecture through verification to the PG netlist. If the power intent description (in UPF) isn’t correct and synchronized with the design at each of these stages, you can run into painful rework. You will verify carefully at each handoff, but design and power intent both iterate and transform through the flow, so keeping them aligned can consume a lot of time (and schedule).

One important step towards minimizing the impact is to ensure that interpretation is consistent across the design flow. UPF is a standard, but that doesn’t guarantee all tools will interpret commands in exactly the same way, especially where the standard does not completely bound interpretation. This means that, like it or not, closure between synthesis, implementation and verification in mixed-vendor flows is likely to be more difficult. That’s not to say you couldn’t have problems of this kind in flows from a single vendor, but Synopsys has made a special effort to maximize consistency in interpreting power intent across its toolset.

A second consideration is how you verify power intent and at what stage in the design. There are static and dynamic ways to verify this intent (VC LP static checking and VCS NLP power-aware simulation, respectively). Each naturally excels at certain kinds of checks. You couldn’t meaningfully check in simulation that you are using the appropriate level shifters (LS) wherever you need them, but you can in VC LP. Conversely, you can’t check the correct ordering and sequencing of, say, a soft reset in VC LP, but you can in power-aware simulation.

Naturally there are grey areas in this ideal division of techniques and those are starting to draw more attention as design and verification teams push to reduce their cycle times. Simulation is always going to be time-consuming on big designs; simply getting through power-on-reset takes time, before you can start checking detailed power behavior. As usual, the more bugs that can be flushed out before that time-consuming simulation starts, the more you can focus on finding difficult functional bugs. This is why we build smoke tests.

In low power verification this is not a second-order optimization. Power-aware sims can take days, whereas a VC LP run can complete in an hour. For example, an incorrect isolation strategy could trigger X-propagation in simulation, which burns up not only run time but also debug time. Improved static checking that traps at least some of these cases then becomes more than a signoff step; it optimizes the dynamic power verification flow. Hello, shift left.
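The X-propagation failure mode can be illustrated with three-valued logic (0, 1, X). This is a hypothetical toy model, assuming that power-aware simulation corrupts the outputs of a powered-down domain to X and that isolation clamps a crossing signal to a fixed value; it is not how VCS NLP is implemented:

```python
X = "X"  # unknown value, as produced for a powered-down domain


def and_gate(a, b):
    """Three-valued AND: a 0 on either input dominates, otherwise any X yields X."""
    if a == 0 or b == 0:
        return 0
    if a == X or b == X:
        return X
    return 1


def powered_output(value, domain_on):
    """Outputs of a powered-down domain are corrupted to X."""
    return value if domain_on else X


def isolation_cell(value, iso_enable, clamp=0):
    """Clamp-style isolation: drive the clamp value while isolation is enabled."""
    return clamp if iso_enable else value


def sink_sees(domain_on, iso_enable, sink_enable=1):
    """Value seen by a gate in an always-on sink domain fed from the source domain."""
    src = powered_output(1, domain_on)
    return and_gate(isolation_cell(src, iso_enable), sink_enable)


print("source off, isolation enabled:", sink_sees(domain_on=False, iso_enable=True))
print("source off, isolation missing:", sink_sees(domain_on=False, iso_enable=False))
```

With isolation enabled the sink sees a clean 0; with isolation missing or mis-controlled the X flows onward, and in a real design each downstream X has to be debugged.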

This grey area is ripe for checks. Checks already provided by VC LP include whether a global clock or reset signal passes through a buffer (or other gate) in a switchable power domain; this can obviously lead to problems when that domain is off. Similarly, a signal that controls isolation between two domains but is sourced in an unrelated switchable domain will never switch out of isolation while that domain is off. These are the kinds of problems that can waste simulation cycles. Synopsys tells me they continue to add more checks of this type to minimize these kinds of problems.
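Both checks are structural, which is why they can run statically. A minimal sketch over a hypothetical, flattened connectivity model (the `Net` fields and domain names are illustrative, not VC LP's data model or rule names):

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Net:
    name: str
    kind: str                       # "clock", "reset", "iso_ctrl" or "data"
    driver_domain: str              # domain of the cell driving the net
    through_domains: tuple = ()     # domains of buffers/gates along the net
    isolates: tuple = ()            # for iso_ctrl: (source_domain, sink_domain)


def lint_power_domains(switchable, nets):
    """switchable: dict mapping domain -> True if the domain can be powered off."""
    issues = []
    for net in nets:
        if net.kind in ("clock", "reset"):
            # Global clock/reset buffered inside a domain that may be off.
            for dom in net.through_domains:
                if switchable[dom]:
                    issues.append(f"{net.name}: global {net.kind} buffered in switchable domain {dom}")
        elif net.kind == "iso_ctrl":
            # Isolation control driven from a switchable domain unrelated to the crossing.
            src = net.driver_domain
            if switchable[src] and src not in net.isolates:
                issues.append(f"{net.name}: isolation control for {net.isolates} "
                              f"driven from unrelated switchable domain {src}")
    return issues


# Hypothetical design.
switchable = {"AON": False, "CPU": True, "GPU": True, "MEM": False}
nets = [
    Net("clk_main", "clock", "AON", ("AON", "CPU", "AON")),
    Net("rst_n", "reset", "AON", ("AON",)),
    Net("iso_cpu_en", "iso_ctrl", "GPU", isolates=("CPU", "MEM")),
    Net("iso_gpu_en", "iso_ctrl", "AON", isolates=("GPU", "MEM")),
]
issues = lint_power_domains(switchable, nets)
for issue in issues:
    print(issue)
```

The sketch only covers the two cases described above; other patterns, such as an isolation control driven from the very domain being isolated, would need rules of their own.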

Another capability that I have always thought would be useful is to be able to check UPF independent of the RTL. After all, the RTL may not yet be ready or it may be rapidly changing; that shouldn’t mean that the power intent developer is stuck being able to write UPF but not check its validity. VC LP apparently provides this capability, allowing you to check your UPF standalone for completeness and correctness; given the power/state table definition, are all appropriate strategies defined, for isolation and level-shifters for example?
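A standalone check of this kind needs only the power state table. The sketch below is a simplification that uses plain Python data rather than parsed UPF (in UPF itself these strategies come from commands such as `set_isolation` and `set_level_shifter`). It assumes any pair of domains may communicate, requires isolation wherever a source domain can be off while its sink is on, and requires level shifting wherever both are on at different voltages. Domain names and voltages are hypothetical:

```python
from itertools import permutations


def required_strategies(pst):
    """pst: list of power states, each a dict mapping domain -> supply voltage (0.0 = off).

    Returns (need_iso, need_ls) as sets of (source_domain, sink_domain) pairs.
    """
    need_iso, need_ls = set(), set()
    for src, dst in permutations(sorted(pst[0]), 2):
        for state in pst:
            v_src, v_dst = state[src], state[dst]
            if v_src == 0.0 and v_dst > 0.0:
                need_iso.add((src, dst))   # source can be off while sink is on
            elif v_src > 0.0 and v_dst > 0.0 and v_src != v_dst:
                need_ls.add((src, dst))    # both on, at different voltages
    return need_iso, need_ls


def missing_strategies(pst, iso_defined, ls_defined):
    """Pairs that need a strategy under the PST but have none declared."""
    need_iso, need_ls = required_strategies(pst)
    return sorted(need_iso - iso_defined), sorted(need_ls - ls_defined)


pst = [
    {"AON": 0.8, "CPU": 0.9, "DSP": 0.8},   # all domains on
    {"AON": 0.8, "CPU": 0.0, "DSP": 0.8},   # CPU powered down
]
iso_defined = {("CPU", "AON")}
ls_defined = {("AON", "CPU"), ("CPU", "AON"), ("CPU", "DSP"), ("DSP", "CPU")}
missing_iso, missing_ls = missing_strategies(pst, iso_defined, ls_defined)
print("missing isolation:", missing_iso)
print("missing level shifters:", missing_ls)
```

Here the CPU-to-DSP crossing lacks an isolation strategy. Once RTL is available, the required set could be narrowed to domain pairs that actually have crossings.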

Prior to implementation, VC LP runs predictive checks for DC/ICC2, looking for potential synthesis or P&R issues, such as incorrect or missing library cells and signal routing problems. And of course, for low power signoff, it runs checks on the PG netlist, looking for missing or incorrect power/ground connections in each domain. That still delivers the signoff value you ultimately need, but now with added capabilities for shifting low power verification left. You can learn more about VC LP HERE.
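At the PG-netlist stage, the core question is whether every cell's power and ground pins land on the supply nets of its domain. A minimal sketch, assuming a single VDD/VSS pin pair per cell and a hypothetical domain-to-supply map (real signoff also has to handle secondary supplies on isolation, retention and level-shifter cells):

```python
def check_pg_connections(domain_supplies, instances):
    """Flag missing or incorrect power/ground pin connections.

    domain_supplies: domain -> {pin_name: expected_supply_net}
    instances: iterable of (instance_name, domain, {pin_name: connected_net})
    """
    issues = []
    for inst, domain, conns in instances:
        for pin, expected in domain_supplies[domain].items():
            actual = conns.get(pin)
            if actual is None:
                issues.append(f"{inst}: {pin} unconnected, expected {expected}")
            elif actual != expected:
                issues.append(f"{inst}: {pin} tied to {actual}, expected {expected}")
    return issues


# Hypothetical netlist fragment; pin and net names are illustrative only.
supplies = {
    "AON": {"VDD": "VDD_AON", "VSS": "VSS"},
    "CPU": {"VDD": "VDD_CPU_SW", "VSS": "VSS"},   # switched supply for the CPU domain
}
instances = [
    ("u_ctrl", "AON", {"VDD": "VDD_AON", "VSS": "VSS"}),
    ("u_alu", "CPU", {"VDD": "VDD_AON", "VSS": "VSS"}),   # wrong supply
    ("u_reg", "CPU", {"VSS": "VSS"}),                     # VDD missing
]
for issue in check_pg_connections(supplies, instances):
    print(issue)
```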