If you have read the white paper recently launched by Dolphin, “New Power Management IP Solution from Dolphin Integration can dramatically increase SoC Energy Efficiency”, you already know the theory. That is a good basis for going further and discovering some real-life examples, such as a Bluetooth Low Energy (BLE) chip in GF 22FDX. Because 22nm is an advanced node compared with 90nm or 65nm, a circuit targeting 22nm benefits from lower dynamic power (at the same frequency), but its leakage power increases dramatically.

In fact, the leakage power increases exponentially with process shrink, as clearly appears in the second picture below. If you want your SoC to get the maximum benefit from this advanced node in an IoT application, you will have to deal seriously with the power consumption associated with leakage.

The webinar will address two aspects of power efficiency, as shown in this picture from STMicroelectronics (“© STMicroelectronics. Used with permission.”): silicon technology, by targeting FDSOI, and system power management.

A remark about the picture below, which shows how power consumption (active and leakage) evolves with the technology node, from 90nm to 22nm. If you read too quickly, you may think that the dynamic power also increases with process shrink. This is true in watts per cm², but don’t forget the shrink! Your SoC area in 22nm is far smaller than in 65nm or 90nm, and the product Area × Power/cm² is clearly better when you integrate the same number of gates in 22nm as in 90nm. If you want to increase the battery lifetime of your IoT system, your main concern is to reduce the leakage power by using the proper techniques, described in this webinar.
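The area-times-density argument can be checked with a few lines of arithmetic. The sketch below is only an illustration: the die area, the two power densities and the ideal quadratic area shrink are all assumptions chosen for the example, not figures from the picture or from the webinar.

```python
def total_power_mw(area_cm2, density_mw_per_cm2):
    """Total power is die area multiplied by power density."""
    return area_cm2 * density_mw_per_cm2


def scaled_area_cm2(area_cm2, old_node_nm, new_node_nm):
    """Idealized shrink (assumption): area scales with the square of the node ratio."""
    return area_cm2 * (new_node_nm / old_node_nm) ** 2


# Hypothetical numbers, chosen only to illustrate the reasoning.
AREA_90_CM2 = 0.10   # assumed area of the logic in 90nm
DENSITY_90 = 100.0   # assumed dynamic power density in 90nm, mW/cm^2
DENSITY_22 = 400.0   # assumed 4x higher power density in 22nm, mW/cm^2

area_22 = scaled_area_cm2(AREA_90_CM2, 90, 22)
p90 = total_power_mw(AREA_90_CM2, DENSITY_90)
p22 = total_power_mw(area_22, DENSITY_22)

print(f"90nm: {AREA_90_CM2:.4f} cm^2 -> {p90:.2f} mW")
print(f"22nm: {area_22:.4f} cm^2 -> {p22:.2f} mW")
```

With these assumed values, the power density is four times higher in 22nm, yet the total dynamic power for the same logic is lower because the area shrinks faster than the density grows.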

Dolphin presents typical current consumption figures for a 2.4 GHz BLE RF chip when active (Rx/Tx/processing/standby) and in sleep or deep sleep mode, and calculates the duty cycle for a specific application: the chip spends 99.966% of its time in sleep. This means that power management techniques that reduce leakage power pay off 99.966% of the time, so the designer should apply them. This holds for this BLE RF IC example as well as for IoT sensors, which typically spend most of their time in sleep.
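To see why sleep current matters so much at such a duty cycle, here is a minimal sketch of the time-weighted average current and the resulting ideal battery life. It reads the 99.966% figure as the share of time spent in sleep. The active current, the two sleep currents and the battery capacity are hypothetical values, not Dolphin’s data, and the model ignores battery self-discharge and regulator losses.

```python
def average_current_ua(sleep_fraction, active_ua, sleep_ua):
    """Time-weighted average current in microamps."""
    active_fraction = 1.0 - sleep_fraction
    return active_fraction * active_ua + sleep_fraction * sleep_ua


def battery_life_years(capacity_mah, avg_ua):
    """Ideal battery life; ignores self-discharge and converter losses."""
    hours = capacity_mah * 1000.0 / avg_ua
    return hours / (24 * 365)


SLEEP_FRACTION = 0.99966  # 99.966%, read as time spent in sleep
ACTIVE_UA = 5000.0        # hypothetical average active current (5 mA)
CAPACITY_MAH = 220.0      # hypothetical coin cell capacity

# Hypothetical sleep currents before and after leakage reduction.
for label, sleep_ua in (("before", 3.0), ("after", 0.3)):
    avg = average_current_ua(SLEEP_FRACTION, ACTIVE_UA, sleep_ua)
    years = battery_life_years(CAPACITY_MAH, avg)
    print(f"{label}: average {avg:.2f} uA, about {years:.1f} years")
```

Under these assumptions the sleep current accounts for most of the average current before optimization, so cutting it by a factor of ten more than doubles the estimated battery life even though the active current is unchanged.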

Improving SoC energy efficiency is clearly a must for IoT systems and any battery-powered device. The first and obvious reason is to extend the system lifetime (without battery replacement), not by weeks but by years. In many cases, the system will sit in a place where you just can’t easily replace the battery (think about surveillance cameras).

Other reasons also push for maximum energy efficiency, such as cost, heat dissipation or scaling. A smaller battery should allow packaging the system in a cheaper box. Reducing heat dissipation can be important when you want your product to fit a specific form factor. Or you can decide to keep the same battery life but integrate more features in your system…

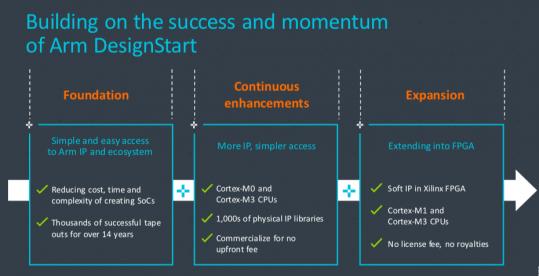

In this webinar you will learn the various solutions for building an energy-efficient SoC. The designer should start at the architecture level and define a power-friendly architecture. This is the first action to take: SoC architecture optimization has the highest impact, as 70% of the power reduction comes from decisions taken at architecture level. The remaining 30% is equally split between synthesis, RTL design and place and route.

At architecture level, you first define clock domains (to later apply clock gating), frequency domains and frequency stepping. Then you define the multi-supply voltage scheme, global power gating and, later, coarse-grain power gating. Power gating is a commonly used circuit technique that removes leakage by turning off the supply voltage of unused circuits. You can also apply I/O power gating, which can reduce I/O power consumption by one order of magnitude in advanced technologies where leakage power dominates.

As today’s SoCs require advanced power management capabilities like dynamic voltage and frequency scaling (DVFS), you will learn about these techniques and how to implement them to also minimize active power consumption.
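As a reminder of why DVFS reduces active power, the sketch below uses the standard first-order CMOS model, in which dynamic power is activity × capacitance × V² × f. This is a textbook approximation, not material from the webinar, and every numeric value (activity factor, capacitance, voltages, frequencies, cycle count) is hypothetical.

```python
def dynamic_power_w(activity, cap_farads, vdd_volts, freq_hz):
    """First-order CMOS dynamic power: activity * C * Vdd^2 * f."""
    return activity * cap_farads * vdd_volts ** 2 * freq_hz


def energy_per_task_j(power_w, cycles, freq_hz):
    """Energy for a fixed number of cycles: power * (cycles / f)."""
    return power_w * cycles / freq_hz


# Hypothetical operating points: voltage and frequency both reduced by 20%.
ACTIVITY = 0.1        # assumed switching activity factor
CAP_F = 1e-9          # assumed switched capacitance, farads
CYCLES = 1_000_000    # assumed length of the task, in clock cycles

p_nom = dynamic_power_w(ACTIVITY, CAP_F, 0.80, 100e6)
p_low = dynamic_power_w(ACTIVITY, CAP_F, 0.64, 80e6)
e_nom = energy_per_task_j(p_nom, CYCLES, 100e6)
e_low = energy_per_task_j(p_low, CYCLES, 80e6)

print(f"power ratio:  {p_low / p_nom:.3f}")
print(f"energy ratio: {e_low / e_nom:.3f}")
```

In this model, lowering the frequency alone leaves the energy per task unchanged, since the task simply takes longer. The saving comes from the lower supply voltage that the reduced frequency makes possible, which is why the two are scaled together.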

Emerging connected systems are most often battery-powered, and the goal is to design a system able to survive on, for example, a coin cell battery, not for days or even months, but for years. If you dig, you realize that numerous IoT (and communicating) applications, such as BLE or Zigbee, have an activity rate (duty cycle) such that the power consumption in sleep mode dominates the overall current drawn by the SoC. To reach this target, the designer needs to carefully consider the power network architecture and the clock and frequency domains at design start, and wisely implement power management IP in the SoC.

Dolphin will hold a one-hour live webinar, “How to increase battery lifetime of IoT applications when designing an energy-efficient SoC in advanced nodes?”, on October 16 at 10:00 AM CEST (for Europe and Asia) and on October 25 at 9:00 AM PDT (for the Americas). This webinar targets SoC designers who want to learn about the various power management techniques.

You must register before attending, by going here.

By Eric Esteve from IPnest