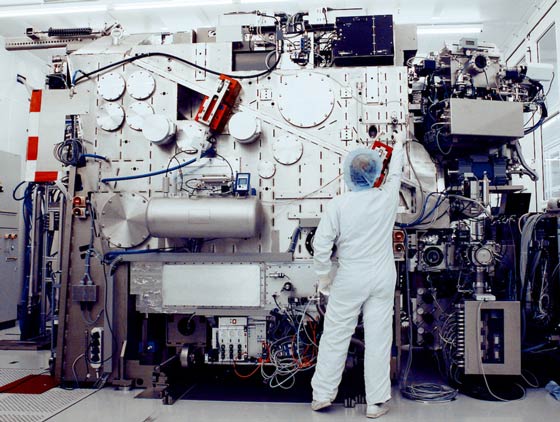

Not as much new – No breakthrough announcements, 300 watts is better than 250 watts – Pellicle Problems, TSMC is EUV king – Third time’s a charm?

We attended this year’s SPIE Lithography convention in San Jose, as we have for many years. Although the show was quite enthusiastic and EUV was the central topic, as it has been for a long time, there were no real “breakthrough” announcements or changes of the kind we have seen in previous years.

300 is more than 250

It seems that ASML has had good luck and good results with its latest EUV source, which appears capable of 300 watts rather than the 250 watts specified, a nice step on the way to the needed wafer throughput.

Pellicle Problems

On the other side of the coin, there has not been any significant progress on pellicle transmission efficiency, which is still stuck at 83% or so rather than the 90% previously hoped for. There is some discussion of carbon nanotube pellicles being worked on by IMEC, but nothing real yet. Pellicles need to get better to improve throughput.
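To put the transmission gap in perspective, here is a rough back-of-the-envelope sketch. It assumes that, because EUV masks are reflective, light crosses the pellicle twice (once on the way in and once after reflecting off the mask), so the loss is roughly the single-pass transmission squared. It also ignores every other loss in the optical path, so the wattage figures are only relative indicators, not real at-wafer power. The function names are our own and purely illustrative.

```python
def double_pass_transmission(single_pass):
    """Fraction of light surviving two passes through the pellicle.

    Assumption: the reflective EUV mask sends light through the
    pellicle twice, so the single-pass transmission is squared.
    """
    return single_pass ** 2


def power_after_pellicle(source_watts, single_pass):
    """Source power scaled by pellicle loss only (all other losses ignored)."""
    return source_watts * double_pass_transmission(single_pass)


if __name__ == "__main__":
    # 83% is the current figure and 90% the hoped-for figure from the text;
    # 250 W and 300 W are the specified and observed source powers.
    for transmission in (0.83, 0.90):
        for watts in (250, 300):
            remaining = power_after_pellicle(watts, transmission)
            print(f"{watts} W source, {transmission:.0%} pellicle: "
                  f"{remaining:.1f} W after two passes")
```

Under these assumptions an 83% pellicle gives up about 31% of the light, versus about 19% for a 90% pellicle, which is why pellicle progress matters almost as much as source power.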

TSMC is big man on EUV campus

We have heard that TSMC is taking between 18 and 20 of ASML’s planned 30 EUV systems to be produced in 2019. This is a huge 180-degree reversal from a company that said just two short years ago that it would never use EUV. We have heard that Intel may be good for another half dozen EUV tools in 2019, with Samsung or others taking the balance. ASML is obviously sold out of EUV for 2019.
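The split implied by those numbers can be tallied quickly. This is only a sketch of the arithmetic: the counts are the rumored figures quoted above (30 planned systems, 18 to 20 for TSMC, about 6 for Intel), not confirmed orders, and the function name is our own.

```python
PLANNED_SYSTEMS = 30   # ASML's planned 2019 EUV output, as quoted above
INTEL_SYSTEMS = 6      # "another half dozen", as quoted above


def allocation(tsmc_systems, total=PLANNED_SYSTEMS, intel=INTEL_SYSTEMS):
    """Return TSMC's and Intel's shares plus the systems left for everyone else."""
    return {
        "tsmc_share": tsmc_systems / total,
        "intel_share": intel / total,
        "remainder_systems": total - tsmc_systems - intel,
    }


if __name__ == "__main__":
    # Evaluate both ends of the rumored 18-20 range for TSMC.
    for tsmc in (18, 20):
        result = allocation(tsmc)
        print(f"TSMC {tsmc}: {result['tsmc_share']:.0%} of output, "
              f"Intel {result['intel_share']:.0%}, "
              f"{result['remainder_systems']} systems left for Samsung and others")
```

On these figures TSMC would take roughly 60% to 67% of the year's output, leaving only four to six tools for Samsung and everyone else.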

The risk of going “bareback”

TSMC seems to be willing to push production without the protection of pellicles. The “print and pray” approach seems to be the way to go, as there is still no mask inspection in sight, at least not from KLA. Maybe if TSMC gets paid per wafer rather than per known good die, they don’t care; it’s the customer’s risk. We wonder how long customers might be willing to take the defect loss from running with no pellicle. Maybe TSMC figures out how to get yield without mask inspection and never needs to buy it by the time KLA gets it to market, but probably not.

Third time’s a charm

It sounds as if the “C” version of ASML’s EUV scanner, which is now shipping, will likely be the “go to” production scanner for high-volume manufacturing (HVM). The “B” version was clearly better than its predecessor, but it was still not good enough and not economically viable for ASML, whereas the “C” seems to fix all the issues (or at least enough of them) and is financially better for ASML.

It’s party time!

We attended both the Tokyo Electron and ASML parties at the show on Monday evening, and although the industry is in a slump, you wouldn’t know it from the crowds at the parties or the positive tone coming from the attendees. While there were no major new announcements at the show, the tone was very positive about progress towards HVM and more layers going EUV.

Triple patterning versus EUV

A number of people we spoke to at SPIE put the cost crossover between EUV and multipatterning at about triple patterning: EUV costs roughly the same as current triple patterning techniques. In our view, there is still a lot of room for progress in EUV costs. ASML has projected a “slam dunk” cost advantage of EUV over multipatterning, but we are still not near that goal. However, EUV has power and performance advantages over multipatterning that outweigh the fact that cost advantages haven’t yet been achieved. The basic fact is that EUV-formed transistors are better than multipatterned transistors of the same dimensions, and customers want the better product, especially Apple.

Impact on pricing of dep and etch

We think that both AMAT and Lam have the opportunity to slow the move away from multipatterning to EUV by finding ways to reduce multipatterning costs. This may put pricing pressure on dep and etch tools. We think that margins at AMAT and Lam may already be under pressure as they cut pricing to gain share in a declining market such as the current one. When demand has gone down in previous cycles, pricing has suffered more as the competitors have cut each other’s throats to get the smaller pool of business left. We have already heard of some aggressive pricing in the market that some have walked away from.

NAND and DRAM going EUV: a question of when, not if

Many we spoke to at the show are already talking about when EUV will enter the memory industry. Right now there is no real reason for it, but at some point memory will also have to go with EUV to keep up with Moore’s Law. It’s inevitable.

In our view, this is the same question we once asked about when logic would go to EUV. We never doubted that EUV would eventually work if enough time and money were thrown at it (which they were). We think that the cost issue is a bigger impediment to EUV being used for memory production and may take a few years to overcome. We could also hit a technology block that forces memory to go EUV before the costs come down, but that doesn’t seem predictable right now.

The stocks

We think that investors who were worried about ASML’s EUV business in the downturn can breathe a bit easier. However, we can’t say the same about the DUV business, which will likely be impacted. AMAT and Lam still have some runway left on multipatterning, given that EUV costs aren’t coming down as quickly as hoped. All in all, we saw nothing at the show that made us want to go out and buy or sell a specific stock. Maybe the absence of surprises or big announcements is a good thing.

About Semiconductor Advisors LLC

Semiconductor Advisors is an RIA (a Registered Investment Advisor), specializing in technology companies with particular emphasis on semiconductor and semiconductor equipment companies.

We have been covering the space longer and been involved with more transactions than any other financial professional in the space. We provide research, consulting and advisory services on strategic and financial matters to both industry participants and investors. We offer expert, intelligent, balanced research and advice. Our opinions are very direct and honest and offer an unbiased view as compared to other sources.