It is a demand-driven downturn, which makes it harder to predict

It may not be “business as usual” after this virus

What systemic changes could the industry face?

Trying to figure out another cycle, driven by an inorganic catalyst

Semiconductor investors and industry participants are used to the normal cycles of oversupply and undersupply driven by factors within the technology industry itself. Now they have to figure out the impact of an external damper unlike any we have seen before.

We are truly in uncharted waters. The tech industry in general has continued to grow, albeit at varying rates, and we haven't seen a broad-based, global downturn like the one we may be in line for. Many would point to the economic crisis of 2008/2009, which was certainly negative but did not have the same "off a cliff with no skid marks" sudden, sharp drop as the current global crisis.

We would also point out that the semiconductor industry is famous for self-inflicted cycles caused by oversupply from building way too much capacity. In fact, we would argue that most chip cycles are self-inflicted and supply-side initiated.

The COVID-19 cycle is demand-based. There has been no sudden change in global chip capacity, as fabs have kept running, but we can expect a drop in demand that has yet to fully manifest itself.

Technology is always the first to get whacked

When the economy goes south, technology purchases are the first to suffer. Consumers continue to buy food, shelter, fuel and guns & ammo, but they don't buy the next-gen iPhone or a bigger flat screen. 5G can wait while I put food on the table. Given that smartphones are nothing more than containers of silicon, it's clear that the chip industry will get it in the neck.

There are also other factors in addition to the virus, such as the oil market issues and the election, which has all but been forgotten. The effects on the technology market will persist long after the virus has been arrested and controlled.

At one time, the baseline assumption was that all the economic damage associated with COVID-19 would be contained within the calendar year, so that any business pushed out of H1 by the virus would simply make H2 2020 that much stronger, and it would all be a "wash" on an annualized basis.

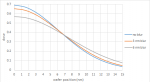

The length and depth of the "COVID-19 Chip Cycle" remain unknown, as does whether it will be "V", "U" or "L" shaped. Right now it feels like at least a "U", if not an extended "U" (a canoe…).

The chip industry was barely a quarter out of a "U" shaped, memory-driven down cycle, having been pulled out by technology spending on the foundry/logic side, when we were ambushed by COVID-19.

Two types of spending cycles: Technology & Capacity

There are two types of spending cycles in the chip industry: technology-driven spending and capacity-driven spending. Capacity-driven spending is the bigger portion, while technology spending is more consistent.

The industry has been on a technology-driven spending recovery that was just about to turn into a capacity-driven push. While we think that technology-driven spending to sustain Moore's Law and 5nm/3nm will continue, we think that capacity spending will likely slow again, especially on the memory side, as a drop in demand will push us back into the oversupply condition we were just starting to emerge from.

The supply/demand balance in the chip industry remains a somewhat delicate balancing act and the COVID-19 elephant just jumped on one side.

One could argue that foundry/logic, which has driven the current recovery, could falter: 5G, a big demand driver, is a "nice to have," not a "gotta have," and we could see 5G phone demand slow before the iPhone 12 even launches.

On the plus side, work from home and remote learning for schools are clearly driving up demand for server capacity and cloud services overall, which should bode well for Intel, AMD and associated chip companies.

China Chip Equipment embargo likely off the table for now

At one point, not too long ago, it felt like we were only days away from imposing severe restrictions and licensing requirements on exports of technology that could help China with 5G, such as semiconductor equipment.

We think the likelihood of that happening any time soon is just about zero, because the US can't afford to upset China: China supplies 90% of our pharmaceuticals, the majority of our PPE (personal protective equipment) such as masks, and probably a lot of ventilators.

Politicians have bigger fish to fry, fighting over a trillion-dollar rescue package and pointing fingers at one another. So at least COVID-19 has crowded out other things we had to worry about in the chip industry. Probably no one cares if the Chinese dominate 5G, as there won't be a lot of demand to dominate.

There may be permanent systemic changes

There is a lot of complaining about corporate "bailouts", stock buybacks, executive pay, etc. associated with any financial rescue package. While much of this may be focused on airlines and other more directly impacted industries, even if the chip industry never gets a dime of bailout money, there will likely be increased scrutiny of corporate behavior in general, and there could even be some legislation associated with it.

Buybacks, which have become very big in the chip industry during good times, may become less popular. We would also not be surprised to see increased scrutiny of semiconductor manufacturing moving to Asia, now that people have figured out we don't even make our own pharmaceuticals anymore.

Is an outsourced global supply chain a bad thing?

The technology industry has prided itself on how far and wide the supply chain for a product can reach. The iPhone is perhaps the poster child for supply chain logistics.

The problem is that COVID-19 has exposed broad, far-flung supply chains as our soft underbelly: they make us more susceptible to interruptions, even in some faraway place, that can completely shut us down.

These supply chains behave like dominoes, where a single failure can cascade and bring everything to a halt.

Truly multinational companies that rely on the frictionless movement of people and goods across borders might think twice about how they will deal with another global crisis, as there is a high likelihood we will experience another one. It is not a question of if, but when. That we haven't had a global disruption like COVID-19 before is probably just pure luck.

Boards of large companies will start to ask for, and demand, plans to deal with global disruption, just as they have contingency plans today for local disasters.

Will the world get less interconnected?

Maybe moving back to a more vertically integrated, local model is safer, albeit a little more expensive. There will likely be some political will for more isolationist economic behavior after COVID-19 is over.

The US semiconductor industry is a shell of its former self, as most production and much of the technology have been offshored. The primary driver was cost savings, which mean little when you can't produce anything.

Balance sheet safety

We would point out that the semiconductor industry does seem to be relatively flush with cash compared to past cycles. However, some companies in the space carry a significant amount of debt (in some cases more than their cash) on their balance sheets.

US corporate debt stands at over $7 trillion, more than ever before and equal to about a third of our GDP. In previous chip industry cycles, we have seen some companies go under due to their debt load.

The popular model of levering up balance sheets with debt could reverse itself, as there is a long list of companies that will have their hands out for government bailouts.

If buybacks slow down, getting out of debt should be easy for most companies, but some are still deeply in debt, though fewer of them in the semiconductor industry.

A handful of companies could see COVID-19 related weakness push them closer to debt problems.

M&A rebound?

Could we see the government soften its dislike of corporate mergers? If companies make the argument that combining makes them stronger and more resistant to global issues, we could see a few more large mergers under the right circumstances. There is probably not a lot left to consolidate in the semiconductor industry, but a few deals that didn't happen could be revisited. After all, valuations are a lot more attractive now, especially for companies that have dry powder in the form of cash.

Stock valuations are attractive

Stock valuations now look attractive on a P/E basis, just as the stocks were looking too expensive only a couple of months ago.

We are seeing multiple contraction rather than expansion, as some stocks are discounting an unrealistic level of contraction, much as they were previously discounting too bright a future. Much of the sentiment will be determined on the upcoming Q1 conference calls. If we were management, we would take a very conservative view, as going out on a limb is unlikely to be rewarded.

Investors also want to see a company reset expectations in one swoop rather than suffer death by a thousand cuts over the next few quarters.

Investors will want some comfort that we are at or near a COVID-19 bottom, even if that may not really be the case… just lie to me and make me feel better…