Time-sensitive networking for aerospace and defense applications is receiving fresh attention as a crop of new standards and profiles approaches formal release, anticipated before the end of 2024. CAST, partnering with Fraunhofer IPMS, has developed a suite of configurable IP for time-sensitive networking (TSN) applications, with an endpoint IP core now running at 10Gbps and switched endpoint and multiport switch IP cores available at 1Gbps and extending to 10Gbps soon. The two partners have just released a short white paper on TSN in aerospace applications, providing an overview of TSN standards and how they map to aerospace network architectures.

Standards come, standards go, but Ethernet keeps getting better

A couple of decades ago, at a naval installation far, far away – NSWC Dahlgren, Virginia, to be exact – I had the privilege of accompanying Motorola board-level computer architects in a customer research conversation with Dahlgren’s system architects. The topic was high-bandwidth backplane communication, with the premise of moving from the parallel VMEbus to a faster serial interface.

The Dahlgren folks politely listened to a presentation comparing the throughput and latency of Ethernet, InfiniBand, and RapidIO. Ethernet was just transitioning to 1Gbit speeds. Our architects leaned toward RapidIO for its faster throughput with low latency but were open to considering input from real-world customers. When the senior Dahlgren scientist started speaking after the last slide, he gave an answer that has stuck with me all these years.

His well-entrenched position was that niche use cases notwithstanding, Ethernet would always win by satisfying more system interoperability requirements and positioning deployed systems to survive in long life-cycle applications via upgrades. Just as Token Ring and FDDI appeared and were subsequently displaced by Ethernet, he projected that InfiniBand and RapidIO would eventually fall to Ethernet as standards work improved performance.

The discussion is still relevant today. Real-time applications demand determinism and reliability, especially in mission-critical contexts like aerospace and defense. Enterprise Ethernet technology provides robust interoperability and throughput but occasionally behaves non-deterministically. It only takes one late-arriving packet to throw off an application that depends on that data arriving within a fixed time window. Bandwidth covers up many sins, but as applications demand more data from more sources in large systems, the margin for error shrinks.

Addressing four TSN concepts and common aerospace profiles

Quoting the white paper: “TSN consists of a set of standards that extend Ethernet network communication with determinism and real-time transmission.” IEEE TSN standards address four key concepts not available in enterprise-class Ethernet:

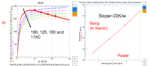

- Time synchronization, setting up a common perception of time across all devices in a network, building on concepts from IEEE 1588 and adding resilience through multiple time domains.

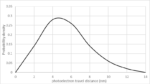

- Latency, establishing the idea of high-priority traffic versus best-effort traffic, and applying time-aware and credit-based shaping.

- Resource management, with protocols to set up switches, determine topology, and request and reserve network bandwidth.

- Reliability, recovering from defective paths while minimizing redundant transmissions and protecting networks from propagating incorrect data.
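
To make the latency bullet more concrete, here is a minimal Python sketch of the idea behind time-aware shaping: a repeating schedule opens and closes per-queue "gates" so that high-priority traffic gets an exclusive window in every cycle. The schedule values, queue numbers, and function names are illustrative assumptions, not taken from the white paper or from any CAST/Fraunhofer IPMS core, and the sketch ignores frame length and guard bands.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GateEntry:
    """One slot of a repeating gate schedule (hypothetical structure)."""
    duration_ns: int
    open_queues: frozenset


# Assumed 1 ms cycle: 200 us reserved for queue 7 (high priority),
# then 800 us shared by the best-effort queues 0..6.
SCHEDULE = [
    GateEntry(200_000, frozenset({7})),
    GateEntry(800_000, frozenset(range(7))),
]


def open_queues_at(t_ns, schedule=SCHEDULE):
    """Return the set of queues whose gate is open at synchronized time t_ns."""
    cycle_ns = sum(entry.duration_ns for entry in schedule)
    offset = t_ns % cycle_ns
    for entry in schedule:
        if offset < entry.duration_ns:
            return entry.open_queues
        offset -= entry.duration_ns
    raise AssertionError("unreachable: offset is always inside the cycle")


def next_open_ns(t_ns, queue, schedule=SCHEDULE):
    """Return the earliest time >= t_ns at which the gate for `queue` is open."""
    cycle_ns = sum(entry.duration_ns for entry in schedule)
    slot_start = t_ns - (t_ns % cycle_ns)
    # Scanning two full cycles covers a window that wraps around the cycle end.
    for _ in range(2):
        for entry in schedule:
            slot_end = slot_start + entry.duration_ns
            if queue in entry.open_queues and slot_end > t_ns:
                return max(slot_start, t_ns)
            slot_start = slot_end
    raise ValueError(f"queue {queue} never opens in this schedule")


if __name__ == "__main__":
    print(sorted(open_queues_at(50_000)))
    print(next_open_ns(250_000, queue=7))
```

Because every device shares the same notion of time, a frame in queue 7 that just misses its window waits a bounded, known interval for the next one instead of competing with best-effort traffic. That bounded wait is the determinism the list above describes.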

Profiles are being developed for using TSN in specific industries, such as aerospace and defense, industrial automation, automotive, audio-video bridging systems, and others. Existing communication standards for aerospace, like ARINC 664, MIL-STD-1553, and SpaceWire, do not address all of the above concepts that drove the design of TSN.

CAST/Fraunhofer IPMS offer a concise table of domains and key requirements for networking, noting that a big problem is isolated network islands using various technologies inside a large system. They propose TSN as a unifying network architecture that can meet all the requirements with less expensive components and simpler cabling.

The paper concludes with a brief overview of the CAST/Fraunhofer IPMS TSN IP cores available in RTL source or FPGA-optimized netlists, describing features designed for real-time networking with determinism and low latency.

To get the whole story on time-sensitive networking in aerospace and defense with more detail on the emerging IEEE TSN standards and profiles and aerospace network requirements, download the CAST/Fraunhofer IPMS white paper: