In contrast to the opinions in a recent article here, I think Waze is extremely beneficial to the individuals who use it, to other drivers (by virtue of more efficient road usage), and to the various jurisdictions that oversee roads and highways. For those not familiar with Waze, it is a smartphone app that provides navigation and… Read More

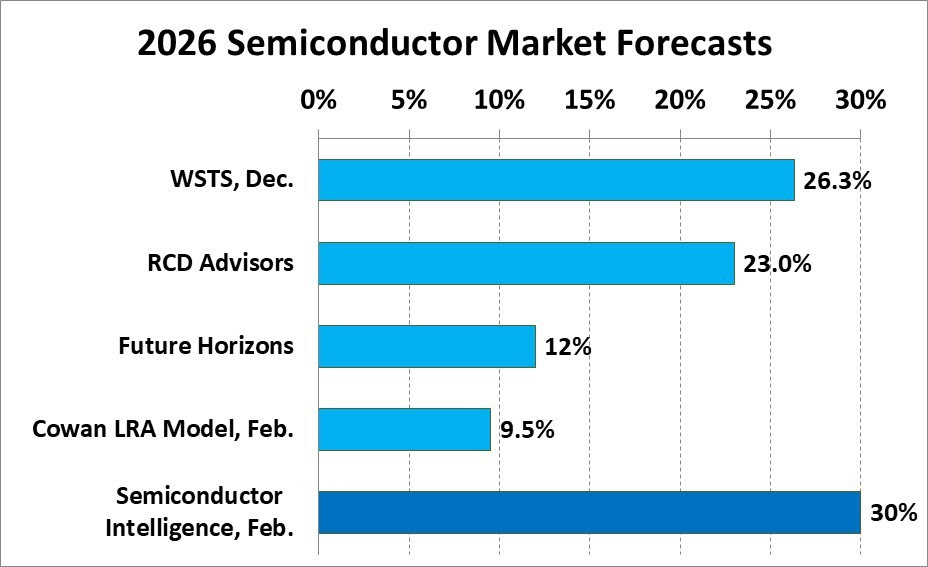

AI Drives Strong Semiconductor Market in 2025-2026

The global semiconductor market in 2025 was $792… Read More

How Customized Foundation IP Is Redefining Power Efficiency and Semiconductor ROI

As computing expands from data centers to edge… Read More

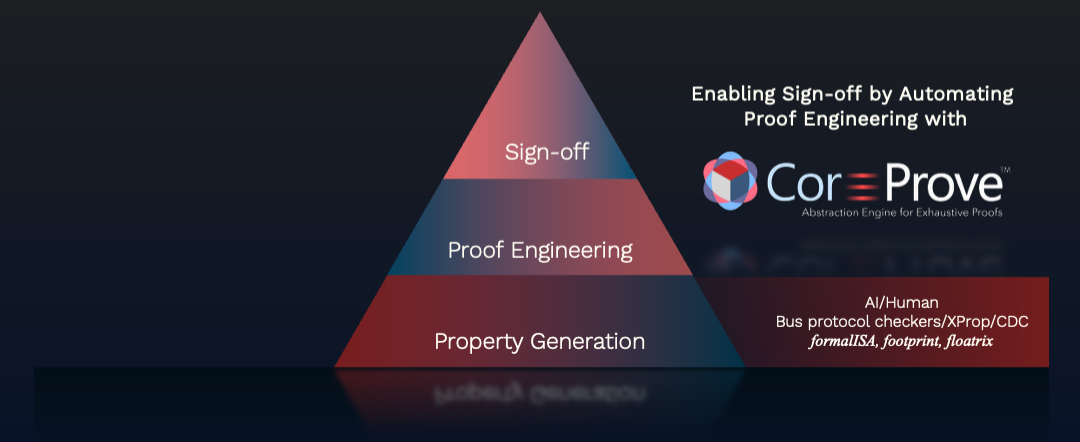

Akeana Partners with Axiomise for Formal Verification of Its Super-Scalar RISC-V Cores

Akeana Inc. announced a key milestone in the… Read More

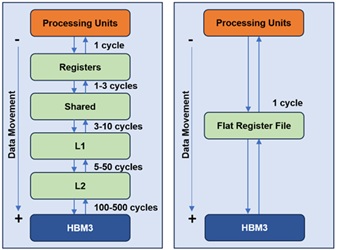

An AI-Native Architecture That Eliminates GPU Inefficiencies

A recent analysis highlighted by MIT Technology Review… Read More

Caspia Technologies Unveils A Breakthrough in RTL Security Verification Paving the Way for Agentic Silicon Security

In a significant advancement for the semiconductor industry,… Read More

One, Two, Many – Why You May Not Be Replaced By A Robot

Some aboriginal tribes in Australia see little value in counting and are believed to discriminate only between “one”, “two” and “many”. This is not through lack of intelligence; beyond two they simply lose interest in the details. We can smile and feel superior but I suspect we are not much better when it comes to predicting our technology… Read More

Analog Mixed-Signal Layout in a FinFET World

The intricacies of analog IP circuit design have always required special consideration during physical layout. The need for optimum device and/or cell matching on critical circuit topologies necessitates unique layout styles. The complex lithographic design rules of current FinFET process nodes impose additional restrictions… Read More

Key Takeaways from the TSMC Technology Symposium Part 1

TSMC recently held their annual Technology Symposium in San Jose, a full-day event with a detailed review of their semiconductor process and packaging technology roadmap, and (risk and high-volume manufacturing) production schedules.… Read More

Internet of Things Augmented Reality Applications Insights from Patents

US20150347850 illustrates an IoT (Internet of Things) AR (Augmented Reality) application in a smart home. A smart home IoT device communicates via a local network to a user AR device (e.g., smartphone) for providing the tracking data. The tracking data describes the smart home IoT device. The AR devices can recognize the smart… Read More

How HBM Will Change SOC Design

High Bandwidth Memory (HBM) promises to do for electronic product design what high-rise buildings did for cities. Up until now, electronic circuits have suffered from the equivalent of suburban sprawl. HBM is a radical transformation of memory architecture that will have huge ripple effects on how SOC based electronics are … Read More

Can Qualcomm avoid repeating Motorola’s fate?

NPR had an interesting guest this morning: Edward Luce, author of “Time to Start Thinking: America in the Age of Descent”. I’m not about to turn SemiWiki into a politics blog, but there is some precedent in the technology business. I’ve caught myself saying more than once recently that “Motorola is no longer the company I worked 14… Read More

Custom IC Design Flow with OpenAccess

Imagine being able to use any combination of EDA vendor tools for schematic capture, SPICE circuit simulation, layout editing, place & route, DRC, LVS and extraction. On the foundry side, how about creating just a single Process Development Kit (PDK), instead of vendor-specific kits. Well, this is the basic premise of a recent… Read More

Data Security Predictions for 2016!

2016 has come and with it some of the greatest challenges we have ever faced in the data security industry. Data breaches run rampant, encryption is dead, big security companies rake in billions in consulting fees selling fear and today’s large corporations have no other option than to shell out good money after bad on old … Read More

Autonomy at Odds with Security

It’s funny that we all now believe that Google got the automated driving ball rolling. The reality is that the government started it all with the Defense Advanced Research Projects Agency (DARPA) and its famous DARPA Grand Challenge, which consisted of three tests (in 2004, 2005 and 2007) of driverless cars in different driving… Read More

A Detailed History of Samsung Semiconductor