Of the many technologies poised to deliver substantial value in the coming years, Artificial Intelligence (AI) tops the list. An IEEE survey indicated that AI will drive most innovation across almost every industry sector over the next one to five years.

As a result, the AI revolution is driving demand for an entirely new generation of AI systems-on-chip (SoCs). Using AI in chip design can significantly boost productivity, improve design performance and energy efficiency, and free designers to focus their expertise on the most valuable aspects of chip design.

AI Accelerators

Big data has led data scientists to deploy neural networks that consume enormous amounts of data and learn through iterative optimization. The industry’s principal pillar for executing software, the standardized Instruction Set Architecture (ISA), is not well suited to this approach. Instead, AI accelerators have emerged to deliver the processing power and energy efficiency that our world of abundant-data computing requires.

There are currently two distinct AI accelerator spaces: the data center on one end and the edge on the other.

Hyperscale data centers require massively scalable compute architectures. Cerebras’ Wafer-Scale Engine (WSE), for example, can deliver more compute, memory and communication bandwidth than traditional architectures, supporting AI research at dramatically higher speed and scale.

At the edge, by contrast, energy efficiency is key and silicon real estate is limited, because intelligence is distributed at the edge of the network rather than in a centralized location. AI accelerator IP is integrated into edge SoC devices that, however small, must deliver near-instantaneous results.

Webinar Objective

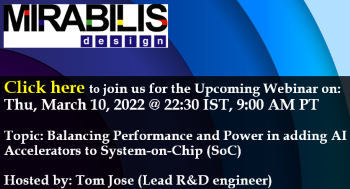

Given this situation, three parameters critical to the success of projects using AI accelerators will be discussed in detail in the upcoming webinar on Thursday, March 10, 2022:

- Estimating the power advantage of implementing an AI algorithm on an accelerator

- Sizing the AI accelerator for existing and future AI requirements

- The latency advantage of an accelerator over ARM, RISC-V and DSP cores in deploying AI tasks

An architect always considers the performance or power gain that a proposed design can deliver. There are many variables and many viable options, with a myriad of configurations to choose from. The webinar will focus on executing an AI algorithm on ARM-, RISC-V- and DSP-based systems and on an AI accelerator-based system, highlighting the resulting power, sizing and latency advantages.

Power Advantage of AI Algorithm on Accelerator using VisualSim

Mirabilis Design’s flagship product, VisualSim, has library blocks with power incorporated into the logic of each block. Adding power details does not slow down the simulation, and it provides a number of important statistics that can be used to further optimize the AI accelerator design.

VisualSim AI Accelerator Power Designer

VisualSim AI Accelerator Designer uses a state-based power modeling methodology. The user supplies two pieces of information: the power in each state (Active, Standby, Idle, Wait, etc.) and the power management algorithm. As traffic flows into the system and tasks are executed, instructions execute in the processor core and request data from the cache and memory. At the same time, the network is also triggered.

All of these devices move from one state to another. VisualSim PowerTable keeps track of the power in each state, the transitions between states, and the moves to lower-power states dictated by the power management algorithm.
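To make the state-based idea concrete, here is a minimal Python sketch of how per-state power, a power management rule and a state timeline combine into energy and transition statistics. It is not VisualSim’s PowerTable API: the state names come from the text, while the power values, the 50 us Standby-to-Idle timeout and the `simulate` function are hypothetical assumptions.

```python
from collections import defaultdict

# Hypothetical per-state power in mW (assumed values, not from any real IP).
STATE_POWER_MW = {"Active": 120.0, "Standby": 30.0, "Idle": 5.0}
# Hypothetical power management rule: after 50 us in Standby, drop to Idle.
IDLE_TIMEOUT_US = 50.0


def simulate(timeline, state_power_mw=STATE_POWER_MW, idle_timeout_us=IDLE_TIMEOUT_US):
    """Replay (duration_us, busy) segments through a state-based power model.

    Returns (energy_nj per state, time_us per state, number of state transitions).
    """
    energy_nj = defaultdict(float)
    time_us = defaultdict(float)
    transitions = 0
    current = None

    def enter(state, duration):
        nonlocal current, transitions
        if duration <= 0:
            return
        if current is not None and state != current:
            transitions += 1
        current = state
        time_us[state] += duration
        energy_nj[state] += state_power_mw[state] * duration  # mW * us = nJ

    for duration, busy in timeline:
        if busy:
            enter("Active", duration)
        else:
            # Wait in Standby until the timeout expires, then move to the lower Idle state.
            standby = min(duration, idle_timeout_us)
            enter("Standby", standby)
            enter("Idle", duration - standby)
    return dict(energy_nj), dict(time_us), transitions


# Usage: two bursts of work separated by a short gap, then a long gap.
energy, time_in_state, n_transitions = simulate([(100, True), (20, False), (100, True), (200, False)])
avg_power_mw = sum(energy.values()) / sum(time_in_state.values())
```

The short gap never reaches the timeout, so only the long gap benefits from the Idle state; the per-state energy and time totals are the kind of statistics that can then be exported for analysis.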

The power statistics can be exported to a text file or in a timing-diagram format.

Advantages of Right-Sizing the AI Accelerator

AI accelerators perform highly repetitive operations and use large buffers. These IP blocks occupy significant silicon area and therefore raise the overall cost of the SoC, even though the accelerator is just one section of it.

The other reason for right-sizing the accelerator is that, depending on the application, functions may execute in parallel or serially, and data sizes vary. The buffers, cores and other resources of the IP must be sized accordingly, which is why right-sizing is important.
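As a back-of-the-envelope illustration of right-sizing, the Python sketch below derives a MAC-array size from a throughput target and a buffer size from a tile shape. It is a simplified analytical model, not a VisualSim feature; the function names, the one-MAC-per-cycle assumption, the utilization factor and all numbers are hypothetical.

```python
import math


def mac_units_needed(macs_per_inference, inferences_per_s, clock_hz, utilization):
    """Smallest MAC-array size that meets a throughput target.

    Assumes each MAC unit completes one multiply-accumulate per cycle and that
    the array is busy for `utilization` (0..1] of all cycles.
    """
    required_macs_per_s = macs_per_inference * inferences_per_s
    return math.ceil(required_macs_per_s / (clock_hz * utilization))


def tile_buffer_bytes(m, k, n, bytes_per_elem, double_buffered=True):
    """On-chip buffer for one (m x k) @ (k x n) tile: activations, weights and outputs.

    Double buffering lets the next tile load while the current one computes,
    at the cost of twice the storage.
    """
    total = (m * k + k * n + m * n) * bytes_per_elem
    return 2 * total if double_buffered else total


# Hypothetical workload: 1e9 MACs per inference at 1 GHz and 75% utilization.
today = mac_units_needed(1e9, 30, 1e9, 0.75)    # current requirement: 30 inferences/s
future = mac_units_needed(1e9, 60, 1e9, 0.75)   # assumed future requirement: 60 inferences/s
buffer = tile_buffer_bytes(64, 64, 64, bytes_per_elem=1)  # 8-bit data, 64x64 tiles
```

Doubling the target rate doubles the array; weighing that extra area against future headroom is the sizing decision for existing versus future AI requirements.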

Workloads and Use Cases

The SoC architecture is tested against a variety of workloads and use cases. An AI accelerator receives a different sequence of matrix multiplication requests depending on the input data, sensor values, task to be performed, scheduling, queuing mechanism and flow control.

For example, the reference data values can be stored off-chip in DRAM or in an SRAM adjacent to the AI block. Similarly, the math can be executed inline, i.e., without any buffering, or buffered and scheduled.
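The effect of those two choices on a stream of matrix-multiply requests can be approximated with a simple Python latency model. The fetch latencies are hypothetical placeholders, and the buffered case assumes double buffering, where the fetch for the next request starts as soon as the current one begins computing; neither assumption comes from the original text.

```python
# Hypothetical fetch latencies (cycles) for reference data; assumed, not measured.
FETCH_CYCLES = {"dram": 200, "sram": 10}


def total_cycles(compute_cycles, storage, buffered):
    """Cycles to process a sequence of matrix-multiply requests.

    compute_cycles: compute time of each request, in order.
    storage: where the reference data lives, "dram" or "sram".
    buffered: False = inline (fetch, then compute, no overlap);
              True = double-buffered (fetch for request i+1 overlaps compute of request i).
    """
    if not compute_cycles:
        return 0
    fetch = FETCH_CYCLES[storage]
    if not buffered:
        return sum(fetch + c for c in compute_cycles)
    # The first fetch is exposed; after that, each step waits for the slower of
    # the current compute and the next fetch. The last request has no next fetch.
    total = fetch
    for i, c in enumerate(compute_cycles):
        next_fetch = fetch if i + 1 < len(compute_cycles) else 0
        total += max(c, next_fetch)
    return total


requests = [100, 100, 100]  # hypothetical compute cycles per request
results = {(s, b): total_cycles(requests, s, b) for s in ("dram", "sram") for b in (False, True)}
```

In this example, buffering saves 200 cycles with DRAM-resident data but only 20 with SRAM, since the short SRAM fetch leaves little to overlap.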

New VisualSim Insight Methodology and its Application

Insight technology connects requirements to the entire product lifecycle by tracking the metrics generated at each level against those requirements. The Insight engines work throughout the process: planning, design, validation and testing. For an AI accelerator, the initial requirements can be memory bandwidth, cycles per AI function, power per AI function, etc. Functional correctness and flow control correctness can be added later. The goal of the Insight Engine is to carry the system-planning metrics all the way to product delivery, so that there is a reference to verify against at each stage.
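The core idea of checking the same requirements at every stage can be sketched in a few lines of Python. This is a hypothetical illustration of requirement tracking, not the Insight Engine’s interface; the metric names, limits, stage names and measured values are all assumed.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Requirement:
    metric: str
    limit: float
    kind: str  # "max": measured value must be <= limit; "min": must be >= limit

    def met_by(self, value):
        return value <= self.limit if self.kind == "max" else value >= self.limit


def trace(requirements, metrics_by_stage):
    """Check each lifecycle stage's measured metrics against the same requirements.

    Returns {stage: {metric: True, False, or None}}, where None means the stage
    has not produced that metric yet.
    """
    return {
        stage: {
            r.metric: r.met_by(measured[r.metric]) if r.metric in measured else None
            for r in requirements
        }
        for stage, measured in metrics_by_stage.items()
    }


# Hypothetical requirements for an AI accelerator and metrics from two stages.
requirements = [
    Requirement("cycles_per_function", 5000, "max"),
    Requirement("power_mw_per_function", 50.0, "max"),
    Requirement("memory_bw_gbps", 12.8, "max"),
]
report = trace(requirements, {
    "planning": {"cycles_per_function": 4200, "power_mw_per_function": 45.0},
    "design": {"cycles_per_function": 5300, "power_mw_per_function": 41.0, "memory_bw_gbps": 10.2},
})
```

A requirement met at planning but missed at design (`cycles_per_function` here) is the kind of regression a stage-by-stage reference is meant to catch.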

Building of AI Accelerators

AI accelerators can be built in a variety of configurations, single-core or multi-core. A number of open-source designs are available, and companies such as Nvidia and Google have published their own accelerators. Tensilica’s core IP provides AI acceleration as a primary feature.

Mirabilis Design and AI Accelerators

Mirabilis Design has experimented with performance and power analysis of TensorFlow versions 2.0 and 3.0. In addition, we are working on a model of the Tensilica AI accelerator.

Workload Partitioning in Multi-Core Processors

The user constructs the model in two parts: the hardware architecture and a behavior flow that resembles a task graph. Each element of a task can perform multiple functions: execute, trigger another task, or move data from one location to another. Each of these tasks is mapped to a different part of the hardware. Other aspects also affect the partitioning, for example storing coefficients locally, increasing parallel processing of the matrix multiply, or masking unused threads to reduce power. The goal is to determine the number of operations per second.
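Below is a minimal Python sketch of this flow: a task graph, a mapping of tasks onto hardware units, and a greedy list schedule that yields operations per second. The task names, operation counts, unit throughputs and the greedy policy are hypothetical; VisualSim’s own scheduler and mapping semantics are not represented here.

```python
from graphlib import TopologicalSorter  # Python 3.9+


def schedule(tasks, deps, mapping, ops_per_cycle, clock_hz):
    """Greedy list schedule of a task graph on hardware units.

    tasks: {task: operation count}; deps: {task: predecessor tasks};
    mapping: {task: hardware unit}; ops_per_cycle: {unit: throughput}.
    Tasks are visited in topological order; each starts once its predecessors
    have finished and its unit is free (one task per unit at a time).
    Returns (makespan in cycles, operations per second).
    """
    graph = {t: set(deps.get(t, ())) for t in tasks}
    finish = {}
    unit_free = {unit: 0.0 for unit in ops_per_cycle}
    for t in TopologicalSorter(graph).static_order():
        unit = mapping[t]
        ready = max((finish[p] for p in graph[t]), default=0.0)
        start = max(ready, unit_free[unit])
        finish[t] = start + tasks[t] / ops_per_cycle[unit]
        unit_free[unit] = finish[t]
    makespan = max(finish.values())
    return makespan, sum(tasks.values()) * clock_hz / makespan


# Hypothetical matrix multiply split into two halves, then reduced.
tasks = {"load": 1000, "mm_a": 8000, "mm_b": 8000, "reduce": 800}
deps = {"mm_a": {"load"}, "mm_b": {"load"}, "reduce": {"mm_a", "mm_b"}}
units = {"dma": 4, "core0": 16, "core1": 16}  # assumed ops per cycle

two_cores = schedule(tasks, deps, {"load": "dma", "mm_a": "core0", "mm_b": "core1", "reduce": "core0"}, units, 1e9)
one_core = schedule(tasks, deps, {"load": "dma", "mm_a": "core0", "mm_b": "core0", "reduce": "core0"}, units, 1e9)
```

Spreading the two halves across cores cuts the makespan from 1300 to 800 cycles for the same hardware library; a greedy topological schedule is only a first approximation, since it does not search for an optimal ordering.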

Configuration Power and Performance Metrics

Power and performance do not follow the same pattern; they can diverge for a number of reasons. For example, directing memory accesses to the same bank group, writing to the same block address, or using the same load/store unit can reduce die area and in some cases be faster, but the power consumed could be much higher.
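Because these metrics diverge, it helps to compare configurations on latency, energy and area together rather than on speed alone. The Python sketch below computes energy per inference as average power times latency and keeps the Pareto-optimal configurations; the configuration names and all numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Config:
    name: str
    latency_us: float    # time per inference
    avg_power_mw: float  # average power while running
    area_mm2: float

    @property
    def energy_uj(self):
        return self.avg_power_mw * self.latency_us / 1000.0  # mW * us = nJ; /1000 -> uJ

    def metrics(self):
        return (self.latency_us, self.energy_uj, self.area_mm2)


def dominates(a, b):
    """True if a is no worse than b on every metric and strictly better on at least one."""
    return all(x <= y for x, y in zip(a.metrics(), b.metrics())) and a.metrics() != b.metrics()


def pareto_front(configs):
    return [c for c in configs if not any(dominates(o, c) for o in configs if o is not c)]


# Hypothetical configurations: sharing one load/store unit saves area and time but costs power.
configs = [
    Config("shared_lsu", latency_us=80, avg_power_mw=300, area_mm2=2.0),
    Config("separate_lsu", latency_us=100, avg_power_mw=150, area_mm2=3.0),
    Config("wide_buffers", latency_us=120, avg_power_mw=200, area_mm2=3.5),
]
front = [c.name for c in pareto_front(configs)]
fastest = min(configs, key=lambda c: c.latency_us).name
lowest_energy = min(configs, key=lambda c: c.energy_uj).name
```

In this example the fastest configuration is not the most energy-efficient one, so both remain on the Pareto front and the final choice depends on which metric the product prioritizes.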

Summary

Finally, in addition to covering the topics above for the AI accelerator, the webinar will show how to arrive at the best configuration and detect any bottlenecks in the proposed design.

Also Read:

System-Level Modeling using your Web Browser

Architecture Exploration with Mirabilis Design

CEO Interview: Deepak Shankar of Mirabilis Design
