Cerebras Systems Unveils World’s Fastest AI Chip with Whopping 4 Trillion Transistors - Cerebras

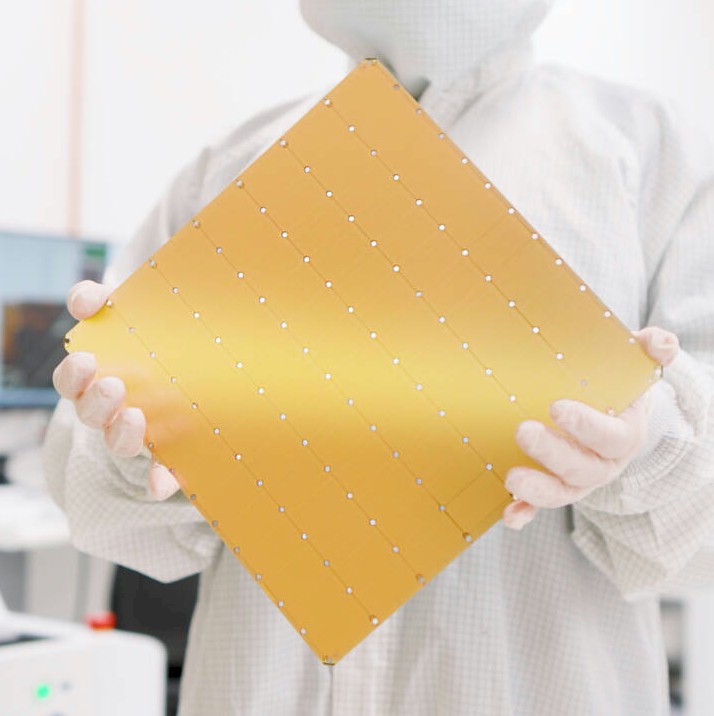

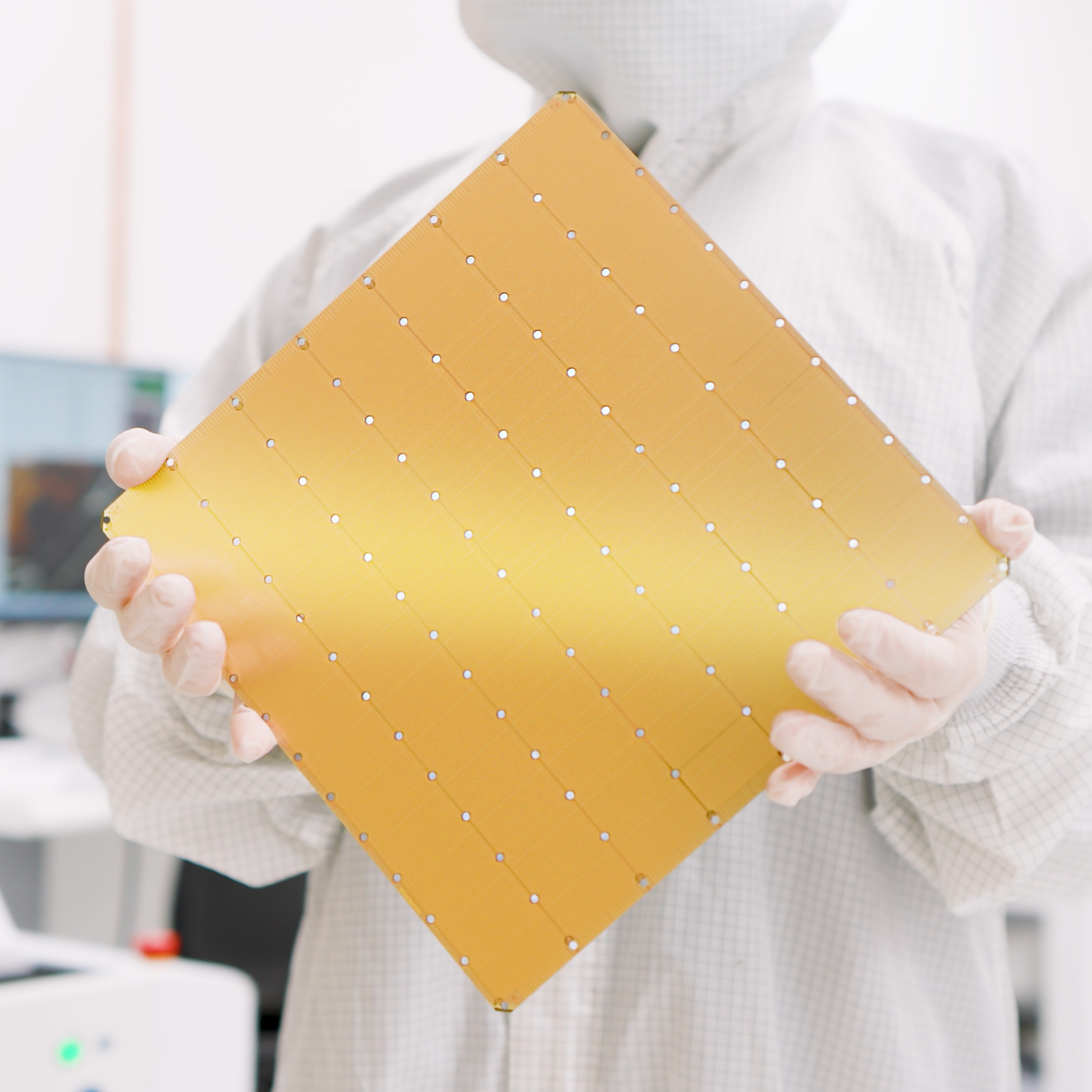

Third Generation 5nm Wafer Scale Engine (WSE-3) Powers Industry’s Most Scalable AI Supercomputers, Up To 256 exaFLOPs via 2048 Nodes

www.cerebras.net

TSMC 5nm version: 900,000 AI cores, up from 850,000 on the 7nm WSE-2. On-die SRAM is 44GB, about 10% more than the WSE-2's 40GB. The WSE-2 carried roughly 1.5% extra cores so that every wafer could ship with all 850,000 cores working (a 100% yield), which means its design had about 863,000 cores. External memory is pumped up to a maximum of 1.5PB, but the announcement says nothing about the memory technology. No further details on the WSE-3 yet, but I suspect Ian Cutress will eventually do an article like the one he did for the WSE-2.
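A quick back-of-the-envelope check of those numbers. This is a minimal sketch that takes the figures above as given inputs (850,000 and 900,000 cores, a 1.5% spare-core margin counted on top of the usable cores, 40GB and 44GB of SRAM); the function names are just illustrative.

```python
# Inputs taken from the post; the 1.5% spare margin is an estimate, not an official spec.
WSE2_CORES = 850_000
WSE3_CORES = 900_000
SPARE_FRACTION = 0.015  # extra cores relative to the usable count
WSE2_SRAM_GB = 40
WSE3_SRAM_GB = 44


def designed_cores(usable: int, spare_fraction: float) -> int:
    """Physical cores on the wafer if `spare_fraction` extra cores are added on top of `usable`."""
    return round(usable * (1 + spare_fraction))


def pct_increase(old: float, new: float) -> float:
    """Percentage change from `old` to `new`."""
    return (new - old) / old * 100


if __name__ == "__main__":
    print(f"WSE-2 cores in design: ~{designed_cores(WSE2_CORES, SPARE_FRACTION):,}")
    print(f"Core count increase:   {pct_increase(WSE2_CORES, WSE3_CORES):.1f}%")
    print(f"SRAM increase:         {pct_increase(WSE2_SRAM_GB, WSE3_SRAM_GB):.1f}%")
```

So the generational jump is about 5.9% more cores but 10% more SRAM, and 850,000 plus 1.5% spares lands at roughly 862,750, i.e. the "about 863,000" figure.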

I'm also wondering if they bumped up the Ethernet link support from 100GbE and/or increased the number of links.

It is also interesting that they've pulled all of this off with about 350 employees. That's pretty amazing, considering everything they've built, from chip design tools to clustering.