So-called in-memory computing is drawing new funding—and even inspiring some geopolitical maneuvering.

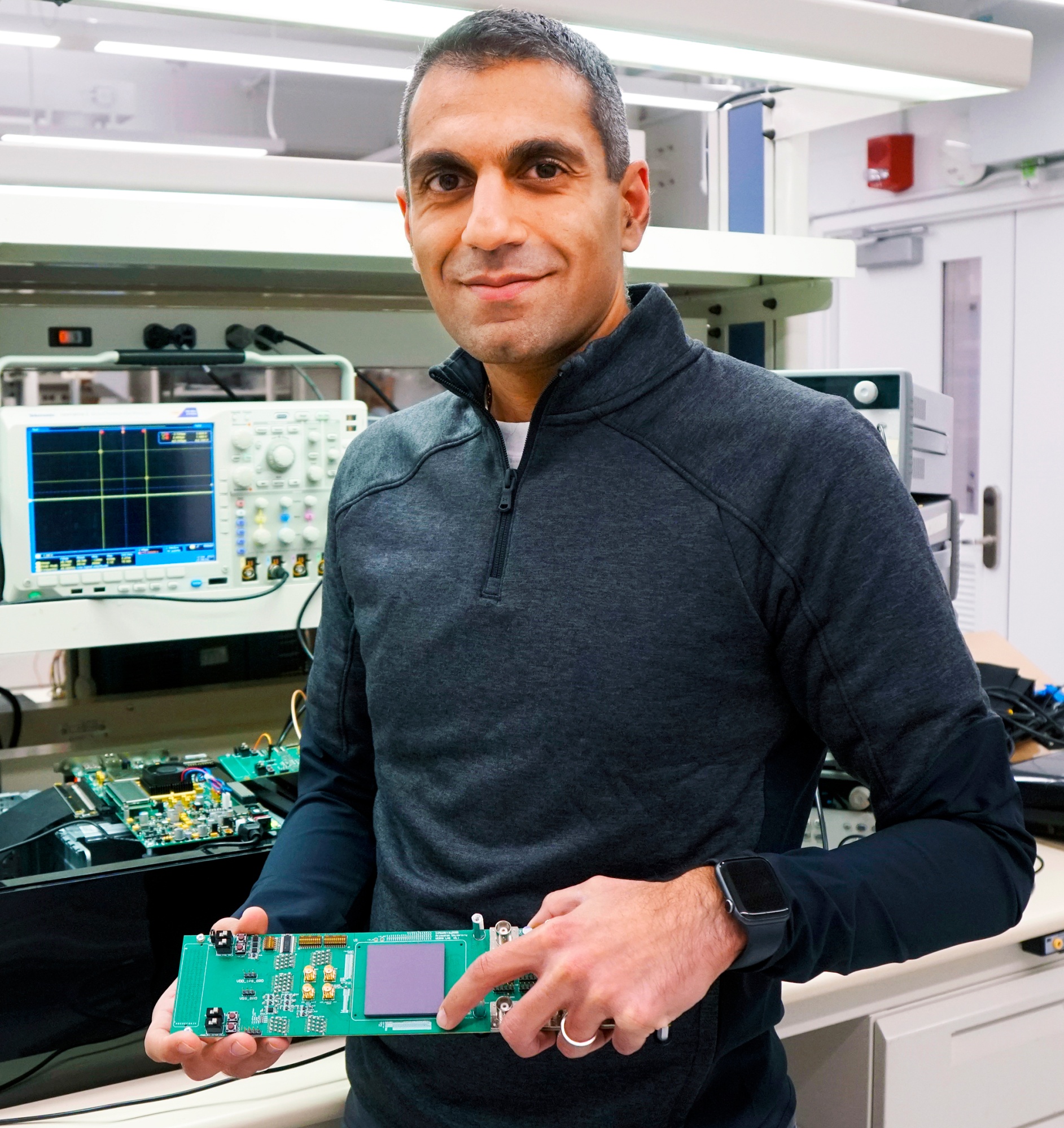

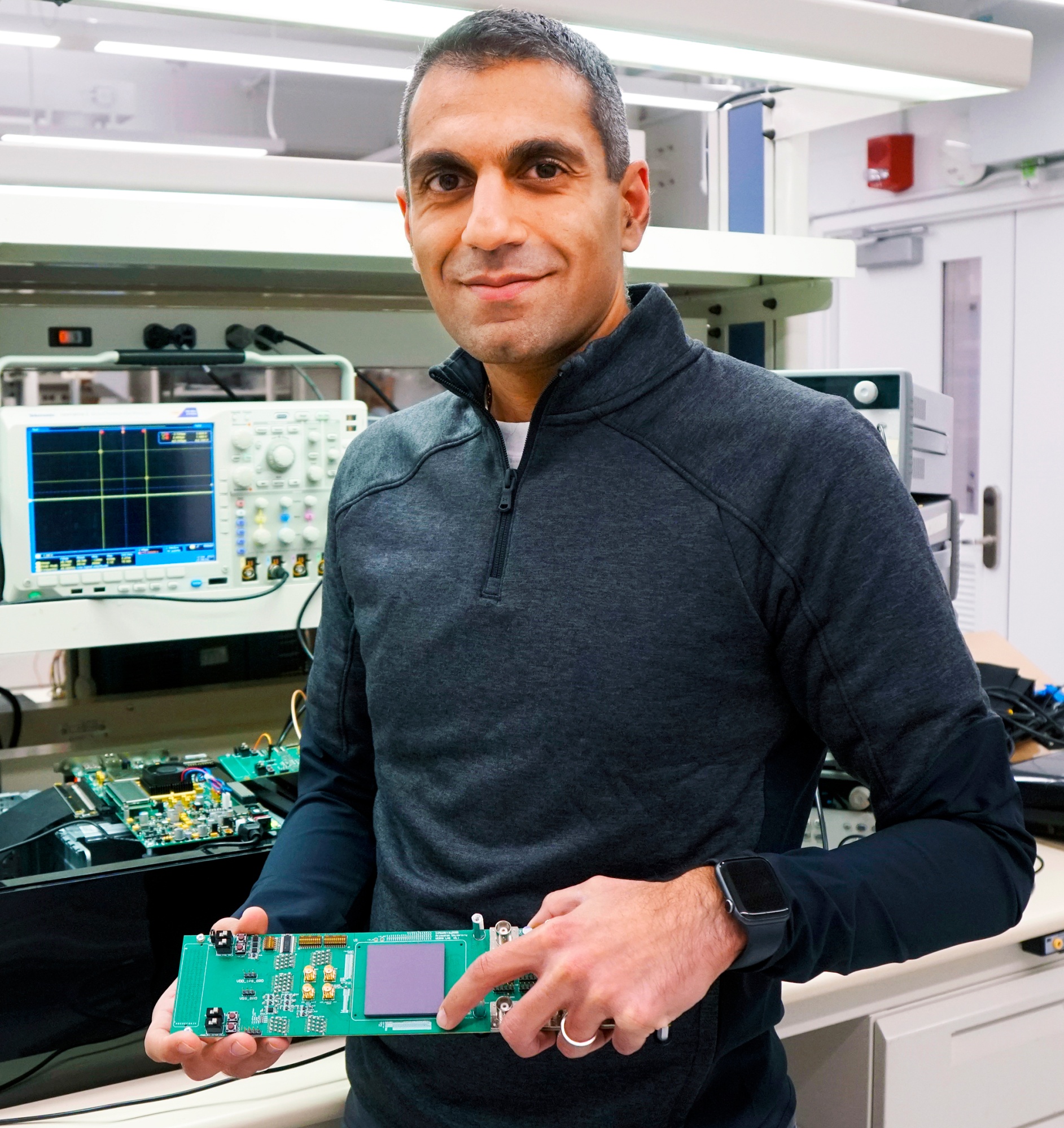

Sid Sheth, founder and CEO of d-Matrix, holds the company’s Jayhawk and Nighthawk chiplets.

Source: Courtesy d-Matrix

To understand a key reason artificial intelligence requires so much energy, imagine a computer chip serving as a branch of the local library and an AI algorithm as a researcher with borrowing privileges. Every time the algorithm needs data, it goes to the library, known as a memory chip, checks out the data and takes it to another chip, known as a processor, to carry out a function.

AI requires massive amounts of data, which means there are the equivalent of billions of books being trucked back and forth between these two chips, a process that burns through lots of electricity. For at least a decade, researchers have tried to save power by building chips that could process data where it’s stored. “Instead of bringing the book from the library to home, you’re going to the library to do your work,” says Stanford University professor Philip Wong, a top expert in memory chips who’s also a consultant to Taiwan Semiconductor Manufacturing Co.

This process, often referred to as in-memory computing, has faced technical challenges and is just now moving beyond the research stage. And with AI’s electricity use raising serious questions about its economic viability and environmental impact, techniques that could make AI more energy efficient might pay off big. This has made in-memory computing the subject of increased excitement—and even meant it’s begun to get caught up in the broader geopolitical wrangling over semiconductors.

Major chip manufacturers such as TSMC, Intel and Samsung are all researching in-memory computing. Intel Corp. has produced some of the chips to conduct research, says Ram Krishnamurthy, senior principal engineer at Intel Labs, the company’s research arm, though he declined to say how in-memory computing could fit into Intel’s product lineup. Individuals such as OpenAI Chief Executive Officer Sam Altman, companies like Microsoft, and government-affiliated entities from China, Saudi Arabia and elsewhere have all invested in startups working on the technology.

In November a US government panel that reviews foreign investments with national security implications forced the venture capital fund of Saudi energy company Aramco to divest from Rain AI, a San Francisco-based startup focused on in-memory computing. Aramco’s venture capital units are now seeking other companies working on in-memory computing outside of the US, with active scouting in China, according to people with knowledge of the matter, who asked to remain anonymous while discussing nonpublic business matters.

China, in particular, is showing increasing interest in the technology. A handful of Chinese startups such as PIM Chip, Houmo.AI and WITmem are raising money from prominent investors, according to PitchBook, which tracks investment data. Naveen Verma, a professor at Princeton University who’s also a co-founder of EnCharge AI, a startup working on in-memory computing, says he’s frequently invited to give talks about the subject at Chinese companies and universities. “They’re trying aggressively to understand how to build systems—in-memory compute systems and advanced systems in general,” he says. Verma says he hasn’t been to China for several years and has only given talks in Asia about his academic work, not about EnCharge’s technology.

EnCharge AI co-founder and CEO Naveen Verma holding a prototype switched-capacitor analog in-memory computing chip at his research lab at Princeton University.

Source: Scott Lyon

It’s far from assured that this chip technology will become a significant part of AI computing’s future. Traditionally, in-memory computing chips have been sensitive to environmental factors such as changes in temperature, which have caused computing errors. Startups are working on various approaches to improve this, but the techniques are new. Changing to new types of chips is expensive, and customers are often hesitant to do so unless they’re confident of significant improvements, so startups will have to convince customers that the benefits are worth the risks.

For now, in-memory computing startups aren’t working on the most difficult part of AI computing: training new models. This process, in which algorithms examine petabytes of data to glean patterns that they use to build their systems, is mostly handled by top-line chips designed by companies such as Nvidia Corp. The company has been working on its own strategies for improving power efficiency, including reducing the size of its transistors and improving the ways that chips communicate with one another.

Rather than take on Nvidia directly, startups making in-memory computing chips are aiming to build their businesses on inference, the task of using existing models to take prompts and spit out content. Inference is not as complicated as training, but it happens on a large scale, meaning there could be a good market for chips designed specifically to make it more efficient.

The intensive power consumption of Nvidia’s main product, a type of chip known as a graphics processing unit, makes it a relatively inefficient choice to use for inference, says Sid Sheth, founder and CEO of d-Matrix, a Silicon Valley-based chip startup that’s raised $160 million from investors like Microsoft Corp. and Singaporean state-owned investor Temasek Holdings Pte. He says pitching investors was challenging until the AI boom started. “The first half of ’23, everybody got it because of ChatGPT,” he says. The company plans to sell its first chips this year and reach mass production in 2025.

In-memory computing companies are still feeling out the best uses for their products. One in-memory computing startup, Netherlands-based Axelera, is targeting computer-vision applications in cars and data centers; backers of Austin, Texas-based Mythic see in-memory computing as ideal for applications such as AI-powered security cameras in the near term but hope the chips can eventually be used to train AI models.

The sheer scale of AI energy use adds urgency for everyone working on ways to make the technology more efficient, says Victor Zhirnov, chief scientist at Semiconductor Research Corp., an industry think tank. “AI desperately needs energy efficient solutions,” he says. “Otherwise it will kill itself very soon.”


AI Energy Crisis Boosts Interest in Chips That Do It All
