It might seem I am straying from my normal beat in talking about implementation; after all, I normally write on systems, applications and front-end design. But while I’m not an expert in implementation, I was curious to understand how the trending applications of today (automotive, AI, 5G, IoT, etc.) create new demands on implementation, over and above the primarily application-independent challenges associated with advancing semiconductor processes. So, with apologies in advance to the deep implementation and process experts, I’m going to skip those (very important) topics and talk instead about my application perspectives.

An obvious area to consider is low-power design. Automotive, mobile (phone and AR/VR) and IoT applications all depend on low power (even in a car, all the electronics we are adding can quickly drain the battery). AI is also becoming very power-critical, especially as it increasingly moves to the edge for FaceID, voice recognition and similar features. 5G on the edge, in enhanced mobile broadband applications for example, must be very carefully power managed because of heavy MIMO support and the parallelism needed to sustain high throughput rates.

Power is a special challenge in design because it touches virtually all aspects, from architecture through verification and synthesis, all the way to the PG netlist. Certainly you need uniformity in specifying power intent. The UPF standard helps with this, but we all know that different tools have slightly different ways of interpreting standards. Mix-and-match flows will struggle with varying interpretations, to the point that design convergence can become challenging. The same could even be true within a flow built on a single vendor’s tools unless that vendor pays special attention to uniformity of interpretation. So this is one key requirement in the implementation flow.

Another big requirement, associated certainly with automotive but also with long-life, low-support IoT, is reliability. We demand very low failure rates and very long lifetimes (compared to consumer electronics) in this area. Implementation must take into consideration on-chip variation (OCV) in timing. I’ve written before about the impact of local power integrity variations on local timing. Equally, power inrush associated with power switching and unexpectedly high current demand in certain use modes increase the risk of damaging electromigration (EM). Traditional global margin approaches to manage this variability are already painfully expensive in area overhead. Better approaches are needed here.

Aging is a (relatively) new concern in mass-market electronics. One major root cause is negative-bias temperature instability (NBTI), which occurs when (stable) electric fields are applied for a long time across a dielectric (for example, when a part of a circuit is idle, or a clock is gated for long periods). This causes threshold voltages to increase over time, which can push near-critical paths to become critical. Again, it would be overkill (and too expensive) to simply margin this problem away, so you have to analyze for risk areas based in some manner on typical use cases.
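
To make the "analyze rather than margin" idea concrete, here is a minimal Python sketch. Everything in it is an assumption for illustration: the power-law form of the threshold shift, the coefficients `a` and `n`, the supply and threshold values, the alpha-power-law delay scaling, and the `Path` and `at_risk` names. It is not how any particular signoff tool models aging; it only shows why per-path stress, taken from use cases, matters more than a single blanket margin.

```python
from dataclasses import dataclass


@dataclass
class Path:
    name: str
    delay_ns: float         # fresh (time-zero) path delay
    stress_fraction: float  # share of lifetime spent under static bias (hypothetical use-case input)


def vth_shift(stress_fraction, years, a=0.02, n=1 / 6):
    """Threshold shift in volts as a power law in stress time (a, n are illustrative)."""
    return a * (stress_fraction * years) ** n


def aged_delay(delay_ns, dvth, vdd=0.8, vth0=0.3, alpha=1.3):
    """Scale delay assuming delay is proportional to Vdd / (Vdd - Vth)**alpha."""
    return delay_ns * ((vdd - vth0) / (vdd - vth0 - dvth)) ** alpha


def at_risk(paths, clock_ns, years):
    """Return names of paths whose aged delay exceeds the clock period."""
    flagged = []
    for p in paths:
        dvth = vth_shift(p.stress_fraction, years)
        if aged_delay(p.delay_ns, dvth) > clock_ns:
            flagged.append(p.name)
    return flagged


paths = [
    Path("gated_block_path", 0.95, 0.9),   # near-critical, mostly idle
    Path("always_toggling", 0.95, 0.0),    # near-critical, never statically stressed
    Path("relaxed_path", 0.80, 0.9),       # stressed, but plenty of slack
]
print(at_risk(paths, clock_ns=1.0, years=10))
```

With these made-up numbers only the near-critical, heavily stressed path is flagged. A flat margin large enough to cover it would also penalize the other two paths, which is the expense the paragraph above is pointing at.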

Thermal concerns are another factor, and here I’ll illustrate with an AI example. As we chase the power-performance curve, advanced architectures for deep neural nets are moving to arrays of specialized processors with a need for very tightly coupled caching and faster access to main memory, leading to a lot of concentrated activity. Thanks to FinFET self-heating and Joule heating in narrower interconnects, this raises EM and timing concerns which must be mitigated in some manner.
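
The link between local heating and EM can be sketched with Black's equation, which models interconnect lifetime as MTTF = A · J^(-n) · exp(Ea / kT). The Python below computes only a lifetime ratio between a reference condition and a hotter one, so the constant A drops out. The activation energy `ea_ev`, the current-density exponent `n`, and the example temperatures are assumed values chosen for illustration, not figures from any foundry or tool.

```python
import math

BOLTZMANN_EV_PER_K = 8.617333e-5  # Boltzmann constant in eV/K


def em_lifetime_ratio(t_ref_c, t_hot_c, j_ratio=1.0, ea_ev=0.9, n=2.0):
    """MTTF(hot) / MTTF(ref) under Black's equation.

    t_ref_c, t_hot_c: wire temperatures in Celsius.
    j_ratio: current density at the hot condition divided by the reference.
    ea_ev, n: assumed activation energy (eV) and current-density exponent.
    """
    t_ref = t_ref_c + 273.15
    t_hot = t_hot_c + 273.15
    thermal = math.exp(ea_ev / BOLTZMANN_EV_PER_K * (1.0 / t_hot - 1.0 / t_ref))
    return thermal * j_ratio ** (-n)


# A hypothetical 20 C local hot spot on top of a 105 C reference.
print(round(em_lifetime_ratio(105.0, 125.0), 2))
```

Under these assumed parameters, a 20 °C local rise cuts the modeled EM lifetime to roughly a quarter of the reference, about the same penalty as doubling current density at constant temperature. That sensitivity is why concentrated activity in a small area deserves local analysis.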

Still on AI, there’s an increasing move to varying word-widths through neural nets. Datapath layout engines will need to accommodate this efficiently. Meanwhile, the front-end of 5G, for enhanced mobile broadband (eMBB) and even more for mmWave, must be a blizzard of high-performance and highly parallel activity in order to sustain bit-rates of 10 Gbps. For eMBB at least (I’m not sure about mmWave), this is managed through a multi-input, multi-output (MIMO) interface through multiple radios to base stations, which means multiple parallel paths into and out of the modem. In addition, there is support for highly parallel processing from each radio into one or more DSPs to implement beamforming to identify the strongest signal. Getting to these data rates requires very tight timing management in implementation, and also very tight power management.

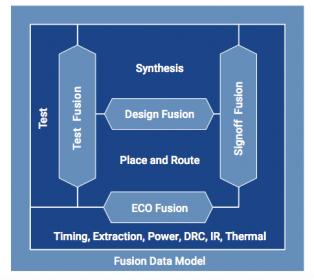

So yeah, I would expect implementation for these applications to have to advance beyond traditional flows. Synopsys has developed their Fusion Technology (see the graphic above) as an answer to this need, tightly tying together all aspects of implementation: synthesis, test, P&R, ECO and signoff. The premise is that all these demands require much tighter correlation and integration through the flow than can be accomplished with a mix-and-match approach. Fusion Technology brings together all of Synopsys’ implementation, optimization and signoff tools and purportedly has demonstrated promising early results.

If you’re wondering about power integrity/reliability, Synopsys and ANSYS have announced a partnership, delivering RedHawk Analysis Fusion, an unsurprising name in this context. So they have that part covered too.

I picked out here a few topics that make sense to me. To get the full story on the Fusion technology, check out the Synopsys white-paper HERE.
