Dual in-line memory modules (DIMMs) with double data rate synchronous dynamic random access memory (DDR SDRAM) have been around since before we were worried about Y2K. Over the intervening years this format for provisioning memory has evolved from supporting single data rate (SDR) SDRAM around 1995 to DDR1 in 2000, DDR2 in 2003, DDR3 in 2007 and DDR4 in 2014. Over this time bus clock speeds have gone from 100-200 MHz to 1.06-2.13 GHz, bringing a commensurate increase in performance. While the DIMM format is still king of the hill when it comes to overall capacity, the time has arrived for an entirely different format, based on much newer technology, to provide SDRAM for compute-intensive applications that demand the highest bandwidth.

JEDEC’s High Bandwidth Memory (HBM) specification represents the most significant reformulation of the SDRAM format in over a generation. Not only does HBM go faster, but it also has a dramatically reduced physical footprint. I recently had a chance to review a presentation given at the Synopsys User Group Silicon Valley 2017 Conference that does an excellent job of clarifying how HBM operates and how it differs from DDR-based technologies. The title of the presentation is “DDR4 or HBM2 High Bandwidth Memory: How to Choose Now”. It was prepared by Graham Allan from Synopsys.

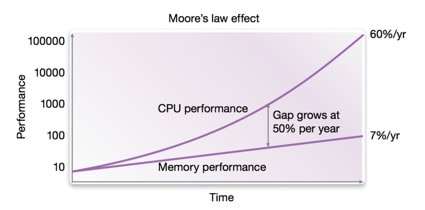

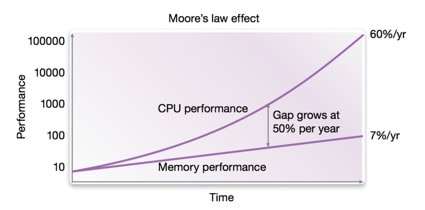

While CPU performance has been scaling at ~60% per year, memory performance has languished at a rate closer to 7% per year. This means that in some applications the CPU outpaces what the system memory can deliver. Interestingly, the DDR specifications have diversified for different applications: DDR3/4 are migrating to DDR5 for servers, PCs and consumer uses; LPDDR2/3/4 are moving to LPDDR5 for mobile and automotive; and GDDR5 is leading to GDDR6 for graphics. The issues that need to be addressed are bandwidth, latency, power, capacity and cost, and as always there is a relentless trade-off among these factors.
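
To see how quickly those two growth rates pull apart, here is a minimal Python sketch that compounds them year over year. It assumes both CPU and memory start from the same baseline and that the rates stay constant, which is a simplification for illustration only; the function name is hypothetical.

```python
# Compound the growth rates quoted in the text: ~60%/yr for CPU performance
# versus ~7%/yr for memory. Equal starting points and constant rates are
# simplifying assumptions for illustration.
CPU_GROWTH = 0.60
MEM_GROWTH = 0.07


def performance_gap(years: int) -> float:
    """Hypothetical helper: CPU-to-memory performance ratio after `years`."""
    cpu = (1 + CPU_GROWTH) ** years
    mem = (1 + MEM_GROWTH) ** years
    return cpu / mem


if __name__ == "__main__":
    for years in (1, 5, 10):
        print(f"after {years:2d} years the gap is ~{performance_gap(years):.1f}x")
```

Even under these rough assumptions the ratio grows roughly 1.5x per year, which is why bandwidth, rather than raw CPU speed, becomes the limiting factor.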

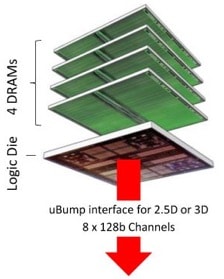

HBM has taken the strategy of going wider while keeping operating speeds at modest levels. What’s nice is that the JEDEC DRAM spec is retained, but the operating bus is now 1,024 pins wide. The HBM2 spec allows up to 2,000 Mb/s (2 Gb/s) per pin. This width is made practical by stacked die connected with through-silicon vias (TSVs). In fact, the die can be stacked 2, 4 or 8 high, with a logic and PHY die on the bottom.
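
As a rough check on what going wide buys, this minimal sketch multiplies the bus width by the per-pin rate to get the theoretical peak bandwidth of a single HBM2 stack. It ignores refresh, command overhead and other real-world losses, so treat the result as an upper bound; the helper name is illustrative, not part of any spec.

```python
# Theoretical peak bandwidth of one HBM2 stack: bus width x per-pin data rate.
# Overheads such as refresh and command cycles are ignored (upper bound only).


def peak_bandwidth_gb_per_s(bus_width_bits: int, pin_rate_mbps: float) -> float:
    """Hypothetical helper: peak bandwidth in GB/s (decimal, 1 GB = 1e9 bytes)."""
    bits_per_second = bus_width_bits * pin_rate_mbps * 1e6
    return bits_per_second / 8 / 1e9


HBM2_BUS_WIDTH_BITS = 1024  # 1,024-pin interface per stack
HBM2_PIN_RATE_MBPS = 2000   # up to 2,000 Mb/s per pin

if __name__ == "__main__":
    bw = peak_bandwidth_gb_per_s(HBM2_BUS_WIDTH_BITS, HBM2_PIN_RATE_MBPS)
    print(f"HBM2 stack peak: {bw:.0f} GB/s")  # prints "HBM2 stack peak: 256 GB/s"
```

The 256 GB/s result is consistent with the "around 250GB/s" per-interface figure that comes up in the capacity discussion.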

HBM started out as a memory specification for graphics, which is often a driver for leading-edge technologies. HBM has, however, proven itself in many other areas. These include parallel computing, digital image and video processing, FPGAs, scientific computing, computer vision, deep learning, networking and others.

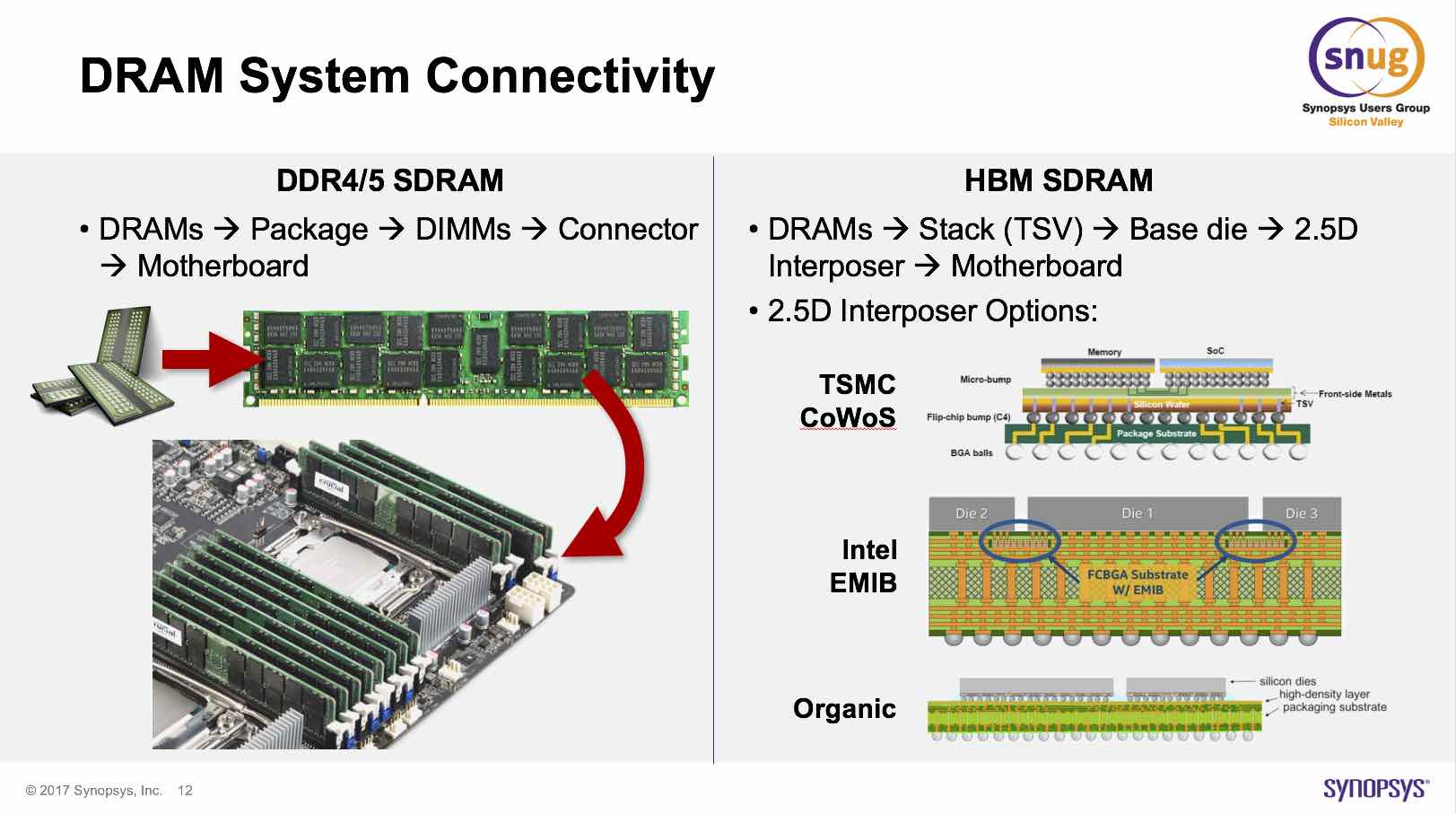

The Synopsys presentation from SNUG is full of details about how DDR4/5 compares to HBM. Here are some of the highlights. The physical configuration is completely different: the HBM memory stack sits planar to the system board and the other SOCs. Instead of being plugged into a socket perpendicular to the motherboard, HBM is placed on top of an interposer. Three prominent choices for this today are TSMC’s CoWoS, Intel’s EMIB and organic substrates. The smaller size and shorter interface connections give HBM big advantages.

On the capacity front DIMMs win, with up to 128GB on a 2-rank RDIMM built from 3DS stacked 8Gb SDRAM die. It seems that stacking die is a trick being used in DIMM configurations too. Also, the “R” in RDIMM stands for registered: registers are added to allow increased fanout, so more devices can share the same bus. All told, high-end servers can sport 96 DIMM slots, yielding over 12TB of RAM. In comparison, a host SOC with 4 HBM interfaces, each with 8GB, can provide 32GB of RAM. Despite the large difference in peak capacity, each HBM interface can run much faster, at around 250GB/s. This is the result of many important architectural changes made in moving to HBM: for example, there are 8 independent 128-bit channels per stack, and refresh efficiency has been improved.
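
The capacity and channel figures above can be tallied with a short Python sketch. It uses decimal units (1 TB = 1,000 GB) and assumes a peak of 256 GB/s per stack (1,024 pins at 2 Gb/s); the aggregate bandwidth is derived here for illustration and is not a number taken from the presentation. All constant and function names are hypothetical.

```python
# Capacity and bandwidth tallies from the figures quoted in the text.
# Decimal units throughout (1 TB = 1,000 GB). The per-stack peak of 256 GB/s
# is an assumption derived from 1,024 pins x 2 Gb/s, not a measured value.

RDIMM_CAPACITY_GB = 128          # 2-rank 3DS RDIMM
DIMM_SLOTS = 96                  # high-end server
HBM_STACKS = 4                   # HBM interfaces on the host SOC
HBM_STACK_CAPACITY_GB = 8
CHANNELS_PER_STACK = 8
CHANNEL_WIDTH_BITS = 128
HBM_STACK_PEAK_GB_PER_S = 256.0  # assumed theoretical peak per stack


def dimm_capacity_tb() -> float:
    """Total DIMM capacity of a fully populated server, in TB."""
    return RDIMM_CAPACITY_GB * DIMM_SLOTS / 1000


def hbm_capacity_gb() -> int:
    """Total HBM capacity across all stacks, in GB."""
    return HBM_STACKS * HBM_STACK_CAPACITY_GB


def hbm_stack_width_bits() -> int:
    """Interface width of one stack: channels x bits per channel."""
    return CHANNELS_PER_STACK * CHANNEL_WIDTH_BITS


def hbm_aggregate_peak_gb_per_s() -> float:
    """Theoretical peak bandwidth summed over all stacks, in GB/s."""
    return HBM_STACKS * HBM_STACK_PEAK_GB_PER_S


if __name__ == "__main__":
    print(f"DIMM capacity: {dimm_capacity_tb():.3f} TB")
    print(f"HBM capacity: {hbm_capacity_gb()} GB")
    print(f"HBM stack width: {hbm_stack_width_bits()} bits")
    print(f"HBM aggregate peak: {hbm_aggregate_peak_gb_per_s():.0f} GB/s")
```

The width check confirms that 8 channels of 128 bits add up to the 1,024-pin bus, while the roughly 384x capacity ratio (12,288 GB versus 32 GB) is the price HBM pays for its bandwidth.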

The last topic I want to cover here is thermal. DIMM configurations take up a larger volume, so they are easier to cool; a fan alone usually provides enough cooling. HBM is much denser and usually sits much closer to the host SOC. HBM includes features that keep temperature increases from reaching catastrophic levels, but more sophisticated cooling solutions are still required, and in many cases liquid cooling is called for.

There are many trade-offs in deciding which is the best solution for any one design. I would strongly suggest reviewing the Synopsys presentation in detail; it covers many of the more nuanced differences between DIMM- and HBM-based memory solutions. Indeed, there may be cases where a hybrid solution offers the best overall system performance. Please look at the Synopsys website for more information on this topic and the IP they offer as potential solutions for design projects.
