Cadence's Jasper group and Sonics have jointly announced a relationship under which Sonics uses JasperGold Apps as part of its verification flow. I talked to Drew Wingard, the Sonics CTO, about how they use it.

One use is during the day, when their design engineers use Jasper as part of their verification arsenal. Interestingly, it is the design engineers who use it, not the verification engineers.

Formal verification is especially good at finding corner-case problems that simulation misses. It is almost impossible to build a cache-coherent network without formal, for example, since you will never think of everything to simulate. It finds deep bugs in multiprocessor systems, which is pretty much what a modern SoC is. Sonics have a bigger problem than a single SoC because their product is so configurable: it is not just a matter of running formal to prove something about a static design. It goes without saying that it is not possible to exhaustively generate all the possible configurations, or even anything close, any more than you can exhaustively simulate all conditions on a modern SoC.

But the big motivation for using JasperGold was at night, when they run their nightly regressions. Sonics have discovered in the past that almost any error in the design eventually shows up as a protocol error, in much the same way that stuck-at faults are surprisingly powerful in testing ICs even though many faults are technically something else. The value in a network-on-chip (NoC) is often being able to mix and match protocols: ARM, AXI, OCP, even old VSIA stuff. For a long time Sonics have developed protocol checkers that make sure the protocols are executed properly. They even distribute these to their customers, and the first question when a customer reports a bug is always whether they have turned the protocol checkers on. Often the customer is soon back: “my bad.”

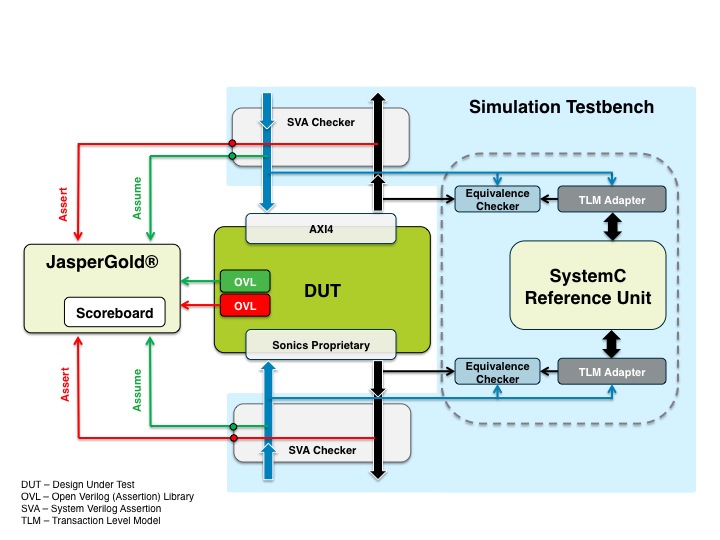

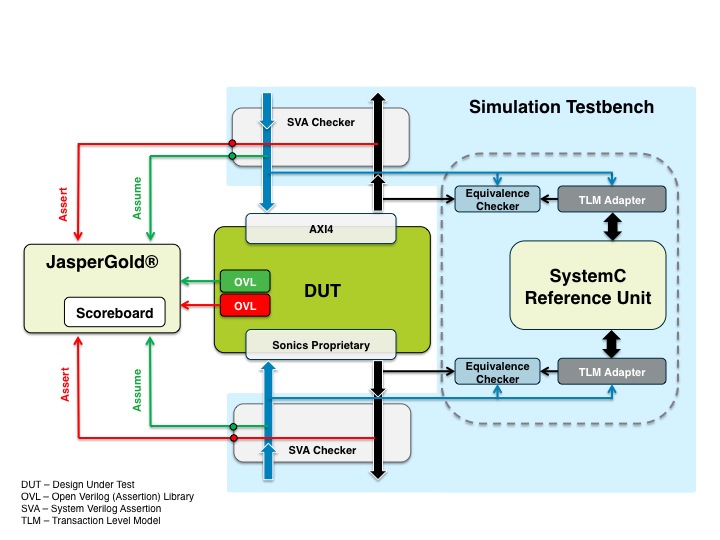

So the first requirement for adopting JasperGold was that the protocol checkers could be used to generate formal constraints and assertions with only minimal incremental effort; otherwise adoption would be too time-consuming and expensive. That would then make it easy to run formal techniques in the nightly regressions alongside simulation.

The Sonics nightly regressions work by generating constrained random configurations and then running constrained random simulations on them, and now JasperGold too. There is a tradeoff between how many random configurations are generated and how deeply each is exercised, whether measured in the number of vectors or in the amount of formal proof time allowed. After all, there is a fixed-size regression farm and the nightly regressions have to run in a night, by definition. That way problems can be fixed the next day and licenses are freed up for use by engineers when they arrive at work.
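The tradeoff is simple arithmetic once the farm size and the length of the night are fixed. Here is a minimal sketch in Python. The function name and every number in it are hypothetical and chosen only for illustration; none of them come from Sonics.

```python
def minutes_per_config(farm_slots: int, night_hours: float, num_configs: int) -> float:
    """Compute minutes available to each configuration in one nightly run.

    farm_slots  : jobs the regression farm can run in parallel (assumed value)
    night_hours : length of the nightly window in hours (assumed value)
    num_configs : number of random configurations generated that night
    """
    if num_configs <= 0:
        raise ValueError("num_configs must be positive")
    total_minutes = farm_slots * night_hours * 60
    return total_minutes / num_configs


if __name__ == "__main__":
    # Same farm, same night: more configurations means shallower testing of each.
    for n in (100, 1000, 5000):
        print(n, "configs ->", minutes_per_config(200, 10, n), "minutes each")
```

Whatever the real numbers are, the shape is the same: the total budget is fixed, so every extra configuration is paid for with less simulation or proof time on the others.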

Sonics have discovered that it is much better to generate lots of configurations and then do relatively shallow testing on them. After all, a bug that shows up after 21 transfers but doesn’t create any issues in the first 20 is not highly likely. Better to generate more configurations and do some constrained random testing using different starting seeds. In fact Sonics generate thousands every night.
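To make "lots of configurations from different starting seeds" concrete, here is a hedged sketch of seeded constrained random generation in Python. It is not Sonics's generator: the `NocConfig` fields, the value ranges, and the function names are all assumptions made up for this example. Only the protocol names come from the article.

```python
import random
from dataclasses import dataclass

# Protocol names are from the article; everything else here is hypothetical.
PROTOCOLS = ("AXI", "OCP")
DATA_WIDTHS = (32, 64, 128)


@dataclass(frozen=True)
class NocConfig:
    seed: int
    initiators: tuple   # protocol spoken by each initiator port
    targets: tuple      # protocol spoken by each target port
    data_width: int     # bits


def random_config(seed: int) -> NocConfig:
    """Build one configuration; the same seed always gives the same result."""
    rng = random.Random(seed)
    # Constraint: at least one initiator and one target, at most eight of each.
    n_init = rng.randint(1, 8)
    n_targ = rng.randint(1, 8)
    return NocConfig(
        seed=seed,
        initiators=tuple(rng.choice(PROTOCOLS) for _ in range(n_init)),
        targets=tuple(rng.choice(PROTOCOLS) for _ in range(n_targ)),
        data_width=rng.choice(DATA_WIDTHS),
    )


def nightly_configs(base_seed: int, count: int) -> list:
    """Generate `count` configurations from consecutive seeds."""
    return [random_config(base_seed + i) for i in range(count)]
```

Recording the seed in each configuration means that a failure found overnight can be regenerated exactly the next morning, which is the point of seeding in the first place.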

With limited modifications they could reuse their existing protocol checkers (which are standard SystemVerilog constraints). The worst case was a protocol checker requiring about 15% rewrite. So the Sonics verification approach is to generate a huge number of configurations of the NoC(s), then test each of them a bit with all the protocol checkers, using either simulation or, now, formal proof techniques. The fact that the same protocol checkers work in both environments makes this very powerful.
