During his keynote address at the CadenceLIVE 2021 conference, CEO Lip-Bu Tan commented on several market trends. He observed that most data today is generated at the edge, but only 20% is processed there. He predicted that by 2030, 80% of data will be processed at the edge, most of it as AI workloads on edge devices. This migration is already happening rapidly and calls for different types of on-device AI SoCs.

During the same conference, president Anirudh Devgan presented the strategy guiding Cadence’s next wave of innovations and technology offerings. One of the three prongs of the strategy is enabling design excellence for its customers. Cadence delivers this through its EDA tools, emulation platforms, semiconductor IP and productivity software tools.

Cadence has been executing its strategy and announcing new capabilities at a nice pace. In July, it announced Cerebrus, the Intelligent Chip Explorer. Cerebrus falls under the EDA tool category of its design excellence drive. This month, Cadence announced its Tensilica AI Platform for accelerating AI SoC development. The related press release mentioned three product families optimized for varying on-device AI requirements. The products fall under the category of semiconductor IP solutions for design excellence. This is the context for this blog.

I had an opportunity to discuss this product announcement with Pulin Desai. Pulin is the group director of product marketing and management for Tensilica Vision & AI at Cadence. The following is a synthesis of what I gathered from our conversation.

Pulin stated that Cadence is focused on bringing the most energy-efficient on-device IP solutions to AI SoCs, for all types of workloads and across a broad range of market segments. This in turn requires the product to be configurable, extensible and scalable across performance and energy parameters. Cadence took a platform approach that can deliver solutions to address these varying requirements.

Market Requirements

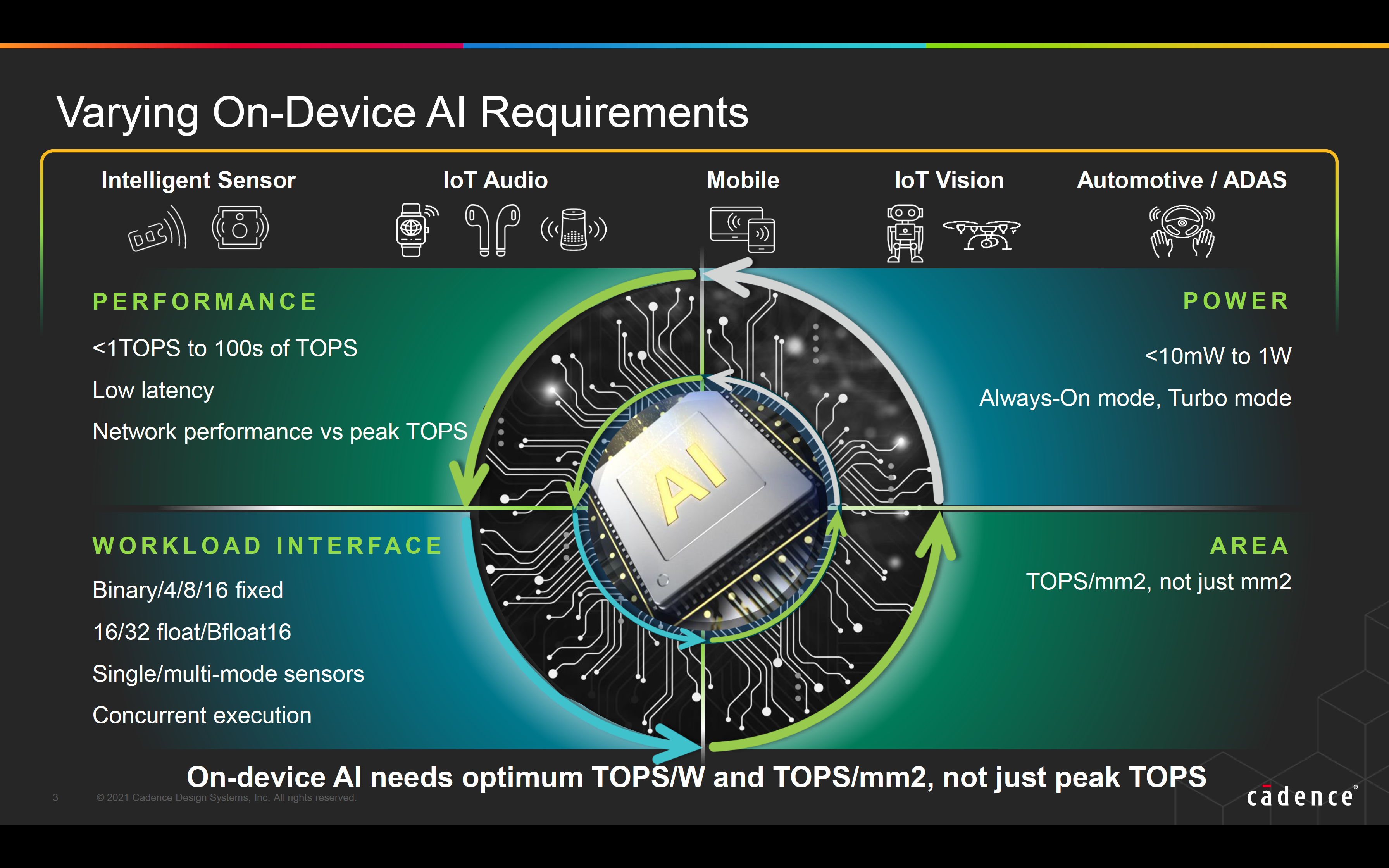

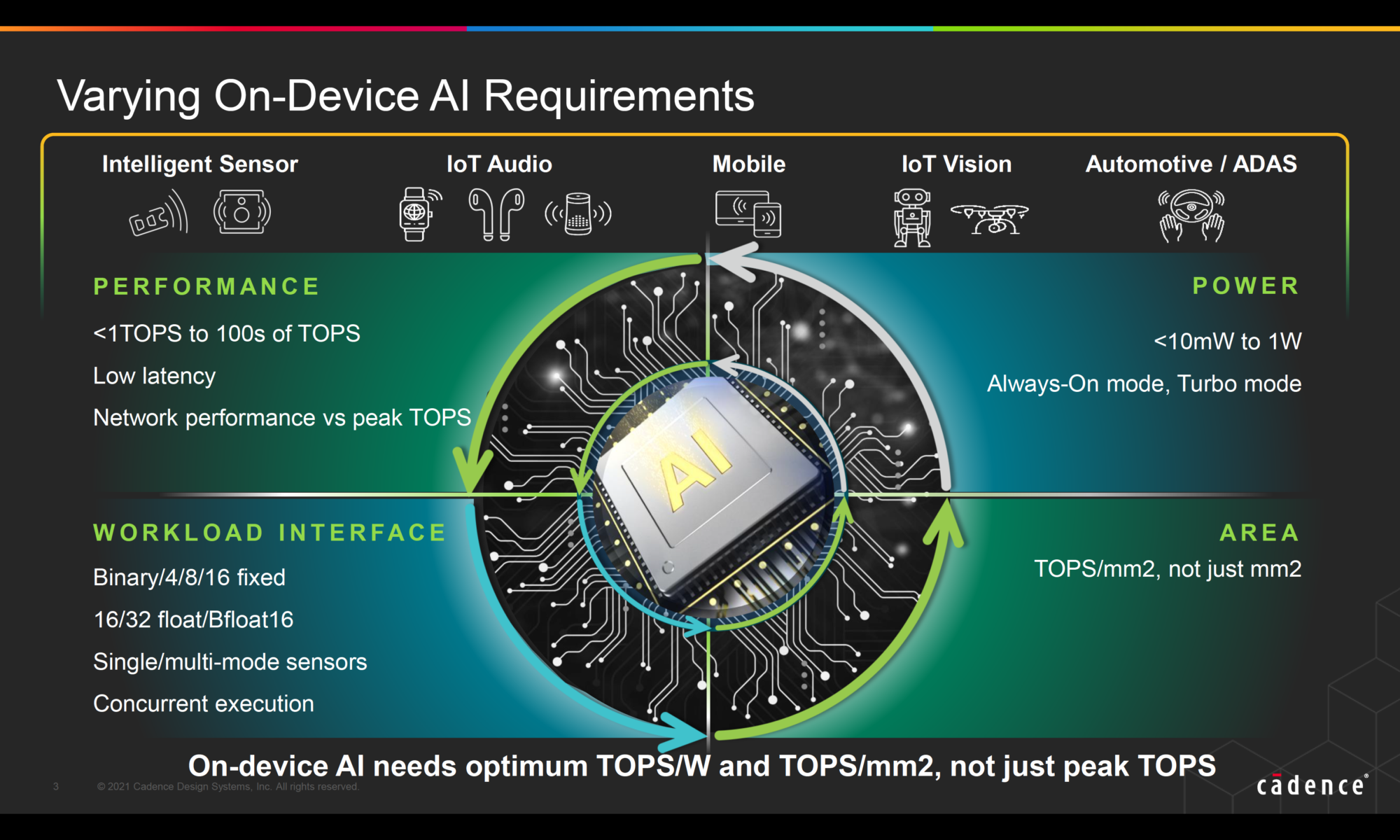

The supported market segments range from simple intelligent sensors to IoT audio/vision, mobile and automotive/ADAS. These market segments are looking for a solution that enables low cost, rapid development, longer battery life and quick product differentiation. Customers also want a configurable and extensible software-hardware platform that makes it easy for them to scale their products to meet different segments of their markets.

Product Requirements

The market requirements identified above translate into the following product-level requirements. It is no longer just the Tera Operations Per Second (TOPS) rating that matters. It is optimum TOPS per Watt and TOPS per sq. mm of silicon area. It is performance/speed at the lowest latency. It is the ability to interface with varying types of workloads that operate on fixed or floating-point data. It is the capacity to process data from single or multi-mode sensors and execute concurrently. Refer to the figure below to see the very wide range of performance, power and workload interfaces that need to be addressed.

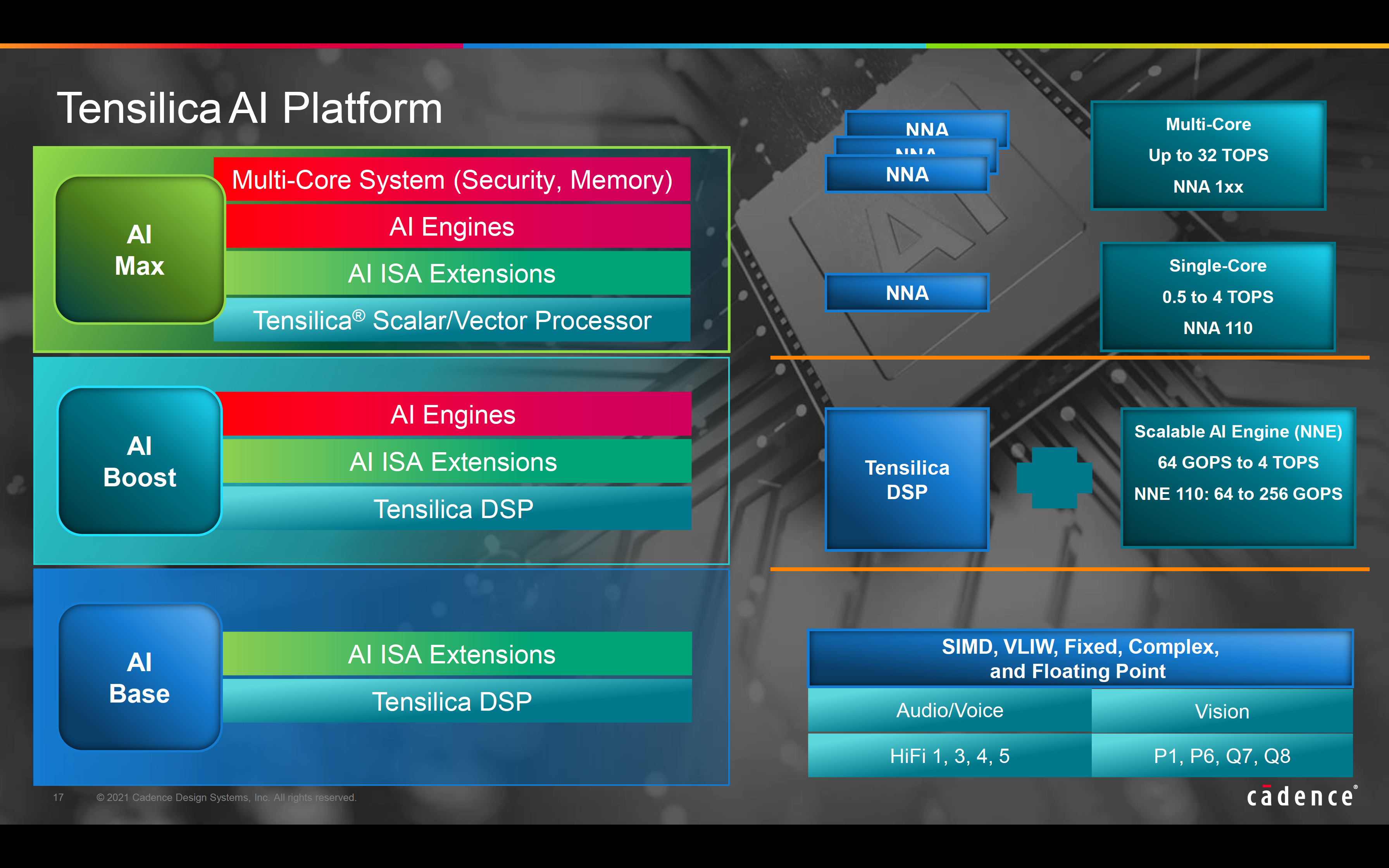
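To make the point about TOPS per Watt and TOPS per sq. mm concrete, here is a minimal Python sketch that compares two candidate designs on those normalized metrics instead of raw TOPS. The design names and every number in it are hypothetical values chosen for illustration; none of them come from Cadence or from the figure above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    """A hypothetical AI engine configuration (illustrative numbers only)."""
    name: str
    tops: float       # peak tera operations per second
    watts: float      # power at that operating point
    area_mm2: float   # silicon area in square millimeters

    @property
    def tops_per_watt(self) -> float:
        return self.tops / self.watts

    @property
    def tops_per_mm2(self) -> float:
        return self.tops / self.area_mm2


def rank_by_energy_efficiency(candidates):
    """Sort candidates from most to least energy efficient (TOPS per Watt)."""
    return sorted(candidates, key=lambda c: c.tops_per_watt, reverse=True)


# Hypothetical designs: design_a has the higher raw TOPS rating,
# design_b delivers more operations per watt.
candidates = [
    Candidate("design_a", tops=8.0, watts=4.0, area_mm2=10.0),
    Candidate("design_b", tops=2.0, watts=0.5, area_mm2=4.0),
]

best = rank_by_energy_efficiency(candidates)[0]
for c in candidates:
    print(f"{c.name}: {c.tops_per_watt:.2f} TOPS/W, {c.tops_per_mm2:.2f} TOPS/mm2")
print(f"Most energy efficient: {best.name}")
```

In this made-up comparison the design with the lower TOPS rating ranks first on energy efficiency while the other leads on area efficiency, which is why a single headline TOPS number is not enough to pick an engine for a battery-powered product.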

Tensilica AI DSPs

The AI SoCs that implement the above on-device AI product requirements need AI engines as well as DSP capabilities. The DSP functions are needed for processing the inputs received from multiple sensors. Cadence already has a long track record of successful Tensilica DSPs with AI ISA extensions based on the time-tested Xtensa® configurable and extensible processor platform. Tensilica DSPs are shipping in volume production in numerous SoCs and end products. It made strategic sense to build on this strong DSP foundation and expand these AI solutions into a wider range of AI-enabled products. Refer to the figure below.

Tensilica AI Platform

The Tensilica AI Platform supports three AI product families to satisfy a broad range of market requirements: AI Base, AI Boost and AI Max. A comprehensive common software platform makes it easy to scale across these product families. The configurability and extensibility features allow some markets/applications to be addressed by multiple Tensilica AI solutions. The platform includes a Neural Network Compiler which supports industry-standard frameworks such as TensorFlow, ONNX, PyTorch, Caffe2, TensorFlow Lite and MXNet for automated end-to-end code generation; Android Neural Network Compiler; TFLite Delegates for real-time execution; and TensorFlow Lite Micro for microcontroller-class devices.

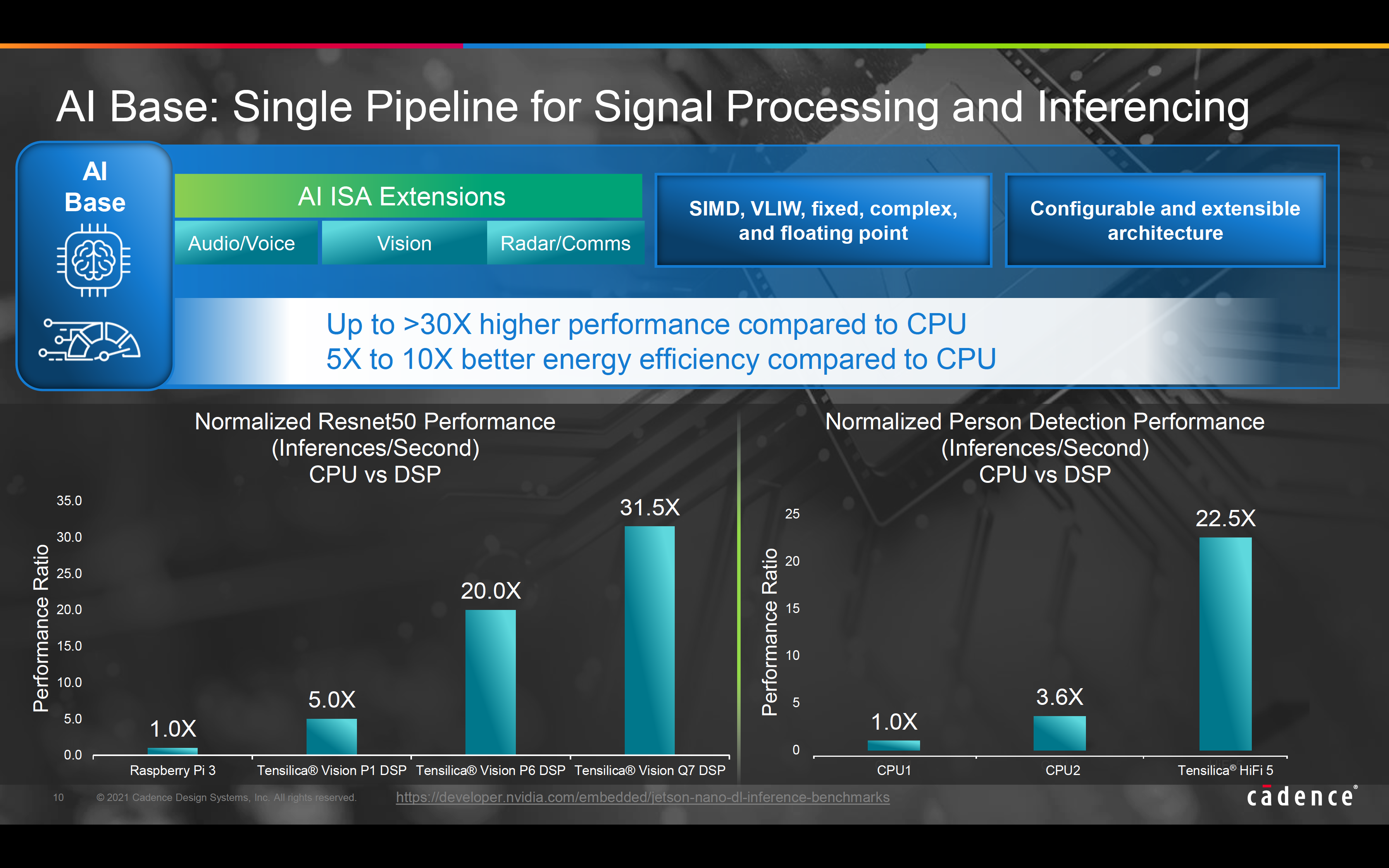

AI Base: The low-end product, comprising Tensilica DSPs with AI ISA extensions, targets voice/audio/vision/radar/LiDAR-related applications. It can deliver up to 30x better performance and 5x-10x better energy efficiency compared to a conventional CPU-based solution. Refer to the figure below for some benchmark results.

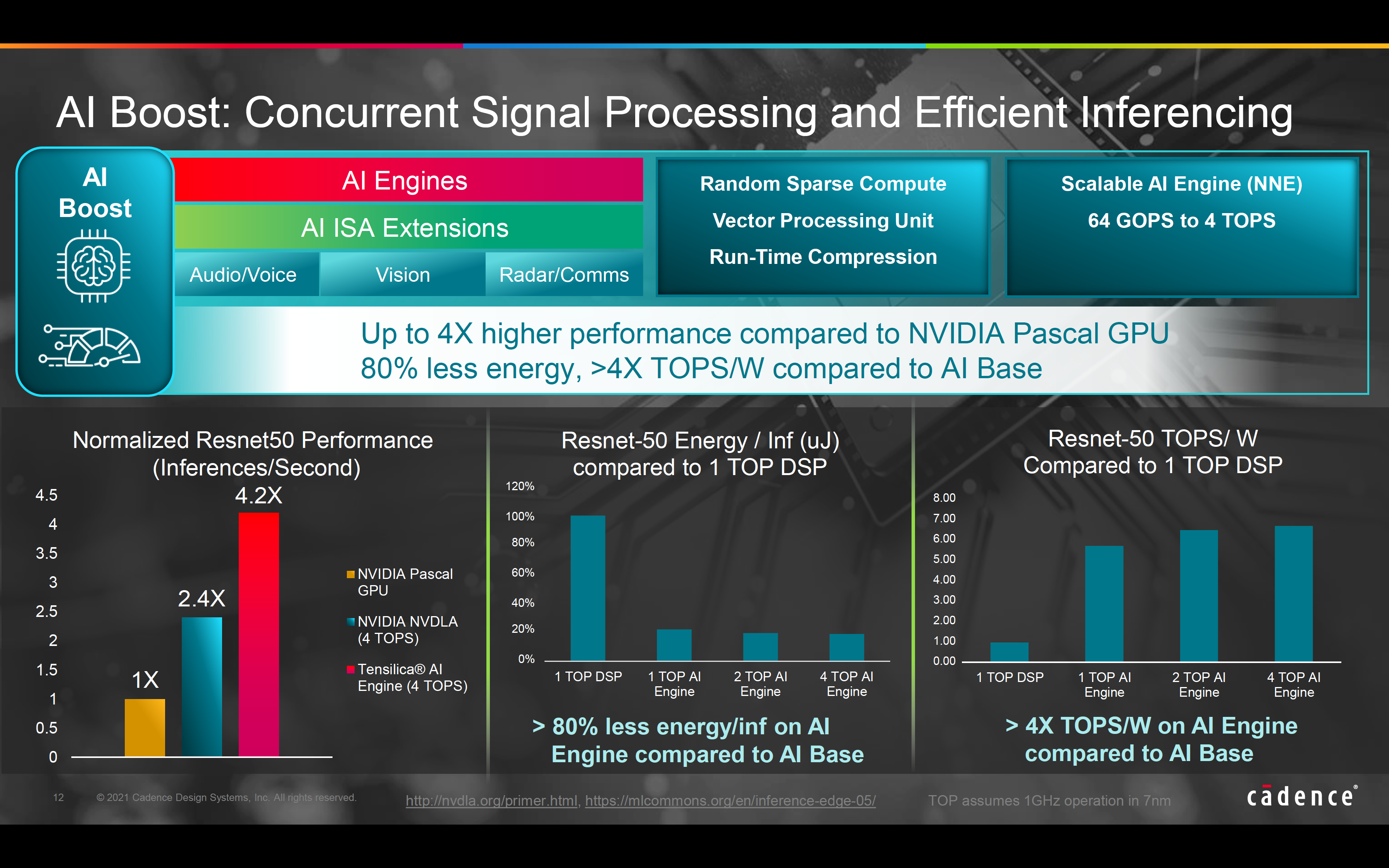

AI Boost: The mid-level product can be used with any of the AI Base applications when performance and power need to be optimized. It integrates the AI Base technology with a differentiated sparse compute Neural Network Engine (NNE). The initial version, NNE 110, can scale from 64 to 256 GOPS and provides concurrent signal processing and efficient inferencing. It consumes 80% less energy per inference and delivers more than 4X TOPS per Watt compared to industry-leading standalone Tensilica DSPs. Refer to the figure below for some benchmark results.

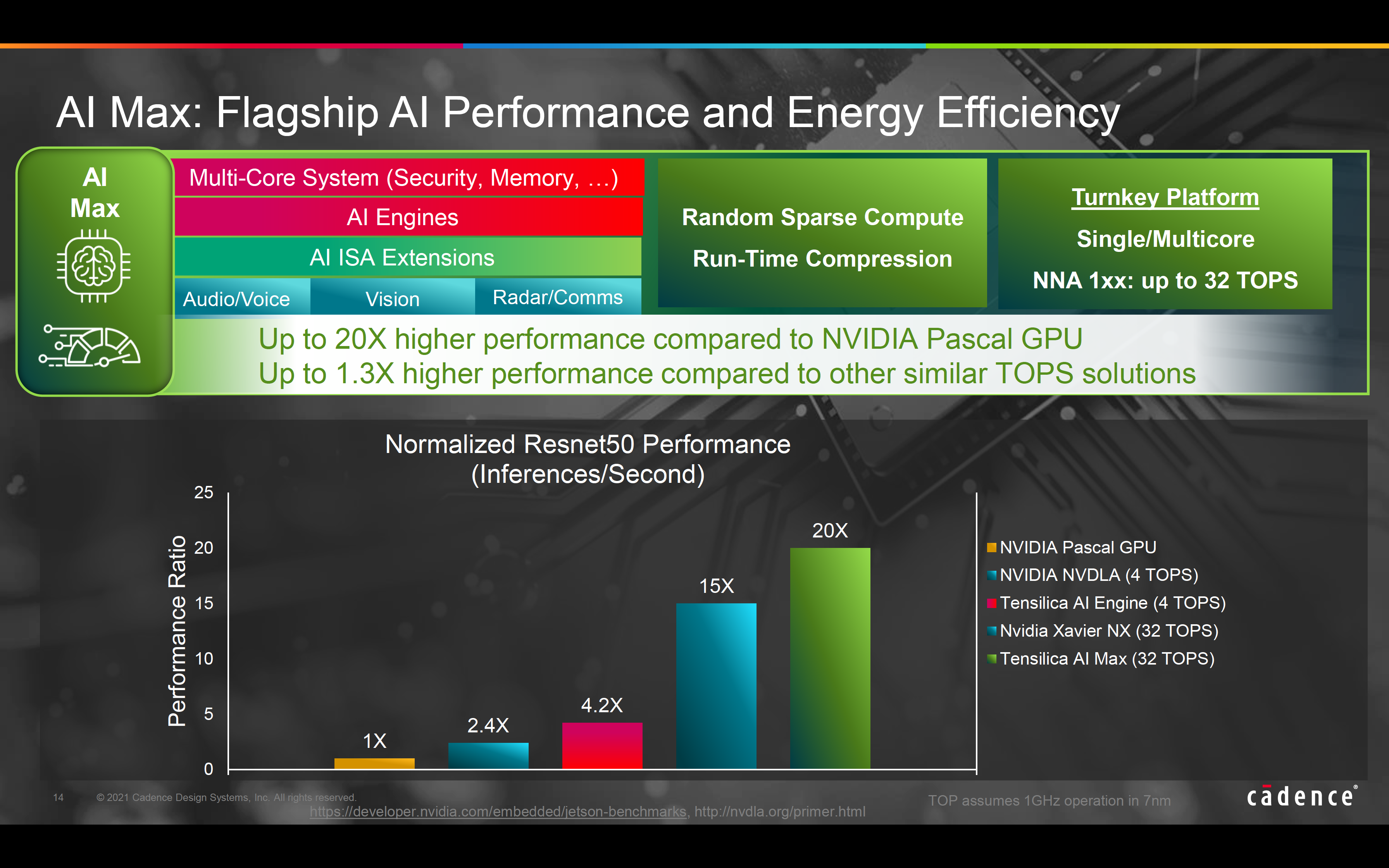
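As a quick sanity check on what "80% less energy per inference" means in practice, the following Python sketch converts a percentage reduction into an inferences-per-joule gain. The 80% figure is the one quoted above; the baseline energy of 10 mJ per inference is a hypothetical placeholder, not a measured value for any Tensilica DSP.

```python
def energy_per_inference(baseline_mj: float, reduction: float) -> float:
    """Energy per inference (mJ) after a fractional reduction, e.g. 0.80 for 80%."""
    if not 0.0 <= reduction < 1.0:
        raise ValueError("reduction must be in the range [0, 1)")
    return baseline_mj * (1.0 - reduction)


def inferences_per_joule(energy_mj: float) -> float:
    """Number of inferences that fit in one joule (1 J = 1000 mJ)."""
    return 1000.0 / energy_mj


BASELINE_MJ = 10.0  # hypothetical standalone-DSP energy per inference
REDUCTION = 0.80    # "80% less energy per inference" from the text

reduced_mj = energy_per_inference(BASELINE_MJ, REDUCTION)
gain = inferences_per_joule(reduced_mj) / inferences_per_joule(BASELINE_MJ)

print(f"Energy per inference: {BASELINE_MJ:.1f} mJ -> {reduced_mj:.1f} mJ")
print(f"Inferences per joule improve by about {gain:.1f}x")
```

The roughly 5x factor follows from 1 / (1 - 0.8) and does not depend on the baseline value chosen. Energy per inference and TOPS per Watt are different metrics, so this factor need not equal the TOPS-per-Watt improvement quoted for the same product.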

AI Max: The high-end product integrates the AI Base and AI Boost technology with a Neural Network Accelerator (NNA) family. The NNA family currently includes single-core (NNA 110), 2-core (NNA 120), 4-core (NNA 140) and 8-core (NNA 180) options. The multi-core NNA accelerators can scale up to 32 TOPS, while future NNA products are targeted to scale to hundreds of TOPS. Refer to the figure below for some benchmark results.

Summary

The Cadence Tensilica AI Platform enables industry-leading performance and energy efficiency for on-device AI applications. It is built upon the mature Tensilica Xtensa architecture, which is proven in volume production. The low-end, mid-level and high-end product families cover the full spectrum of power, performance and area (PPA) and cost points for various market segments. The solution currently scales from 8 GOPS to 32 TOPS, with additional products expected to deliver hundreds of TOPS to meet future requirements.

For more information on the Tensilica AI Platform and the new AI IP solutions, visit the Cadence product page. Further details are available in the full press release.

