Paul Cunningham, CVP and GM of the System Verification Group at Cadence, gave the afternoon keynote on Tuesday at DVCon and doubled down on his verification-throughput message. At the end of the day, what matters most to us in verification is the number of bugs found and fixed per dollar per day. You can’t really argue with that message. This is the ultimate metric for semiconductor product verification. Cycles per second, debug features, this engine versus that engine: these are ultimately mechanisms for delivering that outcome.

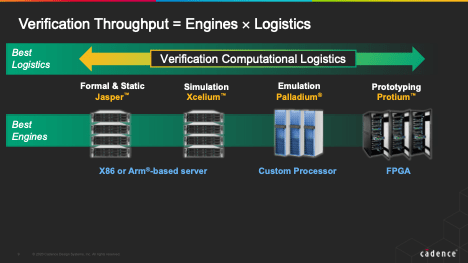

Throughput starts with best-in-class engines

That’s not to say that cycles per second and so on are unimportant. Paul has a very grounded engineering viewpoint. The horsepower underneath this throughput needs to be the best of the best in each instance. But on top of that, what Paul (and Anirudh) call logistics, meaning the most effective use of these resources to meet that ultimate goal, has become just as important. This view draws on an analogy with package delivery in our increasingly online world. Planes are used for long-distance transportation, long-haul trucks for plane-to-warehouse transportation, and vans for last-mile delivery and pickup. Each mode has strengths and weaknesses: speed versus setup time versus reach. Logistics is about combining these effectively to maximize throughput.

The same applies in verification. Simulation is the last-mile van, emulation is the long-haul truck, and FPGA prototyping is the plane: the fastest throughput with the longest setup time. Paul added that no analogy is perfect; in verification we also have formal, which plays an important role in this throughput story. Maybe the physical logistics people need to find a parallel to up their game!

Logistics and machine learning

But having fast planes and trucks and vans is not enough to maximize throughput. FedEx, UPS and others put huge investments into scheduling and routing traffic to meet their throughput goals. The same principle must be applied to verification, for which you need logistics management on top of the engines. Xcelium-ML provides an example which leverages machine learning (ML) to reduce regression runs while maintaining the same level of coverage. We all know that some number of regression cycles are low value because they’re simply proving over and over again that “even though this code didn’t change, we checked it anyway and it still works.” This is particularly important in randomized simulations, where many randomizations may be very low value. The trick is to know what tests you can drop without missing some subtle but fatal error. That’s where machine learning comes in.
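To make the goal of dropping tests without losing coverage concrete, here is a minimal sketch. It is not how Xcelium-ML works: it swaps in a plain greedy set-cover heuristic instead of machine learning, and it assumes you already know which coverage bins each test hits. The test names, bin names, and the `select_tests` function are all hypothetical.

```python
from typing import Dict, List, Set


def select_tests(coverage: Dict[str, Set[str]]) -> List[str]:
    """Greedy set cover: keep tests until every bin hit by the full suite is hit.

    `coverage` maps a (hypothetical) test name to the coverage bins it hits.
    """
    # Every bin the full regression reaches; the reduced set must reach them all.
    remaining: Set[str] = set().union(*coverage.values()) if coverage else set()
    selected: List[str] = []
    while remaining:
        # Pick the test that hits the most still-uncovered bins.
        # Iterating in sorted order makes tie-breaking deterministic.
        best = max(sorted(coverage), key=lambda t: len(coverage[t] & remaining))
        selected.append(best)
        remaining -= coverage[best]
    return selected


# Hypothetical regression: four tests, four coverage bins.
coverage = {
    "test_a": {"b1", "b2", "b3"},
    "test_b": {"b2", "b3"},
    "test_c": {"b4"},
    "test_d": {"b1", "b4"},
}
kept = select_tests(coverage)
print(kept)
```

In this toy case two of the four tests reach every bin the full suite reaches. The hard part in practice, and the reason ML is attractive, is that coverage of a randomized test is not known before it runs, so the tool has to predict which runs are likely to add value.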

Another area where ML can play a significant role is in formal proving and regressions. We often think of formal as hard because there are many different proof engines and methodologies, and you may need several of these to find your way to a proof. Which method to use on which problem has in the past been seen as a question requiring deep expertise in the domain. The Jasper team has captured a lot of that expertise through ML methods, to find the best engines and methodologies to quickly arrive at a proof, or to navigate through an optimum chain of alternatives.

Logistics between emulation and prototyping

Better logistics is not always about ML. Cadence has optimized Palladium emulation and Protium FPGA prototyping for better logistics between the engines through a unified compile front-end, unified transactor support and unified hardware bridge support. When you want to run high-performance emulations with maximum debuggability on Palladium, you do so. And when you want to switch to even higher performance for embedded software debug, you switch to Protium with a minimum of fuss. Run into a problem during a Protium debug? Switch back to Palladium for better debug insight. Logistics again. You can switch from truck to plane and back to truck as needs demand.

Optimizing engines for better throughput will always be a priority. Optimizing logistics for better throughput between regression runs and between engines is what will squeeze out the maximum in bugs found per dollar per day. Which is ultimately what we have to care about.

For more information, visit the Cadence verification portal.

