Physical verification is a necessary step in taping out an IC design, and the foundries define sign-off qualification steps for:

- Physical validation

- Circuit validation

- Reliability verification

This sounds reasonable until you go through the steps and discover that some of the same metadata is generated multiple times, even when the input files have not changed. That duplicates effort without any benefit.

Let’s talk about two examples. Changing interconnect layout in the back end doesn’t affect the front-end schematics, yet a verification flow would regenerate and re-verify schematic information during each run. Likewise, an engineering change order (ECO) confined to the Back End Of Line (BEOL) would still start extraction and validation jobs on the Front End Of Line (FEOL) layers, even though the FEOL layers haven’t changed.

One smart solution to this dilemma comes from Mentor in their Calibre PERC tool, which lets IC designers generate design metadata once and reuse it instead of regenerating it on every run. Four areas that can now use one-time metadata generation include:

- Voltage Aware Design Rule Checking (VA-DRC)

- Point to Point (P2P) Verification

- Current Density (CD) Verification

- Failure Analysis (FA) and design profiling
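The idea of generating metadata once can be illustrated with a small cache keyed by a hash of the inputs. This is only a sketch of the general technique, not how Calibre PERC is implemented: `MetadataCache`, `fingerprint`, the stage names and the toy `extract` function are all hypothetical names chosen for illustration.

```python
import hashlib
from typing import Callable, Dict, Tuple


def fingerprint(contents: Dict[str, bytes]) -> str:
    """Hash input names and contents so that any change yields a new key."""
    digest = hashlib.sha256()
    for name in sorted(contents):
        digest.update(name.encode())
        digest.update(b"\0")
        digest.update(contents[name])
        digest.update(b"\0")
    return digest.hexdigest()


class MetadataCache:
    """Hypothetical store that rebuilds metadata only when inputs change."""

    def __init__(self) -> None:
        self._store: Dict[Tuple[str, str], object] = {}
        self.generated = 0  # how many times metadata was actually rebuilt

    def get(
        self,
        stage: str,
        inputs: Dict[str, bytes],
        build: Callable[[Dict[str, bytes]], object],
    ) -> object:
        key = (stage, fingerprint(inputs))
        if key not in self._store:
            self._store[key] = build(inputs)
            self.generated += 1
        return self._store[key]


def extract(inputs: Dict[str, bytes]) -> Dict[str, int]:
    """Toy stand-in for an expensive extraction step."""
    return {"size": sum(len(data) for data in inputs.values())}


feol = {"feol_layers": b"poly diff contact"}
beol_v1 = {"beol_layers": b"m1 m2 m3"}
beol_v2 = {"beol_layers": b"m1 m2 m3 rerouted"}  # BEOL-only ECO

cache = MetadataCache()
cache.get("feol_extract", feol, extract)     # first run: generated
cache.get("beol_extract", beol_v1, extract)  # first run: generated
cache.get("feol_extract", feol, extract)     # rerun: FEOL unchanged, reused
cache.get("beol_extract", beol_v2, extract)  # rerun: BEOL changed, regenerated
print(cache.generated)  # 3
```

In this toy run the FEOL metadata is built once and reused after the BEOL-only change, so only three of the four requests trigger generation.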

Reliability Verification Flows

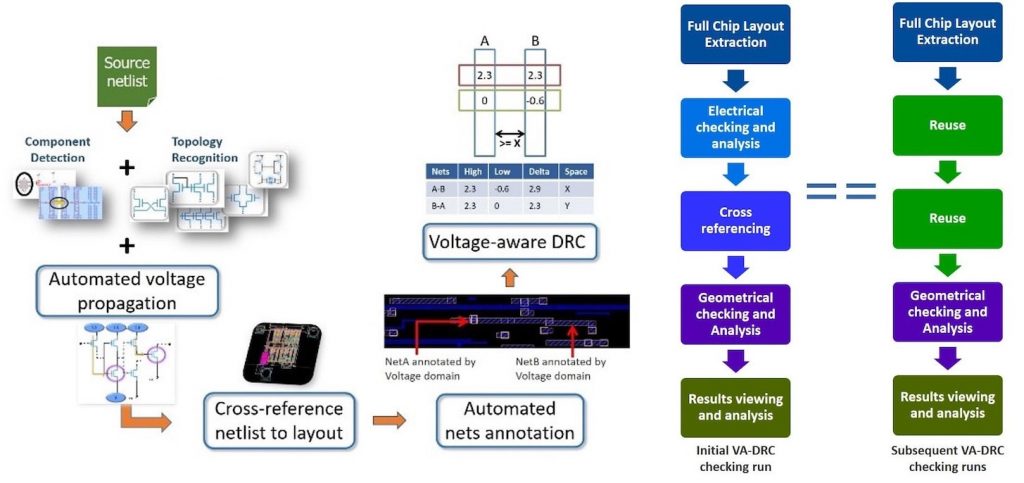

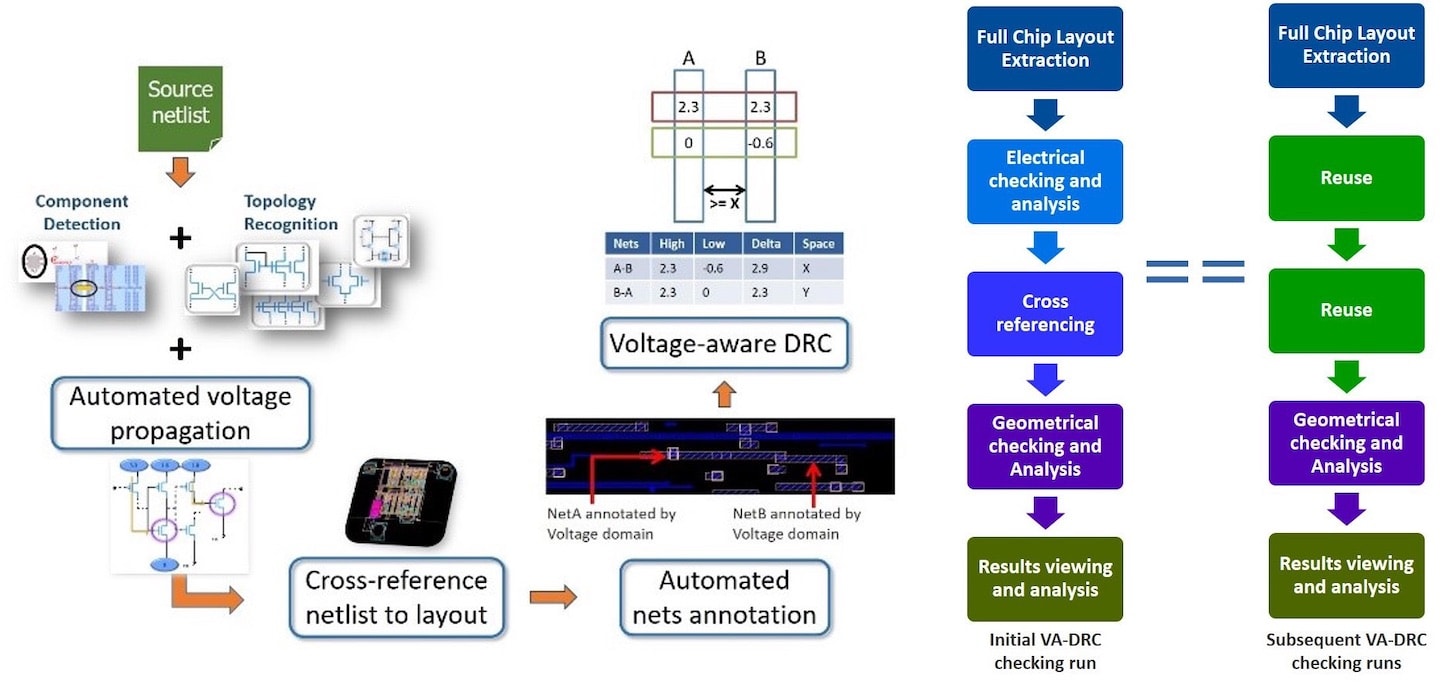

VA-DRC can have a rule for adjacent metal spacing that depends on the voltage levels. The Calibre PERC tool propagates voltages from the top-level layout ports, then checks the rules on the nets of interest. During subsequent design rechecking, the tool reuses the initial voltage propagation and netlist cross-referencing information. Here’s a diagram of how this rechecking happens:

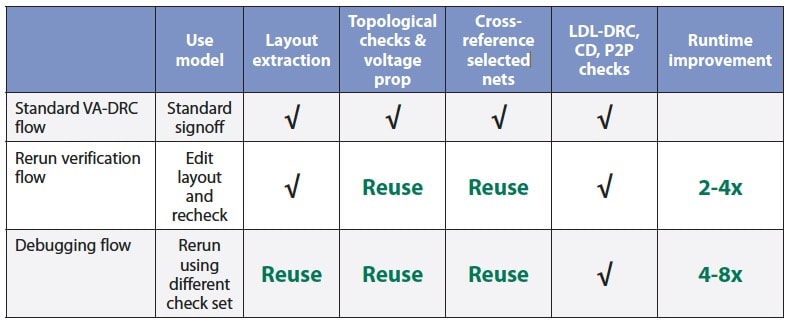
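To make the voltage-dependent spacing idea concrete, here is a minimal sketch. It assumes a made-up rule table and a greatly simplified propagation step in which each net simply takes the voltage of one top-level port; real propagation traces voltages through the netlist. The names `SPACING_RULES`, `propagate` and `check_spacing` are hypothetical and are not Calibre PERC commands.

```python
from typing import Dict, List, Tuple

# Hypothetical rule table: (largest voltage difference in V, minimum spacing in um).
SPACING_RULES: List[Tuple[float, float]] = [(1.8, 0.10), (3.3, 0.20), (5.0, 0.35)]


def required_spacing(delta_v: float) -> float:
    """Return the minimum spacing for a voltage difference between two nets."""
    for max_delta, spacing in SPACING_RULES:
        if delta_v <= max_delta:
            return spacing
    raise ValueError(f"no spacing rule covers a {delta_v} V difference")


def propagate(
    port_voltages: Dict[str, float], net_to_port: Dict[str, str]
) -> Dict[str, float]:
    """Simplified propagation: each net takes the voltage of its top-level port."""
    return {net: port_voltages[port] for net, port in net_to_port.items()}


def check_spacing(
    net_voltages: Dict[str, float], neighbors: List[Tuple[str, str, float]]
) -> List[Tuple[str, str]]:
    """Return the adjacent net pairs drawn closer than their voltage allows."""
    violations = []
    for net_a, net_b, spacing in neighbors:
        delta_v = abs(net_voltages[net_a] - net_voltages[net_b])
        if spacing < required_spacing(delta_v):
            violations.append((net_a, net_b))
    return violations


port_voltages = {"VDD_IO": 3.3, "VDD_CORE": 1.0, "VSS": 0.0}
net_to_port = {"io_a": "VDD_IO", "core_a": "VDD_CORE", "gnd": "VSS"}

# Propagate once, then reuse the result for every recheck.
net_voltages = propagate(port_voltages, net_to_port)

first_run = check_spacing(
    net_voltages, [("io_a", "gnd", 0.15), ("core_a", "gnd", 0.15)]
)
# After the layout fix only the spacing changed, so the voltages are reused.
rerun = check_spacing(
    net_voltages, [("io_a", "gnd", 0.22), ("core_a", "gnd", 0.15)]
)
print(first_run)  # [('io_a', 'gnd')]
print(rerun)      # []
```

The point of the sketch is that `net_voltages` is computed once; the recheck after the spacing fix only repeats the geometric comparison.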

In theory this sounds useful, but what about in practice? Here’s a table showing how reruns of a standard VA-DRC flow can be sped up by 2X to 8X, depending on how much metadata is reused:

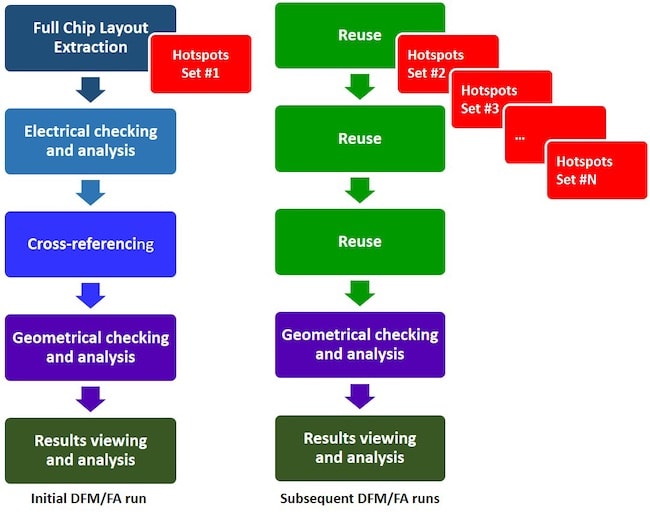

DFM and Failure Analysis Flows

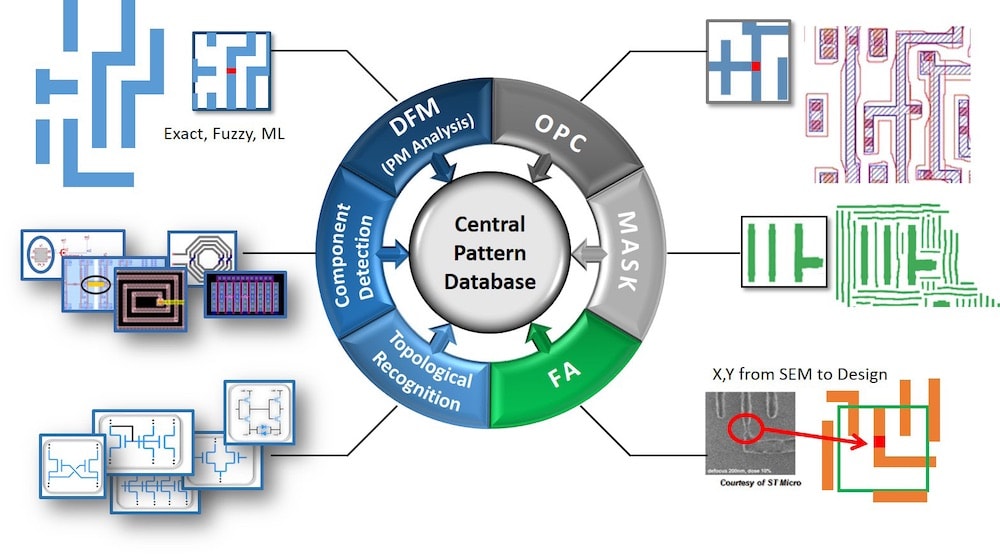

In these flows we have metadata generated from many design profile sources:

- Scanning Electron Microscope (SEM) images

- Lithographic and Optical Proximity Correction (OPC) hotspots

- Test hotspots

- DRM yield detractors

By saving metadata elements for reuse, turnaround time (TAT) is reduced, which also aids debugging and both pre-silicon and post-silicon analysis.

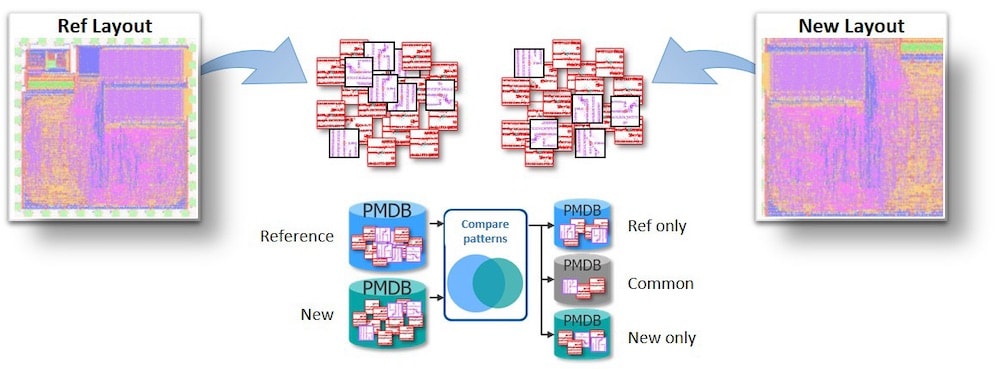

Another way to reduce TAT in a DFM analysis flow is to use a centralized reference pattern library, so that you can identify which patterns in any new layout are new, existing or common.

Libraries are built for reference, common and new patterns. Hotspots found in the new layout can then be overlaid on the reference for quicker debugging and Root Cause Deconvolution (RCD).
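The library comparison described here can be thought of as set operations on pattern signatures. The sketch below assumes each pattern has already been reduced to a hashable signature string; the signatures, the root-cause labels and the function names `classify_patterns` and `overlay_hotspots` are all hypothetical.

```python
from typing import Dict, Set, Tuple


def classify_patterns(reference: Set[str], layout: Set[str]) -> Dict[str, Set[str]]:
    """Split patterns into those shared with the reference library and those not."""
    return {
        "common": reference & layout,
        "new": layout - reference,
        "reference_only": reference - layout,
    }


def overlay_hotspots(
    layout_hotspots: Set[str], reference_causes: Dict[str, str]
) -> Tuple[Dict[str, str], Set[str]]:
    """Match layout hotspots against root causes already recorded in the reference."""
    known = {
        pattern: reference_causes[pattern]
        for pattern in layout_hotspots
        if pattern in reference_causes
    }
    unknown = layout_hotspots - set(reference_causes)
    return known, unknown


reference = {"via_array_2x2", "dense_line_end", "t_junction"}
new_layout = {"dense_line_end", "t_junction", "jog_pair"}
reference_causes = {"dense_line_end": "litho pinching"}  # made-up label

groups = classify_patterns(reference, new_layout)
known, unknown = overlay_hotspots({"dense_line_end", "jog_pair"}, reference_causes)

print(sorted(groups["common"]))  # ['dense_line_end', 't_junction']
print(sorted(groups["new"]))     # ['jog_pair']
print(known)                     # {'dense_line_end': 'litho pinching'}
print(sorted(unknown))           # ['jog_pair']
```

Hotspots that land on a pattern already in the reference come with a recorded cause, so only the genuinely new patterns need fresh analysis.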

Summary

I just love the familiar adage, “Work smarter, not harder.” That’s exactly what the engineering team at Mentor has accomplished with Calibre PERC: reusing metadata during sign-off validation steps shortens run time by not generating duplicate metadata. Other benefits include reduced CPU load, all while keeping the same accuracy of results. Engineers will also see improvements in debugging, DFM optimization and FA by reusing metadata.

Read the complete 6-page white paper, Increase Productivity by Reusing Metadata for Signoff & ECOs.
