If you follow my blogs, you know that Arteris IP is very active in AI and safety, leveraging their central role in network-on-chip (NoC) architectures. Kurt Shuler has put together a front-to-back white paper that walks you through the essentials of AI, particularly machine learning (ML), and its applications, for example in cars.

He also highlights an interesting point about this rapidly evolving technology. As we build automation from the edge to the fog to the cloud, functionality, including AI, remains quite fluid between levels. Kurt points out that this is somewhat mirrored in SoC design. In both cases, architecture is constrained by the need to optimize performance and minimize power across the system through intelligent bandwidth allocation and data locality. And for safety-critical applications, the safety of intelligent features must be designed and verified not only within and between the SoCs in a car but also beyond it, for example in V2X communication between cars and other traffic infrastructure.

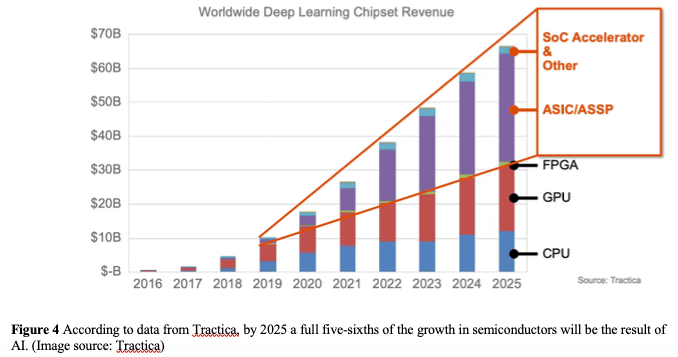

What’s driving the boom in AI-centric design? If you’re not in the field, it might seem that AI is just another shiny toy for marketing, destined for the garbage can when some inevitable fatal flaw emerges. You couldn’t be more wrong (pace Sheldon Cooper). Tractica estimates that more than 80% of the growth in semiconductors between now and 2025 will be driven by AI applications, whether based on standard platforms (CPU, GPU or FPGA), custom-built ASICs/ASSPs or highly specialized accelerators. (The overlay in the image above is Kurt’s addition.)

None of this demand depends on major inflection points in the way we live. It’s all about incremental improvements to safety, productivity, security and convenience, all the things technology has been improving for a long time. Whether or not ADAS in cars impresses insurance providers (per my earlier blog), it definitely impresses this owner and apparently many others. I’ve said before that I’ll never switch back to a car with lesser ADAS features; they more than pay for themselves. Security for businesses and industrial plants is already moving from the need to constantly monitor security camera feeds to checking only when unusual movement and/or sounds (a dog barking, glass breaking) are detected. Incidentally, this approach is also much better for home security.

Oncologists may be able to detect potentially cancerous lung tissue more reliably from CT scans (following further analysis). Smart storage solution providers now need to build intelligence into their products to better manage housekeeping and other scheduling, maximizing throughput and minimizing failures. All of this is bread-and-butter stuff: no consumer, industrial or business revolutions required, just more automation added to what we already have.

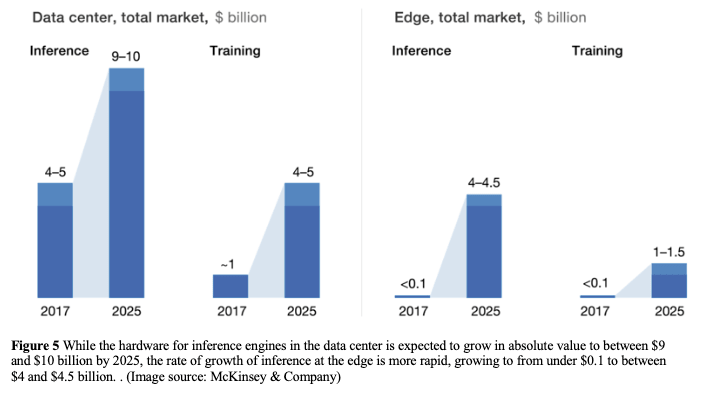

McKinsey went a step further than Tractica, breaking down AI hardware growth into training and inference in the datacenter and training and inference on the edge. The biggest contributors are in the datacenter. (I’m curious to know how much of that is for cat recognition and friend-tagging in Facebook photos.) The highest growth, though, is on the edge, which goes from essentially zero to $5-6B in seven years. The two surveys don’t arrive at the same numbers for 2025, but that’s not very surprising. What is consistent is the high growth.

Why do Kurt and Arteris IP care about this? Because the interconnect within an SoC is proving to be the nexus of AI performance, power, cost and safety for these fast-growing applications. On performance, power and cost: these are big SoCs, the size of a smartphone application processor (AP) or even bigger, which means their on-chip networks have to be NoCs, and Arteris IP is pretty much the only proven commercial supplier in that area. Also, AI accelerators are not intrinsically coherent with the SoC’s compute cluster, but they have to become coherent to share imaging, voice and other data with it. Arteris IP solutions help here through proxy caches and last-level caches. And in more advanced accelerators, these NoC solutions often play an even deeper role: in islands of cache coherence, in mesh, ring or torus architectures, and in connecting to dedicated external high-bandwidth memory.

Finally, on safety, Arteris IP is well plugged into ISO 26262 (Kurt has been on the working group for many years). They work very closely with integrators and with a certification group to ensure their products meet both the letter and the spirit of the standard (the latter increasingly important under ISO 26262-2). And they build safety features such as ECC and logic duplication into the IP they supply, addressing safety requirements within the domain of that logic.

You can learn more by reading this white paper.
