There is a lot of discussion about removing barriers to innovation these days. Semiconductor systems are at the heart of unlocking many forms of technical innovation, if only we could address issues such as the slowing of Moore’s Law, reduction of power consumption, enhancement of security and reliability and so on. But there is another rather substantial barrier that is the topic of this post. It is the dramatic difference between processor and memory performance. While systems of CPUs and GPUs are delivering incredible levels of performance, the memories that manage critical data for these systems are lagging substantially. This is the memory wall problem, and I would like to examine how Arteris is unleashing innovation by breaking down the memory wall.

What is the Memory Wall?

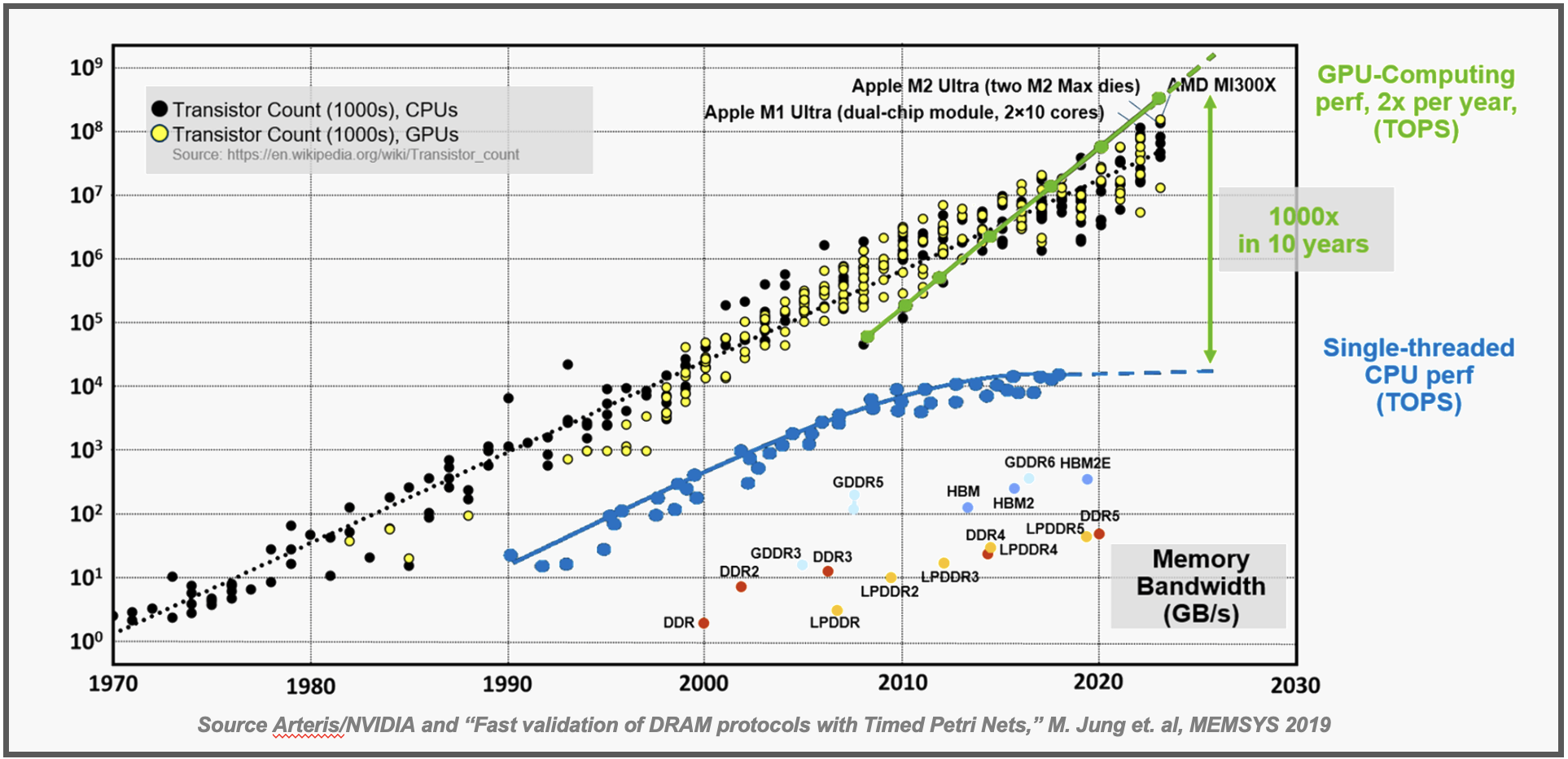

The graphic at the top of this post illustrates the memory wall problem. You can see the steady increase in performance of single-threaded CPUs depicted by the blue line. The green line shows the exponential increase in performance being added by clusters of GPUs. The performance increase of GPUs vs. CPUs is estimated to be 100X in 10 years – a mind-boggling statistic. As a side note, you can see that the transistor counts for both CPUs and GPUs cluster around a similar straight line. GPU performance is delivered by doing fewer tasks much faster as opposed to throwing more transistors at the problem.
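To put the 100X figure in perspective, it helps to convert it into a yearly rate. The short sketch below does only that arithmetic; the function name is my own, and the only input taken from the chart is the 100X-in-10-years estimate.

```python
def annual_growth_factor(total_gain, years):
    """Constant yearly multiplier that compounds to total_gain over the given years."""
    return total_gain ** (1.0 / years)


# 100X over 10 years is the estimate quoted above; everything else follows from it.
gap_per_year = annual_growth_factor(100.0, 10)
print(f"GPU-vs-CPU gap grows about {gap_per_year:.2f}x per year")
```

In other words, a 100X gain over a decade corresponds to the gap widening by a bit under 60% every year, assuming steady compounding.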

Many systems today are a combination of a number of CPUs doing broad management tasks with large numbers of GPUs doing specific tasks, often related to AI. The combination delivers the amazing throughput we see in many products. There is a dark side to this harmonious architecture that is depicted at the bottom of the chart. Here, we see the performance data for the various memory technologies that deliver all the information for these systems to process. As you can see, delivered memory performance is substantially lower than that of the CPUs and GPUs that rely on these memory systems.

This is the memory wall problem. Let’s explore the unique way Arteris is solving this problem.

The Arteris Approach – A Highly Configurable Cache Coherent NoC

A well-accepted approach to dealing with slower memory access speed is to pre-fetch the required data and store it in a local cache. Accessing data this way is far faster – a few CPU cycles vs. over 100 CPU cycles. It’s a great approach, but it can be daunting to implement all the software and hardware required to access memory from the cache and ensure the right data is in the right place at the right time, and consistent across all caches. Systems that effectively deliver this solution are called cache coherent, and achieving this goal is not easy. A software-only coherency implementation, for example, can consume as much as 25% of all CPU cycles in the system, and is very hard to debug. SoC designers often choose cache coherent NoC hardware solutions instead, which are transparent to the software running on the system.
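The cycle counts above can be turned into a quick back-of-the-envelope estimate of how much a cache helps. The sketch below computes the average access cost for a given cache hit rate. The 4-cycle hit and 100-cycle miss costs are illustrative assumptions standing in for "a few" and "over 100" cycles, and the function name is hypothetical.

```python
def average_access_cycles(hit_rate, hit_cycles=4, miss_cycles=100):
    """Average cost of one memory access, in CPU cycles.

    hit_cycles and miss_cycles are assumed, illustrative values:
    a cache hit costs a few cycles, a trip to main memory costs ~100.
    """
    if not 0.0 <= hit_rate <= 1.0:
        raise ValueError("hit_rate must be between 0 and 1")
    # Weighted average of the fast path (hit) and the slow path (miss).
    return hit_rate * hit_cycles + (1.0 - hit_rate) * miss_cycles


for rate in (0.0, 0.5, 0.9, 0.99):
    cycles = average_access_cycles(rate)
    print(f"hit rate {rate:.0%}: {cycles:.1f} cycles per access on average")
```

Under these assumptions, the average cost only approaches the cache's speed when nearly every access hits, which is why getting the right data into the right cache matters so much.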

Recently, I had an opportunity to speak with Andy Nightingale, vice president of product management and marketing at Arteris. Andy did a great job explaining the challenges of implementing cache coherent systems and the unique solution Arteris has developed to cope with these challenges.

It turns out that developing a reliable and power-efficient cache coherent architecture touches many hardware and software aspects of system design. Getting it all to work reliably and efficiently while hitting the required PPA goals can be quite difficult. Andy estimated that all this work could require 50 engineering years per project. That’s a lot of time and cost.

The good news is that Arteris has substantial skills in this area, and the company has built a complete cache coherent architecture into one of its network-on-chip (NoC) products. Andy described that product, Ncore, a complete cache coherent NoC. Management of memory access fits well in the overall network-on-chip architecture that Arteris is known for. Ncore manages the cache coherent part of the SoC transparently to software – freeing the system designer to focus on the higher-level challenges associated with getting the CPU and all those GPUs to perform the task at hand.
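To make "cache coherent" a little more concrete, here is a toy sketch of a textbook write-invalidate scheme with MSI-style states (Modified, Shared, Invalid) for a single memory address. It is a generic illustration only and is not a description of how Ncore works internally; every class and method name is hypothetical.

```python
from enum import Enum


class State(Enum):
    INVALID = "I"   # this cache holds no usable copy
    SHARED = "S"    # clean copy, other caches may hold one too
    MODIFIED = "M"  # only up-to-date copy, memory is stale


class ToyCoherentSystem:
    """Toy model: several caches sharing one memory address."""

    def __init__(self, num_caches, initial_value=0):
        self.memory = initial_value
        self.states = [State.INVALID] * num_caches
        self.values = [None] * num_caches

    def _write_back_owner(self):
        # If a cache holds a modified copy, flush it to memory and downgrade it.
        for i, state in enumerate(self.states):
            if state is State.MODIFIED:
                self.memory = self.values[i]
                self.states[i] = State.SHARED

    def read(self, cache_id):
        if self.states[cache_id] is State.INVALID:
            # Miss: make sure memory is current, then load a shared copy.
            self._write_back_owner()
            self.values[cache_id] = self.memory
            self.states[cache_id] = State.SHARED
        return self.values[cache_id]

    def write(self, cache_id, value):
        # Invalidate every other copy so no cache can return stale data.
        for i in range(len(self.states)):
            if i != cache_id:
                self.states[i] = State.INVALID
                self.values[i] = None
        self.values[cache_id] = value
        self.states[cache_id] = State.MODIFIED


system = ToyCoherentSystem(num_caches=2)
system.read(0)
system.read(1)           # both caches now hold a shared copy
system.write(0, 42)      # cache 1's copy is invalidated
print(system.states[1])  # State.INVALID
print(system.read(1))    # 42, fetched after cache 0's copy is written back
```

A hardware coherent interconnect performs this kind of bookkeeping on the software's behalf, which is the "transparent to software" property described above.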

Andy ran down a substantial list of Ncore capabilities:

- Productive: Connect multiple processing elements, including Arm and RISC-V, for maximum engineering productivity and time-to-market acceleration, saving 50+ person-years per project.

- Configurable: Scalable from heterogeneous to mesh topologies, supporting CHI-E, CHI-B, and ACE coherent, as well as ACE-Lite IO coherent interfaces. Ncore also enables AXI non-coherent agents to act as IO coherent agents.

- Ecosystem Integration: Pre-validated with the latest Arm v9 automotive cores, delivering on a previously announced partnership with Arm.

- Safe: Supports ASIL B to ASIL D requirements for automotive safety applications and is ISO 26262 certified.

- Efficient: Smaller die area, lower power, and higher performance by design, compared with other commercial alternatives.

- Markets: Suitable for Automotive, Industrial, Enterprise Computing, Consumer and IoT SoC solutions.

Andy detailed some of the benefits achieved on a consumer SoC design. These included streamlined chip floorplanning thanks to the highly distributed architecture, promoting efficient resource utilization. The Arteris high-performance interconnect with a high-bandwidth, low-latency fabric ensured seamless data transfer and boosted overall system performance.

Digging a bit deeper, Ncore also provides real-time visibility into the interconnect fabric with transaction-level tracing, performance monitoring, and error detection and correction. All these features facilitate easy debugging and superior product quality. The comprehensive ecosystem support and compatibility with industry-standard interfaces like AMBA also facilitate easier integration with third-party components and EDA tools.

This was a very useful discussion. It appears that Arteris has dramatically reduced the overhead for implementation of cache coherent architectures.

To Learn More

I mentioned some specifics about the work Arteris is doing with Arm. Don’t think that’s the only partner the company is working with. Arteris has been called the Switzerland of system IP. The company also has significant work with the RISC-V community as detailed in the SemiWiki post here.

Arteris recently announced an expansion of its Ncore product. You can read how Arteris expands Ncore cache coherent interconnect IP to accelerate leading-edge electronics designs here. In the release, Leonid Smolyansky, Ph.D., SVP SoC Architecture, Security & Safety at Mobileye, offered these comments:

“We have worked with Arteris network-on-chip technology since 2010, using it in our advanced autonomous driving and driver-assistance technologies. We are excited that Arteris has brought its significant engineering prowess to help solve the problems of fault tolerance and reliable SoC design.”

There is also a short (a little over one-minute) video that explains the challenges that Ncore addresses. I found the video quite informative.

If you need improved performance for your next design, you should definitely take a close look at the cache coherent solutions offered by Arteris. You can learn more about Ncore here. And that’s how Arteris is unleashing innovation by breaking down the memory wall.
