Wi-Fi Bulks Up

by Bernard Murphy on 04-23-2020 at 6:00 am

Categories: Ceva, IP

Wireless discussion these days seems to be dominated by 5G, but that’s not the only standard that’s attracting attention. The FCC just circulated draft rules to dramatically expand bandwidth available to Wi-Fi in the new Wi-Fi 6E standard.

Is this a tragic plea for attention from a once-important standard, now eclipsed by its … Read More

Last-level cache seemed to me like one of those “yeah, I get it” but sort of obscure technical corners that only uber-geek cache specialists would care about. Then I stumbled on an AnandTech review of the iPhone 11 Pro and Max and started to understand that this contributes to more than just engineering satisfaction.

Caching

A brief… Read More

It might seem paradoxical that simulation (or equivalent dynamic methods) might be one of the best ways to run security checks. Checking security is a problem where you need to find rare corners that a hacker might exploit. In dynamic verification, no matter how much we test we know we’re not going to cover all corners, so how can it… Read More

This blog is the next in a series in which Paul Cunningham (GM of the Verification Group at Cadence), Jim Hogan and I pick a paper on a novel idea we appreciated and suggest opportunities to further build on that idea.

We’re getting a lot of hits on these blogs but would really like to get feedback also.

The Innovation

Our next pick… Read More

As someone who was heavily involved with rules for IP reuse for many years, I have a major sense of déjà vu in writing again on the topic. But we (in SpyGlass) were primarily invested in atomic-level checks in RTL and gate-level designs. There’s a higher level of best practices in process we didn’t attempt to cover. ClioSoft just released… Read More

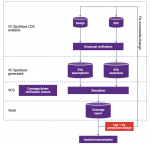

Synopsys just released a white paper, a backgrounder on CDC. You’ve read enough of what I’ve written on this topic that I don’t need to re-tread that path. However, this is tech so there’s always something new to talk about. This time I’ll cover a Synopsys survey update on numbers of clock domains in designs, also an update on ways to… Read More

The irony around this topic in the middle of the coronavirus scare – when more of us are working remotely through the cloud – is not lost on me. Nevertheless, ingrained beliefs move slowly so it’s still worth shedding further light. There is a tribal wisdom among chip designers that what we do demands much higher security than any other… Read More

Mentor just released a white paper on this topic which I confess has taxed my abilities to blog the topic. It’s not that the white paper is not worthy – I’m sure it is. I’m less sure that I’m worthy to blog on such a detailed technical paper. But I’m always up for a challenge, so let’s see what I can make of this, extracting a quick and not very… Read More

It’s a matter of pride to me and many others from Atrenta days that the brand we built in SpyGlass has been so enduring. It seems that pretty much anyone who thinks of static RTL checking thinks SpyGlass. Even after Synopsys acquired Atrenta, they kept the name as-is, I’m sure because the brand recognition was so valuable.

Even good… Read More

I was scheduled to attend the Mentor tutorial at DVCon this year. Then coronavirus hit, two big sponsors dropped out and the schedule was shortened to three days. Mentor’s tutorial had to be moved to Wednesday and, as luck would have it, I already had commitments on that day. Mentor kindly sent me the slides and audio from the meeting… Read More
