For decades, high-performance CPU design has been dominated by traditional out-of-order (OOO) execution architectures. Giants like Intel, Arm, and AMD have refined this approach into an industry standard—balancing performance and complexity through increasingly sophisticated schedulers, speculation, and runtime logic. Yet, as workloads diversify across datacenter, mobile, and automotive domains, the weaknesses of conventional OOO architectures—power inefficiency, complexity, and inflexibility—are becoming more pronounced.

Now, a new paradigm is emerging: time-based OOO microarchitecture. Anchored in both research and new patents, this approach offers a disruptive alternative that may give RISC-V its first defensible high-performance edge. In the RISC-V era, where openness, extensibility, and ecosystem leverage are key differentiators, time-based OOO provides a path to leapfrog entrenched incumbents.

At Hot Chips 2025, Ty Garibay and Shashank Nemawarkar from Condor Computing gave a talk on this topic. They presented details of their processor architecture (code name: Cuzco), a high-performance, RVA23-compatible RISC-V CPU IP featuring time-based OOO execution and a slice-based microarchitecture. Ty is the company’s President and Founder, and Shashank is a Senior Fellow and the Director of Architecture.

The Key Idea: Time as a First-Class Resource

Traditional OOO processors rely on per-cycle schedulers that dynamically resolve dependencies and issue instructions. While effective, this method requires large, power-hungry hardware structures—reservation stations, wakeup/select logic, and dynamic scoreboard tracking—that scale poorly with wider, superscalar cores.

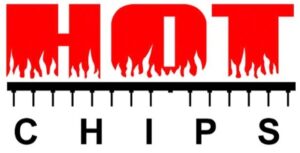

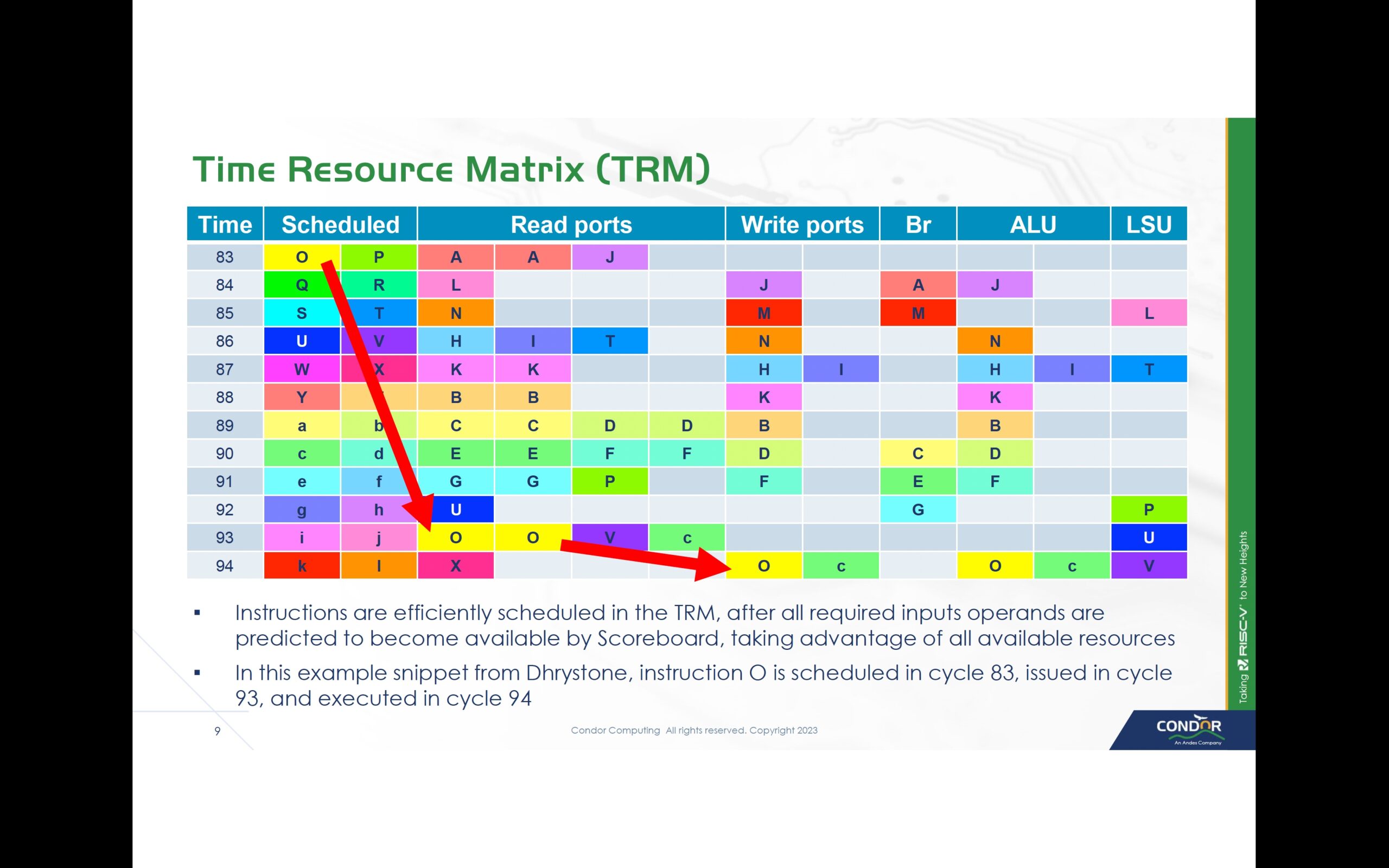

Time-based OOO execution flips this model. A Register Scoreboard tracks the future “write time” of instructions, so that downstream instructions automatically know when operands will be ready. A Time Resource Matrix (TRM) records busy intervals for execution resources such as ALUs, buses, and load/store queues, which makes it possible to predict resource availability several cycles ahead. Together, these enable predictive scheduling, where instructions are issued with knowledge of the exact future cycles at which operands and resources will be available.
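To make the idea concrete, here is a minimal software sketch of how a register scoreboard and a time resource matrix could jointly pick an issue cycle. It is an illustration only, not Condor's implementation: the names `Instr`, `TimeScheduler`, and `schedule`, the one-operation-per-unit-per-cycle model, and the latencies are all assumptions made for the example.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Instr:
    name: str
    dest: Optional[str]      # destination register, or None
    srcs: tuple[str, ...]    # source registers
    unit: str                # execution resource, e.g. "alu" or "lsu"
    latency: int             # predicted cycles from issue to result


@dataclass
class TimeScheduler:
    # Register scoreboard: register -> cycle at which its value will be written.
    write_time: dict[str, int] = field(default_factory=dict)
    # Time resource matrix (simplified): resource -> set of reserved cycles.
    busy: dict[str, set[int]] = field(default_factory=dict)

    def schedule(self, instr: Instr, now: int) -> int:
        """Choose an issue cycle for instr and record its future effects."""
        # Operands are ready once every producer has written its result.
        ready = max([now] + [self.write_time.get(r, 0) for r in instr.srcs])
        # Take the first cycle at or after `ready` in which the unit is free.
        slots = self.busy.setdefault(instr.unit, set())
        issue = ready
        while issue in slots:
            issue += 1
        slots.add(issue)  # reserve the unit for that cycle
        if instr.dest is not None:
            self.write_time[instr.dest] = issue + instr.latency
        return issue


sched = TimeScheduler()
program = [
    Instr("load", "x1", ("x2",), "lsu", 4),
    Instr("add", "x3", ("x1", "x4"), "alu", 1),  # waits for the load
    Instr("sub", "x5", ("x6", "x7"), "alu", 1),  # independent, issues early
    Instr("xor", "x9", ("x6", "x7"), "alu", 1),  # independent, ALU taken at 0
]
issue_times = {i.name: sched.schedule(i, now=0) for i in program}
print(issue_times)  # {'load': 0, 'add': 4, 'sub': 0, 'xor': 1}
```

In this toy run, `sub` and `xor` issue ahead of the older `add`, which is the out-of-order behavior, yet every issue cycle is decided once, up front, rather than rediscovered each cycle by wakeup/select logic.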

In practice, this transforms instruction scheduling into something akin to a compiler’s static analysis, but executed in hardware with runtime adjustments for mispredicts, cache misses, and dynamic latencies. This results in lower gate count, reduced dynamic power, and simpler logic—while still delivering high IPC performance.
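The talk summary does not describe how Cuzco performs these runtime adjustments, so the following is only a toy model of the general problem: when an instruction takes longer than predicted (for example, a load that misses the cache), its dependents must slip to a later cycle while independent instructions keep their planned slots. The function `retime` and its data layout are hypothetical, and resource conflicts are deliberately ignored.

```python
def retime(program, planned_issue, actual_latency):
    """Return adjusted issue cycles given observed latencies.

    program: list of (name, dest, srcs) tuples in program order.
    planned_issue: name -> issue cycle chosen at schedule time.
    actual_latency: name -> observed cycles from issue to result.
    """
    write_time = {}  # register -> cycle at which its value actually arrives
    new_issue = {}
    for name, dest, srcs in program:
        operands_ready = max([write_time.get(r, 0) for r in srcs], default=0)
        # An instruction never issues earlier than planned; it slips only
        # when an operand arrives later than predicted.
        new_issue[name] = max(planned_issue[name], operands_ready)
        if dest is not None:
            write_time[dest] = new_issue[name] + actual_latency[name]
    return new_issue


program = [
    ("load", "x1", ("x2",)),
    ("add", "x3", ("x1", "x4")),
    ("sub", "x5", ("x6", "x7")),
]
planned = {"load": 0, "add": 4, "sub": 0}    # load predicted to take 4 cycles
observed = {"load": 20, "add": 1, "sub": 1}  # load missed the cache
adjusted = retime(program, planned, observed)
print(adjusted)  # {'load': 0, 'add': 20, 'sub': 0}
```

Only the dependent `add` moves; the independent `sub` is unaffected, which is why a mostly static schedule can still tolerate dynamic latencies.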

Why Now? Closing the Tooling and Ecosystem Gap

The concept of time-based scheduling is not new in academic research, but several barriers prevented its adoption in industry.

Historically, CPU design relied on proprietary, closed toolchains and performance modeling frameworks. Implementing a radically different scheduling model required deep compiler and simulator co-design, an almost impossible ask without community-driven support.

The rise of RISC-V changes the equation. Open-source modeling frameworks and simulators like Sparta, Olympia, Spike, and Dromajo provide extensible platforms for exploring new scheduling strategies. Condor Computing has contributed new tools, such as Fusion Spec Language (FSL), and has actively contributed to Dromajo and Spike enhancements, to enable precise modeling and ecosystem-wide adoption.

Where traditional OOO once benefited from standardization and inertia, high-performance RISC-V OOO now benefits from open-source leverage and community contributions. With these tools, time-based OOO can be compared against traditional OOO techniques, and refined, in a plug-and-play fashion.

Cuzco’s Slice-Based Design: Flexible, Efficient and Scalable

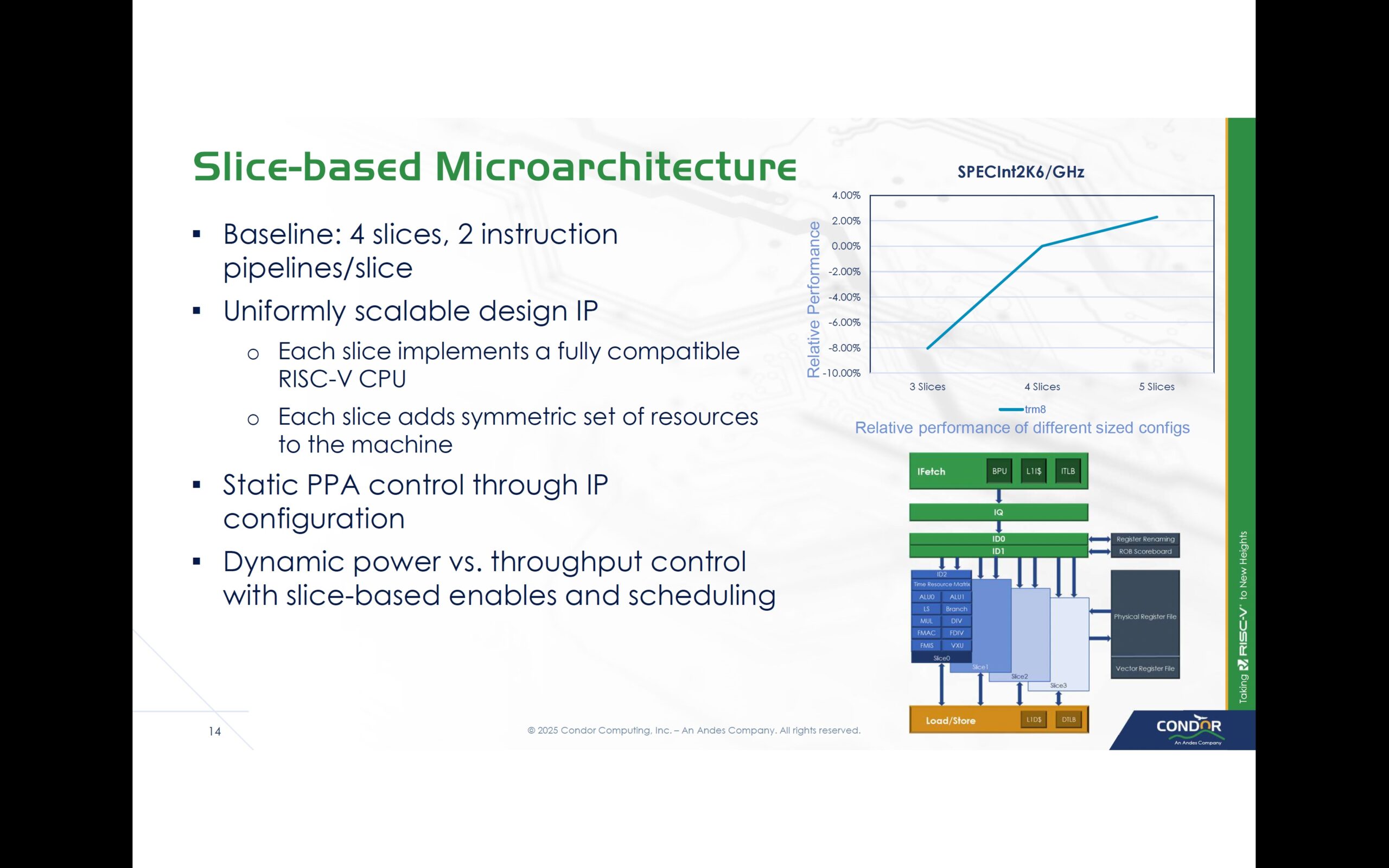

Slice-based microarchitecture delivers scalability, efficiency, and flexibility by breaking a CPU into modular, repeatable “slices,” each with its own pipelines and resources. This approach avoids the critical-path bottlenecks of monolithic superscalar designs, enabling predictable performance scaling from low-power IoT to datacenter workloads. Customers achieve static configurability by choosing two, three or four slices depending on their area/power/performance requirements. They can also achieve dynamic configurability by power-gating slices at runtime, allowing the processor to scale down for lower-power workloads. The result is higher performance-per-watt, faster time-to-market, and a more flexible IP offering that customers can tailor to diverse use cases.
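The two kinds of configurability can be pictured with a small model: a static slice count fixed when the IP is configured, and a runtime count of slices left powered on. This is purely illustrative. The class `SlicedCore`, its fields, and the rule that at least one slice stays active are assumptions for the sketch, not details from the talk.

```python
from dataclasses import dataclass


@dataclass
class SlicedCore:
    built_slices: int       # static choice made at design time: 2, 3, or 4
    active_slices: int = 0  # slices currently powered; 0 means "all built"

    def __post_init__(self) -> None:
        if self.built_slices not in (2, 3, 4):
            raise ValueError("built_slices must be 2, 3, or 4")
        if self.active_slices == 0:
            self.active_slices = self.built_slices
        self.set_active(self.active_slices)

    def set_active(self, n: int) -> None:
        """Power-gate slices so that exactly n remain active."""
        # Assumption: at least one slice must stay on to keep executing.
        if not 1 <= n <= self.built_slices:
            raise ValueError(f"active slices must be in 1..{self.built_slices}")
        self.active_slices = n

    @property
    def gated_slices(self) -> int:
        """Number of built slices that are currently powered down."""
        return self.built_slices - self.active_slices


core = SlicedCore(built_slices=4)  # static configuration
core.set_active(2)                 # dynamic: scale down for a light workload
print(core.active_slices, core.gated_slices)  # 2 2
```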

Customer Benefits

For customers evaluating licensable CPU IP, the appeal of time-based OOO is not only architectural elegance but also tangible benefits:

- Performance-per-Watt: IPC comparable or superior to traditional OOO, with lower gate count and reduced dynamic power.

- Scalability: Supports up to 8 cores per cluster with private L2 and shared L3 caches, delivering datacenter-grade throughput without prohibitive power budgets.

- Predictability: Simplified scheduling reduces verification complexity and gate count, speeding up time-to-market compared to traditional OOO designs.

- Customization: Native RISC-V ISA extensibility, combined with TRM-driven scheduling, enables faster deployment of domain-specific accelerators—critical for AI, networking, and automotive use cases.

Summary

Cuzco’s time-based out-of-order execution represents a fundamental rethinking of CPU design. By eliminating the inefficiencies of per-cycle scheduling, it reduces complexity, lowers power, and enables broader scalability—all while remaining fully compatible with the RISC-V ISA and software ecosystem.

It is an RVA23-compatible processor that delivers the best performance per watt and per square millimeter in licensable CPU IP. This is not an incremental improvement but rather a structural shift that could define the high-performance era of RISC-V.

Cuzco is designed for broad applicability:

- Datacenters: High throughput with lower power budgets translates to lower TCO.

- Mobile & Handsets: Energy efficiency with competitive performance.

- Automotive: Predictability and determinism, critical for safety workloads.

- Custom Accelerators: Domain-specific optimizations unlocked by RISC-V ISA extensibility.

To learn more:

Contact Condor Computing at condor-riscv@andestech.com

Visit the Andes Technology website.

Visit the Condor Computing website.

The talk will be available on demand once Hot Chips opens it for general access.

