Sensor-based control and activation systems have been around for decades, but the way sensors are developed and integrated into control systems has evolved significantly. Early sensor-based control systems used basic sensing elements such as switches, potentiometers, and pressure sensors, primarily in industrial applications. Rapid advances in electronics, sensor technologies, microcontrollers, and wireless communications have since made these systems far more capable and widespread. Sensor networks, including wireless sensor networks (WSNs), and Internet of Things (IoT) platforms further expanded the capabilities of sensor-based control systems, enabling distributed sensing, remote monitoring, and complex control strategies.

Today, sensor-based control and activation systems are integral components in many fields, including industrial automation, automotive systems, robotics, smart buildings, healthcare, and consumer electronics. They play a vital role in enabling intelligent, automated systems that adapt to, respond to, and interact with their environment based on real-time sensor feedback. With so many applications depending on sensor-based activation and control, how can the robustness, accuracy, and precision of these systems be ensured? This was the context for Amol Borkar’s talk at the recent Embedded Vision Summit in Santa Clara, CA. Amol is a product marketing director at Cadence for the Tensilica family of processor cores.

Use of Heterogeneous Sensors

Heterogeneous sensors are a collection of sensors that differ in sensing modality, operating principle, and measurement capability, and that offer complementary information about the environment being monitored. Image sensors, event-based image sensors, radar, lidar, gyroscopes, magnetometers, accelerometers, and Global Navigation Satellite System (GNSS) receivers are a few examples. Heterogeneous sensors are also commonly used for redundancy, which enhances fault tolerance and system reliability.
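One common way to exploit redundancy is mid-value (median) voting across sensors that estimate the same quantity by different means. The sketch below assumes a vehicle speed measured independently by GNSS, wheel odometry, and an IMU; the function name `vote_speed` and the 1.0 m/s disagreement threshold are hypothetical choices for illustration, not part of any particular product.

```python
from statistics import median

def vote_speed(gnss_mps: float, wheel_mps: float, imu_mps: float,
               max_spread: float = 1.0) -> tuple[float, list[str]]:
    """Median-vote three redundant speed estimates (hypothetical example).

    Returns the voted speed and the names of sensors whose reading differs
    from the vote by more than max_spread (an assumed threshold in m/s).
    """
    readings = {"gnss": gnss_mps, "wheel": wheel_mps, "imu": imu_mps}
    voted = median(readings.values())  # a single faulty sensor cannot drag the median far
    suspects = [name for name, value in readings.items()
                if abs(value - voted) > max_spread]
    return voted, suspects

print(vote_speed(20.1, 20.3, 35.0))  # → (20.3, ['imu'])
```

Because each sensor fails in different ways, a single faulty reading (here the IMU) is outvoted and flagged rather than propagated.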

Why Sensor Fusion

Because different sensors capture different aspects of the environment being monitored, combining their data yields a more comprehensive and accurate understanding of the surroundings. The result is enhanced perception of the environment, which in turn enables better-informed decision-making.
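A simple way to see why combining measurements improves accuracy is inverse-variance weighting of two independent, unbiased measurements of the same quantity. This is a textbook technique chosen here for illustration; the radar and camera range values and variances below are assumed numbers, not data from the talk.

```python
def fuse_inverse_variance(z1: float, var1: float,
                          z2: float, var2: float) -> tuple[float, float]:
    """Fuse two independent, unbiased measurements of the same quantity.

    Each measurement is weighted by the inverse of its variance, so the more
    precise sensor counts for more. The fused variance 1 / (w1 + w2) is never
    larger than the smaller of the two input variances.
    """
    w1 = 1.0 / var1
    w2 = 1.0 / var2
    fused = (w1 * z1 + w2 * z2) / (w1 + w2)
    fused_var = 1.0 / (w1 + w2)
    return fused, fused_var

# Assumed example: radar range 50.0 m (variance 0.25 m^2), camera range 52.0 m (variance 1.0 m^2).
print(fuse_inverse_variance(50.0, 0.25, 52.0, 1.0))  # → (50.4, 0.2)
```

The fused estimate leans toward the more precise radar, and its variance (0.2 m²) is lower than that of either sensor alone.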

More sensors mean more data, which is helpful, but each sensor type has its own limitations and measurement biases, and individual sensors often provide ambiguous or incomplete information about the environment. Sensor fusion techniques help resolve these ambiguities by combining complementary information from different sensors. By leveraging the strengths of each sensor, fusion algorithms can fill gaps, resolve conflicts, and provide a more coherent and reliable representation of the data. And because the data from multiple sensors is fused in a coherent, synchronized manner, fusion algorithms enable systems to respond in real time to changing conditions or events.
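A classic small-scale illustration of fusing complementary sensors is the complementary filter for tilt estimation: a gyroscope is smooth but drifts when integrated, while an accelerometer is drift-free but noisy. The sketch below assumes a single tilt axis computed as `atan2(ax, az)` from the accelerometer and a blending factor `alpha = 0.98`; both the convention and the parameter are illustrative assumptions, and the filter is a swapped-in textbook technique rather than anything described in the talk.

```python
import math

def complementary_tilt(samples, dt: float, alpha: float = 0.98) -> float:
    """Estimate a tilt angle (radians) by blending gyro and accelerometer data.

    samples: iterable of (gyro_rate_rad_s, accel_x_g, accel_z_g) tuples.
    alpha:   weight on the integrated gyro path (assumed value).
    """
    angle = 0.0  # assumed initial estimate
    for gyro_rate, ax, az in samples:
        gyro_angle = angle + gyro_rate * dt   # smooth short-term, but drifts with bias
        accel_angle = math.atan2(ax, az)      # noisy, but anchored to gravity
        # High-pass the gyro path and low-pass the accelerometer path.
        angle = alpha * gyro_angle + (1.0 - alpha) * accel_angle
    return angle

# Assumed scenario: sensor held still at 0.1 rad tilt, gyro with a 0.01 rad/s bias, 100 Hz for 10 s.
true_tilt = 0.1
samples = [(0.01, math.sin(true_tilt), math.cos(true_tilt))] * 1000
print(complementary_tilt(samples, dt=0.01))
```

Integrating the biased gyro alone would drift by 0.1 rad over those 10 seconds; with the accelerometer correcting it, the estimate settles within roughly 0.005 rad of the true tilt.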

In essence, sensor fusion plays a vital role in improving perception, enhancing reliability, reducing noise, increasing accuracy, handling uncertainty, enabling real-time decision-making, and optimizing resource utilization.

Fusion Types and Fusion Stages

Two important aspects of sensor fusion are (1) which types of sensor data to fuse and (2) at what stage of processing to fuse them. The first depends on the application; the second depends on the types of data being fused. For example, when a stereo pair of sensors of the same type is used, the data is fused at the point of data generation (early fusion). When an image sensor and a radar are both used to identify an object, each data stream is first processed separately and the results are fused at a late stage (late fusion). In other use cases, mid-level fusion is performed, for example when features are extracted from both image-sensor and radar data.
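For the image-sensor-plus-radar case, late fusion can be sketched as associating the final detections from each pipeline. The example below is a minimal sketch under assumed data structures (`CameraDet`, `RadarDet`, `FusedObject` are hypothetical) using greedy nearest-neighbour matching on azimuth with an assumed 2-degree gate; production trackers typically use more robust assignment methods.

```python
from dataclasses import dataclass

@dataclass
class CameraDet:          # hypothetical camera output: good at bearing and class
    azimuth_deg: float
    label: str

@dataclass
class RadarDet:           # hypothetical radar output: good at range and radial velocity
    azimuth_deg: float
    range_m: float
    velocity_mps: float

@dataclass
class FusedObject:
    label: str
    azimuth_deg: float
    range_m: float
    velocity_mps: float

def late_fuse(cams: list[CameraDet], radars: list[RadarDet],
              gate_deg: float = 2.0) -> list[FusedObject]:
    """Greedily pair each camera detection with the closest unused radar
    detection in azimuth, provided it lies within gate_deg."""
    fused: list[FusedObject] = []
    used: set[int] = set()
    for cam in cams:
        best_idx, best_err = None, gate_deg
        for i, rad in enumerate(radars):
            err = abs(cam.azimuth_deg - rad.azimuth_deg)
            if i not in used and err <= best_err:
                best_idx, best_err = i, err
        if best_idx is None:
            continue  # no radar confirmation; this sketch simply drops the detection
        used.add(best_idx)
        rad = radars[best_idx]
        # Class and bearing come from the camera; range and velocity from the radar.
        fused.append(FusedObject(cam.label, cam.azimuth_deg, rad.range_m, rad.velocity_mps))
    return fused

cams = [CameraDet(-10.0, "pedestrian"), CameraDet(15.0, "car")]
radars = [RadarDet(14.5, 32.0, -3.0), RadarDet(-9.2, 12.0, 1.1), RadarDet(40.0, 80.0, 0.0)]
for obj in late_fuse(cams, radars):
    print(obj)
```

Each sensor contributes the attributes it measures best, which is the practical payoff of fusing results rather than raw data in this case.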

Modern Day Solution Trend

While traditional digital signal processing (DSP) is still the foundation of heterogeneous sensor-based systems, these real-time systems become hard to scale and automate as they grow more complex. In addition to advanced DSP and sensor-fusion capabilities, AI processing is needed to meet the scalability, robustness, effectiveness, and automation requirements of large, complex systems. AI-based sensor fusion combines information at the feature level instead of fusing all of the raw data from the individual sensors.
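To make "fusing at the feature level" concrete, the toy sketch below extracts a small feature vector from each sensor, concatenates the vectors, and applies one linear layer with a sigmoid. In a real AI-based system the per-sensor extractors and the fusion layer would be learned neural-network components; here the extractors are hand-written stand-ins, and all function names and weights are hypothetical.

```python
import math

def camera_features(pixels: list[float]) -> list[float]:
    # Hypothetical stand-in for a learned image backbone: mean intensity and contrast.
    return [sum(pixels) / len(pixels), max(pixels) - min(pixels)]

def radar_features(returns: list[float], threshold: float = 0.5) -> list[float]:
    # Hypothetical stand-in for a learned radar backbone:
    # strongest return and number of returns above an assumed threshold.
    return [max(returns), float(sum(1 for r in returns if r > threshold))]

def fused_score(pixels: list[float], returns: list[float],
                weights: list[float], bias: float) -> float:
    """Feature-level fusion: concatenate per-sensor features, then one linear layer + sigmoid."""
    features = camera_features(pixels) + radar_features(returns)
    if len(weights) != len(features):
        raise ValueError("expected one weight per fused feature")
    z = sum(w * f for w, f in zip(weights, features)) + bias
    return 1.0 / (1.0 + math.exp(-z))  # object-present probability in (0, 1)

# Hypothetical weights; in practice these would be learned from labeled data.
print(fused_score([0.1, 0.9, 0.5], [0.2, 0.8, 0.7], weights=[0.5, 1.0, 2.0, 0.3], bias=-2.0))
```

Only the compact feature vectors cross the sensor boundary, rather than every raw pixel and radar sample, which is the distinction the paragraph above draws.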

Cadence Tensilica Solutions for Fusion-based Systems

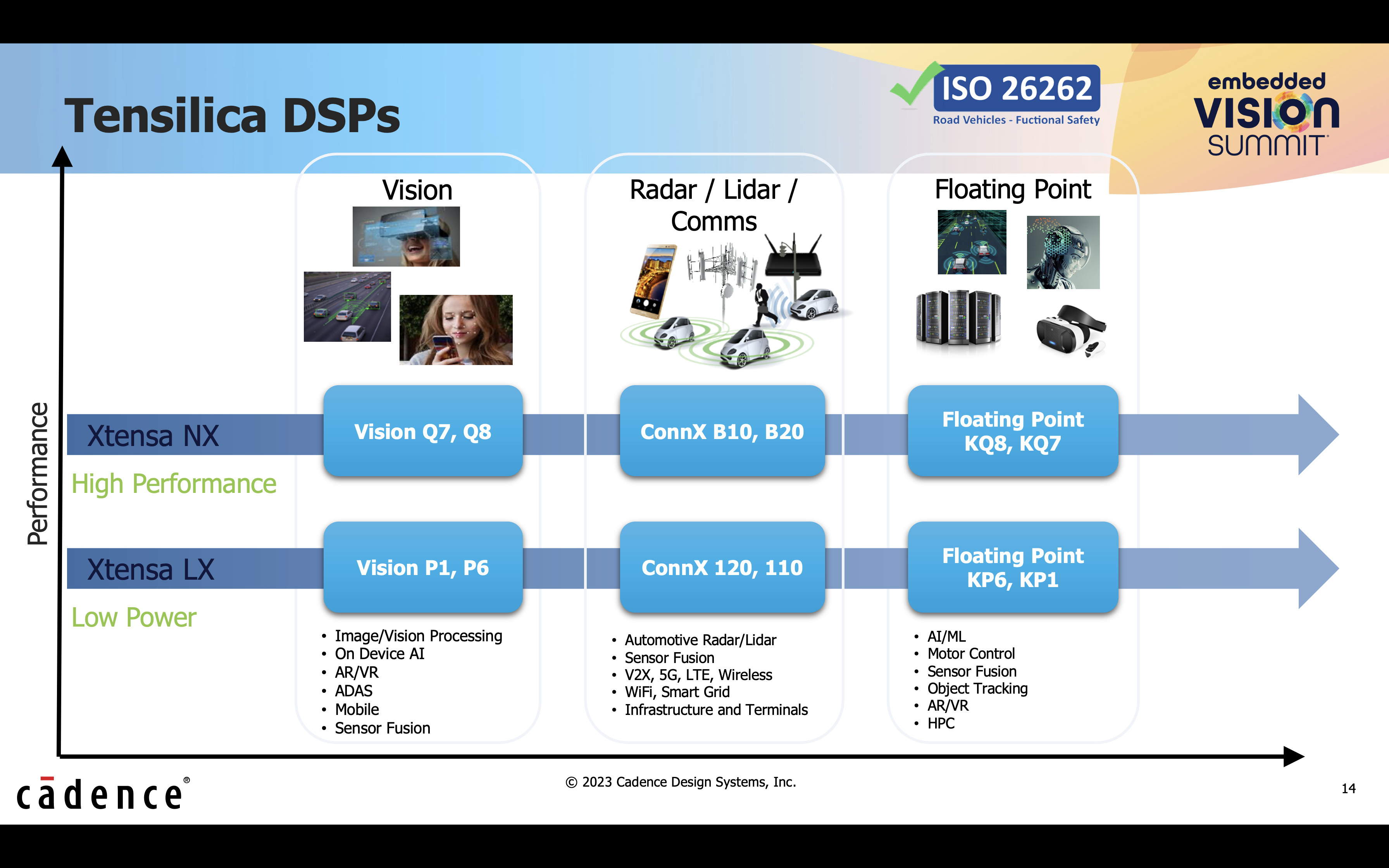

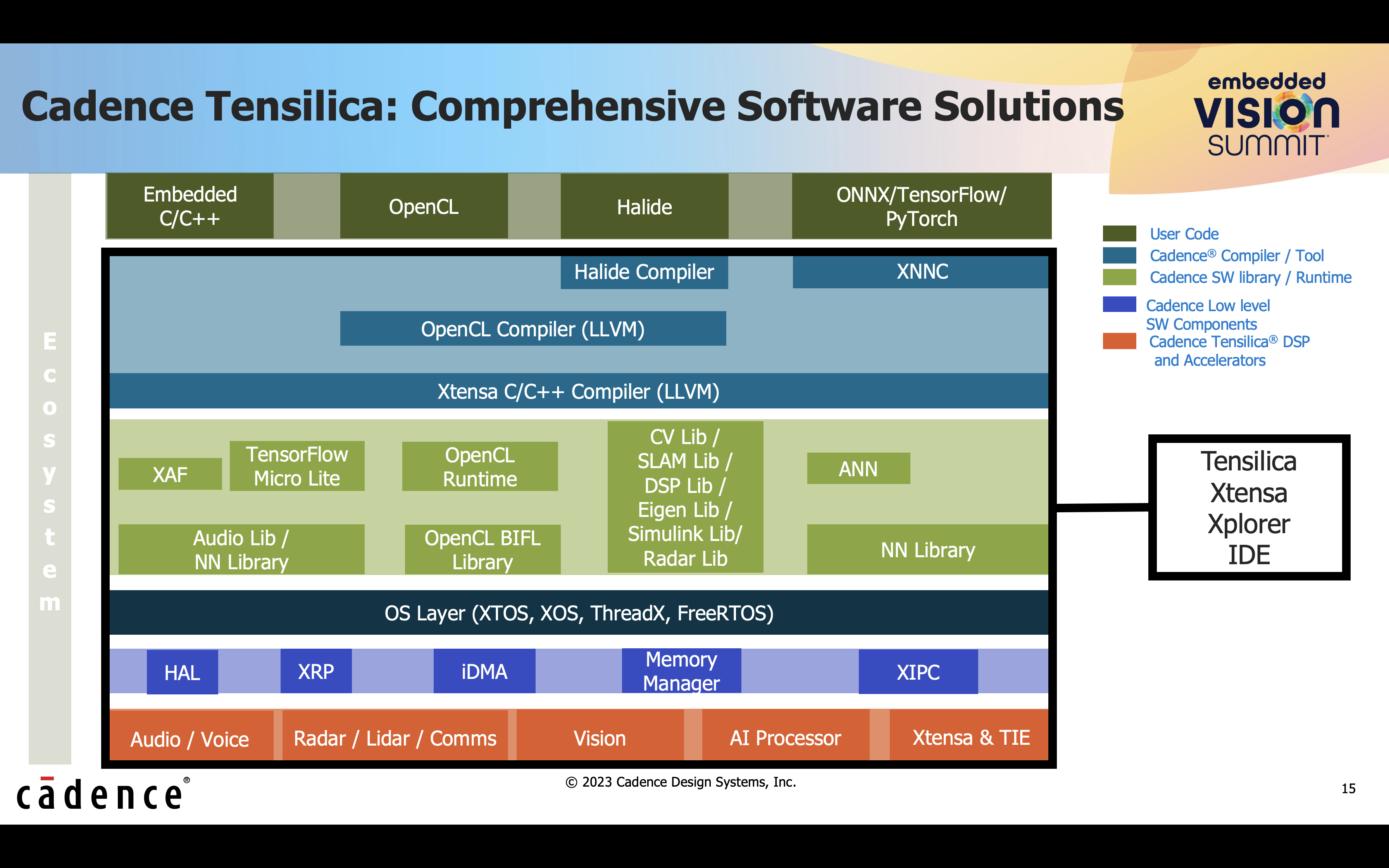

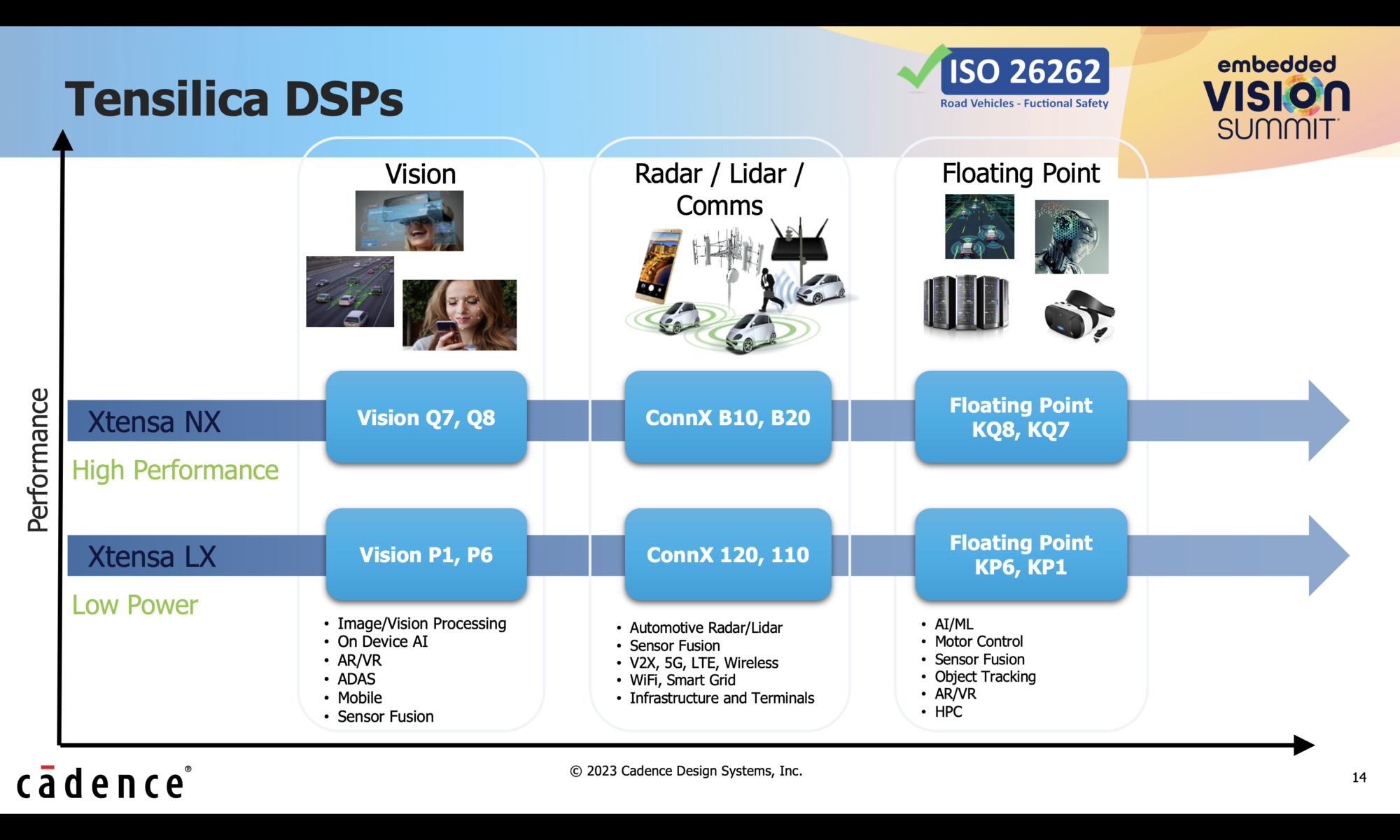

Cadence’s Tensilica ConnX and Vision processor IP core families, along with its floating-point DSP cores, make it easy to develop sensor fusion applications. Cadence also provides a comprehensive development environment for programming and optimizing applications targeting the Tensilica ConnX and Vision processor IP cores. This environment includes software development tools, libraries, and compiler optimizations that help developers achieve high performance and make efficient use of processor resources.

Tensilica ConnX is a family of specialized processor cores designed for high-performance signal processing, AI, and machine learning applications. The architecture of ConnX cores enables efficient parallel processing and accelerates tasks such as neural network inference, audio processing, image processing, and wireless communication. With their configurability and optimized architecture, these cores offer efficient processing capabilities, enabling developers to build power-efficient and high-performance systems for a range of applications.

The Tensilica Vision Processor is a specialized processor designed to accelerate vision and image processing tasks. With its configurability and architectural enhancements, it provides a flexible and efficient solution for developing high-performance vision processing systems across various industries, including surveillance, automotive, consumer electronics, and robotics.

Summary

Cadence offers a wide selection of DSPs, ranging from compact, low-power cores to high-performance cores optimized for radar, lidar, and communications applications in ADAS, autonomous driving, V2X, 5G/LTE/4G, wireless communications, drones, and robotics. To learn more about these IP cores, visit the Cadence Tensilica product pages.
