Vision pipelines, from image signal processing (ISP) through AI processing and fancy effects (super-resolution, Bokeh and others), have become fundamental to almost every aspect of the modern world. In automotive safety, robotics, drones, mobile applications and AR/VR, much of what we now consider essential would be impossible without those vision capabilities. Cadence Tensilica Vision platforms are already used in some impressive applications from companies including Toshiba, Kneron, Vayyar and GEO Semiconductor, so when Cadence extends its vision and AI product line, that’s interesting news.

Fast evolution

Remember that this is a fast-moving space. You can already find phones with 6 cameras to support digital zoom and higher quality than you could get out of a single (phone-sized) camera. AR headsets will let you measure depths through time-of-flight sensing (supported by a laser/LED) and, by extension, distances through a little trigonometry. That can be invaluable in personal or work-related AR applications where you need to capture dimensions.
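To make the "little trigonometry" concrete, here is a minimal sketch of how a depth and a dimension could be derived from time-of-flight readings. It assumes an idealized sensor that reports the round-trip time of a light pulse and that the angle between two measured rays is known. The function names are hypothetical and are not taken from any Tensilica or headset API.

```python
import math

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def tof_depth_m(round_trip_s: float) -> float:
    """Depth from a round-trip time: the pulse travels out and back, so halve the path."""
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def separation_m(depth_a_m: float, depth_b_m: float, angle_rad: float) -> float:
    """Distance between two measured points, using the law of cosines.

    depth_a_m and depth_b_m are the depths along two rays from the sensor,
    and angle_rad is the (assumed known) angle between those rays.
    """
    squared = (
        depth_a_m ** 2
        + depth_b_m ** 2
        - 2.0 * depth_a_m * depth_b_m * math.cos(angle_rad)
    )
    return math.sqrt(max(squared, 0.0))  # clamp tiny negative rounding errors
```

For example, two points measured at 3 m and 4 m along rays that are 90 degrees apart are 5 m from each other, which is the kind of dimension an AR measuring app would report.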

ISPs themselves are evolving rapidly because the quality of the image they produce critically affects the quality of recognition in the subsequent AI phase. This isn’t just about a pleasing picture. Now you must worry about whether the camera can distinguish a pedestrian stepping off the sidewalk in difficult lighting conditions, before you even get to the AI phase. Dynamic range compression is a hot area here.
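As a rough illustration of what dynamic range compression does, the sketch below applies a simple global logarithmic curve to scene luminance values. This is only one of the simplest possible operators, chosen for illustration. Production ISPs typically use more elaborate, locally adaptive methods, and nothing here reflects a specific Tensilica implementation. The function name is hypothetical.

```python
import math


def log_compress(luminances, max_luminance):
    """Map luminances in [0, max_luminance] to [0, 1] with a logarithmic curve.

    Dark values are expanded and bright values are squeezed, so detail in
    shadows survives when the result is quantized to a narrow output range.
    """
    if max_luminance <= 0:
        raise ValueError("max_luminance must be positive")
    scale = math.log1p(max_luminance)
    return [math.log1p(value) / scale for value in luminances]
```

With a linear mapping, a shadow value of 10 in a scene that peaks at 1000 would land at 1% of the output range. The logarithmic curve places it far higher, which is why a pedestrian in shadow can remain distinguishable next to bright headlights.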

Then there’s AI, a world which continues to raise the bar on innovation in so many ways. This part of the pipeline is constantly advancing, spinning new neural net architectures to boost performance for safety-critical applications and to reduce power for most applications, especially at the edge.

And finally post-processing. Bokeh for a nice background blur around that picture of your kids. Merging a real view with an augmented overlay (suitably aligned) for an AR headset or glasses. Or consider SLAM processing for that robot vacuum to navigate around your house. Or a robot orderly to navigate around a hospital, delivering medications and meals to patients. SLAM works largely through vision, building a map on the fly and correcting frequently to guide navigation. Curiously, SLAM doesn’t yet depend on AI, though there are indications AI is starting to appear in some applications.

What it takes

All of this means multiple high-resolution video streams, plus perhaps time-of-flight sensor data, converging first into an ISP, requiring very intensive signal processing. Then into pre-processing to build, say, a 3D point cloud. Then perhaps into SLAM for all that localization and mapping. These are massive linear algebra tasks, generally requiring at least single-precision floating point, sometimes double.
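The point-cloud step can be sketched as follows, assuming a pinhole camera model and a depth map that is already aligned with the image. The intrinsics `fx`, `fy`, `cx` and `cy` (focal lengths and principal point, in pixels) are illustrative parameters, not values from the article. A real pipeline would run this as vectorized floating-point code on the DSP rather than in Python loops.

```python
def depth_to_point_cloud(depth, fx, fy, cx, cy):
    """Back-project a depth map into 3D points with a pinhole camera model.

    depth is a list of rows; depth[v][u] is the distance along the optical
    axis for pixel (u, v). Pixels with non-positive depth are treated as
    invalid and skipped.
    """
    points = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z <= 0:
                continue  # no valid measurement at this pixel
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points
```

Every valid pixel costs a handful of floating-point multiplies and divides, and that work repeats for every pixel of every frame of every stream, which gives a feel for why floating-point throughput matters in this part of the pipeline.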

The AI task is becoming a little more familiar: sliding windows over massive fixed-point convolution, ReLU and other operations across many neural net planes, requiring heavy parallelism and lots of MAC (multiply-accumulate) primitives. As much of the computation as possible should stay in local memory because off-chip memory access is even uglier for AI power and performance than for regular algorithms.
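The sliding-window pattern can be sketched in a few lines. The example below is a scalar reference model under assumed conventions: 8-bit integer inputs and weights, a wide accumulator, ReLU, then requantization by a right shift with saturation to the int8 maximum. The function name and the shift-based requantization are illustrative choices, not a description of how the Vision DSPs implement it. On real hardware the inner loop is what gets spread across SIMD lanes.

```python
def conv2d_relu_fixed_point(image, kernel, shift):
    """Valid 2D sliding-window convolution (cross-correlation form) with ReLU.

    image and kernel hold small integers (int8 range assumed). Each output is
    accumulated in a wide integer, clamped at zero (ReLU), scaled down by a
    right shift, and saturated to 127.
    """
    kernel_h, kernel_w = len(kernel), len(kernel[0])
    out_h = len(image) - kernel_h + 1
    out_w = len(image[0]) - kernel_w + 1
    output = []
    for y in range(out_h):
        row = []
        for x in range(out_w):
            acc = 0  # Python ints are unbounded; hardware would use e.g. 32 bits
            for ky in range(kernel_h):
                for kx in range(kernel_w):
                    acc += image[y + ky][x + kx] * kernel[ky][kx]  # one MAC
            acc = max(acc, 0)  # ReLU
            row.append(min(acc >> shift, 127))  # requantize and saturate
        output.append(row)
    return output
```

Even this toy version performs one MAC per kernel element per output pixel, so a single 3x3 layer over a megapixel plane already needs roughly nine million MACs. That is the arithmetic behind the demand for heavy parallelism and local memory.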

Then you must fuse those inputs to enhance accuracy in object recognition through redundancy (low false positives and negatives), to compute depths and dimensions and whatever other conclusions could be derived from these images. Doing all of this requires a platform that is very fast, supporting all that signal processing, linear algebra and convolution. And very flexible because the algorithms continue to evolve. A platform which can also support hardware differentiation, to make your product better than your competitors’ offerings. The only way that I know to fit that profile is with embedded customizable DSPs.

A spectrum of solutions

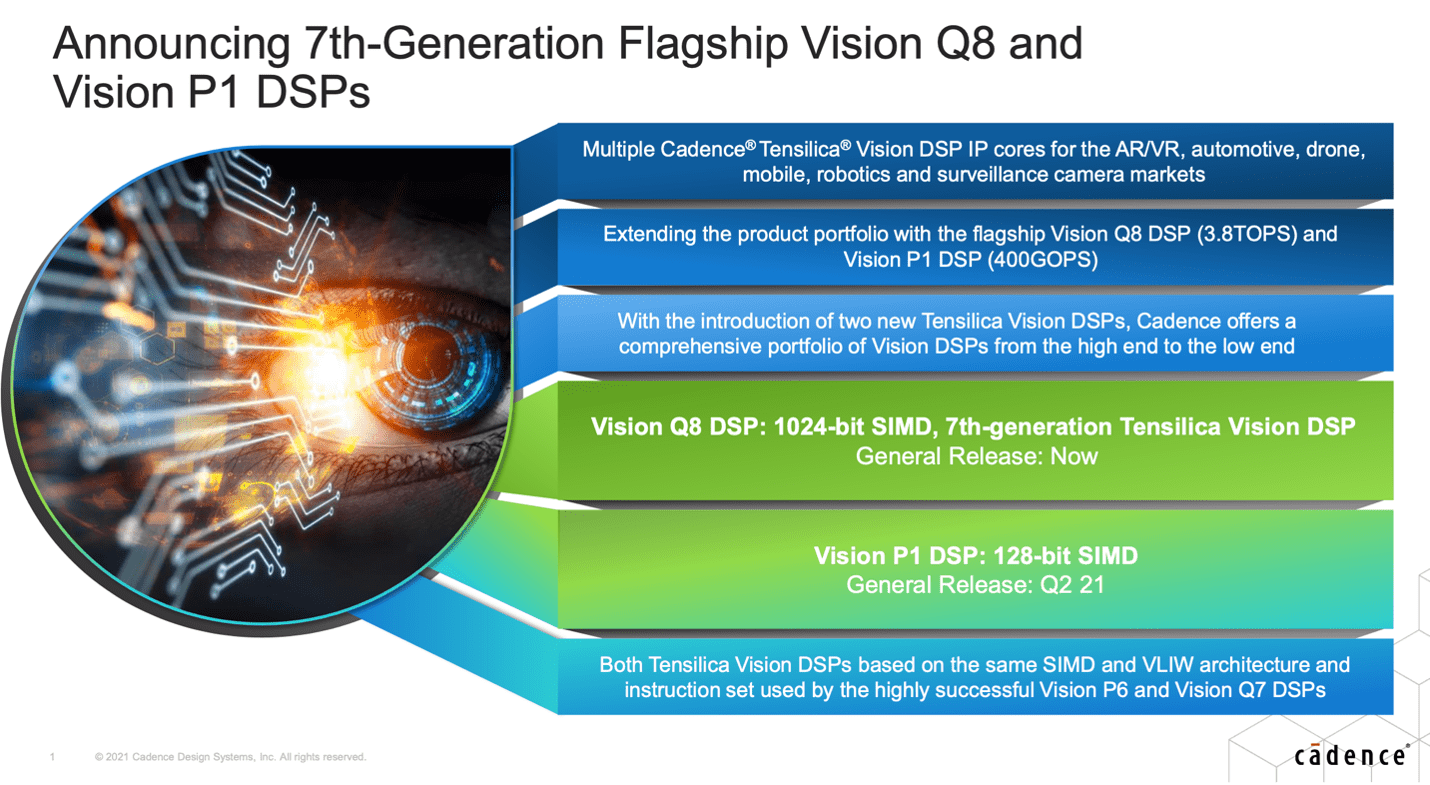

The range of applications an embedded solution like this must support demands both high performance and low power options. Tensilica already provides its Vision Q7 and Vision P6 platforms for high throughput and low power respectively. Now the family has been extended. The Vision Q8 offers 2X the performance of the Q7 in computer vision, AI and floating point and addresses high-end mobile and automotive applications. The Vision P1 offers a third of the power and area of the P6 and is targeted at always-on applications (face recognition, smart surveillance, video doorbell, …). Sensors for these applications will trigger (on movement or proximity for example) a wakeup call to the app.

Both processors use the same SIMD and VLIW architecture used in the Q7 and P6, along with the same software tools, libraries and interfaces. These include OpenCL, Halide, C/C++ and OpenVX for computer vision, and all the standard networks for AI.

And this is really cool. Suppose you have your own AI acceleration hardware. Not the full accelerator but some part of it where you will add your own special sauce. The Tensilica platforms will operate as the AI master engine but can offload those planes to your special hardware, which hands back to the master when it is done. The compile flow through Tensilica XNNC-Link supports this division of labor starting from a common input.

You can learn more about these Tensilica platforms HERE.
