Let’s face it, AI is everywhere. From the cloud to the edge to your pocket, more and more embedded intelligence is fueling efficiency and features. It’s sometimes hard to discern where human interaction ends and machine interaction begins. The technology that underlies all this is complex. Sometimes low power is critical, sometimes it’s all about raw throughput, and it’s always about flexibility and programmability. Putting all this in perspective can be daunting; just cataloging all the disciplines and suppliers involved is hard.

So when I saw a recent White Paper from Synopsys on the topic of AI, SoCs and IP, I took notice. After reading it, I came away with a much better grasp of what is going on in the area of chips and AI. Indeed, Synopsys delivers a brief history of AI chips and specialty AI IP that covers a lot of ground.

If you are interested in AI, no matter what your job is, you’re going to want to read this White Paper. A link is coming, but let’s review some of the topics covered first. The author is Ron Lowman, DesignWare IP Strategic Marketing Manager at Synopsys. Besides his time at Synopsys, Ron has had a long stint at Motorola and then Freescale. He clearly understands the space and does a great job explaining how all the pieces fit together.

The breadth of application of AI is truly remarkable. The White Paper cites a helpful Semico report, and one quote from it is quite telling: “some level of AI function in literally every type of silicon is strong and gaining momentum.” That says a lot.

The various types of silicon for AI are discussed, and two are of note: stand-alone accelerators that connect in some fashion to an application processor, and application processors with added on-device neural network hardware acceleration. The market segments served by these kinds of chips are detailed. There are many, and they exhibit various levels of three key parameters, depending on the application:

- Performance in TOPS

- Performance in TOPS/Watt

- Model compression

If you want to know how these parameters fit the various markets and what process technologies are used, download the White Paper. An interesting view of market growth is also provided that looks at expansion across high (>100 W), medium (5–100 W) and low (<5 W) power requirements. As you might imagine, the growth is extremely large. The split between the various power regimes may surprise you.
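To get a feel for how these parameters relate, here is a minimal Python sketch. It is not taken from the White Paper: the `Accelerator` class, its field names and the example numbers are all hypothetical. It assumes the common convention that one multiply-accumulate (MAC) counts as two operations, and it uses the three power tiers quoted above.

```python
from dataclasses import dataclass


@dataclass
class Accelerator:
    """Hypothetical accelerator description; every field is illustrative."""
    mac_units: int    # number of parallel multiply-accumulate units
    clock_hz: float   # clock frequency in Hz
    power_w: float    # power draw in watts

    def tops(self) -> float:
        # Assumed convention: one MAC = 2 operations (a multiply and an add).
        return 2 * self.mac_units * self.clock_hz / 1e12

    def tops_per_watt(self) -> float:
        return self.tops() / self.power_w

    def power_class(self) -> str:
        # Tiers as quoted in the post: high (>100 W), medium (5-100 W), low (<5 W).
        if self.power_w > 100:
            return "high"
        if self.power_w >= 5:
            return "medium"
        return "low"


def model_size_bytes(num_params: int, bits_per_param: int) -> float:
    """Storage needed for the weights alone, ignoring any file-format overhead."""
    return num_params * bits_per_param / 8


# Made-up edge device: 1024 MAC units at 1 GHz drawing 2 W.
edge = Accelerator(mac_units=1024, clock_hz=1e9, power_w=2.0)
print(edge.tops(), edge.tops_per_watt(), edge.power_class())

# One simple form of model compression: storing weights in 8 bits instead of 32.
ratio = model_size_bytes(1_000_000, 32) / model_size_bytes(1_000_000, 8)
print(ratio)
```

Peak TOPS computed this way is an upper bound; real workloads rarely keep every MAC unit busy, which is one reason the White Paper treats memory access and connectivity as separate challenges.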

The challenges associated with AI chip design are then discussed. There are many, including balancing power, latency and performance, special memory architectures, data connectivity and security. A useful set of core challenges that span all markets is provided:

- Adding specialized processing capabilities that are much more efficient at performing the necessary math, such as matrix multiplications and dot products

- Efficient memory access for processing of unique coefficients such as weights and activations needed for deep learning

- Reliable, proven real-time interfaces for chip-to-chip, chip-to-cloud, sensor data, and accelerator-to-host connectivity

- Protecting and securing data against hackers or data corruption
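The first two challenges are easier to picture with a small example. The sketch below is plain Python written for illustration, not anything from the White Paper, and the function names are hypothetical. It shows a neural network layer as a matrix-vector product built from dot products, where every step is one multiply-accumulate on a weight and an activation.

```python
def dot(weights, activations):
    """Dot product as a chain of multiply-accumulate (MAC) steps."""
    if len(weights) != len(activations):
        raise ValueError("weights and activations must have the same length")
    acc = 0
    for w, a in zip(weights, activations):
        acc += w * a  # one MAC: fetch a weight, fetch an activation, accumulate
    return acc


def dense_layer(weight_rows, activations):
    """Matrix-vector product: one dot product per output value."""
    return [dot(row, activations) for row in weight_rows]


def mac_count(num_outputs, num_inputs):
    """MACs needed for one pass through a fully connected layer."""
    return num_outputs * num_inputs


weights = [[1, 2, 3],
           [4, 5, 6]]        # 2 outputs x 3 inputs
activations = [1, 0, -1]

print(dense_layer(weights, activations))  # [-2, -2]
print(mac_count(2, 3))                    # 6
```

Each MAC needs a weight and an activation delivered from memory, so as layers grow the arithmetic and the memory traffic grow together. That is why specialized math units and efficient memory access appear side by side in the list.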

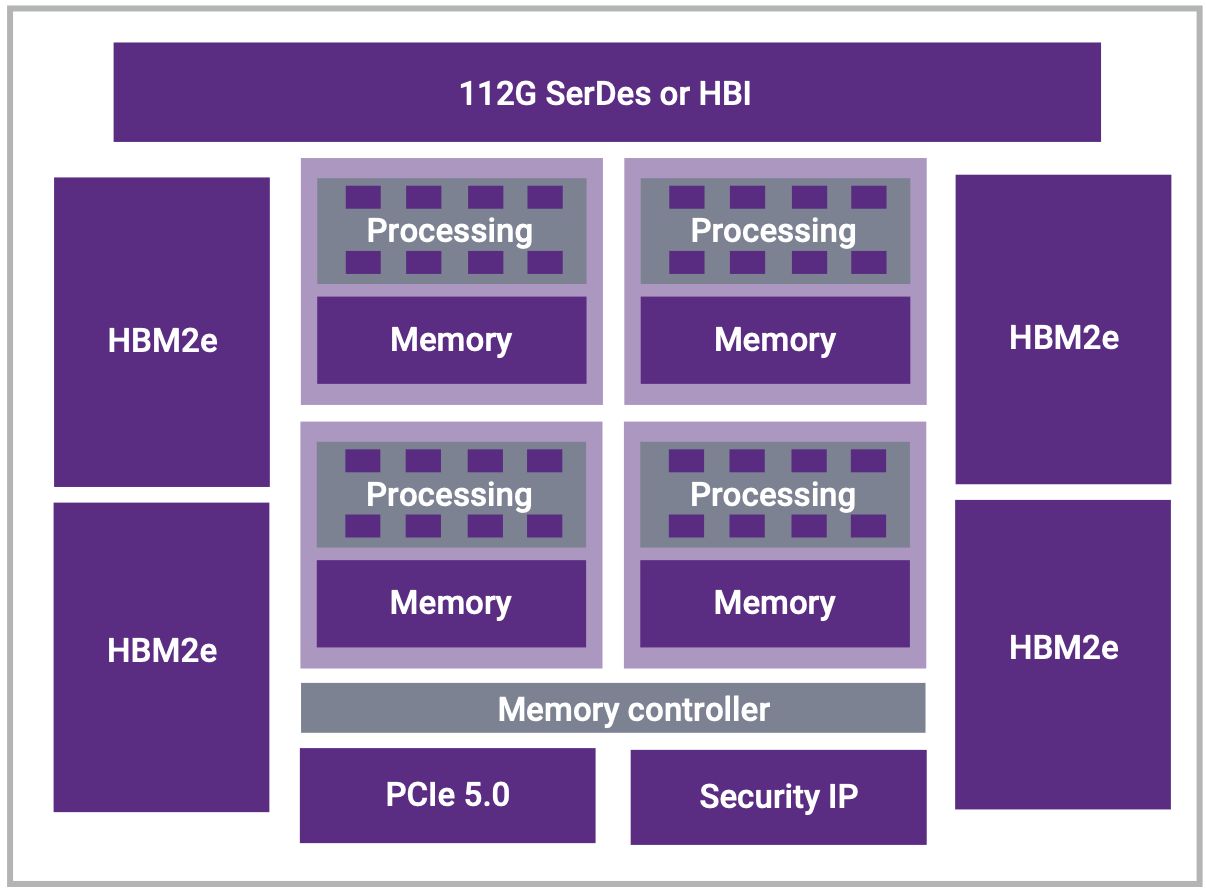

Typical architectures for various types of AI devices are then discussed. Applications here include cloud, edge and on-device. The figure above is a representation of a cloud AI accelerator SoC. The White Paper then outlines the DesignWare IP that is available for AI SoCs. The categories discussed include:

- Specialized processing

- Memory performance

- Real-time data connectivity

- Security

Synopsys has quite a large footprint here, and there’s a lot to browse. I’ve covered Synopsys IP support for AI/ML in this prior post as well.

The White Paper concludes with a very important discussion of the tools and support Synopsys provides to help you get your AI application out the door. This includes tools for software development, verification and benchmarking, as well as expertise and IP customization. You can also review several customer successes, including Tenstorrent, Black Sesame, Nvidia, Himax, Infineon and Orbbec. This White Paper has something for everyone. Synopsys really delivers a brief history of AI chips and specialty AI IP that covers a lot of ground. The White Paper title is “The Growing Market for Specialized Artificial Intelligence IP in SoCs”, and you can download your copy here.

The views, thoughts, and opinions expressed in this blog belong solely to the author, and not to the author’s employer, organization, committee or any other group or individual.

