Intelligence at the edge is a hot topic these days: recognizing objects, faces, speech and so on without having to go all the way to the cloud. But I find promoters can be rather fuzzy about what they mean by “the edge”. For many, intelligence at the edge means intelligence closer to the edge than the cloud, in a gateway for example, not actually in an edge device such as an earbud, surveillance camera, agricultural moisture sensor or whatever. Which I get. Surely AI is going to burn too much power to run in a tiny device. So is this a non-problem? Are there really use cases for low-energy intelligence at the extreme edge?

Use cases

Yes, there are. Wireless earbuds are getting smarter with active noise cancellation and 3D audio (what Apple calls spatial audio). Command recognition still happens on the phone, but it doesn’t have to. Smart glasses are making a comeback in support of AR: for surgery, engine maintenance, and guidance around factories and warehouses. Also for consumers, guiding you to features and products while you walk around a store, or helping you find a restaurant. For the hearing-impaired, they can support audio zoom, amplifying sound from a speaker you’re looking at while suppressing other ambient noise. No need to point your phone at the speaker.

Gateways? Yeah, but…

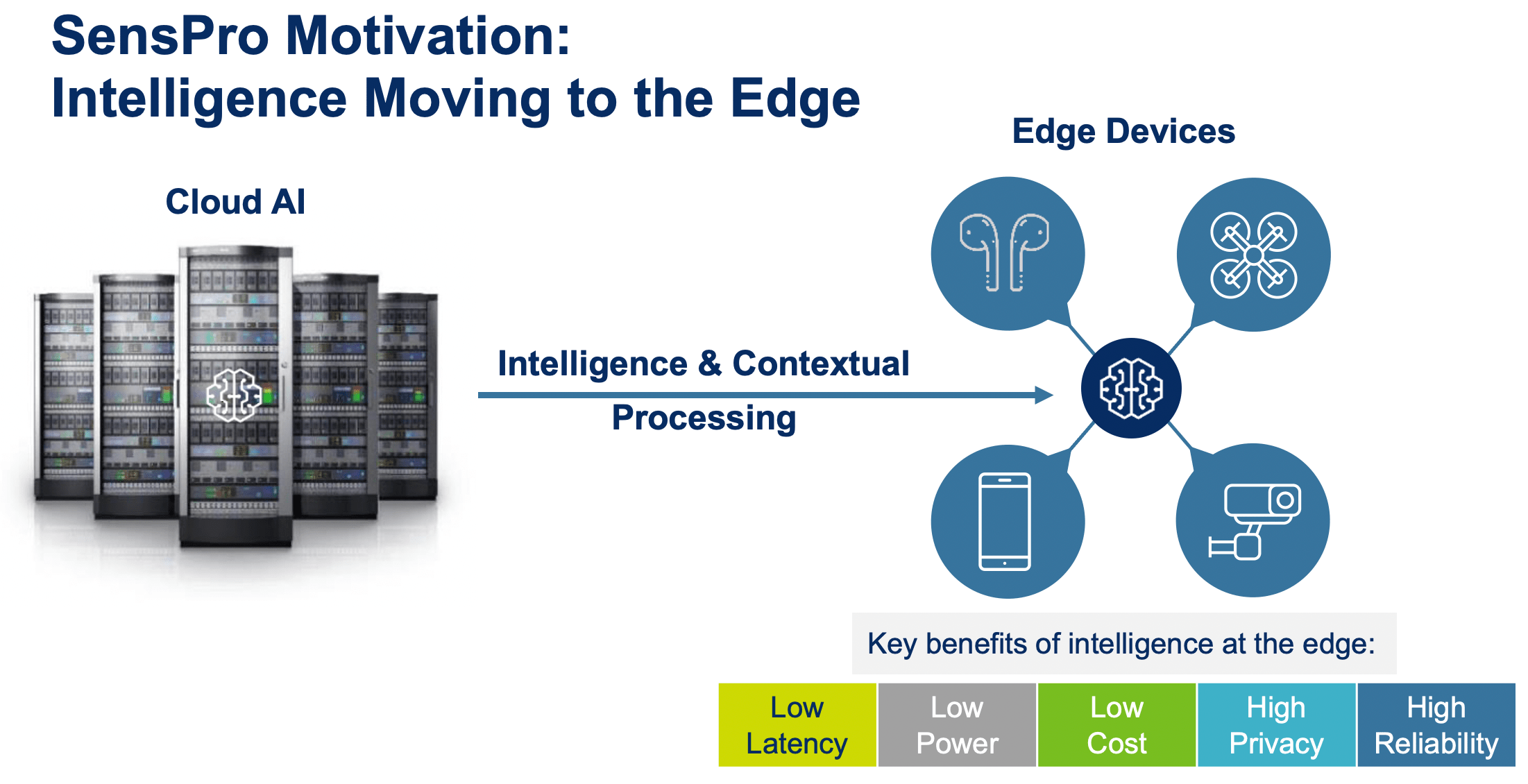

Gateway support would be great if the gateway were always nearby and always reliable, with zero latency, close to zero cost and guaranteed privacy; if the edge device didn’t have to burn a lot of power uploading raw video and audio; and if communication bandwidth were unlimited, so that all these smart devices didn’t interfere with each other. That’s a lot of ‘ifs’. In reality, communication is imperfect and sometimes blocked. On a gateway, your tasks compete with others, which adds variable latency to image and audio feeds. That in turn can lead to motion sickness or make the device unusable.

Edge devices by design have tiny batteries, and gateways are expensive, so they aren’t always nearby. And the final insult: the more smart devices we have, the more we’ll struggle with bandwidth. I already have problems with Bluetooth and Wi-Fi collisions. A better solution is what’s already happening in cars: push more basic intelligence into the real edge. Let your earbuds, smart glasses and hearing aids carry the initial intelligence burden without needing to communicate. Upload to the gateway only for the hard AI tasks, natural language processing for example.

High performance, low energy requirement

This demands a very low energy budget for intelligent processing, while still being able to do that processing quickly. That should sound familiar to my readers: you want to run fast, then stop. It starts with a very low-energy trigger, say voice activity detection followed by trigger-phrase recognition (e.g. OK Google), which then turns on the real engine. You want performance, so you need vector processing and a lot of MACs (multiply-accumulate units) to support neural-net processing. But you want to run at a low clock frequency (while still delivering good performance) because you’re running on tiny batteries. As soon as the task completes, the engine turns off again.
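
The trigger cascade described above can be sketched as a toy model in Python. This is a minimal sketch under stated assumptions, not CEVA’s design or any product’s firmware: frame_energy is a crude energy threshold standing in for real voice activity detection, and is_trigger and heavy_engine are hypothetical callables standing in for a trigger-phrase recognizer and the neural-net engine. The average_power_mw helper shows the “run fast, then stop” arithmetic: average power is the duty-cycle-weighted mix of active and idle power.

```python
from typing import Callable, List, Sequence

Frame = Sequence[float]  # one short window of audio samples (hypothetical format)


def frame_energy(frame: Frame) -> float:
    """Mean squared amplitude; a crude stand-in for a real voice activity detector."""
    return sum(x * x for x in frame) / len(frame)


def run_cascade(
    frames: Sequence[Frame],
    vad_threshold: float,
    is_trigger: Callable[[Frame], bool],
    heavy_engine: Callable[[Frame], str],
) -> List[str]:
    """Wake the expensive engine only when both cheap stages fire."""
    results: List[str] = []
    for frame in frames:
        # Stage 1: always-on, very low-energy check for any voice activity.
        if frame_energy(frame) < vad_threshold:
            continue
        # Stage 2: trigger-phrase check, run only on frames that contain voice.
        if not is_trigger(frame):
            continue
        # Stage 3: power up the vector/MAC engine, finish the task, then go idle.
        results.append(heavy_engine(frame))
    return results


def average_power_mw(p_active_mw: float, p_idle_mw: float, duty_cycle: float) -> float:
    """Time-weighted average power for an engine that is active duty_cycle of the time."""
    if not 0.0 <= duty_cycle <= 1.0:
        raise ValueError("duty_cycle must be between 0 and 1")
    return duty_cycle * p_active_mw + (1.0 - duty_cycle) * p_idle_mw


if __name__ == "__main__":
    silence = [0.0] * 160
    background = [0.2] * 160  # voiced, but not treated as the trigger
    command = [0.5] * 160     # voiced and treated as the trigger
    out = run_cascade(
        [silence, background, command],
        vad_threshold=0.01,
        is_trigger=lambda f: max(f) > 0.4,    # toy trigger detector
        heavy_engine=lambda f: "recognized",  # toy stand-in for the NN engine
    )
    print(out)
    # Hypothetical figures: 50 mW while the engine runs, 0.1 mW idle, active 1% of the time.
    print(average_power_mw(50.0, 0.1, 0.01))
```

With those hypothetical figures, average power comes out at about 0.6 mW, and most of it comes from the 1% of time the engine is active, so in this toy model shortening the active window (running fast, then stopping) pays off directly.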

The next wave?

We’re on a very active search for natural interfaces that deliver practical value everywhere, getting away not just from the laptop but even from the cellphone. (Maybe the cellphone becomes a pocket gateway.) Connectivity and audio VR in our wireless earbuds, tight directional amplification for the hearing-impaired, useful AR, utility robots such as home vacuums (they need SLAM, which is also practical in this kind of solution), anomaly detection for home security and factory performance monitoring. Moving everything that now needs a screen and a keyboard closer to the way we naturally function. That demands low-energy intelligence at the extreme edge. CEVA recently introduced their SensPro family of IPs for just this purpose: vectorized DSP IP, a range of MAC options and TensorFlow Lite Micro support. Bringing the goal closer. Check them out.