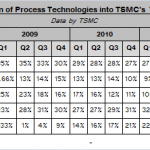

As I write this I sit heavyhearted in the EVA executive lounge returning from my 69th trip to Taiwan. I go every month or so, you do the math. This trip was very disappointing as I can now confirm that just about everything you have read about TSMC 28nm yield is absolutely MANURE!

Continue reading “The Truth of TSMC 28nm Yield!”

Arteris evangelization High Speed Interfaces!

Kurt Shuler from Arteris has written a short but useful blog about the various high-speed interface protocols currently used in the wireless handset (and smartphone) IP ecosystem. Arteris is well known for its flagship product, the Network-on-Chip (NoC), and the mobile application processor market segment is the first target for NoC. NoC is the IP that helps increase overall chip performance by optimizing the internal interconnect, avoids routing congestion during Place & Route, and helps the SoC design team integrate the many functions more quickly; such an IP is more than welcome in such a competitive IC market segment! To be clear, NoC supports interconnect inside the chip, whereas Kurt’s blog deals with the functions used to interface the SoC with the other ICs located inside the same system (smartphone or media tablet). The blog provides a very useful summary, in the form of a table listing the features of MIPI HSI (High-speed Synchronous Serial Interface), USB HSIC (High Speed Inter-Chip), MIPI UniPro & UniPort, MIPI LLI (Low Latency Interface) and C2C (Chip-To-Chip Link).

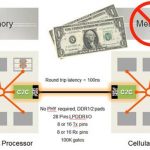

We will come back later to the listed MIPI specifications and USB HSIC, but I would like to highlight the last two in the list: LLI and C2C.

The first is based on high-speed serial differential signaling and requires a MIPI M-PHY physical block, whereas the second is a parallel interface requiring only LPDDR2 I/Os, but both functions are used with the aim of sharing a single memory (DRAM) between two chips, usually the application processor and the modem. The result is that the system integrator will save $2 in the bill of materials (BOM)… It does not look so fantastic, until you start multiplying these two bucks by the number of systems built by an OEM. Just multiply several tens of millions by $2 and you realize that the return on investment (against the additional cost of the C2C or LLI IP license) can come very fast, and represent several tens of millions of dollars!
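The arithmetic behind that claim can be sketched in a few lines of Python. The $2 saving per system comes from the text; the shipment volumes and the license cost below are purely hypothetical placeholders, chosen only to show the shape of the calculation.

```python
def bom_saving_usd(units_shipped: int, saving_per_unit_usd: float = 2.0) -> float:
    """Total BOM saving across all systems an OEM ships."""
    return units_shipped * saving_per_unit_usd


def break_even_units(license_cost_usd: float, saving_per_unit_usd: float = 2.0) -> float:
    """Number of systems needed before the saving covers the IP license."""
    return license_cost_usd / saving_per_unit_usd


if __name__ == "__main__":
    # Hypothetical figure, not from the article: a one-off license cost.
    hypothetical_license_cost_usd = 1_000_000
    # Hypothetical shipment volumes in the "tens of millions" range.
    for units in (10_000_000, 30_000_000, 50_000_000):
        print(f"{units:>11,} systems -> ${bom_saving_usd(units):,.0f} saved")
    units_needed = break_even_units(hypothetical_license_cost_usd)
    print(f"break-even after {units_needed:,.0f} systems")
```

Whatever the real license cost is, the break-even volume is simply that cost divided by two, which is why the saving dominates so quickly at handset volumes.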

I should also add that Arteris is marketing both of these controller IP functions; while the company has the full rights to C2C, LLI is one of the numerous MIPI specifications. To give you some insight, LLI was originally developed by one of the well-known application processor chip makers; the company then offered LLI to the MIPI Alliance and asked Arteris to turn this internally developed function into a marketable IP, which Arteris is doing with indisputable success. As far as I am concerned, both LLI and C2C are “self-selling”: as soon as you know that you can save $2 on the system BOM, you can imagine that OEMs are pushing the chip makers hard to integrate such a wonderful function!

About Arteris

Arteris provides Network-on-Chip (NoC) interconnect semiconductor intellectual property (IP) to System on Chip (SoC) makers so they can reduce cycle time, increase margins, and easily add functionality. Arteris invented the industry’s first commercial network on chip (NoC) SoC interconnect IP solutions and is the industry leader. Unlike traditional solutions, Arteris interconnect plug-and-play technology is flexible and efficient, allowing designers to optimize for throughput, power, latency and floorplan.

To know more about MIPI, you can visit:

MIPI Alliance web

MIPI wiki on Semiwiki

MIPI survey on IPNEST

Reminder: for Kurt’s blog, just go here!

Eric Esteve from IPNEST

Handsets, what’s up?

So who’s in and who’s out these days in handsets?

It looks as if Samsung has finally achieved a long-held goal to be the largest handset vendor, taking over from Nokia, which has been the market leader for 14 years since 1998 when it passed Motorola. Nokia hasn’t reported yet but they cut their forecast. Samsung had a record quarter. Bloomberg estimates that Samsung sold 44M smartphones in Q1, and 92M phones in total, easily beating 83M for Nokia. Samsung also has a goal to be number one in semiconductors and overtake Intel, which they may well do but not immediately.

Nokia, as I’m sure you know, is largely betting its future on Microsoft and, in the US, on AT&T. It launched its new Lumia phone over Easter weekend (when most AT&T stores were closed, not exactly like an iPhone launch with people camped out overnight to get their hands on the new model). There were also technical glitches about not being able to connect to the internet, which is a pretty essential feature for a smartphone. My own prediction is that WP7 is too little, too late and as a result Nokia is doomed. But maybe I underestimate the desperate need of the carriers to have an alternative to Android and iPhone that is more under their own control.

Funny isn’t it to look back just 8 or 10 years to when the carriers were paranoid about Microsoft, worrying that it might do to them in phones what they did to PC manufacturers where they took all the money (well, Intel got some too)? In the end it seems that it is then-tiny-market-share Apple that is taking all the money, 1/2 of the entire handset profits by some reports. iPhone alone is bigger than the whole of Microsoft. Samsung is also making good money but all the other smartphone handset makers such as HTC seem to be struggling. Now Microsoft is seen as the weakling, able to be bullied around the schoolyard by the carriers.

I still don’t entirely understand Google’s Android strategy. This quarter, for the first time, over 50% of new smartphones were Android based but Google makes very little from each one and all that is in incremental search. Like the old joke about if all you have is a hammer everything looks like a nail, every business seems to look like search to Google. Amazon is certainly making money with Android, and so is Samsung. There are rumors that Microsoft makes more than Google (through patent licenses to the major Android handset and tablet manufacturers). It remains to be seen what Google does with Motorola Mobility. If it favors them too much it risks alienating its other partners and pushing them away from Android. If it doesn’t favor them at all I don’t see why they should suddenly become a market leader in smartphones.

iPhone5 is expected in June or July. Presumably containing the quad-core A6. Presumably with LTE like the new iPad. But, of course, Apple isn’t saying anything.

Chip in the Clouds – "Gathering"

Cloud computing is the talk of the tech world nowadays. I even hear commentaries about how entrepreneurs are turned down by venture capitalists for not including a cloud component into their business plan no matter what the core business may be. The commentary goes “It’s cloudy without any clouds.” Add some clouds to your strategy and the future will be bright and sunny.

With such a strong trend, one might have expected companies within the $300B semiconductor market to have adopted “cloud” into their strategies by now, and the answer is yes, to varying degrees. Large established semiconductor companies, as well as companies in the semiconductor value chain, have built enterprise-wide clouds so that their engineers can tap into a vast compute farm. But the right number of the latest and greatest compute resources may not always be available for the task at hand, independent of the size of the compute farm. This is because the compute farm is typically upgraded with new hardware resources on an incremental basis. So although the engineers may have their own private clouds to address their chip design needs, peak-time compute resource needs are not addressed optimally. And then there is the matter of peak-time EDA tool resources. Companies are still limited by the number of EDA tool licenses they own. If you’re a major customer of an EDA tools supplier, it is not an issue, as peak-load license needs are addressed through temporary or short-term licenses. For everyone else, it is a painful negotiation with their EDA tools supplier. However much planning is done, peak-load needs cannot always be predicted well ahead of time. And the longer the negotiation with the EDA supplier takes, the more the customer falls behind on their tapeout schedule and consequently their time-to-market schedule.

In other words, today, large to medium sized semiconductor companies have a private cloud for their compute needs and a kludge solution for their EDA license needs, depending on their stature with their EDA suppliers. This solution has several issues: (1) they would not otherwise need to maintain their own compute servers, but do so only to ensure they have access to compute power on demand; (2) they don’t have an automatic way to add EDA tool resources on demand; (3) if using an EDA tools supplier’s cloud, customers don’t have a seamless cloud-based design flow, simply because the design flow involves tools from more than one supplier.

As for smaller semiconductor companies, they neither have their own private cloud nor do they have the same flexible access to EDA tools licenses on-demand. And if using an EDA tools supplier’s cloud, they face the same issue as the larger customers do.

If a secure cloud-based chip design platform from a third-party company provided an EDA-supplier-agnostic, seamless design flow, where the customer could tap into one particular set of tools for one chip project and a different set of tools (as per their team’s needs and skills) for a different chip project, that would be the ultimate offering. That ultimate offering is what would be called a “Chip in the Clouds” platform.

“Chip in the Clouds” may have sounded a lot like “Head in the clouds.” But it is not. The time has arrived for the “Chip in the Clouds” platform to play a key role in redefining how chips are designed and implemented. Why do I say this? Stay tuned for future installments of my blog in which I’ll discuss the driving factors for the adoption as well as what is happening in the platform offering space.

I Love DAC

For the fourth year Atrenta, Cadence and Springsoft are jointly sponsoring the “I LOVE DAC” campaign. In case you have been hibernating all winter, DAC is June 3-7th in San Francisco at the Moscone Center.

There are two parts to “I LOVE DAC”. First, if you register by May 15th (and they haven’t all gone) then you can get a free 3-day exhibit pass for DAC. In fact this pass entitles you not just to the exhibits but also the pavilion panels (which take place in the exhibit hall), the three keynotes and the evening receptions after the show closes.

Secondly, if you go to the Atrenta, Cadence or Springsoft booths you can get an “I LOVE DAC” badge. Each day somebody who is walking the show floor wearing one of the badges will be randomly given a new iPad (aka, but not by Apple, as iPad3). If you still have an “I LOVE DAC” badge from the previous 3 years then you can wear that one and still be eligible.

The “I LOVE DAC” page on the DAC website, where you can register, is here.

EDPS: 3D ICs, part II

Part I is here.

In the panel session at EDPS on 3D IC a number of major issues got highlighted (highlit?).

The first is the problem of known-good-die (KGD), which is what killed off the promising multi-chip-module approach, perhaps the earliest type of interposer. The KGD problem is that with a single die in a package it doesn’t make too much sense to invest a lot of money at wafer sort. If the process is yielding well, then identify the bad die cheaply and package up the rest. Some will fail final test due to bonding and other packaging issues, and some die weren’t good to begin with (so you are chucking out a bad die after having spent a bit too much on it). With a stack of just 4 die and a wafer sort that is 99% effective (only 1% of bad die get through), the stack only yields about 96% and the roughly 4% discarded do not just contain bad die: there are (almost) 3 good die and an expensive package too. Since these die are not going to be bonded out, they don’t automatically have bond pads for wafer sort to contact, and it is beyond the state of the art to put a probe on a microbump (and at 1gm of force on a 20um bump, that is enormous pressure), so preparing for wafer sort requires some thought.
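The stack arithmetic above can be reproduced with a short Python sketch. It assumes that each die passing wafer sort is independently good with probability 0.99, which is one reading of "99% effective"; the function names are illustrative only.

```python
def stack_yield(die_per_stack: int, p_good: float) -> float:
    """Probability that every die in the stack is good."""
    return p_good ** die_per_stack


def good_die_lost_per_bad_stack(die_per_stack: int, p_good: float) -> float:
    """Expected number of good die thrown away with each failing stack.

    E[good die | stack fails]
        = (E[good die] - E[good die in passing stacks]) / P(stack fails)
    """
    y = stack_yield(die_per_stack, p_good)
    expected_good = die_per_stack * p_good      # over all stacks
    good_in_passing = die_per_stack * y         # passing stacks are all good
    return (expected_good - good_in_passing) / (1.0 - y)


if __name__ == "__main__":
    n, p = 4, 0.99
    print(f"stack yield: {stack_yield(n, p):.2%}")
    print(f"good die lost per bad stack: {good_die_lost_per_bad_stack(n, p):.2f}")
```

Under that assumption the stack yield comes out just above 96%, close to the rough figure quoted above, and each scrapped stack takes almost three good die with it, which is the real cost of an escaped bad die.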

The next big problem is who takes responsibility for what and, in particular, who is responsible when a part fails. Everyone is terrified of the lawyers. The manufacturing might be bad, the wafer test may be inadequate, the microbumping assembly may be bad, the package may be bad and, generally, assigning responsibility is harder. It looks likely that there will end up being two manufacturers responsible: the foundry, which does the semiconductor manufacturing, the TSV manufacturing and (maybe) the microbumps; and the assembly house, or OSAT as we are now meant to call them (outsourced semiconductor assembly and test), which puts it all together and does final test.

The third big problem is thermal analysis. Not just the usual how hot does the chip get and how does that affect performance. But there are different thermal coefficients of expansion which can cause all sorts of mechanical failure of the connections in the stack. This was one of the biggest challenges in getting surface mount technology for PCBs to work reliably: the parts kept falling off the board due to the different reaction to thermal stresses. Not good if it was in your plane or car.

Philip Marcoux had a quote from the days of surface mount: “successful design and assembly of complex fine-pitch circuit boards is a team sport.” And 3D chips obviously are too. The team is at least:

- the device suppliers (maybe more than one for different die, maybe not)

- the interposer designer and supplier (if there is one)

- the assembler

- the material suppliers (different interconnects, different TSVs, different device thicknesses will need different materials, solder, epoxy…)

- an understanding pharmacist or beverage supplier (to alleviate stresses)

His prescription for EDA:

- develop a better understanding of the different types of TSV (W vs Cu; first/middle/last etc)

- coordinate with assembly equipment suppliers to create an acceptable file exchange for device registration and placement

- create databases of design guidelines to help define the selection of assembly processes, equipment and materials

- encourage and participate in the creation of standards

- develop suitable floorplanning tools for individual die

- develop 3D chip-to-chip planning tools

- provide thermal planning tools (chips in the middle get hot)

- provide cost modeling tools to address designer driven issues such as when to use 3D vs 2.5D interposer vs big chip

It is unclear to me whether these are all really the domain of EDA. Process cost modeling is its own domain and not one where EDA is well-connected. Individual semiconductor companies and assembly houses guard their cost models as tightly as their design data.

Plus one of the challenges with standards is when to develop them. Successful standards require that you already know how to do whatever is being standardized and as a result most successful standards start life as a de facto standard and then the known rough edges are filed off.

As always with EDA, one issue is how much money is to be made. EDA tools partially make money based on how valuable they are, but also largely by how many licenses large semiconductor companies need. In practice, the tools that make money either run for a long time (STA, P&R, DRC) or you sit in front of them all day (layout, some verification). Other tools (high level synthesis, bus register automation, floorplanning) suffer from what I call the “Intel only needs one copy” problem, that they don’t stimulate license demand in a natural way (although rarely in such an extreme way that Intel really only needs a single copy, of course).

Doing what others don’t do

Wally Rhines’ keynote at U2U, the Mentor users’ group meeting, was about Mentor’s strategy of focusing on what other people don’t do. This is partially a defensive approach, since Mentor has never had the financial firepower to have the luxury of focusing all their development on sustaining their products and then make acquisitions of startups to get new technology. Even when they have acquired startups, they have tended to be ones in which nobody else was very interested.

In his keynote at DAC in 2004, Wally pointed out that every segment basically grows fast as it gets adopted and then goes flat. This is despite the significant investment that is required to keep products up to date (for example, there has been no growth in the PCB market despite the enormous amount of analysis that has been added since that early market phase). Once there are no new users moving into a product segment then the revenue goes flat. Consequently all the growth in EDA has come from new segments. Back 8 years ago Wally predicted that the growth would come from DFM, system level design and analog-mixed signal. DFM has grown at 12% CAGR since then, ESL at 11%, formal verification at 12%. But mainstream EDA grew at just 1%.

So that raises the question of what next? Which are the next areas that Mentor sees as adding growth?

First, low power design at higher levels. Like so much in design, power suffers from the fact that you only have accurate data when the design is finished and you have the least opportunity to change it, whereas early in the design you lack good data but it is comparatively easy to influence it. Embedded software is increasingly an area that has a lot of effect on power and performance, but the environments for hardware design are just not optimized for embedded software. Mentor has put a lot of investment into Sourcery CodeBench to enable software development on top of virtual platforms, emulators, hardware and so on. To give an idea of just how different the scale is in embedded software versus IC design, there are 20,000 downloads per month.

Second, functional verification beyond RTL simulation. Most simulation time is spent simulating things that have already been simulated. By being more intelligent about directing constrained random simulation, Mentor is seeing reductions of 10 to 50 times in the amount of simulation required to achieve the same coverage. With server clock rates static and multicore only giving limited scalability, emulation is the only way to do full-chip verification on the largest designs and increasingly surrounding an emulator with software peripherals makes it available to dozens of designers to share.

Third, physical verification beyond DFM. Calibre’s PERC (programmable electrical rule checking) allows much more than simple design rules to be checked: power, ESD, electromigration, or whatever you program. 3D chips also require additional rule checking capability to ensure that bumps and TSVs align correctly on different die and so on.

Fourth, DFT beyond just compression. Integrating BIST with compression and driving compression up to 1000X. Moving beyond the stuck-at model and looking inside cells for all the possible shorts and opens which catches a lot more faulty parts that pass the basic scan test. 3D chips, again, require special approaches to test to get the vectors to the die that are not directly connected to the package.

Fifth, system design beyond PCB. This means everything from ESL and the Calypto deal, to chip-package-board co-design.

Mentor also has even more off the beaten track products. Wiring design for automotive and aerospace. Heat simulation. Thermal analysis of LEDs. Golf club design?

Well, something is working. Mentor have gone from having leading products in just 3 of Gary Smith EDA’s categories to 17 today, on a par with Synopsys and Cadence. And, of course, last year was Mentor’s first $1B year, making Mentor the #2 EDA company.

Cadence support for the Open NAND Flash Interface (ONFI) 3.0 controller and PHY IP solution + PCIe Controller IP opening the door for NVM Express support

The press release about ONFI 3.0 support was issued by Cadence at the beginning of this year. It was a good illustration of Denali’s, and then Cadence’s, long-term commitment to NAND Flash controller IP support. The ONFI 3 specification simplifies the design of high-performance computing platforms, such as solid state drives and enterprise storage solutions, and of consumer devices, such as tablets and smartphones, that integrate NAND Flash memory. The new specification defines speeds of up to 400 mega-transfers per second. In addition to the new ONFI 3 specification, the Cadence Flash and controller IP also support the Toggle 2.0 specification.

“NAND flash is very dramatically growing in the computing segment and is no longer just for storing songs, photos, and videos,” said Jim Handy, director at Objective Analysis. “The result is that the bulk of future NAND growth will consist of chips sporting high-speed interfaces. Cadence support of ONFI 3 and other high-speed interfaces is coming at the right time for designers of SSDs and other systems.”

If you look at the size of this IP segment, and compare its design start count with that of DDRn controller IP, it has so far been one order of magnitude smaller. Looking at the design wins made by Cadence in the IP market, you can see that the Denali products have generated 400+ design wins for DDRn memory controllers, whereas the Flash memory design wins are in the 50+ range. To be clear, we are talking about the Flash-based memory products used in:

- Data centers to support Cloud computing (high IOPS need)

- Mobile PC or Tablets to support “instant on” (SSD replacing HDD)

- NOT the eMMC and various flash cards

The latter market segment certainly generates a lot more IP sales, but at only a fraction of the cost of the IP license for a Flash controller managing NVM used in a data center or SSD. The Flash memory controller IP family from Cadence targets the high end of the market.

It’s also interesting to notice that Synopsys, which covers most of the interface protocol IP, including DDRn memory controllers where the company, like Cadence, enjoys good market share, is not supporting Flash memory controllers. You may argue that this market segment is pretty small, so why should Synopsys care about it? Simply because it could be the future of the storage market! If you look at storage, you probably think “SATA” and Hard Disk Drive (HDD)… All HDDs shipped for use inside a PC are SATA enabled, as are the very few SSDs integrated to replace HDDs in the Ultra Notebook market. That’s right. But, as a matter of fact, SATA, as the standalone protocol to support storage, has reached a limit. It is a technology limit, as SATA 3.0, based on a 6 Gbps PHY, will be the last SATA PHY.

We can guess that SATA, as a protocol stack, will survive, as some features like Native Command Queuing (NCQ) are unique to SATA and very efficient at optimizing storage access (whether HDD or SSD). But the PHY part is expected to be PCI Express based in the future, the protocol name becoming “SATA Express”, at least for the PC (desktop, enterprise, mobile) or media tablet segments, where one lane of PCIe gen-3 will offer about 1 GB/s of bandwidth, compared with about 0.6 GB/s (4.8 Gb/s after 8b/10b encoding) for SATA 3.0.

Still in the storage area, but Flash based, the current solution for applications needing high I/O operations per second (IOPS) is a NAND Flash memory controller integrated with an interface protocol, which could in theory be SATA 3.0, USB 3.0 or PCI Express, but which in practice is PCIe: for example, a x4 PCIe gen-2 link offering 20 Gb/s raw bandwidth, or 2 GB/s effective.
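The raw-versus-effective bandwidth figures for these links can be derived from the line rate, the lane count and the line encoding. The sketch below is a rough calculation that ignores protocol overhead above the encoding layer. The encodings (8b/10b for SATA 3.0 and PCIe gen-2, 128b/130b for PCIe gen-3) and the 8 GT/s gen-3 line rate are not stated in the text and are supplied here as assumptions.

```python
def effective_gb_per_s(line_rate_gbit: float, lanes: int,
                       payload_bits: int, coded_bits: int) -> float:
    """Payload bandwidth in GB/s after removing line-encoding overhead."""
    raw_gbit = line_rate_gbit * lanes
    return raw_gbit * payload_bits / coded_bits / 8  # 8 bits per byte


# (line rate per lane in Gb/s, lanes, payload bits, coded bits)
LINKS = {
    "SATA 3.0":      (6.0, 1, 8, 10),     # 8b/10b (assumed)
    "PCIe gen-3 x1": (8.0, 1, 128, 130),  # 128b/130b (assumed)
    "PCIe gen-2 x4": (5.0, 4, 8, 10),     # 4 x 5 Gb/s = 20 Gb/s raw
}

if __name__ == "__main__":
    for name, params in LINKS.items():
        print(f"{name:<14} {effective_gb_per_s(*params):.2f} GB/s effective")
```

With these assumptions a single PCIe gen-3 lane already outruns SATA 3.0, and the x4 gen-2 link lands on the 2 GB/s quoted above.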

Here, the emerging standard will be named NVM Express; it will formalize the solution already in use, and will probably define a roadmap to support the higher bandwidth needs associated with cloud computing development.

Using NAND Flash devices has a cost: accessing a specific memory location ends up degrading the device (at that specific location), especially for Multi-Level Cell (MLC) Flash. This effect is amplified when you use Flash devices manufactured in smaller technology nodes, and gets worse with higher-capacity devices, as they are built on the smallest nodes. In other words, the more you use an SSD, the greater the risk of an error. Cadence implements sophisticated, highly configurable error correction techniques to further enhance performance and deliver enterprise class reliability. Delivering advanced configurability, low-power capabilities and support for system boot from NAND, the Cadence solution is scalable from mobile applications to the data center. The IP is backward-compatible with existing ONFI and Toggle standards. The existing Cadence IP offering supports the ONFI 1, ONFI 2, Toggle 1 and Toggle 2 specifications, and also provides asynchronous device support. Cadence also offers supporting verification IP (VIP) and memory models to ensure successful implementation.

The move from SATA-based storage to SATA Express-compliant HDDs or NVM Express-compliant SSDs will certainly change the storage landscape, as well as the IP vendors’ positioning. Synopsys is well positioned in the SATA IP and PCI Express IP segments, whereas Cadence does not support SATA IP but does support NAND Flash and PCI Express controller IP. With the emergence of “SATA Express” and “NVM Express”, it will be a new deal for IP vendors, and an interesting one to monitor!

By Eric Esteve from IPNEST

Analog Automation – Needs Design Perspective

Recently I was researching the keynote speeches of isQED (International Society for Quality Electronic Design) Symposium 2012 and saw the very first, great presentation, “Taming the Challenges in Advanced Node Design” by Tom Beckley, Sr. VP at Cadence. I know Tom very well as I have worked with him and I admire his knowledge, authority and leadership in analog and mixed-signal domain. Inspired by his presentation, I became curious and further read his detailed speech put into blogs at EDA360 page. It was great pleasure going through the astounding collection of details and ideas there. I read it twice very minutely.

As custom IC, AMS and physical design have been my core expertise, it reminded me of one of my articles, “Need and Opportunity for Higher Analog Automation”, from early February. In that article my emphasis was on systematic variation and layout-dependent effects at lower nodes, which are primarily analog specific, depending on device parameters and their relative placement, thereby making it essential to automate the detection and correction of such effects early in the design cycle. It is heartening to see Cadence coming up with a Rapid Prototyping methodology to develop design building blocks called ‘modgens’, which account for layout-dependent effects, parasitics and new P&R rules at 20nm. The methodology even uses double patterning technology to increase the routing pitch.

It’s a great methodology which can address mega function generation, employing automatic detection of analog structures like current mirrors and differential pairs from the schematic and generating layout, followed by extraction and verification. Using these building blocks for higher-level design can serve the purpose, but not in all cases. Preserving the placement constraints to reconstruct the layout after any change is fine up to an ECO, but that cannot be employed for large changes. If we look at the problem from the design perspective, in today’s context, at 20nm, a typical analog IP block could be as big as 40,000 to 50,000 transistors. Knowing that analog design can be an ocean of secrets, all of that may not fit neatly into the scheme of rapidly prototyping building blocks into abstracts and then assembling them. Even if 75% to 80% of it fits into building-block-level automation, the remaining 20% (about 10,000 transistors) needs to be done by hand, which would be a substantial task considering the effects and complexities of each transistor at 20nm that we talked about. At 14nm, it is going to be tougher. This leaves us in the same place we were, with manual looping between circuit and layout.

From the design perspective, what is needed is a general approach to placing and routing the analog design with due attention to 20nm issues. In my article, I had also talked about the need for a general approach to analog automation based on an open standard analog constraint format which includes design constraints for symmetry, matching, shielding, placement, floorplanning, routing, clocking, timing, electrical behavior and so on. Of course, the abstraction approach solves the problem to a great extent, but for completeness of the design, it needs a general automation applicable to the whole design. Once that becomes available, it can take center stage.

Comments from design and EDA community are welcome. I would be happy to know if there are more new ideas for analog automation.

By Pawan Kumar Fangaria

EDA/Semiconductor professional and Business consultant

Email: Pawan_fangaria@yahoo.com

EDPS: 3D ICs, part I

The second day (more like a half-day) of EDPS was devoted to 3D ICs. There was a lot of information, too much to summarize in a few hundred words. The keynote was by Riko Radojcic of Qualcomm, who has been a sort of one-man-band attempting to drive the EDA and manufacturing industries towards 3D. Of course it helps if you don’t just have a sharp arrow but the wood of Qualcomm behind it. Curiously, though, Qualcomm themselves have been cautious in actually using 3D IC technology. Possibly they have done some test chips but I don’t know of any parts that they have in production. Other presentations were by Altera, Mentor and Cadence, plus a panel discussion.

Those who have been in hi-tech for years know about the hype cycle, I believe originated by Dataquest. Gartner, Dataquest’s heir, reckons TSV are now on the slope of enlightenment where things become real. At the top of the hype cycle are nanotube electronics, wireless power and Occam processes (whatever they are).

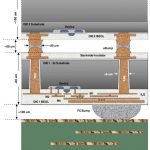

There are a number of varieties of 3D IC with different challenges for both EDA and manufacturing. If you include package-on-package (POP) then you probably have a 3D IC in your pocket. Almost all smartphone SoCs, including Apple’s A4/A5 series, have memory and logic in the same package. POP does not require Thru-Silicon-Vias (TSVs) since it is all wire-bonded inside the package.

One thing I learned is that while everyone talks about copper TSVs, the small TSVs that are so far in use are actually tungsten. Nobody knows how to make copper TSVs that small. There is actually a reasonable possibility that we will end up with two types of TSV: tungsten for signal, small and tightly spaced, and copper for power supply and perhaps clock, much bigger and with higher current capacity.

One big development earlier this year was the finalization of the JEDEC Wide I/O standard, which allows memory to be stacked on top of logic, so-called memory-on-logic (MOL). With much shorter distances, lower capacitance and a 512-bit-wide interface, this allows a much higher-bandwidth, lower-power interface between the processor and memory. The memory is also designed to be stacked, with more than one memory die. In fact all the memory suppliers have already announced various forms of memory stacks.

Interposer based designs, so called 2.5D, have the big advantage of not requiring TSV except through the interposer itself (unless there is a memory stack). As everyone who watches 3D ICs probably knows, Xilinx is shipping a high-end FPGA using silicon interposer technology, perhaps the only high(ish) volume product in production.

All camera chips are also 3D of some sort, with the CCD device on top of the image processing chip. There was an inconclusive debate as to how many of these use TSVs and how many use another technology whereby the signals are brought around the edge of the thinned die rather than going through it.

Thermal is going to be a big issue. Mobile chips are low power but they are not that big, so the power density and thus many of the thermal problems are the same as for server CPUs. IBM is looking at liquid cooling through microchannels. That has its own set of challenges and may or may not work out, but for sure that is not going to be the solution in your phone.

The motivation for 3D is a mixture of economic and what has come to be called “More than Moore”. We can no longer continue to scale CMOS the way we have been doing, and we are falling off the 30% per year cost reduction treadmill. It is still an open question whether we have a post optical lithographic process that we can manufacture economically, EUV being the most promising with E-beam the only other serious alternative. High-density TSV and 3D stacking offers an alternative way to keep Moore’s law on track (think of Moore’s law as being about transistors per volume, just that we only used one die for the first 50 years). It is the first of several disruptive technology changes that substitute for just scaling our lithography.

But, as with any disruptive technology, you don’t get it for free. It is disruptive. You need new tools, a new supplier ecosystem. There are new sorts of interactions you need to worry about such as where TSVs can be placed, how they affect silicon through stress, managing electrical and thermal coupling in the stack and…and…

The roadmap, at the highest level, goes through POP (in production today), to wide-I/O MOL and eventually logic-on-logic (LOL).