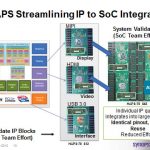

The most exciting EDA + Semi IP company that I ever worked at was Silicon Compilers in the 1980s, because it allowed you to start with a concept and then implement it down to physical layout using a library of parameterized IP. The big problem was verifying that all of the IP combinations were in fact correct. Fast forward to today and our industry still faces the same dilemma: how do you assemble a new SoC designed with hard and soft IP, and know that it will be functionally and physically correct?

They say that it takes a village to raise a child, so then in our SoC world it takes collaboration between Foundry, IP providers and EDA vendors to raise a product. One such collaboration is between:

- TSMC with their Soft IP alliance program

- An Atrenta IP Kit

- Sonics, which has developed Soft IP using Atrenta and TSMC

These three companies are hosting a webinar on Tuesday, March 5, 2013 at 9 AM Pacific time to openly discuss how they work together to ensure that you can design SoCs with Soft IP and not get burned.

Agenda

- Moderator opening remarks (Daniel Nenni, SemiWiki) (5 min)

- The TSMC Soft IP Alliance Program: structure, goals and results (Dan Kochpatcharin, TSMC) (10 min)

- Implementing the program with the Atrenta IP Kit (Mike Gianfagna, Atrenta) (10 min)

- Practical results of program participation (John Bainbridge, Sonics) (10 min)

- Questions from the audience (10 min)

Speakers

Daniel Nenni

Founder, SemiWiki

Daniel has worked in Silicon Valley for the past 28 years with computer manufacturers, electronic design automation software, and semiconductor intellectual property companies. Currently Daniel is a Strategic Foundry Relationship Expert for companies wishing to partner with TSMC, UMC, SMIC, Global Foundries, and their top customers. Daniel’s latest passion is the Semiconductor Wiki Project (www.SemiWiki.com).

John Bainbridge

Staff Technologist, CTO office, Sonics, Inc.

John joined Sonics in 2010, working on System IP, leveraging his expertise in the efficient implementation of system architecture. Prior to that John spent 7 years as a founder and the Chief Technology Officer at Silistix commercializing NoC architectures based upon a breakthrough synthesis technology that generated self-timed on-chip interconnect networks. Prior to founding Silistix, John was a research fellow in the Department of Computer Science at the University of Manchester, UK where he received his PhD in 2000 for work on Asynchronous System-on-Chip Interconnect.

Mike Gianfagna

Vice President, Corporate Marketing, Atrenta

Mike Gianfagna’s career spans three decades in semiconductor and EDA. Most recently, Mike was vice president of Design Business at Brion Technologies, an ASML company. Prior to that, he was president and CEO of Aprio Technologies, a venture-funded design-for-manufacturability company. Prior to Aprio, Mike was vice president of marketing for eSilicon Corporation, a leading custom chip provider. Mike has also held senior executive positions at Cadence Design Systems and Zycad Corporation. His career began at RCA Solid State, where he was part of the team that launched the company’s ASIC business in the early 1980s. He has also held senior management positions at General Electric and Harris Semiconductor (now Intersil). Mike holds a BS/EE from New York University and an MS/EE from Rutgers University.

Dan Kochpatcharin

Deputy Director IP Portfolio Marketing, TSMC

Dan is responsible for overall IP marketing as well as managing the company’s IP Alliance partner program.

Prior to joining TSMC, Dan spent more than 10 years at Chartered Semiconductor where he held a number of management positions including Director of Platform Alliance, Director of eBusiness, Director of Design Services, and Director of Americas Marketing. He has also worked at Aspec Technology and LSI Logic, where he managed various engineering functions.

Dan holds a Bachelor of Science degree in electrical engineering from UC Santa Barbara, a Master of Science in computer engineering, and an MBA from Santa Clara University.

Registration

Sign up here.