Since the beginning of time, people on Earth have peered into the night sky, pondering if they were alone in the universe. Today, we have a large group of scientists that are working to answer that question. The precision required for their search often depends on the performance of a key piece of technology – the analog-to-digital converter (ADC).


New Protocol (NB-IoT) Requires New DSP IP and New Business Model

If we agree on the definition of IoT as a distributed set of services based on sensing, sharing and controlling through new nodes, we realize that these nodes are a big hardware opportunity. Chip makers and IP vendors have to create innovative SoCs that deliver high performance at low cost and low energy. Moreover, the new systems will have to integrate multiple sensors and stay in Always Alert mode.

The problem comes when you realize that no processor core is ideally suited for all three of these functions: sensing, computation and communication.

Sensing ranges from voice/face trigger to biometric monitoring and from indoor navigation to sensor fusion, and probably much more, given the creative power of innovators. Computation is linked with math: digital signal processing, bit manipulation, floating point, encryption, security, etc., while communication is based on protocol standards like NB-IoT, Wi-Fi HaLow, GNSS, BLE or Zigbee.

Because every IoT application is different and because the above list is pretty long, no single processor core is ideally suited for all three functions: sensing, computing and communicating. Cadence, and more precisely the Tensilica team developing the highly configurable DSP IP core, brainstormed and realized that cost and ease of use are driving the desire for a single core in IoT SoCs. The next step was to develop the new Fusion F1 DSP core, able to compute and to support sensing and communication protocols.

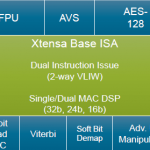

The Fusion F1 DSP core is pictured above; the green box is the base core. Because Tensilica wants to address various applications, in IoT and beyond, flexibility has driven the architecture definition. The 7 blue boxes (FPU, AVS, AES-128, 16-bit Quad MAC, Viterbi, Soft Bit Demap and Bit Manipulation) are offered as pre-verified, proven options. A chip maker can tailor the DSP core definition to the real needs of the application, and minimize DSP area and power consumption.

This flexibility is added to the natural DSP flexibility, as you can use the same core to support the communication protocol (the modem) when active, then switch to support sensor fusion when needed. This strategy is also good for power consumption optimization, which is key for this type of application.

This Fusion DSP can target technology nodes from 55 nm, 40 nm and 28 nm to 22 nm FD-SOI (all of which sound good for IoT applications), and obviously smaller nodes when designing to support very complex platforms.

If you take a look at the above picture, it’s clear that the Fusion F1 DSP is architected to efficiently support the modem function in wireless communication, thanks to the selection of Advanced Bit Ops, Viterbi or Soft Bit Demap, but also sensor fusion and audio/voice/speech. When you look at the end products (wearable, smart home or automotive), you can guess that the majority of these applications will integrate one or more wireless communication protocols.

This is a good way to introduce the very interesting partnership Cadence has built with Commsolid, which provides ultra-low power solutions for standardized wide-area networks (WAN) like Cat-NB1 (NB-IoT) and, in the future, 5G IoT. The NB-IoT protocol should see wide adoption in applications requiring ultra-low power but low modem bandwidth (< 100 kbps), which fits well with the specifications of wearables and most IoT systems.

Commsolid has built an ultra-low power baseband IP, the CSN130, designed for the new NB-IoT standard specified in 3GPP Release 13. The CSN130 is a complete pre-certified NB-IoT baseband, consisting of hardware and software. In fact, the physical layer implementation (the modem) targets the Fusion F1 DSP, which explains why this partnership is beneficial for a customer needing fast time to market (TTM) at no risk: the Commsolid NB-IoT baseband IP is pre-certified using the Tensilica DSP.

As such, the Cadence/Commsolid partnership is a very good solution for developing an application that supports the NB-IoT wireless protocol, but the story doesn’t stop here, as it extends to the business model. Commsolid has the right to license the complete solution, made of the CSN130 IP and the Fusion F1 DSP from Cadence, to a customer asking for business simplification (a single license agreement makes the project manager’s life easier as far as legal and business negotiations are concerned).

The adoption of an emerging IoT technology and an innovative wireless protocol like NB-IoT, together with a simplified business model, should make the solution more attractive.

Last but not least, the Fusion F1 DSP core can be both power conscious and performant, with 4.61 CoreMark/MHz, a very good figure in this product category.

More information about the Fusion DSPs is available at: https://ip.cadence.com/ipportfolio/tensilica-ip/fusion

By Eric Esteve from IPnest

These design wins with ForteMedia, Realtek and CyWeeMotion (sensor fusion) demonstrate that the Fusion F1 is flexible and appropriate for voice processing solutions and IoT modems:

Realtek

What You Don’t Know about Parasitic Extraction for IC Design

Out of college, my first job was doing transistor-level circuit design at Intel, and to get accurate SPICE netlists for simulation we had to manually count the squares of parasitic interconnect on the diffusion, polysilicon and metal layers. Talk about a burden and a chance for mistakes. I’m so thankful that EDA companies have come to our rescue and automated the extraction of parasitics, which directly affect the quality of any digital or analog circuit in terms of speed, current, power, IR drop and even reliability. If you simulate your circuits without parasitic values, the results are going to be quite a bit different from silicon, so it really makes sense to run SPICE simulations with extracted parasitic values in order to predict how silicon will behave.
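The square counting mentioned above follows from the sheet-resistance model: a wire segment's resistance is its sheet resistance multiplied by the number of squares (length divided by width). Here is a minimal Python sketch of that arithmetic. The layer names and ohms-per-square values are hypothetical, chosen only for illustration, and are not taken from any real process or PDK.

```python
# Hypothetical sheet resistances in ohms per square; illustrative values only,
# not taken from any real PDK.
SHEET_RES_OHMS_PER_SQ = {"metal1": 0.08, "poly": 10.0, "diffusion": 50.0}


def wire_resistance(layer: str, length_um: float, width_um: float) -> float:
    """Resistance of a straight wire segment: R = R_sheet * (length / width)."""
    if width_um <= 0:
        raise ValueError("width must be positive")
    squares = length_um / width_um  # the number of squares counted by hand
    return SHEET_RES_OHMS_PER_SQ[layer] * squares


# A 100 um long, 0.5 um wide poly run is 200 squares.
r_poly = wire_resistance("poly", 100.0, 0.5)
print(f"poly segment: {r_poly:.1f} ohms")
```

An extraction tool does far more than this (corners, vias, coupling capacitance), but the per-segment resistance bookkeeping is the part that used to be done by hand.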

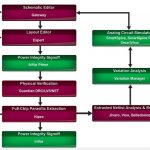

Silvaco is an EDA vendor with a growing list of tools for IC design as shown in this tool flow diagram:

To learn more about parasitic extraction from Silvaco you should consider attending their webinar on March 23rd from 10AM – 11AM PST. The three tools shown under Extracted Netlist Analysis & Reduction will also be discussed in the webinar. The presenter for the webinar is Jean-Pierre Goujon, an AE Manager based in France, and he previously worked at Edxact, a company acquired by Silvaco in June 2016.

If you do IC design with SPICE, or support CAD tools in a flow and work with PDKs, then this webinar is sure to answer your questions on what Silvaco has to offer. Registration for the webinar is here.

Parasitic extraction is a mandatory step for physical design sign off, and at advanced nodes, parasitics substantially impact the behavior of circuit design. Any analysis must consider the interconnect parasitic effects to produce meaningful and realistic results. In this webinar, we will review a flow that can qualitatively and quantitatively compare two extraction flavors for the same design. We will then present an in-depth exploration of exactly where the differences are coming from. At the end of this webinar, switching between different layout parasitic extraction tools, calibrating settings to a new technology node, qualifying PDK updates etc will no longer be a bottleneck for CAD teams.

What attendees will learn:

- Powerful and accurate methods to qualify and quantify different parasitic extraction methods

- How to recognize traps in the flow and possible work-arounds

- How to explore parasitics and analyze what is different and where
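To give a feel for what quantitatively comparing two extractions of the same design could look like, here is a small Python sketch that flags nets whose total capacitance differs by more than a tolerance between two runs. It is only an illustration of the idea, not the Silvaco flow: the net names, values, the 5% tolerance and the `compare_extractions` helper are all made up.

```python
# Hypothetical per-net total capacitance (fF) from two extraction runs of the
# same layout; the net names and values are invented for illustration.
extraction_a = {"clk": 12.4, "data0": 3.10, "vref": 0.95}
extraction_b = {"clk": 13.1, "data0": 3.05, "vref": 1.20}


def compare_extractions(ref, other, tolerance=0.05):
    """Return (net, relative difference) pairs for nets that differ by more
    than `tolerance`, worst first. Nets missing from `other` are skipped."""
    flagged = []
    for net, ref_val in ref.items():
        if net not in other or ref_val == 0:
            continue
        rel = (other[net] - ref_val) / ref_val
        if abs(rel) > tolerance:
            flagged.append((net, rel))
    return sorted(flagged, key=lambda item: abs(item[1]), reverse=True)


outliers = compare_extractions(extraction_a, extraction_b)
for net, rel in outliers:
    print(f"{net}: {rel:+.1%}")
```

Sorting by the size of the mismatch puts the nets most worth investigating at the top, which is the "what is different and where" question in its simplest form.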

PRESENTER:

Mr. Jean-Pierre Goujon is Application Manager for Silvaco France. He is responsible for customer technical support for EDA products, with a specific interest in parasitic analysis and reduction products. Prior to this position, he was AE manager for Edxact for 12 years and held various AE responsibilities at Cadence, Simplex and Snaketech, mostly in the field of parasitic extraction.

Mr. Goujon holds a BSc in EEE from Robert Gordon University, Aberdeen, UK and an MS in EEE from Ecole Supérieure de Chimie, Physique, Electronique de Lyon, France.


Power and Performance Optimization for Embedded FPGAs

Last month, I made a “no-brainer” forecast that 2017 would be the year in which embedded FPGA (eFPGA) IP would emerge as a key differentiator for new SoC designs (link to the earlier article here).

The fusion of several technical and market factors is motivating design teams to incorporate programmable logic functionality into their feature set:

- the NRE and qualification cost of a new SoC design is increasing significantly at newer process nodes; a single part number that can adapt to multiple applications is a significant cost savings

- many algorithms can be more efficiently implemented in logic than using code executing on a processor core; the flexibility of reconfiguring the algorithm logic to specific market requirements enables broader applicability

- new (on-chip and external) bus interface specifications are emerging, yet final ratification is pending — a programmable logic IP block affords adaptability

Yet, field programmable logic implementations are often associated with higher power dissipation than cell-based logic designs. And, many of the applications for eFPGA technology are power-sensitive.

To better understand how eFPGA IP will address power optimization, and how that compares to logic library design, I reached out to Cheng Wang, Senior VP of Engineering at Flex Logix Technologies Inc., for his insights.

As with conventional logic designs, Cheng indicated that addressing power/performance for eFPGA IP is a multi-faceted task. Architecturally, the programmable logic fabric needs to define the look-up table (LUT) size that best fits the target applications. From a circuit perspective, the LUT array implementation needs to leverage all the available foundry technology features, in a manner similar to standard cell library offerings, but with specific consideration for eFPGA circuits.

Cheng highlighted, “Customers are seeking the low-power and ultra-low power foundry offerings, such as TSMC’s 40LP/ULP. These processes offer a wide supply voltage range and a variety of device threshold voltage variants, such as SVT, HVT, and UHVT (in 40ULP). For an eFPGA block we offer customers the flexibility in device selection for their application, to which we have incorporated additional power optimizations. For example, the eFPGA configuration storage bits always utilize low-leakage devices, which corresponds to ~30-40% of the block area. We have included support for a local body-bias voltage. And, perhaps most significantly, the eFPGA IP block supports full power-gated operation.”

The figure below depicts the power gating microarchitecture.

The LUT cell includes state-retention when power gated, also utilizing low-leakage devices to minimize quiescent dissipation. A single block input pin defines the sleep state — the necessary internal turn-on/turn-off signal ramp to control current transients is engineered within the block itself.

In short, power optimization for an eFPGA IP block involves many of the same trade-offs as a cell-based design (e.g., supply and bias conditions, device threshold selection). Some library cell-based logic implementation flows support biasing and power gating, with additional verification and electrical analysis steps. The eFPGA IP includes the additional design engineering within the LUT and configuration circuits for these power optimizations, which are available across the range of eFPGA array sizes.

As an aside, Cheng spoke briefly about the verification of these power optimization features. Shuttle testsites are used to confirm and qualify the silicon eFPGA array implementations. Qualification is performed over the supply voltage ranges supported by the foundry (e.g., 0.6V – 1.0V for TSMC’s 16FFC).

Finally, we chatted about a different class of eFPGA customers, for which performance, not power dissipation, is the key requirement. Cheng characterized this market segment as, “We have customers who are strictly seeking the fastest implementation available, for applications such as user-defined networking. The configurability of an eFPGA array enables them to incorporate various packet-processing algorithms. Architecturally, for these designs, the LUT was expanded from 4 to 6 logic inputs; ideally, these algorithms can thus be realized in fewer logic stages. In several design examples we’ve reviewed with customers, more than 90% of the LUTs do utilize more than 4 logic inputs. Correspondingly, the full eFPGA array architecture needed to support a larger number of block I/Os.”

Cheng continued, “From a technology and circuits perspective, these customers are designing in FinFET technologies, such as TSMC’s 16FF+/FFC. The circuit drivers in the 16nm design are larger. For performance, we have implemented a clock distribution mesh (as opposed to a tree), which is expandable across the range of eFPGA array sizes and aspect ratios. The device selection is available in SVT and LVT variants. As an IP provider, we work with the foundry to utilize the ‘converged PDK’ metal stack, which the foundry’s customers are adopting for the lower metal levels. Within this metal stack definition, our LUT circuits and switch fabric optimize metal linewidth/space dimensions for performance.” (The Flex Logix EFLX-100 utilizes metals M1-M5, the EFLX-2.5K utilizes M1-M6.)

For these performance-driven customers, the tradeoff in area, performance, and power in the eFPGA design suggests a low value for the power gating and body biasing features included in the older process node implementations.
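The move from 4-input to 6-input LUTs that Cheng describes can be illustrated with a toy model. A k-input LUT is simply a truth table with 2^k stored bits, and a tree of k-input LUTs of depth d can depend on at most k^d signals, which gives a lower bound on the number of logic stages. The Python sketch below is a generic illustration under those assumptions; it does not describe the Flex Logix circuits, and all function names are hypothetical.

```python
def lut_config_bits(k: int) -> int:
    """A k-input LUT is a truth table with 2**k stored bits."""
    return 2 ** k


def eval_lut(truth_table, inputs):
    """Look up the output bit; inputs[0] is the least significant address bit."""
    address = sum(bit << i for i, bit in enumerate(inputs))
    return truth_table[address]


def min_lut_levels(fan_in: int, k: int) -> int:
    """Lower bound on logic stages: a depth-d tree of k-input LUTs can
    depend on at most k**d signals."""
    levels, reach = 1, k
    while reach < fan_in:
        reach *= k
        levels += 1
    return levels


# A 6-input AND in a single 6-LUT: only the all-ones address stores a 1.
and6 = [0] * (lut_config_bits(6) - 1) + [1]
print(eval_lut(and6, [1, 1, 1, 1, 1, 1]))
print(min_lut_levels(6, 4), min_lut_levels(6, 6))
```

A function of six signals needs at least two stages of 4-input LUTs but fits in one 6-input LUT, at the cost of 64 configuration bits per LUT instead of 16.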

I learned that eFPGA array design is both similar to and, in many ways, different from cell library-based logic design when considering power and performance target optimizations. The synthesis of RTL functionality into the target logic offering is similar, to be sure. And, foundry process technology device offerings are leveraged in the logic circuits. Yet, the eFPGA offering from Flex Logix is more than a logic block with programmable functionality: it has been engineered to integrate specific power/performance features that customers expect from a complex IP offering.

For more information on the power/performance capabilities of the eFPGA technology from Flex Logix, here are a couple of links as starting points: TSMC 40LP/ULP offering, TSMC 16FF+/FFC offering

-chipguy

Zero Power Sensing

We’ve become pretty good at reducing power in IoT edge devices, to the point that some are expected to run for up to 10 years on a single battery charge. But what if you wanted to go lower still or if, perhaps, your design can’t push power down to a level that would meet that goal? One area in systems where it can be challenging to further reduce power is in sensors, since these need some level of standby current to be able to sense and wake other circuitry.

Some of the lowest standby currents I have seen are ~0.3 µA, which is impressive (one case I found is able to support a sensor for up to 20 years on a coin cell battery), but maybe not good enough in some applications where you might need to support an array of sensors; even a 3×3 array would reduce that inspiring performance to just over 2 years, which may not be adequate for an infrequent service application.
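The "just over 2 years" figure is simple scaling. As a rough sketch, assuming standby current is the only drain on the battery and that it grows linearly with the number of sensors (both simplifications), the arithmetic looks like this in Python:

```python
def array_battery_life_years(single_sensor_years: float, n_sensors: int) -> float:
    """Battery life when n identical sensors share one cell, assuming standby
    current is the only drain and scales linearly with sensor count."""
    if n_sensors < 1:
        raise ValueError("need at least one sensor")
    return single_sensor_years / n_sensors


# One sensor lasts 20 years; a 3x3 array draws nine times the current.
life = array_battery_life_years(20.0, 3 * 3)
print(f"{life:.1f} years")
```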

A team at the University of Bristol in the UK have improved on this by reducing standby current to zero. They have built a voltage detector IC, called UB20M, which can use the intrinsic power generated by the sensor (for certain classes of sensor) to turn on and enable the rest of the system. They mention wireless antennas, infrared diodes, piezoelectric accelerometers, and other voltage-generating sensors as compatible with this device. The sensor must generate a ~650 mV signal, but only a few picowatts of power are needed to activate the UB20M. The power switch following the UB20M (to turn on power for the rest of the system) will naturally have some level of leakage (they mention 100 pA in their test cases), which could perhaps be further optimized in commercial applications but is still 3 orders of magnitude better than commercial alternatives today.
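As a sanity check on the "3 orders of magnitude" claim, the short Python sketch below compares the 100 pA switch leakage against the ~0.3 µA standby current quoted earlier. Treating that 0.3 µA figure as the commercial baseline is an assumption here; the source does not say which alternative the comparison uses.

```python
import math

standby_current_a = 0.3e-6   # ~0.3 uA, assumed here to be the commercial baseline
switch_leakage_a = 100e-12   # 100 pA, the leakage the Bristol team mentions

ratio = standby_current_a / switch_leakage_a
orders = math.log10(ratio)
print(f"ratio ~{ratio:.0f}x, about {orders:.1f} orders of magnitude")
```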

So that array of sensors could become a negligible factor in the battery life of the parent IoT device. Other factors, probably leakage, would dominate. Not bad. The team is understandably cagey about how the device works since the design is patent pending in the UK. They are offering samples for testing. You can read more about the UB20M HERE.

"Ten-hut!" Attending the Signal Integrity Bootcamp

The engineering team for the design and analysis of a complex system brings together a diverse set of skills. With the increasing emphasis on both high-speed interface design and multi-domain power management, a critical constituent of the team is the group of signal integrity (SI) and power integrity (PI) engineers.

The training of SI/PI engineers requires both breadth and depth of understanding of:

- electromagnetic fields

- transmission line theory

- current loop analysis

- RLCG impedance modeling

- decomposition of signal transmission into scattering parameter-based analysis

- frequency-dependent loss calculation

- stripline and microstrip interconnect topologies

- printed circuit board manufacture (including FR-4 glass weave tolerances and line roughness)

- PCB stack-ups, via barrels and antipad design, and

- the cause of resonant impedance in a set of PCB power planes

In short, the SI/PI engineer must be well-versed in a multitude of technical disciplines.

In recognition of the need to help new SI/PI engineers gain additional experience, Mentor Graphics recently sponsored a full-day intensive SI Bootcamp at their Fremont, CA education facility. The day was filled with a mix of lectures, labs and, in the teaching style of Socrates, numerous technical questions posed to the attendees. The instructor was the “Jedi Knight” of SI/PI practical and theoretical education, Eric Bogatin.

Eric began with a unique perspective, to put the audience in the correct mindset. “You need to be the signal — consider the instantaneous impedance, voltage, and current at each step down an interconnect from a driver’s transient. Consider what occurs at each point where the impedance seen by the instantaneous signal changes, and what reflections must occur. And, consider the propagation delay associated with each signal and each reflected transient.”
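What happens "at each point where the impedance changes" is captured by the standard reflection coefficient for a voltage wave crossing from one impedance to another. Here is a minimal Python sketch; the 50-ohm and 65-ohm values are hypothetical examples, not figures from the bootcamp.

```python
def reflection_coefficient(z_from: float, z_to: float) -> float:
    """Fraction of the incident voltage reflected where the instantaneous
    impedance changes from z_from to z_to: (z_to - z_from) / (z_to + z_from)."""
    return (z_to - z_from) / (z_to + z_from)


def transmission_coefficient(z_from: float, z_to: float) -> float:
    """Fraction of the incident voltage that continues on: 1 + reflection."""
    return 1.0 + reflection_coefficient(z_from, z_to)


# Hypothetical discontinuity: a 50-ohm trace entering a 65-ohm segment.
rho = reflection_coefficient(50.0, 65.0)
tau = transmission_coefficient(50.0, 65.0)
print(f"reflected: {rho:.3f}, transmitted: {tau:.3f}")
```

A matched interface reflects nothing, a short circuit reflects the full wave inverted, and everything in between is what the signal "sees" as it walks down the interconnect.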

The attendees were encouraged to use the software utilities available in Mentor’s HyperLynx platform to analyze how impedance is dependent upon interconnect topology and PCB materials, to optimize impedance and minimize reflections.

The class was also encouraged to explore the time-based simulation superposition of instantaneous transients, continuing with the “be the signal” theme.

The next topic was to explore a complete model of an interconnect lane, with specific attention on the driver/package parasitics. Labs were included to allow the SI engineer to evaluate the high-frequency content of signal rise/fall times seen at the receiver, and how the composite losses in the model impact that transient. HyperLynx can simulate a pseudo-random bit sequence (PRBS) serial stream to plot a superimposed signal arrival at the receiver, commonly referred to as the eye diagram.
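The PRBS stimulus behind an eye diagram comes from a small linear-feedback shift register. The Python sketch below generates PRBS7 (polynomial x^7 + x^6 + 1). Choosing PRBS7 is an assumption made for illustration, since the text does not say which pattern length was used in the labs, and the code is independent of HyperLynx.

```python
def prbs7(n_bits: int, seed: int = 0x02):
    """Generate n_bits of a PRBS7 sequence (polynomial x^7 + x^6 + 1).
    The sequence repeats every 2**7 - 1 = 127 bits."""
    state = seed & 0x7F
    if state == 0:
        raise ValueError("seed must be non-zero in its low 7 bits")
    bits = []
    for _ in range(n_bits):
        # Feedback is the XOR of the two taps (bit 6 and bit 5 of the state).
        new_bit = ((state >> 6) ^ (state >> 5)) & 1
        state = ((state << 1) | new_bit) & 0x7F
        bits.append(new_bit)
    return bits


pattern = prbs7(254)
print("".join(str(b) for b in pattern[:32]))
```

Overlaying the received waveform for every unit interval of such a pattern, after it has passed through the lossy channel model, is what produces the eye.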

A brief review of crosstalk analysis was presented, with insights into how crosstalk transients from an aggressor propagate to both the far-end (FEXT) and near-end (NEXT) of the quiet signal. The “snowball effect” of FEXT along the coupled length of the signal traces was emphasized.

The next topic was the representation of insertion losses in the system using scattering parameter models, including the familiar S-parameter matrix, where the S21 element is the primary representation for the attenuation of V_in when observed at V_out.
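For readers new to S-parameters, the magnitude of S21 converts to insertion loss in dB with the usual 20·log10 relation for voltage ratios. A small Python sketch follows; the |S21| value of 0.5 is a made-up example.

```python
import math


def insertion_loss_db(s21_magnitude: float) -> float:
    """Insertion loss in dB for a linear |S21| (the ratio |V_out / V_in| in a
    matched system). A larger number means more attenuation."""
    if s21_magnitude <= 0:
        raise ValueError("|S21| must be positive")
    return -20.0 * math.log10(s21_magnitude)


# Hypothetical channel that delivers half the input voltage at some frequency.
loss = insertion_loss_db(0.5)
print(f"{loss:.2f} dB")
```

In practice S21 is a complex number per frequency point, so a real analysis applies this to the magnitude across the whole frequency sweep.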

Eric shifted gears to discuss the characteristics of a differential signaling interface, from the differential driver’s IBIS model to the receiver. The presentation focused on the perspective of differential mode and common mode analysis, rather than as a pair of separate signals. In this manner, it is easier to understand how energy is converted from differential to common mode, and what differential/common termination strategies are the most effective. Eric challenged the bootcamp cadets to “think about what trace and via design decisions impact the differential impedance, and what should be the impact of topology optimizations, before you simulate — tools should be used to confirm your intuition, not simply to submit iterative testcases.”
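The differential-mode and common-mode view of a pair can be sketched in a few lines of Python. The differential signal is the difference of the two legs and the common signal is their average. The sample waveforms below are hypothetical and only show how skew between the legs converts differential energy into a common-mode disturbance.

```python
def to_mixed_mode(v_p, v_n):
    """Convert the two single-ended legs of a pair into differential and
    common-mode waveforms, sample by sample."""
    differential = [p - n for p, n in zip(v_p, v_n)]
    common = [(p + n) / 2.0 for p, n in zip(v_p, v_n)]
    return differential, common


# Hypothetical samples: the positive leg rises at the third sample.
v_p = [0.0, 0.0, 1.0, 1.0]
v_n_ideal = [1.0, 1.0, 0.0, 0.0]    # falls at the same instant
v_n_skewed = [1.0, 1.0, 1.0, 0.0]   # falls one sample late

_, common_ideal = to_mixed_mode(v_p, v_n_ideal)
_, common_skewed = to_mixed_mode(v_p, v_n_skewed)
print(common_ideal)
print(common_skewed)
```

With perfectly complementary edges the common-mode voltage stays flat; a late edge on one leg shows up as a common-mode bump during the skew interval.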

The final topic focused on frequency-dependent power integrity analysis: how the selection and placement of signal and power planes in the stackup (and multi-layer signal vias through anti-pads in power planes) impact the loop currents of the instantaneous signal. An effective visualization for power integrity analysis is the power impedance plot between planes, highlighting the capacitive, inductive, and resonant behavior at different frequency intervals. Eric reminded everyone that decoupling capacitors have their own mounting inductance that needs to be included in a composite model of the power plane cavity and decaps. Via-to-via crosstalk analysis requires a co-simulation of the SI/PI model.
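The point about mounting inductance is easy to see with a series R-L-C model of a single decoupling capacitor: below its self-resonant frequency it looks capacitive, above it the inductance takes over. The Python sketch below uses hypothetical component values (100 nF, 1 nH of combined parasitic and mounting inductance, 20 milliohms of series resistance) and does not model the plane cavity itself.

```python
import math


def decap_impedance_ohms(freq_hz, c_farads, l_henries, esr_ohms):
    """|Z| of a series R-L-C model of a mounted decoupling capacitor."""
    omega = 2.0 * math.pi * freq_hz
    reactance = omega * l_henries - 1.0 / (omega * c_farads)
    return math.hypot(esr_ohms, reactance)


def self_resonant_freq_hz(c_farads, l_henries):
    """Frequency where the capacitive and inductive reactances cancel."""
    return 1.0 / (2.0 * math.pi * math.sqrt(l_henries * c_farads))


# Hypothetical part: 100 nF, 1 nH (package plus mounting), 20 milliohm ESR.
C, L, ESR = 100e-9, 1e-9, 0.02
f0 = self_resonant_freq_hz(C, L)
for f in (f0 / 10, f0, f0 * 10):
    print(f"{f / 1e6:8.2f} MHz: {decap_impedance_ohms(f, C, L, ESR):.3f} ohms")
```

The impedance bottoms out at the series resistance near resonance and climbs on both sides, which is the V-shaped curve seen on a power impedance plot.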

At the end of the day, the attendees at the bootcamp gave Eric a hearty round of applause. It was clear that all gained a greater appreciation of how to apply their academic knowledge to the practical tasks of SI/PI design, optimization and analysis.

If you have an opportunity to attend an SI Bootcamp session, I would strongly encourage you to “enlist”. Eric maintains a set of instructional materials available to SI/PI engineers, with a tremendous amount of video and written information, including a regular newsletter — the link is http://www.bethesignal.com (Not a surprise. :))

Also, Mentor has provided a free, online HyperLynx SI “virtual lab” with some very interesting applications — additional information is available at this link.

-chipguy

Plasmonics Integrates Photonics and Electronics

In the last year we have seen a marked increase in activity around the integrated photonics space, especially around silicon-based photonics. Last year the American Institute for Manufacturing of Photonics (better known as AIM Photonics) was started to boot-strap U.S. efforts in integrated photonics. We also saw multiple announcements by all three major Electronic Design Automation (EDA) players and a host of smaller Photonic Design Automation (PDA) players who are feverishly working to enter the market as photonics takes off. Pushing all of this is the insatiable demand for greater bandwidth density required by mega data centers who are quickly running out of rack front-panel space needed to satisfy the ever increasing data loads of an ever expanding internet of things (IoT) universe(s). Integrated photonics promises to accelerate us to the next level of bandwidth density and data communication speeds but the move into the data centers means much higher chip volumes and the need to push manufacturing costs down.

While silicon-based photonics is moving forward at a rapid pace, one sticking point for getting better cost reduction has been that photonics does not scale or shrink in size at the same rate as its electronic counterparts. State-of-the-art electronic transistors are now in the sub-10 nm range whereas photonic components are up in the 100’s of microns. Although integrated photonics are thousands of times smaller than discrete photonic components, the photonics are still too large. Most silicon-based photonic integrated circuits (PICs) require waveguides with cross-sectional dimensions comparable in size to the wavelength of the transmitted light. That translates to waveguides with widths in the 100’s of nanometers.
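The "100’s of nanometers" scale can be sanity-checked by dividing the free-space wavelength by the refractive index of the waveguide material. The Python sketch below assumes a 1550 nm telecom wavelength and a silicon refractive index of roughly 3.48 at that wavelength; neither number comes from the text, and real waveguide dimensions depend on the mode and cladding as well.

```python
def wavelength_in_medium_nm(free_space_nm: float, refractive_index: float) -> float:
    """Wavelength of light inside a material: lambda_0 / n."""
    if refractive_index <= 0:
        raise ValueError("refractive index must be positive")
    return free_space_nm / refractive_index


# Assumed values: 1550 nm light, silicon index of about 3.48.
lam_si = wavelength_in_medium_nm(1550.0, 3.48)
print(f"{lam_si:.0f} nm")
```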

Last year a new effort called PLASMOfab was started in Europe to tackle the photonics size problem. PLASMOfab is working on new structures known as Surface Plasmon Polariton (SPP) waveguides. These SPP waveguides are capable of guiding light at sub-wavelength scales, as they can confine light into a width of a few nanometers. They do this by using plasmonics, which takes advantage of electron plasma oscillations near the metal interface. Plasmonics provides the capability to guide the optical field on a metal surface or between two metal surfaces or dielectric surfaces. This is remarkable on several fronts. First, it provides ultra-fine sensing capabilities that could be used for sensing applications, and second, it enhances nonlinearities such as the Pockels effect, used for polarization control in data communications applications. Additionally, SPP waveguides are unique in that they can be used to send both electronic and photonic signals along the same waveguide at the same time, using geometries similar to those of electronic IC interconnect. If the work PLASMOfab is doing is successful, they will indeed shrink the photonics down to the size of the electronics. Obviously it’s not that easy, else it would have already been done, but the gist is that there is a path to get there.

PLASMOfab is actually a 3-year collaborative project that brings together ten leading academic and research institutions and companies. The project was launched in January 2016 and it is funded by the European Union’s Horizon 2020 ICT research and innovation program. PLASMOfab’s goal is to develop CMOS-compatible planar processes to shrink photonics and integrate it with electronics using plasmonics. Wafer-scale integration will be used to demonstrate low-cost, volume manufacturing of high-yielding, powerful photonic ICs. If successful, this new technology could produce a number of innovations in enhanced light-matter interaction for optical transmitters and biosensors.

In 2016 PLASMOfab worked to advance CMOS-compatible metals for the fabrication of plasmonic structures in commercially available foundries. TiN, Al and Cu were targeted for investigation as an alternative to gold or silver. Additionally, they were to generate a number of low-loss plasmonic waveguides on co-planar photonic substrates including SOI, SiO2 and Si3N4 using CMOS compatible metals. This work included interfacing the plasmonic waveguides to photonic waveguides.

In 2017 PLASMOfab is to work with PhoeniX Software to develop a plasmonic/photonic design automation flow, then use this flow first to develop functional prototypes of an optical modulator and a biosensor with superior performance, and second to integrate the modulator with 100 Gb/s SiGe electronics in a monolithic 100 Gb/s serial NRZ transmitter. Lastly, in the bio-sensing arena, they are to integrate Si3N4-plasmonic biosensors with micro-fluidics and high-speed techniques in a multi-channel, ultra-sensitive lab-on-chip for medical diagnostics.

In 2018 their goal is to then demonstrate volume manufacturing and cost reduction by complying with large wafer-scale CMOS fabs and establishing a plasmonics/photonics/electronics fab-less integration service eco-system that can be adopted by commercial silicon and silicon nitride foundry services.

These are bold goals to be sure, but the potential is more than exciting as this could be a way to push photonics into the really low cost arena of silicon ICs.

For more information, see:

PLASMOfab website http://www.plasmofab.eu/

PLASMOfab write up in PIC Magazine http://www.publishing.ninja/V2/page/2416/143/168/1

PLASMOfab YouTube video https://youtu.be/0bAszCXUOag

Mentor gets Busy at DVCon

You’d expect Mentor to be covering a lot of bases at DVCon and you wouldn’t be wrong. They’re hosting tutorials, a lunch, papers, posters, there’s a panel and of course they’ll be on the exhibit floor. I’ll start with an important tutorial that you really should attend, Monday morning, on creating Portable Stimulus Models based on the PSS standard. I know verification engineers barely get time to think, much less look ahead but this one stands between you and more effective test generation, coverage and debug so you pretty much must deal with it. Mentor as a leader in defining the standard already has technology in this area so what you learn here can be valuable immediately.

Monday afternoon they have a speaker in a tutorial on SystemC (also featuring speakers from Intel, NXP and Coseda). SystemC is a curious beast – when viewed purely from the perspective of RTL and as a higher-level alternative to RTL, it looks like a marginal player (maybe 10% of design). But when viewed from a broader perspective it becomes a lot more interesting. System-level designers (at Google for example) want a fast path from concept to silicon, see no need to fiddle with intermediate RTL and apparently see a high level of success in using this path. Conversely, architectural modelers are finding they can hand off high-level testbenches, including software-driven testbenches, to RTL verification teams thanks to progress in the UVM-SystemC standard. Food for thought – maybe you don’t think you’re ready yet but you might want to find out why others have already started.

Tuesday late morning Harry Foster will present on trends in functional verification based on a double-blind survey in 2016. If you didn’t get a chance to see his summaries last year through Mentor webinars, this is a chance to hear the whole thing in one go. This talk is worth the time for several reasons:

- You can see where your team sits relative to industry averages in adoption of major technologies

- You can see where you sit relative to others on power verification

- Most important (or embarrassing) of all, you can see where you sit relative to others in schedule overrun and reasons for respins

- You get to understand that FPGA verification has become just as complex as ASIC verification, using constrained random, coverage and even formal methodologies

On Wednesday, there’s a great panel in the early afternoon asking the hard question – looking back over 15 years, was SystemVerilog really the best answer to our verification needs? Phil Moorby (the guy who created Verilog and played a significant part in SystemVerilog) is on the panel along with participants from Mentor, Cadence, Synopsys and of course Cliff Cummings so this can’t fail to be fun. Panelists will also talk about what they think an ultimate replacement for SV might look like.

Mentor is all over Thursday. They kick off the morning with how to use just formal (no simulation) to verify a drone’s electronics so you can send the thing off with a message to come rescue you from a desert island. Perhaps you’d never go that far with formal, but pushing it to the limit is a good way to understand how to use formal more broadly. For lunch Harry Foster and Steve Bailey from Mentor are going to discuss the need for a platform to support enterprise verification, looking at trend analyses across IPs and across designs to identify opportunities to reduce risk, spur innovation and introduce new methods.

And in the afternoon, they’re going to reprise a popular topic – how to build a complex testbench quickly when you’re not a UVM expert. While UVM has been widely adopted as the standard for developing testbenches, the fact remains that many engineers still struggle with its complexities and often fail to use it as effectively as they could. Sure, they’ll get up the curve eventually but design schedules wait for no man or woman. There are ways to become more effective quickly without having to first become a UVM black belt – this tutorial will show you how.

Now for papers. Rich Edelman, a friend from way back, kicks off Tuesday morning with a paper on using the Direct Programming Interface. At the same time (darn), Mentor is presenting on a random directed approach to low power coverage, which sounds like an important one to hear. Wednesday mid-afternoon is a Mediatek/Mentor paper which should get an award for best title – “Ironic but effective; how formal analysis can perfect your simulation constraints”. And again, unfortunately at the same time there’s a paper on making legacy verification suites portable for the PSS standard.

The posters are always well worth checking out. This year they include speeding up functional CDC verification, incrementally refining UPF to manage the complexity of power state tables and (especially interesting to me) using Jenkins continuous integration to improve regression efficiency. If you’re following state of the art methods in continuous build and delivery automation, you’ll know that Jenkins already plays a significant role in software development flow and is starting to make its way into some hardware flows. This poster could be a nice introduction to some of the techniques and benefits.

You can get full details HERE. Make sure you sign up for the conference!

CEO Interview: Srinath Anantharaman of ClioSoft

It will soon be 20 years since ClioSoft started its journey of selling design management software for the semiconductor industry. It was a slow start considering that designs were relatively small and only digital front-end designers had begun to realize the importance of version control and design management. With open source solutions available and design management deemed as a nice-to-have feature, it was a tough sell.

Fortunately, the steady focus and persistence have paid rich dividends for ClioSoft. Today, with more than 200 customers all over the world, ClioSoft is the leader in design management from concept-to-silicon for all types of designs in the semiconductor industry.

I have known ClioSoft since 2011 as they were one of the first companies we worked with on SemiWiki. Srinath is an EDA pioneer with great vision and it is an honor to work with him, absolutely.

20 years is a long time in the industry. What factors do you think have been responsible for this remarkable growth?

We are essentially an engineering company focused on providing quality products. We relate to the pains felt by the engineers and try to solve their data management problems. But there are a number of factors that have helped us grow. Some of them are:

- Our products are reliable, easy to use and built primarily for designers.

- Since we do not have any investors, we do not have to worry about meeting growth rates and sales targets. Instead we can focus on delivering a quality product and helping our customers succeed. We believe sales and growth are by-products of this goal.

- As a company, we are easy to work with and fully committed to providing excellent support. As a result, EDA vendors prefer to partner with us and we have a lot of word-of-mouth as well as repeat customers.

- Trust, credibility and integrity are core values that form the DNA of ClioSoft. We do not oversell the availability or quality of any feature to potential or existing customers. Simply put, we work with design teams to solve their problems.

- We support small and big customers with equal urgency. Our goal is to provide quality support to all.

- And lastly we play fair. At the end of the day, companies want a partner they can trust and in most cases, ClioSoft is that partner.

Most of ClioSoft’s customers seem to be doing analog/mixed signal designs. Does ClioSoft also support other types of designs such as digital and RF?

That is a misconception. ClioSoft’s SOS design management platform supports all types of designs – Analog, Digital and RF. We initially started by evangelizing design management to digital designers. Soon thereafter we provided integrated design management for analog flows from Cadence®, Mentor Graphics and Synopsys®. Thereafter Keysight Technologies (then Agilent) approached us to provide design management capabilities for their RF flow. We were the first company and probably still the only company to support RF designs. Since there was more demand from AMS designers, we have been a bit more focused on this area.

ClioSoft’s SOS® is perceived as a design management platform exclusively for the semiconductor industry. Why is that?

From a data management perspective, the requirements of analog, RF and digital designers are quite varied. Every type of designer uses distinctly different types of tools and has their own flow for designs. The requirements for digital designers and software engineers are somewhat similar since their code is text based. With text, it is easy to manage files, check for differences between versions or merge the differences. As a result, digital designers often use software configuration management systems such as Subversion or Git, which are popular with software engineers. But with analog and RF designs the design database is binary, making it more difficult to manage. A number of factors, such as the interdependencies between the various cell views and the design hierarchy, need to be considered while managing the various revisions of the design or checking for differences in the schematics or layout views. The size of the design data is also a big factor in managing designs, and a company cannot afford to have everyone copying large amounts of project data into their work space due to the relatively high capital and management cost of network storage. The design management platform needs to be flexible and robust enough to manage the diverse needs of the designers without interfering with their complex flows or compromising on performance – remote or local.

Our tools are architected from the ground up to meet the requirements of these engineers and are not a layer glued on to third-party software configuration systems such as Git, Subversion or Perforce.

Your competition also targets ClioSoft as being a proprietary design management system. Any comments?

Isn’t Perforce proprietary software too? We have always believed in owning the entire system as it enables us to provide solutions which best meet our customer needs. Any enhancement requests from our customers can be easily accommodated and delivered in a timely manner. Owning the entire system also enables our solutions to be very robust and easy to use. We are not limited by the capabilities of third-party software that we do not control. If there is an issue, the buck stops with us. We do not have to refer to or wait for a third party to provide a fix or enhancement. We also do not believe in holding the customer’s data hostage. If for any reason the customer decides to stop using our design management solution, we will provide read-only licenses in perpetuity so they can always have access to their data.

With the growing emphasis on IP, what is ClioSoft’s perspective on design reuse and IP Management?

To meet tight design schedules, design teams try to reuse IPs to the maximum extent possible. The growing use of third-party IPs in today’s SoCs adds the overhead of managing costs and licensing liabilities. Internal IPs unfortunately do not get reused as much as one would like. This is due to a multitude of reasons. The quality of internal IPs is always suspect. In addition, there is always the fear of not getting support for these IPs in the event of a problem. We believe that having an ecosystem where one can easily find and use internal and third-party IPs alike and validate their quality is very important. The usage of internal IPs also needs to be pushed by top management and needs to be a priority for the company. It is a common misunderstanding that internal IPs are not reusable. Creating a culture of sharing will lead to significant improvements in productivity in the long run. We hope to help customers realize that goal.

Your competition seems to be providing hardware related solutions but ClioSoft has not bitten the bullet and jumped into the fray by adding hardware based solutions. Why is that?

We are primarily a software company and our focus is to provide solutions which will work with industry standard hardware. Our products are created with the following criteria in mind: performance, security, robustness and ease of use. We do not require any specialized hardware to make our solutions run fast. I am a veteran of the EDA industry and have seen how early EDA startups like Daisy and Valid failed. They built their own hardware but pretty soon they could not keep up with general purpose workstation manufacturers like Apollo and Sun Microsystems, and pure software based EDA companies took over. I believe in focusing on our strengths. By building our own data management engine and not relying on a software configuration management (SCM) system, we do not have to resort to building specialized hardware or kernel level file system manipulations to overcome the limitations of SCM solutions in managing extremely large design data.

ClioSoft has a very low attrition rate for a company in Silicon Valley. What would you attribute this to?

We like to have a balance between work and fun. We do not have many levels of hierarchy and like to keep things simple. A strong sense of ethics and values has also helped attract considerable talent who have stayed on in the company. You will notice that we have never had a management team posted on our website. Every member of the team is important and that is not just a slogan.

ClioSoft was launched in 1997 as a self-funded company, with the SOS design collaboration platform as its first product. The objective was to help manage front end flows for SoC designs. The SOS platform was later extended to incorporate analog and mixed-signal design flows wherever Cadence Virtuoso® was predominantly used. SOS is currently integrated with tools from Cadence®, Synopsys®, Mentor Graphics® and Keysight Technologies®. ClioSoft also provides an enterprise IP management platform for design companies to easily create, publish and reuse their design IPs.

Also Read:

CEO Interview: Amit Gupta of Solido Design

Adaptation or Crisis – Will Security Save Technology

The technology landscape is rapidly changing how we interact and live in our world. The variety of Internet of Things devices is huge and growing at a phenomenal pace. Every kind of device imaginable is becoming ‘smart’ and connected. Entertainment gadgets, industrial sensors, medical devices, household appliances, and personal assistants are being connected and empowered to relieve the burdens of our daily living. The number of phones, tablets, and PCs is fueling the growth of cloud-based service environments.

Emerging innovations around semi-autonomous vehicles, artificial intelligence, merged reality, and machine learning are creating vast amounts of data. Next-generation critical infrastructures and communications are enabling greater capacities for connectivity and services. All of these and other elements are ushering in a new era, both of connectivity and of technology extending its reach to control aspects of our physical world. With such great power, we will become more reliant on the devices and services which make our lives easier, more efficient, and more productive. As a consequence, the need for security, safety, and privacy will become immensely important.

Existential Threat

Orion Hindawi recently penned an article in WIRED “Cybersecurity is an existential threat. Here is what we need to do”. In the article, Orion postulates the real emerging threat will not be around social engineering, the increase of ransomware, or nation-state cyberattacks. Rather he states:

“The hardest challenge for cybersecurity in 2017… will be scalability”

I met Orion and his father many years ago, back when they had started BigFix. Both impressed me as very smart people who possessed a keen understanding of the challenges security products faced in large enterprise environments. At the time, big organizations were having great difficulty in understanding what was on their network, how to keep it patched, and what to do when devices no longer conformed to standards. Security products did not have the breadth to understand all the moving parts and, even worse, did not function well when tasked to support so many endpoints and connections.

The Ante Goes Up

Well, the world is about to experience those problems once again, at a global scale. Orion’s article in WIRED takes those challenges, and the expertise behind solving some of those problems for business, to the next logical step.

The numbers of users, devices, and usages are ramping up. This, combined with devices beginning to extend beyond data and into ‘control’ of the physical world, will bring life-safety concerns to light. The technology and security industry will have a choice: adaptation or crisis. As incidents become apparent, the expectations of people will help drive change in regulations, product purchase criteria, vendor selection, and standards. A real risk remains. If the threats aren’t put in check soon enough, a severe backlash could occur.

Safe Technology

Security must rise as we further embrace and empower technology to have insights and control over aspects of our everyday routines. Will security be able to scale? Well, for the benefit of us all, it had better adapt quickly. There is far too much at stake.

Interested in more? Follow me on Twitter (@Matt_Rosenquist), Steemit, and LinkedIn to hear insights and what is going on in cybersecurity.