The automotive industry has a history of bringing about disruptive technological advances. One only needs to look at Henry Ford’s introduction of the moving assembly line to understand the origins of this phenomenon. Today we stand on the brink of a massive change in how cars operate and, consequently, how they are built. A number of automotive manufacturers have promised autonomous vehicles by 2021. The move toward this objective will be a continuous progression, with technology added at each step over the next three or four years. At the same time, consumer expectations about usability and reliability will be huge market drivers.

The design challenges associated with these changes will be enormous. Cars are already probably the most complex consumer items made today. With the addition of the sensor, communications, processing, and power train enhancements that are expected, overall complexity will increase. Numerous architectural and design decisions will have to be made. Puneet Sinha, Automotive Manager at Mentor, and John Blyler, System Engineer/Program Manager at JB Systems, have completed a white paper entitled “Key Engineering Challenges for Commercially Viable Autonomous Vehicles” that delves into the specifics of what we can expect to see coming down the road.

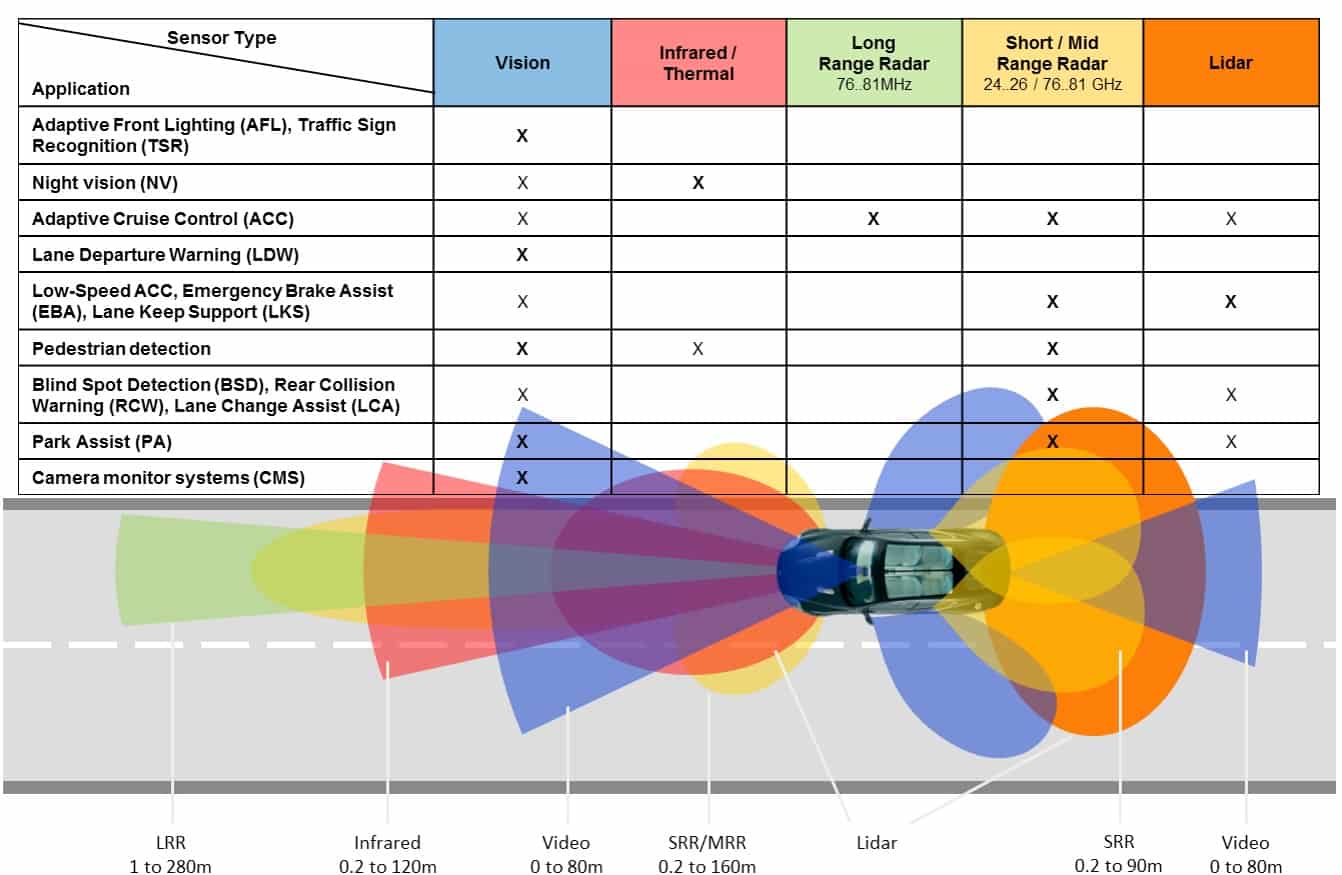

They break the topics involved into six categories. Starting with sensors, they explain how the number of sensors in an automobile is going to grow from the current 60 to 100 up to a much higher number. To fully implement 360-degree ‘vision’, a variety of different types of sensors are needed. The diagram below provides an overview of which types of sensors are used for specific tasks. The operating environment for each of these sensors is challenging. The goal of reducing sensor size conflicts directly with thermal management requirements. Automobiles also have to operate in extremely cold and hot environments, often in conjunction with other automotive systems, which exacerbates thermal and reliability issues.

The section I found most interesting discussed sensor fusion. Since the advent of the Internet of Things there has been a push to move sensor fusion to the edge. It made a lot of sense to use smaller processors adjacent to, or integrated with, the sensor to take raw sensor data and convert it into more easily digestible data, which also has the benefit of being smaller in size. However, the Mentor paper points out that in future automobiles there will be hundreds of these smaller MCUs distributed throughout the vehicle. This can lead to reliability issues, as well as potentially creating thermal and power issues. They also point out that edge sensor fusion can lead to an exploding BOM.
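To make the edge approach concrete, here is a minimal sketch of the kind of reduction an MCU next to a sensor might perform: it keeps only the samples that cross a threshold and discards the rest, so less data travels over the vehicle network. The function name, the threshold, and the sample values are all hypothetical and are not taken from the white paper.

```python
def summarize_at_edge(raw_samples, threshold):
    """Reduce a raw sample stream to (index, value) pairs at or above a threshold.

    Illustrative assumption: a real edge MCU would run far more elaborate
    signal processing; this only shows the "raw in, compact summary out" idea.
    """
    return [(i, v) for i, v in enumerate(raw_samples) if v >= threshold]


# Hypothetical normalized return strengths from a single sensor sweep.
raw = [0.1, 0.2, 0.9, 0.1, 0.8]
detections = summarize_at_edge(raw, threshold=0.5)
print(len(raw), "raw samples reduced to", len(detections), "detections")
```

The trade-off is visible even at this scale: the summary is smaller, but the discarded samples are no longer available to any downstream consumer.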

Mentor’s approach is to use the high-speed data buses on the vehicle to transport raw data and centralize sensor fusion. Mentor has announced a product called DRS360 that enables this centralized fusion approach. They also point out that the raw data can be combined in unique ways if it is processed centrally, thus enabling higher quality results. The end result is a 360-degree perspective of the car and its environment. Their experience implementing this has shown that the overall power usage is dramatically reduced.
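As a rough illustration of why combining raw measurements in one place can improve quality, the sketch below fuses distance readings of the same object from several sensors using inverse-variance weighting, a standard textbook technique. This is not the DRS360 algorithm, which the article does not describe; the class name, sensor labels, and noise figures are assumptions made up for the example.

```python
from dataclasses import dataclass


@dataclass
class RawReading:
    sensor: str        # hypothetical sensor label, e.g. "radar"
    distance_m: float  # measured distance to the same object, in meters
    variance: float    # assumed measurement noise variance, in m^2


def fuse_centrally(readings):
    """Inverse-variance weighted average of readings; returns (estimate, variance)."""
    if not readings:
        raise ValueError("at least one reading is required")
    weights = [1.0 / r.variance for r in readings]
    total = sum(weights)
    fused = sum(w * r.distance_m for w, r in zip(weights, readings)) / total
    # The fused variance is never larger than the best single sensor's variance.
    return fused, 1.0 / total


readings = [
    RawReading("radar", 10.0, 1.0),
    RawReading("lidar", 12.0, 1.0),
]
estimate, variance = fuse_centrally(readings)
print(f"fused distance: {estimate:.2f} m, variance: {variance:.2f} m^2")
```

Because the central processor sees every reading together with its noise estimate, it can weight a precise sensor more heavily than a noisy one, which is harder to do once each edge node has already reduced its data independently.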

The third area they discuss is the electronic and electrical architecture. The automotive wiring harness is actually the third heaviest component in a vehicle. It has grown from the original point-to-point wiring of the first cars to multiple communications networks, each with a specific purpose: Controller Area Network (CAN), Local Interconnect Network (LIN), and Automotive Ethernet. Because there is so much interplay between the electronics connectivity and the physical design of the vehicle, significant planning and interdisciplinary design are required. In many ways, solving this design problem looks a lot like the place and route used on SoCs.
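The place-and-route analogy can be sketched as a shortest-path problem: treat the locations a harness can pass through as nodes, the available channels between them as edges weighted by wire length, and search for the shortest route. The example below uses Dijkstra’s algorithm; the zone names and lengths are invented for illustration, and real harness tools also weigh bundle diameter, heat, weight, and serviceability.

```python
import heapq


def route_wire(graph, start, goal):
    """Return (total_length, path) for the shortest route using Dijkstra's algorithm."""
    queue = [(0.0, start, [start])]
    visited = set()
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == goal:
            return cost, path
        if node in visited:
            continue
        visited.add(node)
        for neighbor, length in graph.get(node, {}).items():
            if neighbor not in visited:
                heapq.heappush(queue, (cost + length, neighbor, path + [neighbor]))
    raise ValueError(f"no route from {start} to {goal}")


# Hypothetical routing channels with lengths in meters.
HARNESS = {
    "front_radar": {"engine_bay": 0.8},
    "engine_bay": {"front_radar": 0.8, "dash": 1.2, "left_sill": 2.0},
    "dash": {"engine_bay": 1.2, "central_ecu": 0.5},
    "left_sill": {"engine_bay": 2.0, "central_ecu": 1.5},
    "central_ecu": {"dash": 0.5, "left_sill": 1.5},
}

length, path = route_wire(HARNESS, "front_radar", "central_ecu")
print(" -> ".join(path), f"({length:.1f} m)")
```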

The power train is another design domain that is undergoing rapid change due to the advent of electric vehicles, covering both hybrid and fully electric drive systems. It turns out that autonomous vehicles will have different design parameters than human-piloted vehicles. One surprising piece of information from the Mentor white paper is that autonomous vehicles will not need to be designed for the so-called 90th percentile driver, who can be very rough on the drive train. Fully autonomous driving has other implications in this area as well.

There will also be dramatic changes in vehicle safety and in-cabin experience. The interior of a fully autonomous vehicle will be quite different from that of today’s vehicles. Occupants will interact even more with navigation and entertainment systems. Passenger displays will also change quite a bit. Safety requirements will affect every element of the automotive design process.

Last but not least, the white paper addresses vehicle connectivity. The moniker applied to this area is V2V communications. Cars will be communicating with the cloud, each other, and possibly the road and other infrastructure elements. There is great opportunity to increase safety and situational awareness in the automotive navigational and safety systems.

Mentor has a comprehensive suite of tools that address these design areas and challenges. The white paper does an excellent job of detailing each of the design areas and also lays out the relevant tools that can be used to deal with the system-level integration problems in those areas. I recommend a thorough read of the white paper to fully understand the design challenges and to learn which Mentor tools can address them. Mentor has a long history of working at the system level, and the coming changes in the automotive space are creating a lot of opportunity for them to become a major player.