Neural networks make it possible to apply machine learning to a wide variety of tasks without writing new code for each one. Instead of relying on explicit programming, they let computers make decisions based on experiential learning. The basic concepts behind neural networks were first proposed in the 1940s, but the technology needed to implement them did not become available until decades later. Today they are being applied in a multitude of products, the most notable being autonomous vehicles.

In a presentation from ArterisIP, written by CTO Ty Garibay and Kurt Shuler, the authors assert that the three key ingredients that make machine learning feasible today are big data, powerful hardware, and a plethora of new neural network (NN) algorithms. Big data supplies vast amounts of training data, which neural networks use to derive the weights, or coefficients, for the task at hand. Powerful new hardware makes it possible to perform processing optimized for the algorithms used in machine learning; this hardware includes classic CPUs as well as GPUs, DSPs, specialized math units, and dedicated special-purpose logic. The final ingredient is the set of algorithms used to assemble the NN itself.

The original inspiration for neural networks is the human brain, which uses large numbers of computing elements (neurons) connected in exceedingly elaborate and ever-changing ways. In the brain, far more real estate is dedicated to the interconnection of processing elements than to the processing elements themselves. The striking image below compares the regions of gray matter (largely neuron cell bodies) and white matter (largely the fibers that connect them).

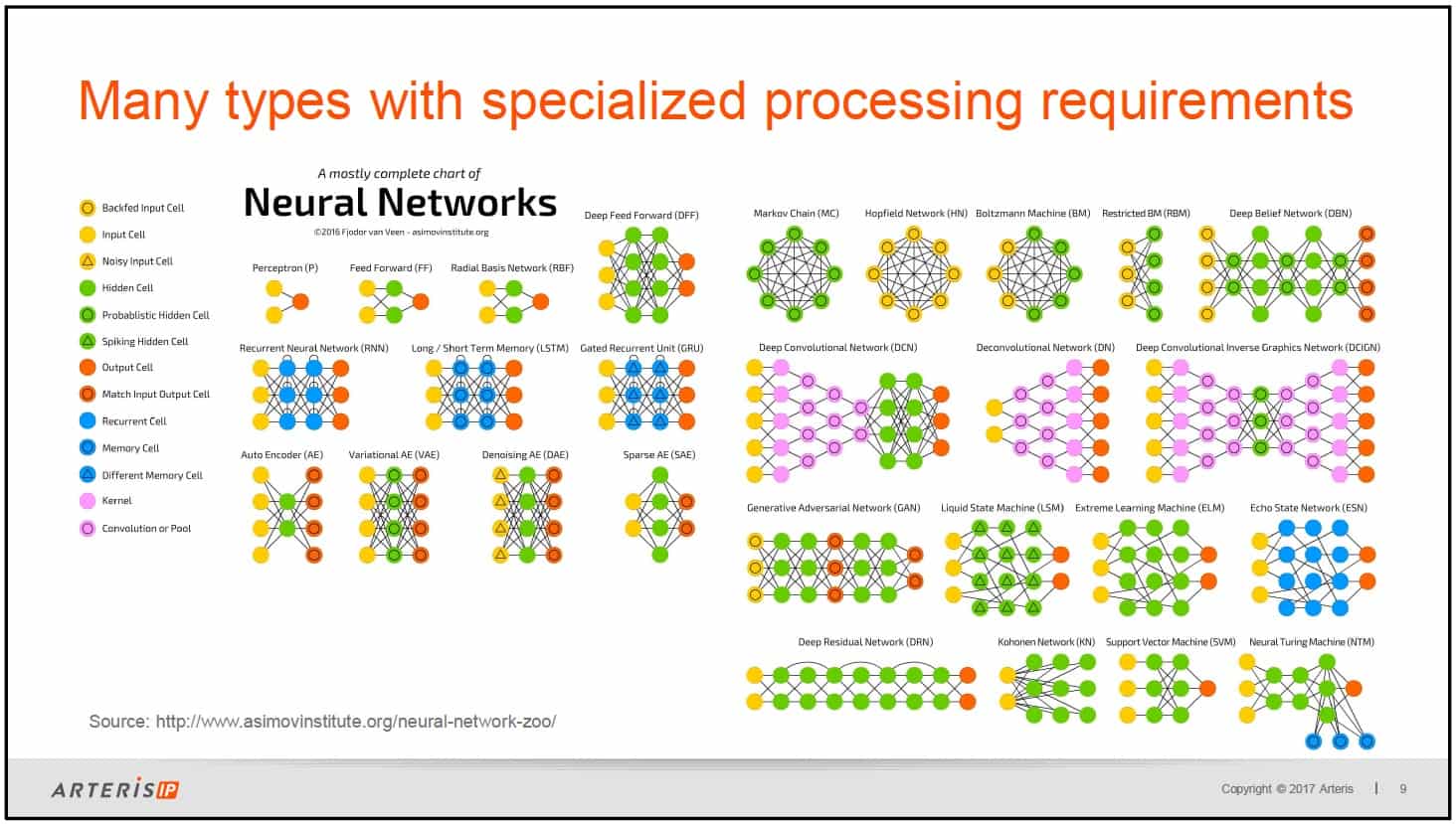
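To make the idea of deriving weights from training data concrete, here is a minimal sketch of the classic perceptron learning rule: a single artificial neuron that learns its weights from labeled examples rather than being explicitly programmed. It is an illustration only and is not taken from the ArterisIP presentation; the AND-gate training set, the integer learning rate of 1, and the function names are assumptions chosen for clarity.

```python
from typing import List, Tuple

Sample = Tuple[Tuple[int, int], int]  # ((x0, x1), target label)


def predict(w: List[int], b: int, x: Tuple[int, int]) -> int:
    """Step-activation neuron: fire (1) if the weighted sum plus bias is positive."""
    return 1 if w[0] * x[0] + w[1] * x[1] + b > 0 else 0


def train_perceptron(samples: List[Sample], epochs: int = 20, lr: int = 1) -> Tuple[List[int], int]:
    """Learn weights and bias from examples using the perceptron update rule."""
    w, b = [0, 0], 0
    for _ in range(epochs):
        for x, target in samples:
            err = target - predict(w, b, x)  # 0 when correct, +1 or -1 when wrong
            w[0] += lr * err * x[0]
            w[1] += lr * err * x[1]
            b += lr * err
    return w, b


# Hypothetical training data: the logical AND function, never coded explicitly.
and_samples: List[Sample] = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train_perceptron(and_samples)
for x, target in and_samples:
    print(x, predict(weights, bias, x), target)
```

AND is linearly separable, which is what allows a single neuron of this kind to learn it; practical NNs stack many such units with smooth activation functions and train them with gradient-based methods.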

The ArterisIP presentation includes a dramatic chart of the prominent NN classes as of 2016. It shows once again that the data flow and interconnection between the fundamental processing units, or functions, is the defining characteristic of neural networks. In machine learning systems, performance is bounded by both architecture and implementation. On the implementation side, as noted above, hardware accelerators are frequently used, and the implementation of the cache and system memory also has a profound effect on performance.

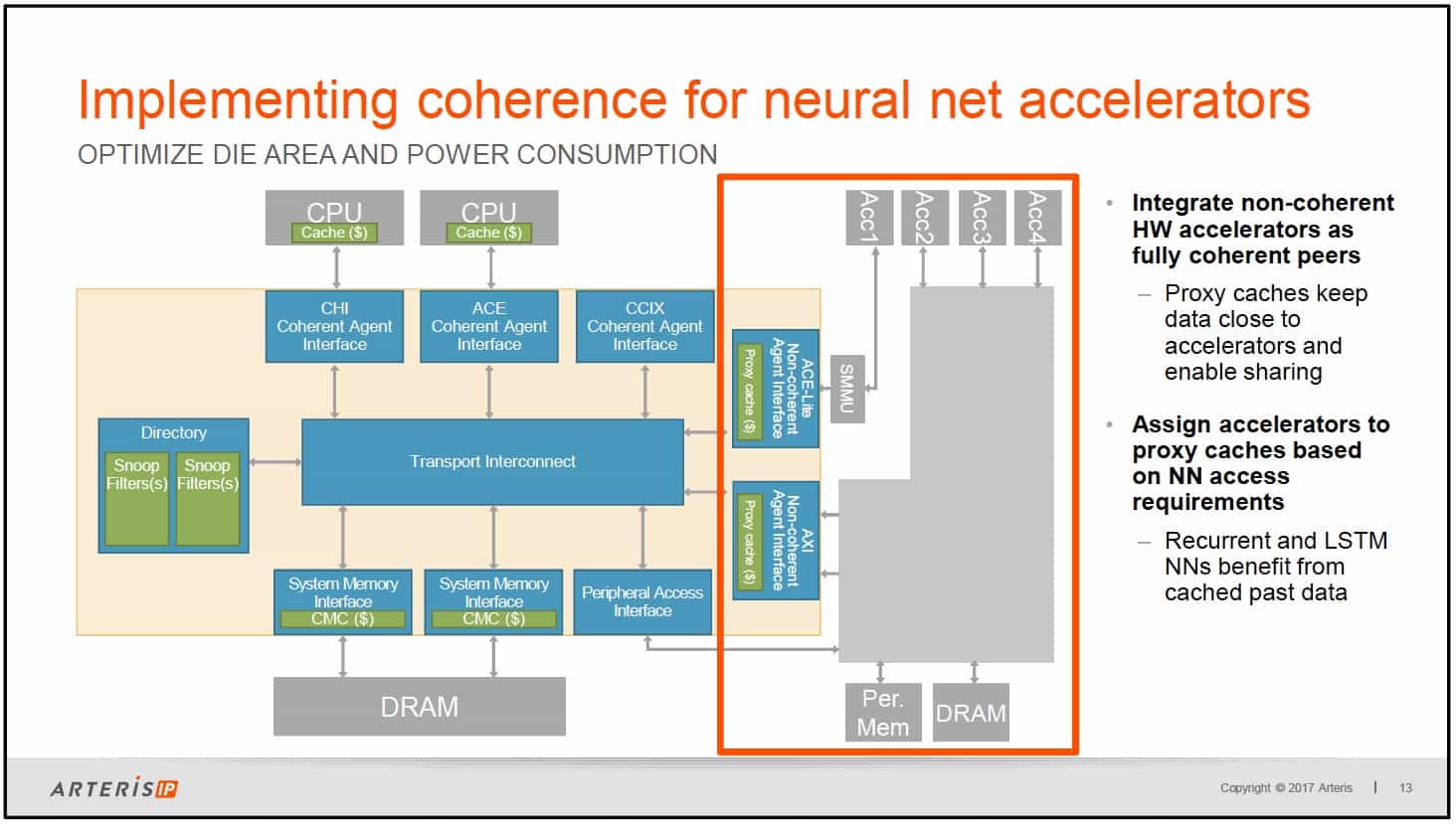
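The claim that interconnection dominates can be made concrete with a back-of-the-envelope count. The sketch below counts computing neurons versus weighted connections in a fully connected feed-forward network. The layer sizes (784-128-10, a common small image-classifier shape) and the 32-bit weight size are assumptions for illustration, not figures from the ArterisIP chart.

```python
from typing import List, Tuple


def count_units_and_connections(layer_sizes: List[int]) -> Tuple[int, int]:
    """Return (computing neurons, weighted connections) for a fully connected net.

    The first entry is the input layer, which only supplies data, so it is not
    counted as computing neurons. Every neuron in one layer connects to every
    neuron in the next layer.
    """
    neurons = sum(layer_sizes[1:])
    connections = sum(a * b for a, b in zip(layer_sizes, layer_sizes[1:]))
    return neurons, connections


BYTES_PER_WEIGHT = 4  # assumption: 32-bit floating-point weights

neurons, connections = count_units_and_connections([784, 128, 10])
weight_bytes = connections * BYTES_PER_WEIGHT
print(neurons, connections, weight_bytes)  # 138 101632 406528
```

In this example there are roughly 736 connections for every computing neuron, and a single unbatched inference has to bring all of the roughly 400 KB of weights to the arithmetic units. That data movement is one reason the cache and memory implementation weighs so heavily on performance.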

SoCs for machine learning are being built with IP that supports cache coherency, alongside a large number of targeted function accelerators that have no notion of memory coherency. To get the highest performance from these SoCs, it helps to add a configurable coherent caching scheme that allows these blocks to communicate efficiently on-chip. As part of its interconnect IP solution, ArterisIP offers a proxy cache capability that can be custom-configured and added to support non-cache-coherent IP.
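To illustrate why a non-coherent accelerator benefits from a coherent proxy, the sketch below models a toy write-back, snooping coherence scheme. It is a simplified teaching model built on stated assumptions (one shared bus, one value per address, and the hypothetical classes `Memory`, `Bus`, and `CoherentCache`), not a description of how ArterisIP's proxy cache is actually implemented. An accelerator that reads DRAM directly sees stale data, while the same read issued through a proxy cache that takes part in coherence returns the up-to-date value.

```python
from typing import Dict, List, Optional


class Memory:
    """Backing DRAM: a simple address -> value map (unwritten addresses read as 0)."""

    def __init__(self) -> None:
        self.data: Dict[int, int] = {}

    def read(self, addr: int) -> int:
        return self.data.get(addr, 0)

    def write(self, addr: int, value: int) -> None:
        self.data[addr] = value


class Bus:
    """Toy snooping bus that connects coherent caches to memory."""

    def __init__(self, memory: Memory) -> None:
        self.memory = memory
        self.caches: List["CoherentCache"] = []

    def fetch(self, addr: int, requester: "CoherentCache") -> int:
        # Ask every other cache first; a dirty copy takes priority over memory.
        for cache in self.caches:
            if cache is not requester:
                value = cache.snoop_read(addr)
                if value is not None:
                    return value
        return self.memory.read(addr)

    def invalidate_others(self, addr: int, requester: "CoherentCache") -> None:
        for cache in self.caches:
            if cache is not requester:
                cache.snoop_invalidate(addr)


class CoherentCache:
    """Write-back cache that stays coherent by snooping the shared bus."""

    def __init__(self, name: str, bus: Bus) -> None:
        self.name = name
        self.bus = bus
        self.lines: Dict[int, List] = {}  # addr -> [value, dirty]
        bus.caches.append(self)

    def read(self, addr: int) -> int:
        if addr not in self.lines:  # miss: fetch a coherent copy via the bus
            self.lines[addr] = [self.bus.fetch(addr, self), False]
        return self.lines[addr][0]

    def write(self, addr: int, value: int) -> None:
        self.bus.invalidate_others(addr, self)  # remove all other copies first
        self.lines[addr] = [value, True]  # dirty: memory is now stale

    def snoop_read(self, addr: int) -> Optional[int]:
        # Supply dirty data to another agent and write it back to memory.
        line = self.lines.get(addr)
        if line is not None and line[1]:
            self.bus.memory.write(addr, line[0])
            line[1] = False
            return line[0]
        return None

    def snoop_invalidate(self, addr: int) -> None:
        line = self.lines.pop(addr, None)
        if line is not None and line[1]:
            self.bus.memory.write(addr, line[0])  # write back before dropping


mem = Memory()
bus = Bus(mem)
cpu = CoherentCache("cpu", bus)
proxy = CoherentCache("proxy", bus)  # acts on behalf of the non-coherent accelerator

cpu.write(0x40, 7)  # new result sits dirty in the CPU cache
stale = mem.read(0x40)  # accelerator bypassing coherence reads DRAM: old value
fresh = proxy.read(0x40)  # accelerator reading through the proxy: snoops the CPU
proxy.write(0x40, 9)  # accelerator output, made visible through the proxy
cpu_view = cpu.read(0x40)
print(stale, fresh, cpu_view)  # 0 7 9
```

Modeling the proxy as just another coherent cache is the idea being illustrated: the accelerator itself stays simple, while the proxy carries the snooping and write-back responsibilities on its behalf.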

ArterisIP points out in its presentation that many of the applications where NNs are being used also need data integrity protection. In automotive systems, for instance, the ISO 26262 functional safety standard calls for rigorous attention to reliable data transfer. ArterisIP addresses this requirement with configurable ECC and parity protection for critical sections of an SoC. Its IP can also duplicate hardware where needed in the interconnect, dramatically reducing the likelihood of a device failure.

ArterisIP has extensive experience providing interconnect IP to the leading innovators developing neural network SoCs. The company recently announced nine customers that are designing machine learning and AI SoCs, in application areas that include data centers, automotive, consumer, and mobile. Neural networks will continue to grow in importance for computing systems. As the need to write application-specific code diminishes, the design of the neural network itself will become the key design challenge, covering both the NN software and the hardware that implements it. ArterisIP's interconnect IP can address many of the design issues that arise in developing these SoCs.
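As a rough illustration of what the parity and ECC protection discussed above buy, the sketch below compares a single even-parity bit, which can detect but not locate a flipped bit, with a Hamming(7,4) code, a textbook single-error-correcting code. These are generic examples chosen for clarity; ArterisIP's actual ECC schemes and word widths are not described here, so the function names and the 4-bit data width are assumptions.

```python
from typing import List, Tuple


def even_parity(bits: List[int]) -> int:
    """Parity bit that makes the total count of 1s even; detects any single-bit flip."""
    p = 0
    for bit in bits:
        p ^= bit
    return p


def hamming74_encode(d: List[int]) -> List[int]:
    """Encode 4 data bits as a 7-bit codeword: positions 1..7, parity bits at 1, 2, 4."""
    d1, d2, d3, d4 = d
    p1 = d1 ^ d2 ^ d4  # covers positions 3, 5, 7
    p2 = d1 ^ d3 ^ d4  # covers positions 3, 6, 7
    p4 = d2 ^ d3 ^ d4  # covers positions 5, 6, 7
    return [p1, p2, d1, p4, d2, d3, d4]


def hamming74_decode(code: List[int]) -> Tuple[List[int], int]:
    """Correct up to one flipped bit; return (data bits, 1-based error position or 0)."""
    c = list(code)
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]  # positions 1, 3, 5, 7
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]  # positions 2, 3, 6, 7
    s4 = c[3] ^ c[4] ^ c[5] ^ c[6]  # positions 4, 5, 6, 7
    syndrome = s1 + 2 * s2 + 4 * s4  # binary index of the flipped position
    if syndrome:
        c[syndrome - 1] ^= 1
    return [c[2], c[4], c[5], c[6]], syndrome


data = [1, 0, 1, 1]
word = data + [even_parity(data)]
word[2] ^= 1  # a single-bit upset in transit
print(even_parity(word))  # 1: error detected, but its location is unknown

code = hamming74_encode(data)
code[4] ^= 1  # flip position 5
print(hamming74_decode(code))  # ([1, 0, 1, 1], 5): error located and corrected
```

Parity costs one bit but only flags a problem, while Hamming(7,4) spends three check bits per four data bits to pinpoint and fix a single flipped bit. Wider practical codes amortize that overhead much better; a (72,64) SECDED code, for example, uses 8 check bits for 64 data bits.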
