When we talk about the Internet of Things (IoT), it isn’t a stretch to say that every intelligent device we interact with will become connected, sharing vast amounts of data with other devices to make our lives more efficient. Consumers of smart home, infotainment, and wearable technologies are not the only ones driving demand; industrial, military, and government applications such as smart cities and smart factories are also changing the connectivity landscape.

Webinar April 4 : Achieve High-performance & High-throughput with Intel based FPGA Prototyping

From the Very Small to the Behemoth

Seen from this perspective, IoT devices range from the smallest designs, a handful of sensors and actuators implemented in only a few million gates, to extremely complex machines containing hundreds of sensors and billions of gates. Whatever their size and complexity, these smart systems require far more software and real-world testing, especially when they integrate commercial IP. Every one of these IoT applications demands interoperable connectivity, sophisticated control, and efficient testing, which is forcing design teams to rethink their development strategies. Add the time-to-market pressure that consumer IoT devices face, and it becomes clear that engineers need development and verification solutions that can keep pace.

Getting Confidence in Your Design Early

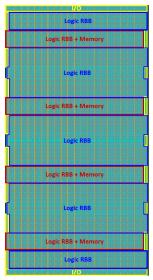

FPGA-based prototyping is specifically geared toward meeting the design and verification demands created by the complexity of IoT devices. Advances in partitioning and multi-FPGA debug have allowed FPGA-based prototyping to scale from small designs of a few million gates to designs of up to 1.5 billion gates. FPGA-based prototyping lets designers develop and test their systems, and it gives software developers early access to a fully functioning hardware platform long before silicon is available. The hardware prototype is the only solution that can be deployed early enough for practical software development and testing. Software models cannot match the speed and capacity that a hardware platform brings to accurate, reliable testing.
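
Partitioning is what lets a design larger than any single FPGA run across several devices. As a toy illustration only, assuming capacity is the sole constraint, the sketch below packs RTL blocks onto FPGAs with first-fit decreasing bin packing. The block names, gate counts, 100-million-gate device size, and 70% fill target are all hypothetical. Real partitioning tools must also minimize the signals crossing between FPGAs, which are limited by available I/O pins and are often the harder constraint; this sketch ignores them.

```python
from __future__ import annotations


def partition(blocks: dict[str, int], fpga_capacity: int, fill_percent: int = 70) -> list[dict[str, int]]:
    """Assign RTL blocks to FPGAs by first-fit decreasing on gate count.

    Toy model: only gate capacity is considered. Inter-FPGA signal count
    (I/O pins) is ignored, although real partitioners must minimize it.
    """
    # Hypothetical fill target: leave headroom so each FPGA can still route and meet timing.
    budget = fpga_capacity * fill_percent // 100
    fpgas: list[dict[str, int]] = []
    # Place the largest blocks first; they are the hardest to fit.
    for name, gates in sorted(blocks.items(), key=lambda kv: kv[1], reverse=True):
        if gates > budget:
            raise ValueError(f"{name} ({gates} gates) exceeds one FPGA's budget; split it first")
        for fpga in fpgas:
            if sum(fpga.values()) + gates <= budget:
                fpga[name] = gates  # first FPGA with room wins
                break
        else:
            fpgas.append({name: gates})  # no existing FPGA has room: add another device
    return fpgas


if __name__ == "__main__":
    # Hypothetical block sizes in millions of gates on a hypothetical 100M-gate FPGA.
    design = {"cpu": 40, "dsp": 35, "ddr_ctrl": 25, "usb": 20, "sensor_if": 10}
    for index, fpga in enumerate(partition(design, fpga_capacity=100)):
        print(index, fpga, sum(fpga.values()))
```

Sorting largest-first is the standard trick that keeps first-fit packing reasonably tight; the same function scales to any number of blocks, which is the capacity side of the scaling story described above.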

Even the smallest designs must handle very complex software and require an enormous amount of rigorous testing. Testing at this scale can easily drag a project past its time-to-market window. Although a prototype can take weeks to set up, the number of tests it can run in a short time once it is running leaves other solutions far behind. Even at a modest speed of just 5 MHz and after four weeks of setup, an FPGA prototype can complete a far larger number of tests than other solutions within just days of coming up. For more information, check out the Emulation vs. Prototyping Cross Over Calculator at http://www.s2cinc.com/prototyping-calculator.
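
The calculator's own model is not described here, so the sketch below is an independent, simplified model of the same crossover idea. It assumes both platforms run tests back to back around the clock and that one test costs the same number of design clock cycles on either platform. The 5 MHz clock and four-week setup come from the text; the emulator's 1 MHz speed and one-week setup are hypothetical placeholders, not measured figures.

```python
from dataclasses import dataclass
from typing import Optional

SECONDS_PER_DAY = 24 * 3600


@dataclass
class Platform:
    name: str
    setup_days: float  # bring-up time before the first test can run
    clock_hz: float    # effective design clock while tests are running

    def tests_completed(self, day: float, cycles_per_test: float) -> float:
        """Cumulative tests finished by `day`, assuming back-to-back runs around the clock."""
        running_days = max(0.0, day - self.setup_days)
        return running_days * SECONDS_PER_DAY * self.clock_hz / cycles_per_test


def crossover_day(a: Platform, b: Platform) -> Optional[float]:
    """Day after which platform `a` stays ahead of `b` in tests completed.

    Solves (d - s_a) * f_a = (d - s_b) * f_b for d; cycles_per_test cancels out.
    Returns None when `a` is not faster than `b`, because it can never catch up.
    """
    if a.clock_hz <= b.clock_hz:
        return None
    d = (a.setup_days * a.clock_hz - b.setup_days * b.clock_hz) / (a.clock_hz - b.clock_hz)
    # If `a` also finishes setup first, it leads as soon as it starts running.
    return max(d, a.setup_days)


if __name__ == "__main__":
    prototype = Platform("FPGA prototype", setup_days=28, clock_hz=5e6)
    emulator = Platform("emulator (hypothetical)", setup_days=7, clock_hz=1e6)
    print(f"crossover on day {crossover_day(prototype, emulator):.2f}")  # crossover on day 33.25
```

With these placeholder numbers the prototype catches up on day 33.25, about five days after its own setup completes. Because `cycles_per_test` cancels out, only setup time and execution speed decide where the crossover falls.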

Transactors Are Key to IoT Design Success

FPGA-based prototyping is well suited to designs that are fully written in RTL and can be mapped to an FPGA. However, many IoT designs cannot be mapped to an FPGA in their entirety; parts of them may still exist only as behavioral models written in languages such as C++ or SystemC. In these cases, transaction-level interfaces play a critical role in bridging the abstraction gap between behavioral models and live hardware. These transactors provide a way for software running on a host to communicate with an FPGA-based prototyping platform, which often includes memories, processors, and high-speed interfaces.
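
To make the abstraction bridge concrete, here is a minimal sketch of the idea, written in Python for brevity although real host-side models would typically be C++ or SystemC. The message format, the class names, and the in-process `FpgaSideStub` standing in for the hardware are all hypothetical; they are not S2C's or any vendor's transactor API. A single untimed `write()` or `read()` call on the host becomes one message, which the hardware side replays as one bus beat per word.

```python
import struct
from dataclasses import dataclass, field

# Hypothetical wire format: op (u8), word address (u32), burst length (u16), then payload.
HEADER = struct.Struct("<BIH")
OP_WRITE, OP_READ = 0, 1


def encode_write(addr: int, words: list) -> bytes:
    return HEADER.pack(OP_WRITE, addr, len(words)) + struct.pack(f"<{len(words)}I", *words)


def encode_read(addr: int, count: int) -> bytes:
    return HEADER.pack(OP_READ, addr, count)


@dataclass
class FpgaSideStub:
    """Software stand-in for the hardware half of a transactor: it decodes a message
    and replays it as one bus beat per word against a word-addressed memory."""
    memory: dict = field(default_factory=dict)
    beats: int = 0  # bus cycles issued so far

    def handle(self, msg: bytes) -> bytes:
        op, addr, count = HEADER.unpack_from(msg)
        if op == OP_WRITE:
            words = struct.unpack_from(f"<{count}I", msg, HEADER.size)
            for i, word in enumerate(words):
                self.memory[addr + i] = word
                self.beats += 1
            return b""
        data = []
        for i in range(count):
            data.append(self.memory.get(addr + i, 0))  # unwritten locations read as 0
            self.beats += 1
        return struct.pack(f"<{count}I", *data)


class HostTransactor:
    """Untimed, function-call API that a behavioral model on the host would use."""

    def __init__(self, link):
        # `link` is anything with handle(bytes) -> bytes; in a real system this would
        # be the physical connection (e.g. PCIe or USB) to the prototyping board.
        self.link = link

    def write(self, addr: int, words: list) -> None:
        self.link.handle(encode_write(addr, words))

    def read(self, addr: int, count: int) -> list:
        reply = self.link.handle(encode_read(addr, count))
        return list(struct.unpack(f"<{count}I", reply))


if __name__ == "__main__":
    fpga = FpgaSideStub()
    host = HostTransactor(fpga)
    host.write(0x100, [0xDEADBEEF, 1, 2, 3])
    print(host.read(0x100, 4))  # [3735928559, 1, 2, 3]
    print(fpga.beats)           # 8: four write beats plus four read beats
```

The point of the split is that the behavioral model only ever sees function calls, while cycle-level activity stays on the hardware side; swapping the stub for a real board link would not change the model's code.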

A system that harnesses transactors allows designers to realize the benefits of FPGA-based prototypes much earlier in the design project, for algorithm validation, IP design, simulation acceleration, and corner-case testing. Combining a prototype with a transactor interface opens up a range of applications throughout the design flow.

As we move into the next stage of connectivity, devices will need to undergo exhaustive testing, and FPGA prototyping is a key technology that will play an effective role in that process.