One of the great aspects of modern hardware verification is that we keep adding new tools and methodologies to support different verification objectives (formal, simulation, real-number simulation, emulation, prototyping, UVM, PSS, software-driven verification, continuous integration, …). The downside of this proliferation is that it becomes increasingly onerous to consolidate the results from these multiple sources (and multiple verification teams) into the unified view you ultimately need – have we covered all testplan objectives to an acceptable level?

You could manage the combination of all this data through mounds of paperwork and presentations (not advisable) or, more realistically, through scripting, spreadsheets and databases. I’m sure some of the biggest engineering organizations have the time, skills and horsepower to pull that off. For everyone else this task can be daunting. It isn’t as simple as rolling up dynamic coverage from RTL sims. Consider that some of the testing may be formal-based; how do you roll that up with dynamic coverage? (Answer: by showing combined coverage results, dynamic and formal. Then, as you move up through the hierarchy, you see a combined coverage summary.) Some results may come from emulation, some from mixed-signal sims, some may be for power verification, some for safety goals, some from HLS, and some may relate to application software coverage. You can see why traditional RTL sim rollup scripting may struggle with this range.
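To make the rollup idea concrete, here is a minimal sketch of summing simulation and formal results separately up a design hierarchy, so that each level reports both numbers together without trying to merge them into one figure. Everything in it (the `Block` class, its field names, the `rollup` and `summary` helpers) is hypothetical and for illustration only; it is not how vManager stores or computes coverage.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class Block:
    """One node in the design hierarchy (hypothetical data model)."""
    name: str
    sim_hit: int = 0         # simulation coverage bins hit in this block itself
    sim_total: int = 0       # simulation coverage bins defined in this block itself
    formal_proven: int = 0   # formal properties proven in this block itself
    formal_total: int = 0    # formal properties defined in this block itself
    children: List["Block"] = field(default_factory=list)


def rollup(block: Block) -> Tuple[int, int, int, int]:
    """Return (sim_hit, sim_total, formal_proven, formal_total) for the subtree.

    Simulation and formal counts are accumulated independently: they are
    reported together, never merged into a single number.
    """
    sim_hit, sim_total = block.sim_hit, block.sim_total
    formal_proven, formal_total = block.formal_proven, block.formal_total
    for child in block.children:
        c_hit, c_total, c_proven, c_ftotal = rollup(child)
        sim_hit += c_hit
        sim_total += c_total
        formal_proven += c_proven
        formal_total += c_ftotal
    return sim_hit, sim_total, formal_proven, formal_total


def summary(block: Block) -> str:
    """One-line combined summary for a block and everything beneath it."""
    sim_hit, sim_total, formal_proven, formal_total = rollup(block)
    return (f"{block.name}: sim {sim_hit}/{sim_total}, "
            f"formal {formal_proven}/{formal_total}")


# Example hierarchy: one block verified in simulation, one verified formally.
uart = Block("uart", sim_hit=8, sim_total=10)
arbiter = Block("arbiter", formal_proven=4, formal_total=5)
soc = Block("soc", sim_hit=2, sim_total=2, children=[uart, arbiter])
print(summary(soc))
```

Keeping the two counts separate mirrors the point above: the results are shown combined at every level of the hierarchy, but a formal proof and a simulation bin hit are not treated as the same kind of evidence.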

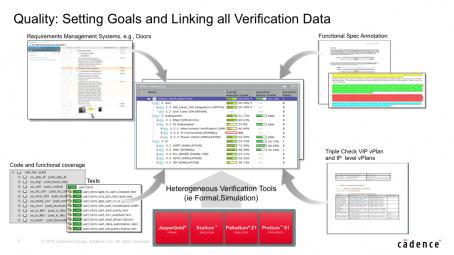

Larry Melling (Director of product management and marketing at Cadence) shared with me how vManager automates this objective and how some customers are already using the solution. Briefly, vManager is a system to gather functional verification results from a variety of platforms and objectives and score progress against an executable testplan. You can tie in all the Cadence verification platforms, from formal to prototyping, and objectives from functional to safety, to power and application software coverage. The system can also tie into requirements tracing and functional spec annotation, so you can see side-by-side how well you are meeting expectations for the product definition and provide spec updates/errata where these may deviate.

Larry pointed to several examples where vManager is in active use. Infineon already uses the tool to combine formal and simulation coverage results. I’ve written about this before. I don’t know of any solution (yet) to “merge” these coverage results but seeing them combined is a good enough way to judge whether the spirit of good coverage has been met. Infineon also notes that seeing these results together helps them optimize the split between formal and simulation effort, so that over time they improve the quality of results (more formal, where appropriate) while also reducing effort (less simulation, where appropriate).

ST, who are always very careful to quantify the impact of new tools and methodologies, showed that adopting vManager with metric-driven verification ultimately reduced verification effort by more than 50% compared with their original approach. They added that verification productivity increased, that RTL quality was higher and that tracking multi-site project status was much easier than in previous projects.

One last very interesting example. In software product development there have been aggressive moves to agile design and continuous integration (CI) methods. You might not think this has much relevance to hardware design; however, RAD, a telecom access solutions provider based in Israel, thinks differently. They do most of their development in FPGAs; here lab testing plays a big role and regression data is not always up-to-date with the latest source-code fixes. To overcome this problem, RAD has switched to a CI approach based on Jenkins for its ability to trigger regressions automatically (at scheduled times) on source-code updates.

RAD uses vManager together with Jenkins to run nightly regressions on known-good compilations, thus ensuring that regression results are always up-to-date with the latest source-code changes. They commented on a number of advantages, but one will resonate with all of us – the impact of the well-known “small” fix (trust me – this won’t break anything). A bug caused by such a fix previously could take an indeterminate amount of time to resolve. Now it takes a day – just look at the nightly regression. That’s pretty obvious value.
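Why does a nightly regression bound the hunt to a day? A small sketch shows the reasoning: given one pass/fail record per night, the most recent pass-to-fail transition gives the last known-good commit and the first known-bad one, and the offending “small” fix must lie between them. The `Nightly` record and the `suspect_window` helper are hypothetical names for illustration; this is not RAD’s flow, nor any Jenkins or vManager API.

```python
from typing import List, NamedTuple, Optional, Tuple


class Nightly(NamedTuple):
    """One nightly regression result (hypothetical record layout)."""
    date: str      # date of the nightly run
    commit: str    # last source commit included in that night's build
    passed: bool   # True if the whole regression passed


def suspect_window(history: List[Nightly]) -> Optional[Tuple[str, str]]:
    """Return (last_good_commit, first_bad_commit) for the most recent
    pass-to-fail transition, or None if there is no such transition.

    `history` must be ordered oldest to newest.
    """
    for i in range(len(history) - 1, 0, -1):
        previous, current = history[i - 1], history[i]
        if previous.passed and not current.passed:
            return previous.commit, current.commit
    return None


history = [
    Nightly("Mon", "a1", True),
    Nightly("Tue", "b2", True),
    Nightly("Wed", "c3", False),  # regression first fails here
    Nightly("Thu", "d4", False),
]
print(suspect_window(history))
```

With results like these, only the commits after `b2` up to and including `c3` need to be inspected, which is a single day’s worth of changes.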

A quick note on who might need this and how disruptive adoption might be. Larry acknowledges that if you’re building IoT sensor solutions (100K gates), the full scope of vManager (multi-engine, multi-level) is probably overkill. Solutions like this are for big SoCs supported by large development and verification teams. Also, this solution is designed to fit comfortably into existing infrastructures. No need to throw out what you already have; you can adopt vManager just to handle those components for which you don’t have a solution in place – such as combining formal and simulation coverage results.

You can learn more about vManager HERE and read a useful white paper about metric-driven verification HERE.
