This blog post summarizes the white paper “New Power Management IP Solution from Dolphin Integration can dramatically increase SoC Energy Efficiency”, which is available on the Dolphin Integration website.

The power consumed by complex chips was not a real issue when the system could simply be plugged into a wall outlet. The most important feature used to be raw performance, expressed in GHz or MIPS, which was used, for example, to market PCs to end users.

However, with the massive adoption of wireless mobile devices from the 2000s onward, the metric changed. For battery-powered devices, the time between two battery charges became almost as important as the processing power (in MIPS) delivered by the phone or smartphone. That’s why efficient but costly external power management solutions ($7 for the iPhone 6, up to $14 for the iPhone X) have been implemented in smartphones.

With the massive adoption of battery-powered systems expected in IoT, industrial and medical applications, power efficiency is becoming a mandatory requirement, together with low system cost. To reach these goals, SoCs will have to be architected for low power and integrate power management IP on-chip.

In fact, power consumption is also becoming a key concern in automotive applications (ADAS) and in server/network infrastructure. These applications will have to be much more energy efficient, for different reasons: reliability (automotive) or cost (raw electricity and cooling costs in data centers). In short, the entire semiconductor industry will have to offer more energy-efficient devices at the right cost. The way to do it is to define power-aware architectures and to design chips that integrate power management IP.

In this white paper, “New Power Management IP Solution from Dolphin Integration can dramatically increase SoC Energy Efficiency”, Dolphin Integration reviews all the solutions available to decrease SoC power consumption. The first technique, well known in the wireless mobile industry, is to define and manage power domains inside the SoC, but other techniques can be considered.

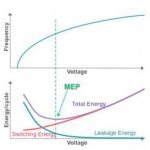

Dynamic voltage and frequency scaling (DVFS), near-threshold voltage operation, body biasing and globally asynchronous, locally synchronous (GALS) design are also reviewed and discussed in the paper. But a low-power SoC has much smaller noise margins, and special care must be taken to preserve signal integrity in such a SoC. In other words, you need experts to support you when implementing these new power management solutions.
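To see why DVFS is attractive, recall the usual first-order model of CMOS dynamic power, P ≈ α·C·V²·f, where α is the activity factor, C the switched capacitance, V the supply voltage and f the clock frequency. The sketch below is not taken from the white paper; it simply evaluates this textbook model for two hypothetical operating points (1.0 V / 500 MHz and 0.8 V / 400 MHz) to show how lowering voltage and frequency together cuts dynamic power.

```python
def dynamic_power(alpha: float, c_farads: float, v_volts: float, f_hz: float) -> float:
    """First-order CMOS dynamic power model: P = alpha * C * V^2 * f, in watts."""
    return alpha * c_farads * v_volts ** 2 * f_hz


# Hypothetical operating points for one voltage/frequency domain (illustrative values).
nominal = dynamic_power(alpha=0.1, c_farads=1e-9, v_volts=1.0, f_hz=500e6)
scaled = dynamic_power(alpha=0.1, c_farads=1e-9, v_volts=0.8, f_hz=400e6)

print(f"nominal: {nominal * 1e3:.1f} mW")  # 50.0 mW
print(f"scaled:  {scaled * 1e3:.1f} mW")   # 25.6 mW
print(f"ratio:   {scaled / nominal:.3f}")  # 0.512
```

Scaling both V and f by 0.8 gives 0.8³ ≈ 0.51 of the original dynamic power. Since a task then takes longer, the energy per task scales roughly with V² rather than V²·f; leakage, which this model ignores, becomes relatively more important at low voltage.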

In the second part of the paper, the author explains how to implement power management IP in a customer’s SoC. The design team has to start at the architecture level, by defining a “power-aware” SoC architecture. The designer’s first task will be to identify the functions belonging to the same power domain. A power domain is not simply defined by its voltage, but by the functionality of the blocks expected to take part in the same task in a given power mode.
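The functional criterion for power domains can be illustrated with a small, hypothetical example: if each block is tagged with the power modes in which it must stay powered, blocks that share exactly the same set of modes are natural candidates for the same power domain. The block names, mode names and the `group_into_domains` helper below are invented for this sketch; it is not the white paper’s method.

```python
from collections import defaultdict

# Hypothetical SoC blocks, each mapped to the power modes in which it must be powered.
BLOCK_MODES: dict[str, frozenset[str]] = {
    "rtc": frozenset({"active", "sleep", "deep_sleep"}),
    "wakeup_ctl": frozenset({"active", "sleep", "deep_sleep"}),
    "sram_ret": frozenset({"active", "sleep"}),
    "radio": frozenset({"active", "sleep"}),
    "cpu": frozenset({"active"}),
    "dsp": frozenset({"active"}),
}


def group_into_domains(block_modes: dict[str, frozenset[str]]) -> dict[frozenset[str], list[str]]:
    """Group blocks that are on in exactly the same set of power modes (hypothetical helper)."""
    domains: dict[frozenset[str], list[str]] = defaultdict(list)
    for block, modes in block_modes.items():
        domains[modes].append(block)
    return dict(domains)


for modes, blocks in group_into_domains(BLOCK_MODES).items():
    print(sorted(modes), "->", sorted(blocks))
```

Here the blocks on in every mode form an always-on domain, while the CPU and DSP, which are only needed in the active mode, can share one switchable domain. A real partitioning also weighs supply voltage, the area cost of power switches and isolation cells, and wake-up latency.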

Once you have split the chip into power islands (memory, logic, analog, always-on, etc.), it’s time to control, gate (power gating, clock gating) and distribute power and clocks in the SoC. This is done by implementing the various power management IPs that power the SoC core: the designer will select power switches, voltage regulators (VREG) or body-biasing generators, and clock gating and distribution, all part of the power network IP portfolio from Dolphin Integration.

Implementing SoC power mode control is made straightforward by a modular IP solution named Maestro. This power mode control IP is equivalent to an external power management IC (PMIC), similar to those integrated in a smartphone. Maestro manages the start-up power sequence and power mode transitions, and optimizes the power regulation network.
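Maestro’s internals are not described here, so the following is only a generic, hypothetical model of what a power mode controller sequences. Each power mode lists the switchable domains that must be on, and a transition powers domains down (gate clock, enable isolation, open power switch) before powering others up (close power switch, wait for the supply, release isolation, ungate clock). The mode, domain and step names and the `PowerModeController` class are assumptions for illustration, not Maestro’s interface.

```python
from dataclasses import dataclass, field

# Hypothetical step names for one power-gated domain. A real controller would
# also sequence resets, retention save/restore and regulator settling times.
POWER_DOWN_STEPS = ("gate_clock", "enable_isolation", "open_power_switch")
POWER_UP_STEPS = ("close_power_switch", "wait_supply_good", "release_isolation", "ungate_clock")

# Hypothetical power modes: switchable domains that are on in each mode
# (always-on logic is implicit and never switched).
MODES: dict[str, frozenset[str]] = {
    "active": frozenset({"cpu", "radio", "sram"}),
    "sleep": frozenset({"radio", "sram"}),
    "deep_sleep": frozenset(),
}


@dataclass
class PowerModeController:
    mode: str = "active"
    log: list[str] = field(default_factory=list)

    def transition(self, target: str) -> None:
        """Move to `target`, recording the per-domain steps in order."""
        if target not in MODES:
            raise ValueError(f"unknown power mode: {target}")
        current_on, target_on = MODES[self.mode], MODES[target]
        # Power domains down before powering others up, so both sets are never on together.
        for domain in sorted(current_on - target_on):
            self.log.extend(f"{domain}:{step}" for step in POWER_DOWN_STEPS)
        for domain in sorted(target_on - current_on):
            self.log.extend(f"{domain}:{step}" for step in POWER_UP_STEPS)
        self.mode = target


ctrl = PowerModeController()
ctrl.transition("sleep")   # powers the CPU domain down
ctrl.transition("active")  # powers it back up
print("\n".join(ctrl.log))
```

Driving every transition from one table of modes keeps the sequencing rules in a single place, which is the kind of centralization an on-chip power mode controller offers compared with ad hoc control spread across the SoC.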

Dolphin Integration claims faster time to market (TTM) and lower cost for customers who select its power management IP solution instead of trying to design a low-power SoC on their own. As the design team implements the power management controller (Maestro) as an IP in the SoC, the system bill of materials (BOM) is lower than with an external PMIC (remember the impact of the PMIC on the iPhone 6 and iPhone X BOM).

Faster TTM is made possible by technical support from expert engineers, from the top level (power-aware architecture definition) down to the design phase (implementation of the various power management IPs). This low-power design expertise helps avoid traps such as new noise issues caused by mode transitions in a SoC partitioned into power domains, or signal integrity susceptibility to crosstalk when operating at extremely low voltage.

In the golden age of Moore’s law, a chip maker only had to redesign for the next technology node to automatically benefit from higher performance and lower power consumption at the same time. Today, the semiconductor industry is addressing new applications such as edge IoT, wearable medical devices and many more, which require low-cost ICs, probably designed on mature technology nodes.

Frequently battery powered, these SoCs require ultra-low power consumption, which can only be reached by using power management techniques. In this white paper, Dolphin Integration describes these new techniques and how to implement power management IP from the architecture level down to the back end. This is not an easy task, especially the first time. That’s why SoC design teams need technical support from low-power design experts and a silicon-proven IP portfolio to release an energy-efficient device in line with time-to-market requirements.

To see the White Paper, go to this link

By Eric Esteve from IPnest