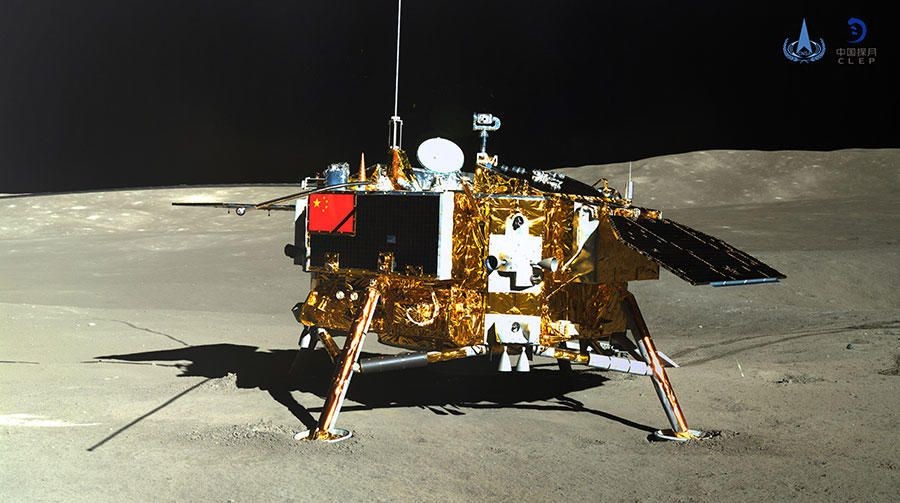

Until January 3, no spacecraft had ever landed on the “dark side” of the moon: the side that always faces away from the Earth. Seen only from orbit, its surface had largely remained a mystery. But no longer. The China National Space Administration successfully landed its Chang’e-4 probe in the South Pole-Aitken basin, the moon’s largest and deepest. Its lunar rover, Yutu-2, is sending home dozens of pictures so that we can see the soil, rocks, and craters for ourselves. Seeds the lander carried on the journey have also just germinated, making this the first time any biological matter from Earth has been cultivated on the moon.

Scientists had long speculated about the existence of water on the moon, which would be necessary to grow crops and build settlements. India’s Chandrayaan-1 satellite confirmed a decade ago that there was water in the moon’s exosphere, and in August 2018, data from the satellite helped NASA find water ice on the surface of the darkest and coldest parts of the lunar polar regions.

India’s Mangalyaan satellite went even further, to Mars, in 2014, and is sending back stunning images. Prime Minister Narendra Modi has promised a manned mission to space by 2022, and one Indian startup, Team Indus, has already built a lunar rover that can help with the exploration.

The Americans and Soviets may have started the space race in their quest for global domination, but China, India, Japan, and others have joined it. The most interesting entrants are entrepreneurs such as Elon Musk, Jeff Bezos, Richard Branson, and Team Indus’ Rahul Narayan. They are space explorers like the ones we saw in science fiction, driven by ego, curiosity, and a desire to make an impact on humanity. Technology has levelled the playing field so that even startups can compete and collaborate with governments.

In the fifty years since the Apollo 8 crew became the first to orbit the moon and return, the exponential advance of technology has dramatically lowered the entry barriers. The accelerometers, gyroscopes, and precision navigation systems that once cost millions and were national secrets are now available for a few cents on Alibaba. These are what enable Google Maps and Apple health apps to function, and they also make space travel possible.

Satellites, rockets, and rovers are also much more affordable.

The NASA space-shuttle program cost about $209 billion over its lifetime and made a total of 135 flights, for an average cost per launch of about $1.5 billion. NASA’s single-use rockets were priced in the hundreds of millions of dollars. Elon Musk’s company SpaceX now offers launch services for $62 million on its reusable Falcon 9 rockets, which can carry a load of 4,020 kg. And yes, discounts are available for bulk. Team Indus built its lunar rover with only $35 million of funding and a ragtag team of engineers in Bangalore.
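For readers who want to check the arithmetic, here is a minimal sketch that reworks the figures quoted above. The function and variable names are illustrative only, and the comparison is rough: it sets the shuttle program's lifetime cost per flight against a commercial list price, which are not like-for-like numbers.

```python
# Figures as quoted in the article (US dollars and kilograms).
SHUTTLE_PROGRAM_COST = 209e9   # lifetime cost of the shuttle program
SHUTTLE_FLIGHTS = 135          # total shuttle flights
FALCON9_PRICE = 62e6           # quoted Falcon 9 launch price
FALCON9_PAYLOAD_KG = 4020      # payload figure quoted in the article


def average_cost_per_flight(total_cost: float, flights: int) -> float:
    """Spread a program's lifetime cost evenly over its flights."""
    return total_cost / flights


def price_per_kg(price: float, payload_kg: float) -> float:
    """Launch price divided by the payload mass carried."""
    return price / payload_kg


shuttle_avg = average_cost_per_flight(SHUTTLE_PROGRAM_COST, SHUTTLE_FLIGHTS)
falcon_per_kg = price_per_kg(FALCON9_PRICE, FALCON9_PAYLOAD_KG)
# How many quoted Falcon 9 launches one average shuttle flight would buy.
launch_ratio = shuttle_avg / FALCON9_PRICE

print(f"Shuttle average per flight: ${shuttle_avg / 1e9:.2f} billion")
print(f"Falcon 9 price per kg:      ${falcon_per_kg:,.0f}")
print(f"Shuttle flight / Falcon 9:  {launch_ratio:.0f}x")
```

On these numbers, an average shuttle flight works out to roughly $1.55 billion, the quoted Falcon 9 price to about $15,400 per kilogram, and one shuttle flight to about 25 Falcon 9 launches.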

NASA catalyzed the creation of technologies as diverse as home insulation, miniature cameras, CAT scans, LEDs, landmine removal, athletic shoes, foil blankets, water-purification technology, ear thermometers, memory foam, freeze-dried food, and baby formula. We can expect the new forays into space to yield even more. The opportunities are endless: biological experimentation, resource extraction, figuring out how to live on other planets, space travel, and tourism. The technologies will include next-generation nanosatellites, image sensors, GPS, communication networks, and a host of innovations we haven’t conceived of yet. We can also expect to be manufacturing in space and 3D-printing buildings for space colonies.

Developments on each of these frontiers will provide new insights and innovations for life on Earth. Learning about growing plants on the moon can help us to grow plants in difficult conditions on this planet. The buildings NASA creates for Mars will be a model for housing in extreme climates.

As with every technological advance, there are also new fears and risks. Next-generation imagery can provide military advantages through intelligence gathering. The military already has an uncanny ability to track specific people and watch them in incredible detail. For any sort of space station or base on another planet or moon, there is the question of who sets the rules, standards, and language that are used in outer space. Then there’s the larger question of whose ethical and social values will guide the space communities of the future, and the even larger question of whether places beyond Earth are ethically claimable as property at all.

Regardless of the risks, the era of space exploration has begun and we can expect many exciting breakthroughs. We can also start dreaming about the places we want to visit in the heavens.

For more on how we can create the amazing future of Star Trek, please read my book: The Driver in the Driverless Car