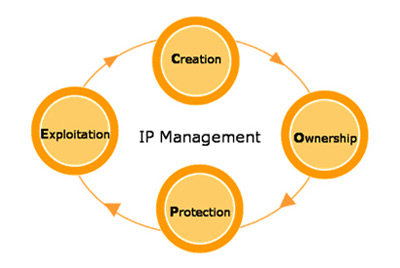

Methodics has been a key player in IP management for over 10 years. In this section, Methodics shares its history, its technology, and its role in developing IP Lifecycle Management (IPLM) solutions for the electronics industry.

Methodics is recognized as a premier provider of IP Lifecycle Management (IPLM) and traceability solutions for the enterprise. Its solutions enable high-performance analog/mixed-signal, digital, software, and SoC design collaboration across multi-site and multi-geographic semiconductor design teams, and let those teams track the usage of their important design assets.

The journey started in 2006, when Methodics was founded by two ex-Cadence experts in the custom IC design tools space, Simon Butler and Fergus Slorach. After leaving Cadence, they had started a consulting company called IC Methods, which was active in Silicon Valley from 2000 to 2006. As their consulting business grew, they needed to create a new company to service an engagement that had turned into a product for analog data management. With IP management in their DNA, they reused the IP in their consulting company name, and Methodics was born!

Methodics’ first customer was Netlogic Microsystems, which was later acquired by Broadcom. Netlogic used the first commercial product developed by Methodics, VersIC, which provides analog design data management for Cadence Virtuoso. The development of VersIC was unique in that Methodics did not also have to develop an underlying data management layer, as the first-generation design data management companies in the semiconductor industry had to. During the late 1990s and early 2000s, a number of data management solutions had entered the market. Some of these solutions were open source, such as Subversion, and others were commercially available, like Perforce. These solutions had developed very robust data management offerings and were in use by hundreds of thousands of users in multiple industries.

To leverage these successful data management solutions, Methodics made the architectural decision to build a client layer on top of these products. This allowed the team to focus its engineering efforts on developing a unique, full-featured client rather than developing and maintaining a design data management layer of its own. Customers benefited from this arrangement by getting a full-featured client integrated directly into the Virtuoso environment, together with a robust, widely used data management layer, without necessarily having to concern themselves with the inner workings of the data management system.

It wasn’t too long before Methodics’ customers started asking for a solution that could be used in the digital domain as well. With more companies adopting design reuse methodologies and using third-party IP, Methodics decided to deliver not only a solution for digital design, but also one that could be used to manage and track IP reuse throughout a company. This led to the development of ProjectIC, which could be used for both digital and analog design.

ProjectIC was an enterprise solution for releasing IPs and cataloging them for reuse, integrating SoCs, tracking bugs across IPs, and managing permissions. ProjectIC also allowed for comprehensive auditing of IP usage and user workspaces. With ProjectIC, managers could assemble configurations of qualified releases as part of the larger SoC and make them available for designers to build their workspaces. Workspace management was a key technology within ProjectIC as well, and Methodics created a caching function to allow data to be populated in minimal time. Like VersIC before it, ProjectIC was built on top of the growing number of solutions available for data management, which allowed customers to integrate it quickly into their development methodologies, especially if their design teams had already adopted a commercially available data management system.

In 2012, Methodics acquired Missing Link Software, which had developed Evolve, a test, regression, and release management tool focused on the digital space. Evolve tracked the entire design test history and provided audit capabilities showing which tests were run, when, and by whom. These results were associated with DM releases and provided a way to gate releases based on the quality required at that point in the design’s schedule.

With the acquisition of Missing Link, Methodics began to focus on the traceability of design information throughout the entire development process. While the core Methodics solutions could keep track of who was developing IP, who was using which releases in which designs, and which designs were taped out using specific releases, customers wanted even more visibility into the lifecycle of the IP. They wanted to know what requirements were used in developing the IP, whether it was internally developed or acquired, which versions of the IP incorporated which features based on those requirements, and how the IP was tested, verified, and integrated into the design. Customers needed not only an IP management solution, but also a methodology they could adopt to track the lifecycle of an IP.

In 2017, Methodics released the Percipient platform, its second-generation IP Lifecycle Management solution. Percipient built on the success of ProjectIC, but also began to allow for integrations with other engineering systems. To fully track an IP’s lifecycle, Percipient created integrations with requirements management systems, issue and defect systems, program and project management systems, and test management systems. These integrations allow for a fully traceable environment covering the lifecycle of an IP, from requirements, through design, to verification. Users of the Percipient platform can track not only where an IP is used and which version is being used, but also what requirements were used in its development, any outstanding issues it might have and which other projects are affected, and whether it is meeting requirements based on current verification information.

Today, Methodics continues to develop solutions for fully traceable IP lifecycle management, as well as solutions for mission-critical industries that require strict adherence to functional safety standards, such as automotive (ISO 26262) and aerospace (DO-254). Methodics is also working on solutions to increase engineering productivity. With workspaces growing exponentially, Methodics is developing solutions like WarpStor, which virtualizes engineering workspaces and drastically reduces data storage requirements while increasing available network bandwidth. With the adoption of cloud computing by semiconductor companies, Methodics is also working on solutions to help customers work with hybrid compute environments that combine on-premises and cloud-based resources. Just as it was in 2006, Methodics’ goal is to bring value to engineering teams by making the development environment more efficient through close collaboration and the optimization of resources.