My first professional experience with computers and file permissions was at Intel in the late 1970s, where we used big-iron IBM mainframes located in another state, and each user could edit their own files and browse shared files from co-workers in the same department. I saw this same file permission concept when using computers from DEC, Wang, Apollo, Sun, Solbourne, HP and others. Even my MacBook Pro runs an OS built on the Mach kernel and BSD UNIX, so it’s very familiar to me when using the command line. SoC designers today use Linux and UNIX-based computers on their desktops, networks, private clouds or public clouds, and these all have file permissions to help organize how teams share files while the IT group administers policies.

For an IP-based SoC we need something to help us manage access and track usage of all of those IP blocks, so the concept of IP Lifecycle Management (IPLM) tools arose, served by enterprise solutions vendors like Methodics. Using an IPLM approach means that there is one centralized repository for an SoC design, so that users can get a Bill of Materials (BOM) and know where each IP block is being used. Just as files have permissions, each IP block has permissions with IPLM as a way to bring order and allow the IT group to assign roles like Read access or Write access to trusted engineers on a team.

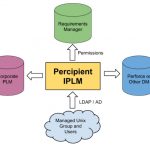

An ideal IPLM system should be a single source of truth, managing IP, related databases, corporate PLMs, requirement managers and even bug trackers. It turns out that Methodics does have an IPLM tool called Percipient that aims to fulfill these ideals. Let’s take a quick look at how the Percipient IPLM approach connects to low-level files, requirements manager, Data Management (DM) systems and PLM tools:

Just as UNIX lets you set Read, Write and Execute permissions on each file for its owner, its group and everyone else, Percipient lets you assign Read, Write or Owner permissions to users or groups of users for each IP block within the company. In UNIX you’ve already defined the concepts of Users and Groups, so that info can be re-used within Percipient to enable permissions for each IP block.
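For readers who want a reminder of the UNIX side of this analogy, here is a minimal Python sketch using only the standard `stat` module. It builds the mode bits for "owner can read and write, group can read, everyone else gets nothing" and renders them the way `ls -l` would. The helper names `symbolic` and `group_can_read` are made up for this illustration and have nothing to do with Percipient.

```python
import stat

# Owner may read and write, group may read, others get no access (octal 0o640).
OWNER_RW_GROUP_R = stat.S_IRUSR | stat.S_IWUSR | stat.S_IRGRP


def symbolic(mode_bits):
    """Render permission bits for a regular file as an `ls -l` style string."""
    return stat.filemode(stat.S_IFREG | mode_bits)


def group_can_read(mode_bits):
    """Return True when the group-read bit is set."""
    return bool(mode_bits & stat.S_IRGRP)


print(symbolic(OWNER_RW_GROUP_R))        # -rw-r-----
print(group_can_read(OWNER_RW_GROUP_R))  # True
```

The same idea of "who" (user or group) paired with "what" (read, write) is what carries over to permissions on an IP block.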

Percipient also has the concept of hierarchy, meaning that one IP block may itself contain one or many lower-level IP blocks, and you get to define the IP permissions per user and group at each level. If your team has contractors, then it makes sense to restrict their access to any sensitive IP block details. An admin using Percipient can also grant permissions across an entire IP hierarchy using a single command, so IP access can be updated quickly as your project changes.
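To make the hierarchy idea concrete, here is a small Python sketch of granting a permission to a block and everything beneath it in one call. This is an illustrative model only, not Percipient’s implementation or command syntax: the `IPBlock` class, the `grant_recursive` function, and the block and group names are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class IPBlock:
    """Hypothetical IP block: a name, child blocks, and per-principal permissions."""
    name: str
    children: list["IPBlock"] = field(default_factory=list)
    # Maps a user or group name to a set of permissions such as {"read", "write"}.
    permissions: dict[str, set[str]] = field(default_factory=dict)


def grant_recursive(block, principal, permission):
    """Grant one permission on a block and every block below it.

    Returns the names of all blocks touched, in top-down order.
    """
    block.permissions.setdefault(principal, set()).add(permission)
    touched = [block.name]
    for child in block.children:
        touched.extend(grant_recursive(child, principal, permission))
    return touched


# Hypothetical hierarchy: soc_top contains usb_controller (which contains usb_phy) and cpu_core.
phy = IPBlock("usb_phy")
usb = IPBlock("usb_controller", children=[phy])
cpu = IPBlock("cpu_core")
soc = IPBlock("soc_top", children=[usb, cpu])

# The design team reads the whole hierarchy; contractors only see the USB subtree.
print(grant_recursive(soc, "design_team", "read"))
print(grant_recursive(usb, "contractors", "read"))
print("contractors" in cpu.permissions)  # False
```

Granting at a lower level of the tree, as in the contractor example, is one way to keep a sensitive block such as the hypothetical `cpu_core` out of reach.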

IP permissions are set by knowing who is working on a project, and also which IPs are being used on a project. Engineers who are working on a project will be part of the same UNIX group, so Percipient syncs with your LDAP/AD system to know which engineers belong to each group. To add an engineer to your project or remove one, just update their UNIX group.

Once Percipient knows which UNIX groups are being used, you can define which IP blocks each group gets Read, Write or Owner permission on. The IP hierarchy permissions are also defined by an admin, either all at once or by hierarchy level. You define group membership using UNIX and it gets synced within Percipient, so it’s always up to date.

An IP block that is used by multiple users in different project hierarchies can carry different permissions for different project groups, so it’s flexible enough to meet your unique project needs.

With Percipient there’s a convenient, centralized place to view both project and file permissions. Percipient consistently applies permissions into the underlying DM system, whether that is a Perforce IP or another DM. Engineers can see and modify only the IP blocks for which they have been granted permission.

IP blocks that are changed or re-used in different contexts always have their file permissions in sync with the DM tool.

Permission management for bug tracking tools like Jira, or a Wiki manager such as Confluence, can also be performed by Percipient, extending the utility of a centralized approach.

Let’s say that you wanted to find out the project BOM along with all permissions attached to each IP contained in the BOM. With the Percipient tool there’s a RESTful public API, and here’s an example using the command line, along with the output results:

The results tell us that the users of group “proj_yosemite” have Read permission to the IP, and that user “sasha” has Read, Write and Owner permissions to the IP. Using this API makes it straightforward for CAD engineers to integrate Percipient with other software tools that use permissions.
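As a rough illustration of how a CAD engineer might reason about a result like this in their own scripts, the Python sketch below combines per-user grants with group grants to work out what a given engineer can do. Everything here is an assumption made for illustration: the dictionary layout is not the Percipient API’s actual output format, the group members “lee” and “kim” are invented, and combining user and group grants by union is a modeling choice rather than documented Percipient behavior.

```python
# Hypothetical grants on one IP, mirroring the result described above.
ip_grants = {
    "group:proj_yosemite": {"read"},
    "user:sasha": {"read", "write", "owner"},
}

# Hypothetical group membership, as it might look after syncing from LDAP/AD.
group_members = {
    "proj_yosemite": {"lee", "kim"},
}


def effective_permissions(user, grants, members):
    """Union of the user's direct grants and the grants of each group they belong to."""
    permissions = set(grants.get(f"user:{user}", set()))
    for group, users in members.items():
        if user in users:
            permissions |= grants.get(f"group:{group}", set())
    return permissions


print(sorted(effective_permissions("sasha", ip_grants, group_members)))  # ['owner', 'read', 'write']
print(sorted(effective_permissions("lee", ip_grants, group_members)))    # ['read']
print(sorted(effective_permissions("pat", ip_grants, group_members)))    # []
```

In this model, removing an engineer from the UNIX group is enough to drop their Read access, which matches the group-driven workflow described earlier.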

Summary

Both operating systems and IPLM systems have come a long way over the years, making the life of SoC engineers a bit easier by using automation to help manage hierarchy in IP blocks, along with syncing up with DM, project requirements and bug tracking tools. Your BOM can now be maintained in a single tool, along with managing all of the permissions to each IP. For more details there’s a 10-page white paper available on the Methodics web site.
