In the rapidly evolving world of Radio Frequency Integrated Circuits (RFIC), the challenge has always been to design efficient, high-performance components quickly and accurately. Traditional methods, while effective, are complex and require lengthy iteration. Today, we’re excited to unveil RFIC-GPT, a groundbreaking tool that transforms RFIC design through the power of generative AI.

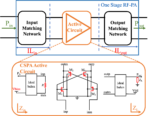

RF chips are known as the crown jewel of analog chips. RF circuits typically contain not only active circuits, i.e., circuits composed mostly of active devices such as transistors, but also a large number of passive components such as inductors, transformers and matching networks. Fig. 1 shows an example of a one-stage RF power amplifier (PA). The active part of the circuit is a differential common-source PA with cross-coupled varactors, and it is connected to an input matching network and an output matching network. The matching networks are usually a combination of passive devices such as inductors, capacitors and transformers connected in an optimized configuration.

To design such an RF circuit, both the devices in the active circuit and the passive layout patterns in the matching networks need to be optimized. The conventional RFIC design flow is shown in the top half of Fig. 2. On one hand, the active circuits must first be designed and simulated, both as schematics and as layouts. On the other hand, the passive components and circuits are iterated repeatedly using physics-based, time-consuming electromagnetic (EM) simulation of their layouts, which makes them a key challenge in RF design.

Thereafter, the parasitic parameters of the entire layout are extracted and post-layout simulations are run and compared against the design specifications (Specs). Finally, the active circuits and the layouts of the passive circuits are both re-adjusted and re-simulated, and the results are compared again. This process is iterated many times until the design Specs are achieved. The main difficulties of RFIC design can be attributed to:

(1) large design search space of both active and passive circuits;

(2) lengthy and tedious EM simulation required;

(3) interactions between active and passive circuits, and between the RFIC and its surroundings, which demand numerous iterations and optimizations.

Therefore, the traditional RFIC design flow typically takes a lot of human effort, and the design quality achievable within a limited time depends largely on the experience of the individual IC designer.

Recently, generative AI has been researched and explored extensively for generating content including, but not limited to, dialogue, pictures and program code. Analogously, generative AI is also being considered for RFIC design automation. The bottom half of Fig. 2 shows an example RFIC design flow assisted by generative AI. Essentially, the behavior of small circuit components can be lumped into models, so that lengthy simulations can be omitted.

Additionally, the solution-searching “experience” of RFIC design can be “learned”, and the solutions, i.e., the initial RFIC schematics and layouts, can be quickly “generated”. Importantly, the simulated results of the AI-generated RFIC circuits can already be close to the design Specs, so IC design engineers only need to do some final optimization and verification simulations before the circuits can be used in RFIC design blocks for tape-out. This methodology saves a large number of simulation iterations and drastically improves design efficiency. Furthermore, the results are more consistent from run to run, since the task is performed by an “emotionless” computer.
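The two ideas above, replacing a slow simulation with a fitted model and then searching that model for a starting design, can be illustrated with a minimal Python sketch. Everything in it is an assumption made for illustration: `slow_em_inductance_nh` is a toy closed form standing in for an EM solver, and the interpolation table and bisection search are simple stand-ins, not the models or algorithms used by RFIC-GPT.

```python
import bisect
import math


def slow_em_inductance_nh(radius_um: float) -> float:
    """Stand-in for an expensive EM solve (toy closed form, NOT a real solver)."""
    # Hypothetical smooth, monotonic relation between coil radius and inductance.
    return 0.002 * radius_um * math.log(1.0 + radius_um / 10.0)


class TableSurrogate:
    """Piecewise-linear model built once from a handful of 'simulated' samples."""

    def __init__(self, xs, ys):
        self.xs = list(xs)
        self.ys = list(ys)

    def predict(self, x: float) -> float:
        # Clamp to the sampled range, then interpolate between neighbours.
        x = min(max(x, self.xs[0]), self.xs[-1])
        i = min(max(bisect.bisect_right(self.xs, x), 1), len(self.xs) - 1)
        x0, x1 = self.xs[i - 1], self.xs[i]
        y0, y1 = self.ys[i - 1], self.ys[i]
        return y0 + (y1 - y0) * (x - x0) / (x1 - x0)


def propose_radius(model: TableSurrogate, target_nh: float, lo: float, hi: float) -> float:
    """Bisection on the surrogate (assumes inductance grows with radius)."""
    for _ in range(60):
        mid = 0.5 * (lo + hi)
        if model.predict(mid) < target_nh:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


# Pay the "simulation" cost once, on a coarse grid of radii (in micrometres).
radii = [20.0 + 10.0 * i for i in range(19)]  # 20 .. 200 um
model = TableSurrogate(radii, [slow_em_inductance_nh(r) for r in radii])

# Generate a starting point for a 0.5 nH target, then verify it with one real solve.
target = 0.5
radius = propose_radius(model, target, radii[0], radii[-1])
verified = slow_em_inductance_nh(radius)
print(f"proposed radius: {radius:.1f} um, verified L: {verified:.3f} nH")
```

The search itself never calls the slow function; only the final candidate is checked with a single "real" solve, which mirrors the final verification step described above.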

RFIC-GPT, an AI-based RFIC design automation tool and a pioneer among intelligent chip design solutions, has now been launched. Using RFIC-GPT, GDSII files or schematic diagrams of RF devices and circuits that meet design specifications (such as the Q/L/k of a transformer; the matching S11 and insertion loss IL of a matching circuit; the gain and OP1dB of a PA) can be generated directly by the AI algorithm engine. It reduces simulation iterations by over 50%, accelerating the journey from concept to production. This tool is not just about speed; it’s about precision. It generates optimized layouts and schematics that meet design specifications with up to 95% accuracy, ensuring high-quality results with fewer revisions.
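For readers less familiar with the figures of merit named above, the short Python sketch below computes several of them from their standard textbook definitions and checks them against a set of targets. The function names, the spec dictionary format and the numeric values are hypothetical choices for this example; they do not describe RFIC-GPT's actual input format.

```python
import math


def inductor_q_and_l(z: complex, freq_hz: float):
    """Quality factor and inductance (H) from a one-port series impedance R + jwL."""
    omega = 2.0 * math.pi * freq_hz
    return z.imag / z.real, z.imag / omega


def coupling_k(mutual_h: float, l1_h: float, l2_h: float) -> float:
    """Transformer coupling coefficient k = M / sqrt(L1 * L2)."""
    return mutual_h / math.sqrt(l1_h * l2_h)


def s11_db(z_load: complex, z0: float = 50.0) -> float:
    """Input match in dB from the reflection coefficient (Z - Z0) / (Z + Z0)."""
    gamma = abs((z_load - z0) / (z_load + z0))
    return -math.inf if gamma == 0.0 else 20.0 * math.log10(gamma)


def insertion_loss_db(s21: complex) -> float:
    """Insertion loss in dB, reported as a positive number: -20 * log10(|S21|)."""
    return -20.0 * math.log10(abs(s21))


def meets_specs(measured: dict, specs: dict) -> bool:
    """Each spec is (kind, limit): 'min' requires measured >= limit, 'max' <= limit."""
    for name, (kind, limit) in specs.items():
        value = measured[name]
        if kind == "min" and value < limit:
            return False
        if kind == "max" and value > limit:
            return False
    return True


# Hypothetical targets and hypothetical simulated values at 5 GHz.
q, l_h = inductor_q_and_l(complex(2.0, 20.0), 5e9)
measured = {
    "Q": q,
    "k": coupling_k(1e-9, 2e-9, 2e-9),
    "S11_dB": s11_db(complex(45.0, 5.0)),
    "IL_dB": insertion_loss_db(0.9),
}
specs = {"Q": ("min", 8.0), "k": ("min", 0.4), "S11_dB": ("max", -15.0), "IL_dB": ("max", 1.5)}
print(measured, meets_specs(measured, specs))
```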

What sets RFIC-GPT apart? Unlike traditional tools that rely heavily on manual input and trial-and-error, RFIC-GPT leverages AI to predict and optimize design outcomes, making the process faster and more reliable. This means designers can focus more on innovation and less on the repetitive tasks that often slow down development.

In conclusion, RFIC-GPT represents a significant leap forward in RFIC design technology. By harnessing the power of AI, it offers unprecedented efficiency, accuracy, and ease of use. We’re proud to introduce this innovative tool and are excited about the potential it holds for the future of RFIC design. Join us in this revolution, try RFIC-GPT today, and take the first step towards more efficient, accurate, and innovative RFIC designs. The author encourages designers to try RFIC-GPT online (www.RFIC-GPT.com) and give feedback. Using RFIC-GPT takes only three steps:

(1) Input your design Specs and requirements;

(2) Consider the design trade-offs and choose the appropriate GDSII or active design;

(3) Click download for your application.
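The trade-off consideration in step (2) can be pictured as picking from a set of candidates in which no single design is best on every axis. The Python sketch below filters a hypothetical candidate list down to its Pareto front for two assumed metrics, quality factor and layout area. The candidate names, metrics and values are invented for illustration and are not RFIC-GPT output.

```python
from typing import NamedTuple


class Candidate(NamedTuple):
    name: str
    q_factor: float   # higher is better
    area_um2: float   # lower is better


def pareto_front(cands):
    """Keep candidates that no other candidate matches or beats on both Q and area."""
    def dominates(a, b):
        return (a.q_factor >= b.q_factor and a.area_um2 <= b.area_um2
                and (a.q_factor > b.q_factor or a.area_um2 < b.area_um2))
    return [c for c in cands if not any(dominates(o, c) for o in cands)]


# Hypothetical candidate layouts for the same inductor spec.
candidates = [
    Candidate("A", 18.0, 40000.0),
    Candidate("B", 15.0, 25000.0),
    Candidate("C", 14.0, 30000.0),  # worse than B on both axes
    Candidate("D", 11.0, 16000.0),
]
front = pareto_front(candidates)
print([c.name for c in front])
```

Candidate C drops out because B offers both a higher Q and a smaller area. Choosing among the remaining candidates is the designer's call, for example the highest Q that still fits the available floorplan.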

Author:

Jason Liu is a senior researcher working on design automation solutions for RFIC. He holds a Ph.D. degree in Electrical Engineering and has been in the EDA industry for more than 15 years.
