In a hand-waving way it’s easy to answer why any hardware goes custom (ASIC): faster, lower power, more opportunity for differentiation, and sometimes lower cost, though price isn’t always a primary factor. But I wanted to do a bit better than hand-waving, especially because these ML hardware architectures can become pretty exotic, so I talked to Kurt Shuler, VP Marketing at Arteris IP, and I found a useful MIT tutorial paper on arXiv. Between these two sources, I think I have a better idea now.

Start with the ground reality. Arteris IP has a bunch of named customers doing ML-centric design, including for example Mobileye, Baidu, HiSilicon and NXP. Since they supply network on chip (NoC) solutions to those customers, they have to get some insight into the AI architectures that are being built today, particularly where those architectures are pushing the envelope. What they see and how they respond in their products is revealing.

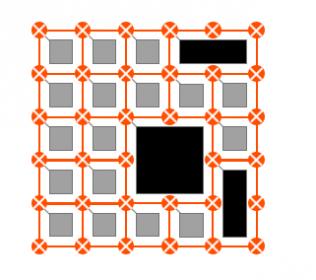

I talked a bit about this in an earlier blog (On-Chip Networks at the Bleeding Edge of ML). There is still very active development, in CPUs and GPUs, around temporal architectures, primarily to drive performance: single instruction, multiple data (SIMD) fed into parallel banks of ALUs. More intriguing, there is rapidly growing development around spatial architectures, where specially designed processing elements are arranged in a grid or some other topology. Data flows through the grid between processors, though flow is not necessarily restricted to neighbor-to-neighbor communication. The Google TPU is in this class; Kurt tells me he is seeing many more of this class of design appearing in his customer base.

Why prefer such strange structures? Surely the SIMD approach is simpler and more general-purpose? Neural net training provides a good example to support the spatial approach. In training, weights have to be updated iteratively through a hill-climbing optimization, aiming at maximizing a match to a target label (there are more complex examples, but this is good enough here). Part of this generally requires a back-propagation step where certain values are passed backwards through the network to determine how much each weight influences the degree of mismatch (read here to get a more precise description).

Which means intermediate network values need to be stored to support that back-propagation. For performance and power you’ll want to cache data close to compute. Since processing is massively distributed, you need multiple levels of storage, with register file storage (perhaps 1 kB) attached to each processing element, then layers of larger shared caches, and ultimately DRAM. The DRAM storage is often high-bandwidth memory, perhaps stacked on or next to the compute die.
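To see why those intermediate values have to be kept around, here is a minimal sketch of one training loop. Everything in it is an assumption made for illustration: a toy two-weight network with a tanh activation, a squared-error loss, and the hypothetical names `forward`, `backward`, `loss` and `train`. It is not taken from the MIT tutorial or from any real accelerator; the only point is that the backward pass reuses the activation `h` saved during the forward pass.

```python
import math

def forward(x, w1, w2):
    """Forward pass: return the output and the intermediate activation."""
    h = math.tanh(w1 * x)  # intermediate value that must be stored
    y = w2 * h
    return y, h

def backward(x, target, w2, y, h):
    """Backward pass for loss = 0.5 * (y - target)**2, using the stored h."""
    dy = y - target                # d(loss)/dy
    dw2 = dy * h                   # needs the stored activation
    dh = dy * w2                   # error passed back through the second weight
    dw1 = dh * (1.0 - h * h) * x   # tanh'(z) = 1 - tanh(z)**2, again from h
    return dw1, dw2

def loss(x, target, w1, w2):
    y, _ = forward(x, w1, w2)
    return 0.5 * (y - target) ** 2

def train(x, target, w1=0.5, w2=0.5, lr=0.1, steps=200):
    """Plain gradient descent on the two weights (illustrative settings)."""
    for _ in range(steps):
        y, h = forward(x, w1, w2)
        dw1, dw2 = backward(x, target, w2, y, h)
        w1 -= lr * dw1
        w2 -= lr * dw2
    return w1, w2

if __name__ == "__main__":
    before = loss(1.0, 0.8, 0.5, 0.5)
    w1, w2 = train(1.0, 0.8)
    print(before, loss(1.0, 0.8, w1, w2))
```

In a real network `h` is a large tensor per layer rather than a single number, which is what drives the demand for storage close to each processing element.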

Here it gets really interesting. The hardware goal is to optimize the architecture for performance and power for neural nets, not for general-purpose compute. Strategies are based on operation ordering, e.g. minimizing updates of weights or partial sums, or minimizing the size of local memory by providing methods to multicast data within the array. The outcome, at least here, is that the connectivity in an optimized array is probably not going to be homogeneous, unlike a traditional systolic array. Which I’m guessing is why every reference I have seen assumes that the connectivity is NoC-based. Hence Arteris IP’s active involvement with so many design organizations working on ML accelerators.
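As a rough feel for why multicast matters, consider a deliberately simplified model: one source at the edge of a single row of processing elements needs to deliver the same value to every element in that row. The function names and the cost model (counting link traversals only) are assumptions for illustration, not a description of how any particular NoC behaves.

```python
def unicast_link_traversals(n):
    """Source sends a separate copy to each of n elements in a row.

    The copy for the element k hops away crosses k links, so the
    total is 1 + 2 + ... + n.
    """
    return sum(range(1, n + 1))

def multicast_link_traversals(n):
    """One copy travels down the row and is dropped off at each element.

    Each of the n links in the row is crossed exactly once.
    """
    return n

if __name__ == "__main__":
    for n in (4, 8, 16):
        print(n, unicast_link_traversals(n), multicast_link_traversals(n))
```

Under this toy model the unicast traffic grows quadratically with row length while multicast grows linearly, which hints at why multicast support shows up as an interconnect requirement.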

More interesting still are architectures to support recurrent NNs (RNNs). Here the network needs to support feedback. An obvious way to do this is to connect the right side of the mesh to the left side, forming (topologically) a 3D cylinder. You can then connect the top side to the bottom side, making (again topologically) a torus. Since Kurt tells me he hears about these topologies from a lot of customers, I’m guessing a lot of folks are building RNN accelerators. (If you’re concerned about how such structures are built in semiconductor processes, don’t be. These are topological equivalents, but the actual structure is still flat. Geometrically, feedback is still routed in the usual way.)
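The mesh, cylinder and torus differ only in which edges wrap around, which a few lines of code can make concrete. This is a sketch under stated assumptions: a rectangular grid of processing elements addressed by (row, column), nearest-neighbor links only, and the hypothetical helpers `neighbors` and `hops`. Real designs need not be this regular.

```python
def neighbors(r, c, rows, cols, wrap_cols=False, wrap_rows=False):
    """Return the sorted grid neighbors of element (r, c).

    wrap_cols joins the left and right edges (cylinder);
    adding wrap_rows joins top and bottom as well (torus).
    """
    found = set()
    for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
        nr, nc = r + dr, c + dc
        if wrap_rows:
            nr %= rows
        if wrap_cols:
            nc %= cols
        if 0 <= nr < rows and 0 <= nc < cols and (nr, nc) != (r, c):
            found.add((nr, nc))
    return sorted(found)

def hops(a, b, rows, cols, wrap_cols=False, wrap_rows=False):
    """Minimum number of links between elements a and b."""
    dr = abs(a[0] - b[0])
    dc = abs(a[1] - b[1])
    if wrap_rows:
        dr = min(dr, rows - dr)  # going around the wrapped edge may be shorter
    if wrap_cols:
        dc = min(dc, cols - dc)
    return dr + dc

if __name__ == "__main__":
    print(neighbors(0, 0, 4, 4))                                  # plain mesh corner
    print(neighbors(0, 0, 4, 4, wrap_cols=True))                  # cylinder
    print(neighbors(0, 0, 4, 4, wrap_cols=True, wrap_rows=True))  # torus
    print(hops((0, 0), (3, 3), 4, 4))
    print(hops((0, 0), (3, 3), 4, 4, wrap_cols=True, wrap_rows=True))
```

On a 4x4 grid the corner element goes from two neighbors in the mesh to four in the torus, and the corner-to-corner distance drops from six hops to two, even though the silicon itself stays flat.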

I see two significant takeaways from this:

- Architecture is driven from top-level software goals and is more finely tuned to the NN objective than you will find in any traditional application processor or accelerator; under these circumstances, there is no one “best” architecture. Each team is going to optimize to their goals.

- What defines these architecture topologies, almost more than anything else, is the interconnect. This has to be high-throughput, low-power, routing-friendly and very highly configurable, down to routing node by routing node. There needs to be support for multicast, for embedding local cache where needed, and for high-performance connectivity to high-bandwidth memory. And of course in implementation, designers need efficient tools to plan this network for optimal floorplan, congestion and timing across some very large designs.

I hope Kurt’s insights and my own voyage of discovery added a little more to your understanding of what’s happening in this highly dynamic space. You can learn more about what Arteris IP is doing to support these leading-edge ML design teams HERE. They certainly seem to be in a pretty unique position in this area.
