Interesting read from IEEE:

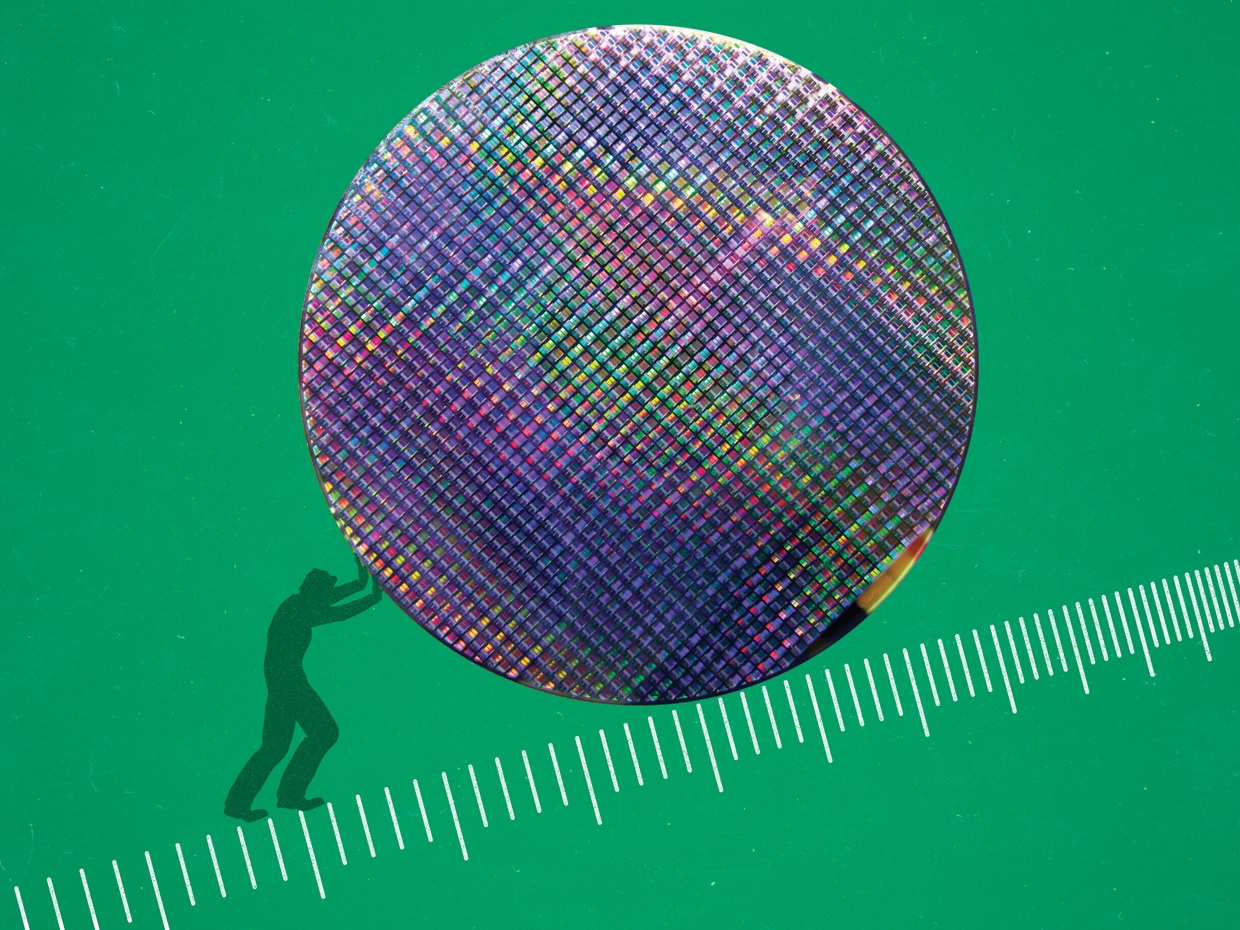

A Better Way to Measure Progress in Semiconductors

It’s time to throw out the old Moore’s Law metric

One of the most famous maxims in technology is, of course, Moore’s Law. For more than 55 years, the “Law” has described and predicted the shrinkage of transistors, as denoted by a set of roughly biannual waypoints called technology nodes. Like some physics-based doomsday clock, the node numbers have ticked down relentlessly over the decades as engineers managed to regularly double the number of transistors they could fit into the same patch of silicon. When Gordon Moore first pointed out the trend that carries his name, there was no such thing as a node, and only about 50 transistors could economically be integrated on an IC.....

