On Monday, June 25, DAC’s opening day, I attended a Cadence-sponsored lunch panel. Ann Steffora Mutschler (Semiconductor Engineering) moderated, and the panelists were Jim Hogan (Vista Ventures), David Lacey (HP Enterprise), Shigeo Oshima (Toshiba Memory Corp), and Paul Cunningham (Cadence).

[Note: these are highlights, not a full transcript, with some paraphrasing]

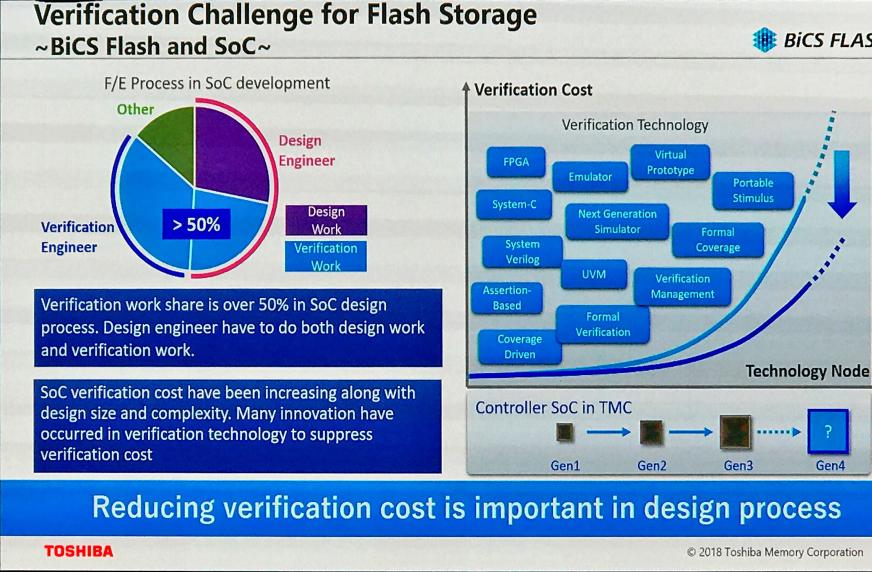

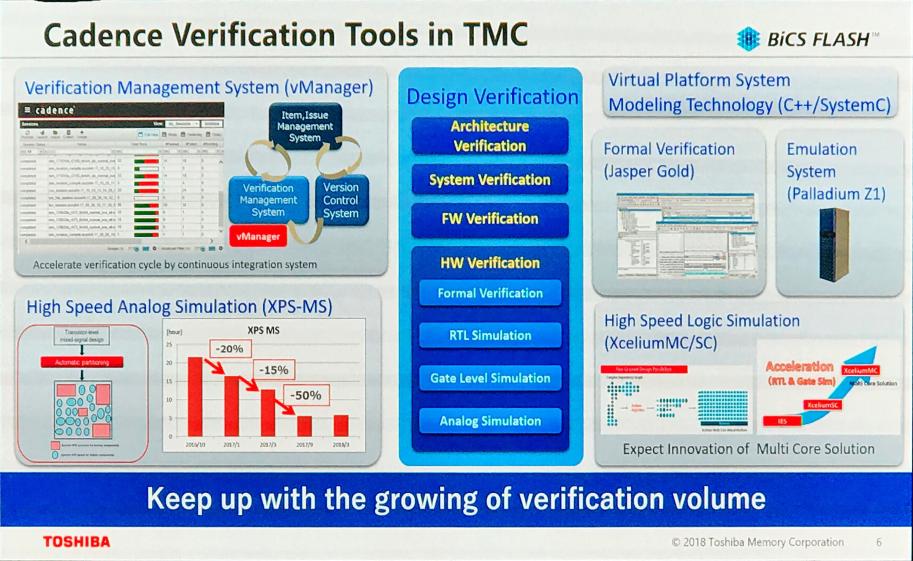

In opening statements, Shigeo Oshima addressed the verification challenges seen by Toshiba Memory Corporation (TMC), which mirror those seen by most of the design community as technology nodes shrink and design size, complexity, and cost rise. He outlined the Cadence verification tools TMC is deploying to address those challenges.

Paul Cunningham stated what Cadence can do to address the current verification challenges, stressing that resources and headcount cannot continue to be squared in order to get to results. “We have many different engines at our disposal from traditional simulation, formal verification and different hardware with traditional simulation running on Intel x86, or ARM servers or custom processors or FPGAs and emulators and we are always looking to find out which engine to use in which case to maximize overall verification throughput. […]. What can we do with ML, AI, how do we go off in this space efficiently? It does not feel efficient right now and we are burning millions of cycles in verification without making any difference in coverage or covering the same thing multiple times. There is a real opportunity to try to be more efficient to address this fundamentally intractable problem.”

David Lacey outlined HPE’s 3 major areas of focus around methodology:

- 1st: Make engineers more productive. Engineers are the biggest expense, so make them as efficient as they can be in methodology and tools.

- 2nd: Make the best use of licenses.

- 3rd: Understand how to achieve predictability with goals.

One needs data to drive answers, so HPE is looking at big data to make progress in those 3 areas, and is examining its tools and technologies and whether it is using everything it has. He stressed that it is not a matter of how many features are available but of finding a smart way to use them.

Jim Hogan mentioned that half of the companies he is investing in are semi or EDA. What interests him in verification is that products often arrive too early to market; he has suffered from that as an investor and as a tool provider. Now there is a sea change with Google and AWS. “The Cloud is allowing new things. There will be hardware changes and business model changes”. Of the 14 businesses Jim has invested in, only 3 are winners. The verification problem is intractable and getting bigger. He loves this, as it means a lot of products get bought. Safety and security will require understanding and deciding when verification is enough. He underlined that verification is a good market for him to invest in.

Mutschler asked if verification is a science or an art.

Cunningham stated: “It is a bit of both. It is an ecosystem problem: What is the type of chip you are doing? Are you verifying at block or component or C-level?” For instance, you can model a real-world software ecosystem running a software stack. This works if the design is stable and you are really debugging software with the design. If, however, you are flushing out core functionality of the design itself, you have a different set of requirements, and you are better off with emulation. The challenge is overall orchestration: how do you bring multi-engine coverage together? A lot of work is left to do; there is no strong tool class in this space and no decent solution yet. Cadence has invested heavily and still has a long way to go to solve that.

Lacey stated that there are certainly different types of engines. Make smart choices, use broad guidelines, and know where to use those engines. When it is tough to convince management, use what you have; there are areas in your flow where you can use those engines and get targeted value from them. Then take the value you get out of those engines to the next level. Remove the overlap between those engines. Adding more work will not add value. Use formal in some areas and simulation in others, and use the data. Cunningham elaborated that, in fact, you want less simulation if you can help it.

Hogan said that, in fact, you can have smarter test benches. Accellera has a new standard that was just announced. Provide stimulus and assertions sufficient to verify what you want to do. You can simulate forever. He told a story from his analog simulation days: when the sales guy asked him ‘when are you going to finish simulating?’, his answer was ‘when are you shipping the product?’. How do we know we have done enough simulation?

Cunningham: Use a formal tool to reduce your coverage requirement; if things are not reachable, there is no point in covering them. Examples are things around reset, low power, domain crossings, and isolation. Hogan chimed in that confining the test problem is what is needed.
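To illustrate the point about unreachable items, here is a minimal sketch of how excluding them changes a coverage figure. It is not taken from the panel or from any Cadence tool; the bin names and the `coverage_percent` helper are hypothetical, and the "unreachable" set is assumed to come from a formal analysis.

```python
from typing import Iterable


def coverage_percent(all_bins: Iterable[str],
                     hit_bins: Iterable[str],
                     unreachable: Iterable[str] = ()) -> float:
    """Percentage of reachable coverage bins that were hit.

    Bins listed in `unreachable` (assumed here to be proven unreachable
    by a formal tool) are removed from the coverage target entirely.
    """
    target = set(all_bins) - set(unreachable)
    if not target:
        return 100.0  # nothing left to cover
    return 100.0 * len(set(hit_bins) & target) / len(target)


# Hypothetical example: 10 bins, 6 hit, 2 proven unreachable.
bins = [f"b{i}" for i in range(10)]
hit = {"b0", "b1", "b2", "b3", "b4", "b5"}
proven_unreachable = {"b8", "b9"}

raw = coverage_percent(bins, hit)
adjusted = coverage_percent(bins, hit, proven_unreachable)
print(f"raw: {raw:.1f}%  adjusted: {adjusted:.1f}%")
```

In this made-up case, the same regression results go from 60% to 75% of the target once the two unreachable bins are excluded, which is the sense in which the coverage requirement shrinks.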

Mutschler: All that test verification generates tremendous data. How do you aggregate it, know what to look for, and put it to good use?

Lacey: What data do you pull in? Is it useful? Spend time to identify the useful stuff. Toshiba Memory uses a lot of data. Use data; identify the data to train on and what to keep (Hogan).

Hogan: I exited 2 AI companies. Once you have data, in one case you can have an annealing program no one has seen. Saw behavior humans would never see. Cooked up 2 new algorithms […]. AI is not so much a product as it is a feature. We will have training expertise.

Mutschler: What is the cloud plan?

Hogan: It does not cost anything to send data to the cloud; it costs to bring it back. Look at opportunities where you can apply a cost model.

Lacey: It will come down to cost and productivity. The play for the cloud is 5,000 tests running all night versus being done in 10 minutes. The cloud adds interesting aspects for engineering productivity when compute capacity is lacking. There is a tradeoff between investing in private cloud and public cloud.

Oshima: Design complexity is a factor. Even though the cloud provides performance and speed, in classical memory design a fully customized data path design is very different from the fully digital SoC flavor. They have to learn from logic designers, but classical memory design will continue. They still have to learn design methodology in the cloud ecosystem.

Mutschler: Metrics and measurement. What are the major challenges? The design process cannot be a black box.

Hogan: Understand transparency. PLM in the design space. This happens slowly. Analytics will inform us where to spend money and where the bottlenecks are in hardware, software, and people. He is investing in a couple of companies in this area. It is not just an EDA or semi problem; pharma and petrochemical have it too.

Lacey: If we ask a question, and no data exists, we will look for data for it. Track metrics over time and look where things go awry.

Hogan: GCP, AWS have a lot of capability, we do not need to invest in infrastructure, we have to come up with our specific metrics.

Cunningham: First metrics around coverage, coverage data relative to a functional spec to know what we have done. Coverage at a high level. Coverage at a bit level. How you have a scenario of coverage is very real now, significant. Needs to be done.

Lacey: Coverage events you are getting. Ask questions you have not thought of. Static data and put in front of emulators. Get dynamic constraints identified and get in front of the emulator.

Questions from audience: How to get faster signoff?

Cunningham: If you have 50K regressions, identify whether you could run 10% of them and get almost the same coverage.
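One common way to look for such a subset is a greedy ranking over per-test coverage: repeatedly pick the test that adds the most bins not yet covered. The sketch below is only an illustration of that idea, not the method Cunningham or any Cadence tool uses; the test names, bin names, and the `rank_tests` helper are hypothetical.

```python
from typing import Dict, List, Set, Tuple


def rank_tests(coverage: Dict[str, Set[str]]) -> List[Tuple[str, int]]:
    """Greedy ranking of tests by incremental coverage.

    Returns (test, newly covered bin count) pairs in pick order and
    stops once no remaining test adds a new bin. Greedy selection is a
    heuristic; it does not guarantee the smallest possible subset.
    """
    remaining = set().union(*coverage.values())  # bins still uncovered
    candidates = dict(coverage)
    ranked: List[Tuple[str, int]] = []
    while remaining and candidates:
        # Sort names first so ties are broken deterministically.
        best = max(sorted(candidates),
                   key=lambda t: len(candidates[t] & remaining))
        gain = len(candidates[best] & remaining)
        if gain == 0:
            break
        ranked.append((best, gain))
        remaining -= candidates.pop(best)
    return ranked


# Hypothetical per-test coverage data.
per_test = {
    "t_reset": {"a", "b"},
    "t_burst": {"a", "b", "c", "d"},
    "t_idle": {"a"},
    "t_err": {"d", "e"},
}
ranked = rank_tests(per_test)
print(ranked)
```

With this made-up data, two of the four tests already reach every bin that the full set reaches; the other two add nothing new and are candidates for running less often.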

Hogan: Think of the world in statistical terms. When looking at AI, push past anomalies and behavior hidden in standard distributions, because that is where the new things are. Take crossing timing domains: are the findings meaningful? We thought there were a whole bunch of problems across timing domains, and it turns out they were not; find and eliminate those. It is an exciting time to solve new problems.

Cunningham: Spectre and Meltdown are an example: running random instructions until they time out, at some point you come up with a magic secret instruction that is a bug. This is not so obviously amenable to verification, but AI/ML can fix that.

Hogan: Intervention of the design engineer is important to address. Requires inventing and directing these things.

Lacey: With constrained random stimulus, you need an engineer to orchestrate. AI is fine, but it needs data generated by stimulating the design that allows it to infer that this part of the design is controllable with a stimulus knob. There is a gap in between; the only knob I have to turn is the constraints.

Question: Verification needs guidance on how to use emulation and simulation, what have you done?

Cunningham: Have products write to an enterprise-class DB. A merged coverage view allows ranking and filtering. In the next iteration you optimize that. The amount of data is large with so many regressions, so go down the learning curve. Get enterprise-class DB experts.

How to decipher and add formal or not? Finding what is needed?

Lacey: Get coverage from different sources. Use a tool that allows merging coverage. Smarter verification is understanding what that coverage data represents. What does it mean when you look at the sources together? How do they compare and complement each other?
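As a rough sketch of what comparing coverage across sources can mean, the snippet below merges per-engine coverage and reports what each engine contributes that no other engine does. The engine names, bin names, and the `merge_coverage` helper are hypothetical; real coverage databases are far richer than plain sets.

```python
from typing import Dict, Set, Tuple


def merge_coverage(per_engine: Dict[str, Set[str]]
                   ) -> Tuple[Set[str], Dict[str, Set[str]]]:
    """Merge coverage from several engines.

    Returns the union of all covered bins, plus, for each engine, the
    bins that only that engine covered (its unique contribution).
    """
    merged = set().union(*per_engine.values())
    unique: Dict[str, Set[str]] = {}
    for engine, bins in per_engine.items():
        others = set().union(
            *(b for e, b in per_engine.items() if e != engine))
        unique[engine] = bins - others
    return merged, unique


# Hypothetical coverage bins hit by each engine.
sources = {
    "simulation": {"a", "b", "c"},
    "formal": {"c", "d"},
    "emulation": {"a", "e"},
}
merged, unique = merge_coverage(sources)
print(sorted(merged))
print({engine: sorted(bins) for engine, bins in unique.items()})
```

Bins that appear in the merged set but in no engine's unique set are covered by more than one engine, which is one place to look for the overlap the panelists suggest removing.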

Hogan: Results make sense sometimes. For EDA, there is an opportunity for more intelligent workbenches and get results from a formal tool that do not result in an error.

Question: How does one get to sign off, and is it faster when you do it in parallel?

Hogan: Have faith and fix with s/w.

Lacey: Verification plan comes into play. Minimize overlap and provide a holistic standpoint. Sometimes you need silicon.

Cunningham: Does not absolve from doing better emulation and simulation.

Question: Verification as art rather than science. Understanding AI and ML, how do they apply? What can I take back to my team? What are the big challenges, and when can we expect a feasible solution for verification productivity?

Lacey: Begin to explore what is important to us and what data we need, then develop tools to help us and use ML with them.

Hogan: Search algorithms and models exist. Invest. More than computer science engineers, we need engineers with data science skills. There is no replacing the engineer.

Cunningham: Neural networks. Fully analog synapses have more fidelity than a 32-bit integer. We are not anywhere close to replacing the design engineer.

Final statement: where do we focus next? 2 sentences.

- Intelligent workbench (Hogan)

- Investments in engineers, licenses, smarter in ways to guide and equip engineers (Lacey)

- Smarter in SoC and memory (Oshima)

- Smart coverage (Cunningham)
