For any given design objective, there is what we in the design automation biz preach that design teams should do, and then there’s what design teams actually do. For some domains, the gap between these two may be larger than others, but we more or less assume that methodologies which have been around for years and are considered to be “givens” among leaders in the field will be, at least conceptually, well-embedded in the thinking of other teams.

In low-power design, here are some of those givens:

- Designer intuition for performance and area of a function can be quite good, but intuition for power is horrible: +/- 50% if you’re lucky.

- ~80% of power optimization is locked down in architecture (leftmost designer above), ~20% or less in RTL (middle designer) and the best reductions you can hope for at implementation are in single digits (rightmost designer).

- From which we conclude that power is an objective that must be addressed all the way through the design flow – from architecture all the way to GDS, with architecture most important.

- Power is heavily dependent on use-case, more so perhaps in mobile, but still important in wired applications. You have to run power estimation on a lot of applications, which is going to run a lot faster at RTL than at gate-level.

- Peak-power is also important, especially for reliability. So averages alone are insufficient.
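To make the last point concrete, here is a minimal Python sketch of why averages alone can mislead. The traces, their units (mW per sample interval) and the function names are hypothetical, made up purely for illustration; a real flow would pull this data from a power estimation tool.

```python
from typing import List


def average_power(trace_mw: List[float]) -> float:
    """Mean power over the whole trace, in mW."""
    return sum(trace_mw) / len(trace_mw)


def peak_windowed_power(trace_mw: List[float], window: int) -> float:
    """Highest mean power over any `window` consecutive samples, in mW."""
    if not 0 < window <= len(trace_mw):
        raise ValueError("window must be between 1 and len(trace_mw)")
    running = sum(trace_mw[:window])
    best = running
    # Slide the window one sample at a time, updating the running sum.
    for i in range(window, len(trace_mw)):
        running += trace_mw[i] - trace_mw[i - window]
        best = max(best, running)
    return best / window


# Hypothetical per-interval power samples (mW) for two use cases.
USE_CASES = {
    "steady": [30.0] * 8,
    "bursty": [10.0, 10.0, 10.0, 90.0, 90.0, 10.0, 10.0, 10.0],
}

if __name__ == "__main__":
    for name, trace in USE_CASES.items():
        print(name, average_power(trace), peak_windowed_power(trace, window=2))
```

Both made-up use cases average 30 mW, but over a two-sample window the bursty one peaks at 90 mW, which is exactly the kind of difference an average hides.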

For anyone designing for mobile applications, all of this is old news. They have been using these principles (and more) for a long time. What came as a shock to me, in discussion with Dave Pursley (Sr. PPM at Cadence), was that some design teams, at well-known companies, seem unaware of or indifferent to even the first two concepts. I can’t speak to why, but I can speculate.

Perhaps the view is that “this isn’t a mobile application, so we just have to squeeze a bit and we can do that in RTL”. Or “between RTL and layout optimizations we’ll get close enough”. Or “we can’t estimate power accurately until we have an implementation so let’s get there first then see where we stand”. Or maybe it’s just “we’ve always done design this way, power is just another thing we have to tweak, we’ll run that near the end”. Whatever the reason, as you might guess, sometimes this doesn’t turn out so well. Dave cited examples (no names) of power estimates at layout that were 50% over budget.

Some of these were saved by Hail Marys, which sounds heroic, but it’s not a good way to run a business. A more likely outcome is a major redesign for the next product cycle or scrapping the product. For those of you who have found yourselves in this position and didn’t enjoy the experience, let’s review what you should have done.

First, just because your target won’t go into a mobile application doesn’t mean you can skip steps in low-power design. If you’re just doing a small tweak to an existing well-characterized design, to be used by the same customer in the same applications, then maybe. Otherwise you need to start from architecture just like everyone else. You don’t have to get anywhere near as fancy as the mobile design teams, but HPC/cloud applications, cost-sensitive applications without active cooling and high-reliability systems now also have tight and unforgiving power budgets.

How do you estimate power at the architecture level? For the system as a whole, simulation coupled with power estimation, if you don’t have any other choice. Emulation coupled with power estimation will be massively more effective in getting coverage across many realistic use cases and particularly in teasing out peak power problems.

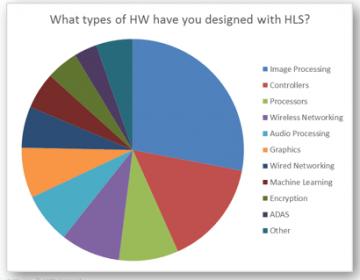

For IP power characterization, you’ll start with RTL or gate-level models. If you’re planning to build a new IP, you might consider starting with high-level design (e.g., SystemC). That can be synthesized directly to RTL, where you can run power estimation driven by the testbench you developed at that same high level (also faster to build than an RTL testbench). Developing at a high level allows for quick turn-around architecture exploration to optimize, say, power. You may be surprised to hear that a lot more functions are being developed this way today (see the results of a Cadence 2017 survey above). If this isn’t an option, you’ll have to stick with RTL models. Either way, you know power estimation will be as accurate as RTL power estimation.

Which these days is within ~15% of signoff power estimates. Might seem like a significant error bar, but it’s still a lot better than your intuition. And it’s actually better than 15% for relative estimates, so a pretty good guide for comparing architecture/micro-architecture options.
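As a rough illustration of how to read that error bar against a budget, here is a small Python sketch. It assumes, as a simplification, that "within ~15%" can be treated as a symmetric band around the RTL estimate; the function names, the three status labels and the numbers in the usage note are hypothetical.

```python
from typing import Tuple


def signoff_range(rtl_estimate_mw: float, error: float = 0.15) -> Tuple[float, float]:
    """Plausible signoff power range, assuming a symmetric error band (a simplification)."""
    return rtl_estimate_mw * (1.0 - error), rtl_estimate_mw * (1.0 + error)


def budget_status(rtl_estimate_mw: float, budget_mw: float, error: float = 0.15) -> str:
    """Classify an RTL estimate against a budget, given the assumed error band."""
    low, high = signoff_range(rtl_estimate_mw, error)
    if high <= budget_mw:
        return "within budget"   # even the pessimistic end fits
    if low > budget_mw:
        return "over budget"     # even the optimistic end misses
    return "uncertain"           # the budget falls inside the error band


if __name__ == "__main__":
    # Hypothetical numbers: a 100 mW RTL estimate against three budgets.
    for budget in (120.0, 100.0, 80.0):
        print(budget, budget_status(100.0, budget))
```

With these made-up numbers, a 100 mW RTL estimate is comfortably inside a 120 mW budget, clearly outside an 80 mW one, and too close to call against 100 mW.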

Next, unless they have a Hail Mary play in mind, don’t believe the RTL team if they tell you they can cut 50% power. (I’m not talking about power and voltage switching here.) More common might be 15-30% starting from an un-optimized design, more like ~5% if already optimized. If they can save more than that, that doesn’t speak well to the quality of their design. Clock gating will save some: not much if you only do register-level gating, more if you gate at the IP level (also gating the clock tree). Memory gating can save quite a bit too. Hail Marys could include power gating, but hang on to your hat if you start thinking of that late in the design. Verification is going to get a whole lot more complicated, and you will also be adding on-board power management, power grid design, floorplanning and more complex power integrity signoff.

Most of all, squeeze everything you can out of power before you hand it over to implementation and make sure you are within ~5-10% of budget before you hand off. Implementation can fine-tune but they absolutely cannot bail you out if you’re way off on power. What they need to focus on is those last few percent, power and signal integrity, thermal (again, not just average but also peak – local thermal spikes affect EM and timing and can easily turn into thermal runaway). And, of course, they worry about timing closure and area.
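The arithmetic behind this is worth spelling out. Below is a minimal Python sketch that takes the 50%-over-budget case cited earlier and applies the rough RTL savings ranges above. The function names and the 100 mW budget are hypothetical, and the savings fractions are the article's ballpark figures rather than tool output.

```python
def required_reduction(current_mw: float, budget_mw: float) -> float:
    """Fraction of current power that must be removed to meet the budget."""
    if current_mw <= budget_mw:
        return 0.0
    return 1.0 - budget_mw / current_mw


def overshoot_after_savings(current_mw: float, budget_mw: float, savings: float) -> float:
    """Fraction over budget after removing `savings` (a fraction) of current power."""
    remaining_mw = current_mw * (1.0 - savings)
    return remaining_mw / budget_mw - 1.0


if __name__ == "__main__":
    budget_mw = 100.0    # hypothetical budget
    current_mw = 150.0   # 50% over budget, as in the cited examples
    print(f"needed: {required_reduction(current_mw, budget_mw):.1%}")
    # Ballpark RTL savings from the text: ~5% (already optimized) to 15-30%.
    for savings in (0.05, 0.15, 0.30):
        over = overshoot_after_savings(current_mw, budget_mw, savings)
        print(f"savings {savings:.0%}: {over:.1%} over budget")
```

A design that is 50% over budget needs about a one-third reduction, so even the optimistic 30% RTL saving still leaves it roughly 5% over. That is only barely inside the handoff margin, and only in the best case.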

So now you know power optimization isn’t a back-end feature or tool. It’s a whole string of tools and a responsibility all the way from architecture to implementation, with the bulk of that responsibility starting at architecture. The right way to handle this is through an integrated flow like that offered by Cadence. Why is integration important? Because a lot of getting this right depends on consistency in handling constraints and estimation through the flow, which you’ll sacrifice if you mix and match tools. And that will be even more frustrating.
